Xiaoshan Yang

Replacing Parameters with Preferences: Federated Alignment of Heterogeneous Vision-Language Models

Jan 31, 2026

Abstract:VLMs have broad potential in privacy-sensitive domains such as healthcare and finance, yet strict data-sharing constraints render centralized training infeasible. FL mitigates this issue by enabling decentralized training, but practical deployments face challenges due to client heterogeneity in computational resources, application requirements, and model architectures. We argue that while replacing data with model parameters characterizes the present of FL, replacing parameters with preferences represents a more scalable and privacy-preserving future. Motivated by this perspective, we propose MoR, a federated alignment framework based on GRPO with Mixture-of-Rewards for heterogeneous VLMs. MoR initializes a visual foundation model as a KL-regularized reference, while each client locally trains a reward model from local preference annotations, capturing specific evaluation signals without exposing raw data. To reconcile heterogeneous rewards, we introduce a routing-based fusion mechanism that adaptively aggregates client reward signals. Finally, the server performs GRPO with this mixed reward to optimize the base VLM. Experiments on three public VQA benchmarks demonstrate that MoR consistently outperforms federated alignment baselines in generalization, robustness, and cross-client adaptability. Our approach provides a scalable solution for privacy-preserving alignment of heterogeneous VLMs under federated settings.
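
As a rough illustration of the idea summarized above (not the authors' implementation), the sketch below mixes per-client reward scores with softmax routing weights and then computes group-relative advantages in the usual GRPO style, i.e. each reward standardized against the other responses sampled for the same prompt. The function names, the per-client `router_logits`, and the use of the population standard deviation are all assumptions made for this example; the abstract does not specify how the router is parameterized.

```python
import math
from statistics import mean, pstdev


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def mix_rewards(client_rewards, router_logits):
    """Fuse client rewards into one reward per response.

    client_rewards[k][i] is the score client k's reward model gives to
    response i. router_logits[k] is a hypothetical routing score for
    client k (an assumption of this sketch).
    """
    weights = softmax(router_logits)
    n_responses = len(client_rewards[0])
    return [
        sum(w * rewards[i] for w, rewards in zip(weights, client_rewards))
        for i in range(n_responses)
    ]


def group_relative_advantages(rewards, eps=1e-8):
    """Standardize rewards within one group of sampled responses."""
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# Two clients score three sampled responses to the same prompt.
mixed = mix_rewards([[1.0, 0.0, 0.5], [0.0, 1.0, 0.5]], [0.0, 0.0])
advantages = group_relative_advantages([1.0, 2.0, 3.0])
```

With equal routing logits the mixed reward is simply the average of the client scores; unequal logits shift weight toward the clients the router favors.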

Look Before You Leap: A GUI-Critic-R1 Model for Pre-Operative Error Diagnosis in GUI Automation

Jun 05, 2025

Abstract:In recent years, Multimodal Large Language Models (MLLMs) have been extensively utilized for multimodal reasoning tasks, including Graphical User Interface (GUI) automation. Unlike general offline multimodal tasks, GUI automation is executed in online interactive environments, necessitating step-by-step decision-making based on the real-time status of the environment. This task has a lower tolerance for decision-making errors at each step, as any mistakes may cumulatively disrupt the process and potentially lead to irreversible outcomes like deletions or payments. To address these issues, we introduce a pre-operative critic mechanism that provides effective feedback prior to the actual execution, by reasoning about the potential outcome and correctness of actions. Specifically, we propose a Suggestion-aware Gradient Relative Policy Optimization (S-GRPO) strategy to construct our pre-operative critic model GUI-Critic-R1, incorporating a novel suggestion reward to enhance the reliability of the model's feedback. Furthermore, we develop a reasoning-bootstrapping based data collection pipeline to create a GUI-Critic-Train and a GUI-Critic-Test, filling existing gaps in GUI critic data. Static experiments on the GUI-Critic-Test across both mobile and web domains reveal that our GUI-Critic-R1 offers significant advantages in critic accuracy compared to current MLLMs. Dynamic evaluation on a GUI automation benchmark further highlights the effectiveness and superiority of our model, as evidenced by improved success rates and operational efficiency.

Harmony: A Unified Framework for Modality Incremental Learning

Apr 17, 2025

Abstract:Incremental learning aims to enable models to continuously acquire knowledge from evolving data streams while preserving previously learned capabilities. While current research predominantly focuses on unimodal incremental learning and multimodal incremental learning where the modalities are consistent, real-world scenarios often present data from entirely new modalities, posing additional challenges. This paper investigates the feasibility of developing a unified model capable of incremental learning across continuously evolving modal sequences. To this end, we introduce a novel paradigm called Modality Incremental Learning (MIL), where each learning stage involves data from distinct modalities. To address this task, we propose a novel framework named Harmony, designed to achieve modal alignment and knowledge retention, enabling the model to reduce the modal discrepancy and learn from a sequence of distinct modalities, ultimately completing tasks across multiple modalities within a unified framework. Our approach introduces adaptive compatible feature modulation and cumulative modal bridging. By constructing historical modal features and performing modal knowledge accumulation and alignment, the proposed components collaboratively bridge modal differences and maintain knowledge retention, even when only unimodal data is available at each learning stage. Extensive experiments on the MIL task demonstrate that our proposed method significantly outperforms existing incremental learning methods, validating its effectiveness in MIL scenarios.

Towards Visual Grounding: A Survey

Dec 28, 2024

Abstract:Visual Grounding is also known as Referring Expression Comprehension and Phrase Grounding. It involves localizing a natural number of specific regions within an image based on a given textual description. The objective of this task is to emulate the prevalent referential relationships in social conversations, equipping machines with human-like multimodal comprehension capabilities. Consequently, it has extensive applications in various domains. Since 2021, visual grounding has witnessed significant advancements, with emerging new concepts such as grounded pre-training, grounding multimodal LLMs, generalized visual grounding, and giga-pixel grounding, which have brought numerous new challenges. In this survey, we first examine the developmental history of visual grounding and provide an overview of essential background knowledge. We systematically track and summarize the advancements and meticulously organize the various settings in visual grounding, thereby establishing precise definitions of these settings to standardize future research and ensure a fair comparison. Additionally, we delve into several advanced topics and highlight numerous applications of visual grounding. Finally, we outline the challenges confronting visual grounding and propose valuable directions for future research, which may serve as inspiration for subsequent researchers. By extracting common technical details, this survey encompasses the representative works in each subtopic over the past decade. To the best of our knowledge, this paper presents the most comprehensive overview currently available in the field of grounding. This survey is designed to be suitable for both beginners and experienced researchers, serving as an invaluable resource for understanding key concepts and tracking the latest research developments. We keep tracking related work at https://github.com/linhuixiao/Awesome-Visual-Grounding.

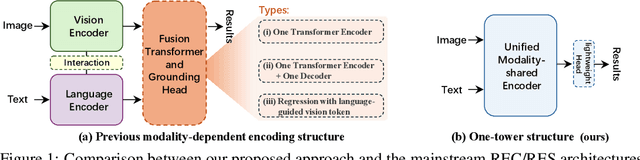

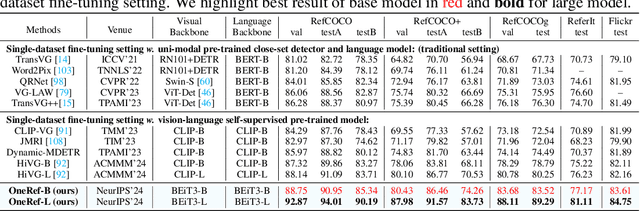

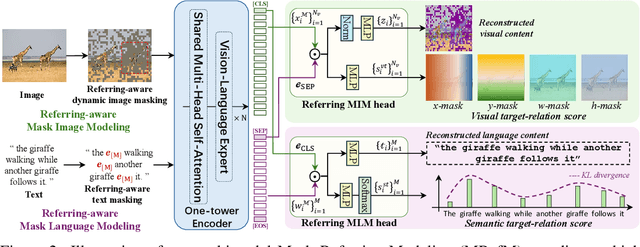

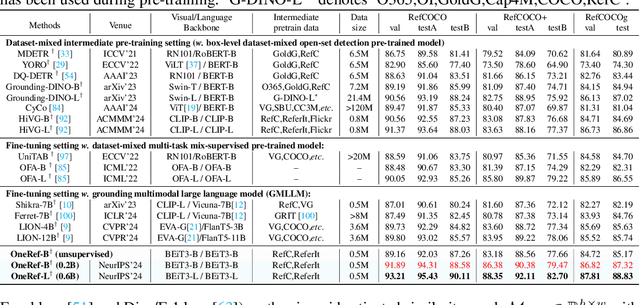

OneRef: Unified One-tower Expression Grounding and Segmentation with Mask Referring Modeling

Oct 10, 2024

Abstract:Constrained by the separate encoding of vision and language, existing grounding and referring segmentation works heavily rely on bulky Transformer-based fusion en-/decoders and a variety of early-stage interaction technologies. Simultaneously, the current mask visual language modeling (MVLM) fails to capture the nuanced referential relationship between image and text in referring tasks. In this paper, we propose OneRef, a minimalist referring framework built on the modality-shared one-tower transformer that unifies the visual and linguistic feature spaces. To model the referential relationship, we introduce a novel MVLM paradigm called Mask Referring Modeling (MRefM), which encompasses both referring-aware mask image modeling and referring-aware mask language modeling. Both modules reconstruct not only modality-related content but also cross-modal referring content. Within MRefM, we propose a referring-aware dynamic image masking strategy that is aware of the referred region rather than relying on fixed ratios or generic random masking schemes. By leveraging the unified visual language feature space and incorporating MRefM's ability to model the referential relations, our approach enables direct regression of the referring results without resorting to various complex techniques. Our method consistently surpasses existing approaches and achieves SoTA performance on both grounding and segmentation tasks, providing valuable insights for future research. Our code and models are available at https://github.com/linhuixiao/OneRef.

A Comprehensive Review of Few-shot Action Recognition

Jul 20, 2024

Abstract:Few-shot action recognition aims to address the high cost and impracticality of manually labeling complex and variable video data in action recognition. It requires accurately classifying human actions in videos using only a few labeled examples per class. Compared to few-shot learning in image scenarios, few-shot action recognition is more challenging due to the intrinsic complexity of video data. Recognizing actions involves modeling intricate temporal sequences and extracting rich semantic information, which goes beyond mere human and object identification in each frame. Furthermore, the issue of intra-class variance becomes particularly pronounced with limited video samples, complicating the learning of representative features for novel action categories. To overcome these challenges, numerous approaches have driven significant advancements in few-shot action recognition, which underscores the need for a comprehensive survey. Unlike early surveys that focus on few-shot image or text classification, we deeply consider the unique challenges of few-shot action recognition. In this survey, we review a wide variety of recent methods and summarize the general framework. Additionally, the survey presents the commonly used benchmarks and discusses relevant advanced topics and promising future directions. We hope this survey can serve as a valuable resource for researchers, offering essential guidance to newcomers and stimulating seasoned researchers with fresh insights.

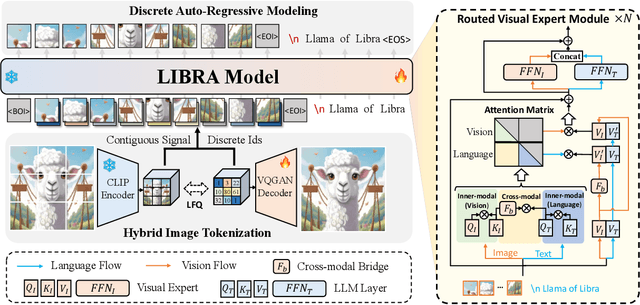

Libra: Building Decoupled Vision System on Large Language Models

May 16, 2024

Abstract:In this work, we introduce Libra, a prototype model with a decoupled vision system on a large language model (LLM). The decoupled vision system decouples inner-modal modeling and cross-modal interaction, yielding unique visual information modeling and effective cross-modal comprehension. Libra is trained through discrete auto-regressive modeling on both vision and language inputs. Specifically, we incorporate a routed visual expert with a cross-modal bridge module into a pretrained LLM to route the vision and language flows during attention computing to enable different attention patterns in inner-modal modeling and cross-modal interaction scenarios. Experimental results demonstrate that the dedicated design of Libra achieves a strong MLLM baseline that rivals existing works in the image-to-text scenario with merely 50 million training data, providing a new perspective for future multimodal foundation models. Code is available at https://github.com/YifanXu74/Libra.

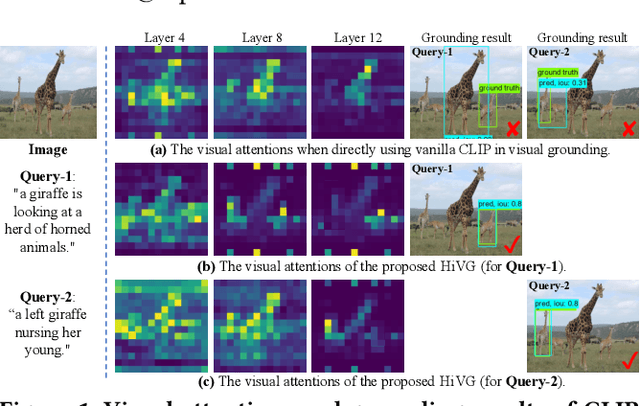

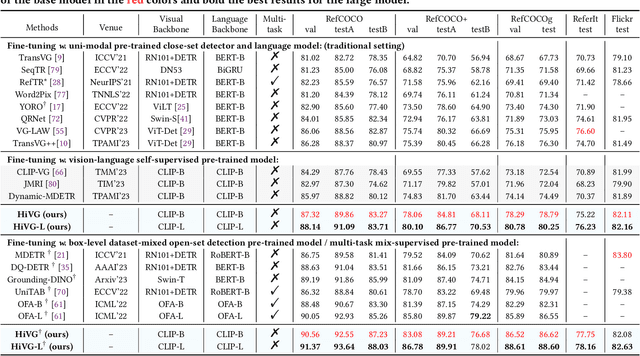

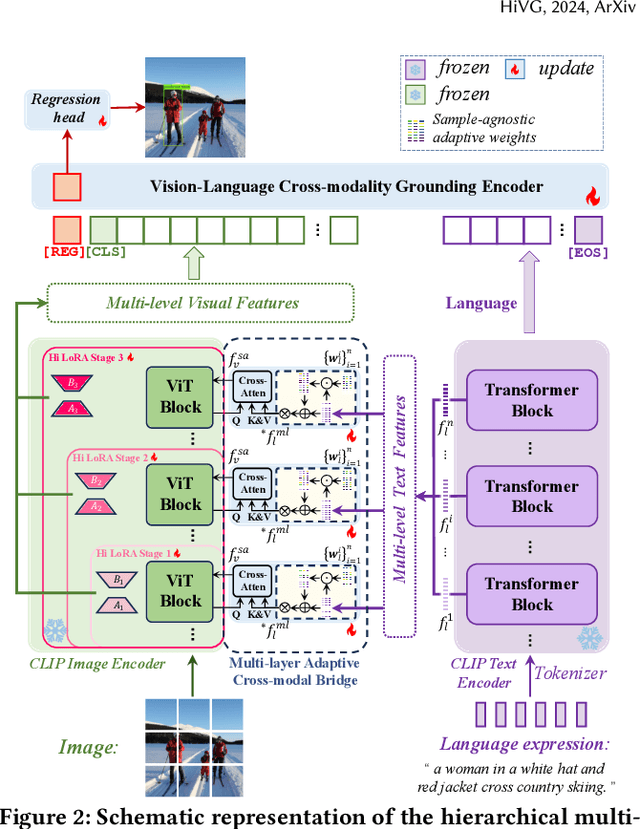

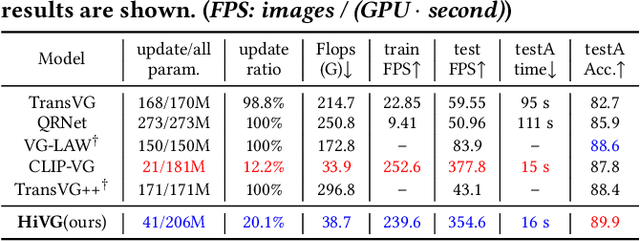

HiVG: Hierarchical Multimodal Fine-grained Modulation for Visual Grounding

Apr 20, 2024

Abstract:Visual grounding, which aims to ground a visual region via natural language, is a task that heavily relies on cross-modal alignment. Existing works utilized uni-modal pre-trained models to transfer visual/linguistic knowledge separately while ignoring the multimodal corresponding information. Motivated by recent advancements in contrastive language-image pre-training and low-rank adaptation (LoRA) methods, we aim to solve the grounding task based on multimodal pre-training. However, there exist significant task gaps between pre-training and grounding. Therefore, to address these gaps, we propose a concise and efficient hierarchical multimodal fine-grained modulation framework, namely HiVG. Specifically, HiVG consists of a multi-layer adaptive cross-modal bridge and a hierarchical multimodal low-rank adaptation (HiLoRA) paradigm. The cross-modal bridge can address the inconsistency between visual features and those required for grounding, and establish a connection between multi-level visual and text features. HiLoRA prevents the accumulation of perceptual errors by adapting the cross-modal features from shallow to deep layers in a hierarchical manner. Experimental results on five datasets demonstrate the effectiveness of our approach and showcase the significant grounding capabilities as well as promising energy efficiency advantages. The project page: https://github.com/linhuixiao/HiVG.
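
For readers unfamiliar with the low-rank adaptation (LoRA) that this abstract builds on, the following is a minimal sketch of a plain LoRA forward pass: a frozen weight matrix `W` plus a scaled low-rank update `B @ A`. It does not reproduce the hierarchical, shallow-to-deep scheme the abstract describes; the helper names and the list-of-lists matrix layout are assumptions chosen to keep the example dependency-free.

```python
def matvec(M, v):
    """Multiply matrix M (list of rows) by vector v."""
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, v)) for row in M]


def lora_forward(x, W, A, B, alpha):
    """Compute W x + (alpha / r) * B (A x).

    W: frozen weight, shape (d_out, d_in)
    A: trainable down-projection, shape (r, d_in)
    B: trainable up-projection, shape (d_out, r)
    """
    r = len(A)  # rank of the update = number of rows of A
    base = matvec(W, x)
    delta = matvec(B, matvec(A, x))
    scale = alpha / r
    return [b + scale * d for b, d in zip(base, delta)]


W = [[1.0, 0.0], [0.0, 1.0]]
A = [[1.0, 1.0]]              # rank-1 adapter
x = [2.0, 3.0]

# With B initialized to zero, the adapter leaves the frozen output unchanged.
y_init = lora_forward(x, W, A, [[0.0], [0.0]], alpha=2.0)

# After training, a non-zero B adds a low-rank correction.
y_tuned = lora_forward(x, W, A, [[1.0], [0.0]], alpha=2.0)
```

Only `A` and `B` would be trained in this setup, which is why the number of tunable parameters scales with the rank `r` rather than with the full size of `W`.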

Exploring Multi-Modal Contextual Knowledge for Open-Vocabulary Object Detection

Aug 30, 2023

Abstract:In this paper, we explore, for the first time, helpful multi-modal contextual knowledge to understand novel categories for open-vocabulary object detection (OVD). The multi-modal contextual knowledge stands for the joint relationship across regions and words. However, it is challenging to incorporate such multi-modal contextual knowledge into OVD. The reason is that previous detection frameworks fail to jointly model multi-modal contextual knowledge, as object detectors only support vision inputs and no caption description is provided at test time. To this end, we propose a multi-modal contextual knowledge distillation framework, MMC-Det, to transfer the learned contextual knowledge from a teacher fusion transformer with diverse multi-modal masked language modeling (D-MLM) to a student detector. The diverse multi-modal masked language modeling is realized by an object divergence constraint upon traditional multi-modal masked language modeling (MLM), in order to extract fine-grained region-level visual contexts, which are vital to object detection. Extensive experiments performed upon various detection datasets show the effectiveness of our multi-modal context learning strategy, where our approach clearly outperforms the recent state-of-the-art methods.

Multi-modal Queried Object Detection in the Wild

May 30, 2023

Abstract:We introduce MQ-Det, an efficient architecture and pre-training strategy design to utilize both textual description with open-set generalization and visual exemplars with rich description granularity as category queries, namely, Multi-modal Queried object Detection, for real-world detection with both open-vocabulary categories and various granularity. MQ-Det incorporates vision queries into existing well-established language-queried-only detectors. A plug-and-play gated class-scalable perceiver module upon the frozen detector is proposed to augment category text with class-wise visual information. To address the learning inertia problem brought by the frozen detector, a vision conditioned masked language prediction strategy is proposed. MQ-Det's simple yet effective architecture and training strategy design is compatible with most language-queried object detectors, thus yielding versatile applications. Experimental results demonstrate that multi-modal queries largely boost open-world detection. For instance, MQ-Det significantly improves the state-of-the-art open-set detector GLIP by +7.8% zero-shot AP on the LVIS benchmark and by +6.3% AP on average across 13 few-shot downstream tasks, with merely 3% of the pre-training time required by GLIP. Code is available at https://github.com/YifanXu74/MQ-Det.
