Xiabi Liu

Deep Distance Map Regression Network with Shape-aware Loss for Imbalanced Medical Image Segmentation

Jan 15, 2025

Abstract:Small object segmentation, such as tumor segmentation, is a difficult and critical task in medical image analysis. Although deep learning based methods have achieved promising performance, they are restricted to using binary segmentation masks as ground truth. Inspired by the rigorous mapping between a binary segmentation mask and its distance map, we adopt the distance map as a novel ground truth and employ a network to compute it. Specifically, we propose a new segmentation framework that combines an existing binary segmentation network with a lightweight regression network (dubbed LR-Net). The LR-Net converts distance map computation into a regression task and leverages the rich information in distance maps. Additionally, we derive a shape-aware loss that uses distance maps as penalty maps to infer the complete shape of an object. We evaluated our approach on the MICCAI 2017 Liver Tumor Segmentation (LiTS) Challenge dataset and a clinical dataset. Experimental results show that our approach outperforms classification-based methods as well as other existing state-of-the-art methods.

* Conference
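A minimal sketch of the two ingredients named in the abstract above: a distance map derived from a binary mask, and a loss that uses it as a penalty map. The abstract gives neither the exact distance definition nor the loss formula, so both are assumptions here. The hypothetical helper `distance_map` computes a brute-force Euclidean distance to the nearest ground-truth foreground pixel, and the hypothetical `shape_aware_bce` weights per-pixel binary cross-entropy by `1 + normalized distance`, so that false positives far from the object cost more. This illustrates the idea only; it is not LR-Net or the paper's loss.

```python
import numpy as np

def distance_map(mask: np.ndarray) -> np.ndarray:
    """Euclidean distance from every pixel to the nearest foreground pixel (0 on the object)."""
    fg = np.argwhere(mask > 0)                         # (K, 2) foreground coordinates
    if fg.size == 0:
        raise ValueError("mask has no foreground pixels")
    grid = np.indices(mask.shape).reshape(2, -1).T     # (H*W, 2) all pixel coordinates
    diff = grid[:, None, :] - fg[None, :, :]           # brute force: every pixel vs. every fg pixel
    return np.sqrt((diff ** 2).sum(-1)).min(axis=1).reshape(mask.shape)

def shape_aware_bce(pred: np.ndarray, mask: np.ndarray, eps: float = 1e-7) -> float:
    """Hypothetical shape-aware loss: BCE weighted by a distance-based penalty map."""
    d = distance_map(mask)
    penalty = 1.0 + d / max(d.max(), eps)              # 1 on the object, up to 2 at the farthest pixel
    p = np.clip(pred, eps, 1.0 - eps)                  # avoid log(0)
    bce = -(mask * np.log(p) + (1 - mask) * np.log(1 - p))
    return float((penalty * bce).mean())
```

The brute-force distance computation needs memory proportional to the number of pixels times the number of foreground pixels; for real images a proper Euclidean distance transform, such as `scipy.ndimage.distance_transform_edt`, would be the practical choice.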

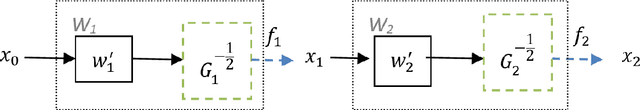

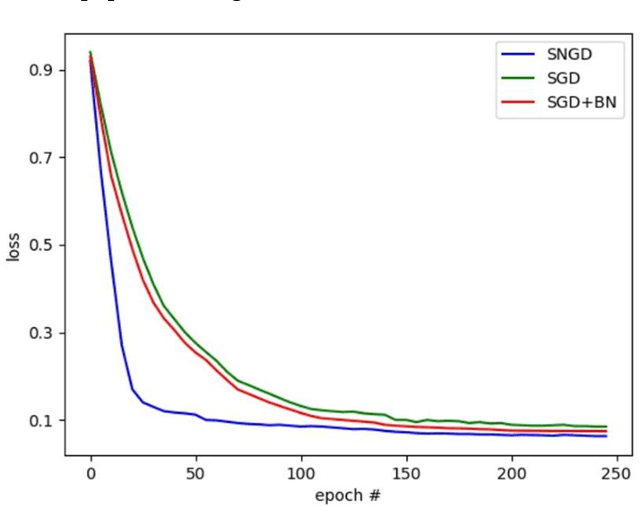

Reconstructing Deep Neural Networks: Unleashing the Optimization Potential of Natural Gradient Descent

Dec 10, 2024

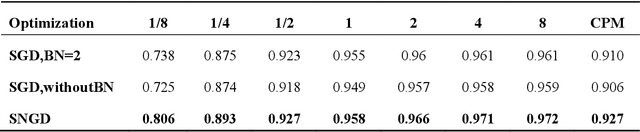

Abstract:Natural gradient descent (NGD) is a powerful optimization technique for machine learning, but the computational cost of inverting the Fisher information matrix limits its use in training deep neural networks. To overcome this challenge, we propose a novel optimization method for training deep neural networks called structured natural gradient descent (SNGD). Theoretically, we demonstrate that optimizing the original network with NGD is equivalent to optimizing, with fast gradient descent (GD), a reconstructed network obtained by a structural transformation of the parameter matrix. We thereby decompose the computation of the global Fisher information matrix into the efficient computation of local Fisher matrices by constructing local Fisher layers in the reconstructed network, which speeds up training. Experimental results on various deep networks and datasets demonstrate that SNGD converges faster than NGD while reaching comparable solutions. Furthermore, our method outperforms traditional GD methods in both efficiency and effectiveness. Thus, our proposed method has the potential to significantly improve the scalability and efficiency of NGD in deep learning applications. Our source code is available at https://github.com/Chaochao-Lin/SNGD.
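The equivalence between NGD on the original parameters and GD on reconstructed parameters can be illustrated in its simplest form. Assuming a fixed, explicitly known Fisher matrix `F` (an assumption; SNGD constructs local Fisher layers rather than forming the global `F`), reparameterizing θ = Sφ with S = F^(-1/2) makes a plain GD step on φ map back to exactly the NGD step θ − η F^(-1) g. The helper names below are hypothetical, and this is a toy check of the principle, not the SNGD implementation.

```python
import numpy as np

def ngd_step(theta, grad, fisher, lr):
    """Natural gradient step: theta - lr * F^{-1} grad."""
    return theta - lr * np.linalg.solve(fisher, grad)

def reparam_gd_step(theta, grad, fisher, lr):
    """Plain GD on phi, where theta = S @ phi and S = F^{-1/2} (hypothetical toy reparameterization)."""
    evals, evecs = np.linalg.eigh(fisher)              # F is symmetric positive definite
    S = evecs @ np.diag(evals ** -0.5) @ evecs.T        # symmetric inverse square root of F
    phi = np.linalg.solve(S, theta)                     # coordinates in the reconstructed space
    grad_phi = S.T @ grad                               # chain rule: dL/dphi = S^T dL/dtheta
    phi_new = phi - lr * grad_phi                       # ordinary gradient descent step
    return S @ phi_new                                  # = theta - lr * S S^T grad = NGD step
```

Because S is symmetric, S Sᵀ = F^(-1), which is why the two updates coincide; the cost of NGD is moved into the structure of the reparameterization.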

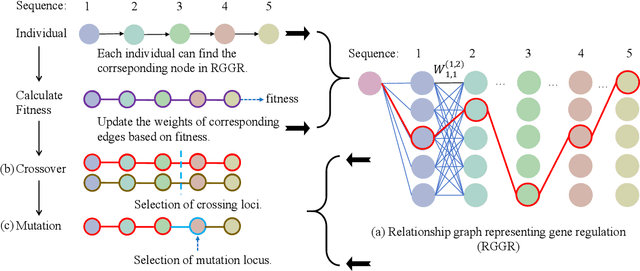

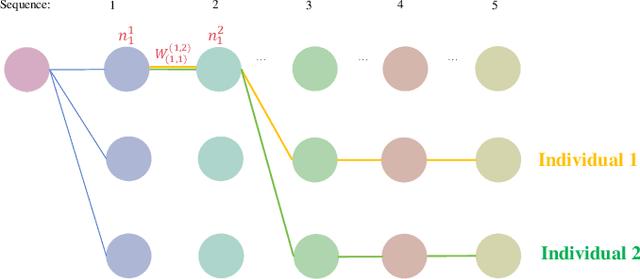

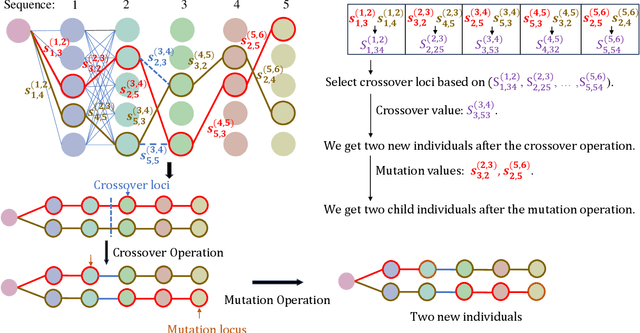

GARA: A novel approach to Improve Genetic Algorithms' Accuracy and Efficiency by Utilizing Relationships among Genes

Apr 28, 2024

Abstract:Genetic algorithms (GAs) have played an important role in engineering optimization. Traditional GAs treat each gene separately. However, biophysical studies of gene regulatory networks have revealed direct associations between different genes. This inspires us to propose an improvement to GA in this paper, the Gene Regulatory Genetic Algorithm (GRGA), which, to the best of our knowledge, is the first to utilize relationships among genes to improve a GA's accuracy and efficiency. We design a directed multipartite graph encapsulating the solution space, called RGGR, where each node corresponds to a gene in the solution and each edge represents the relationship between adjacent nodes. An edge's weight reflects the degree of that relationship and is updated on the principle that the weights of the edges in a complete chain (a candidate solution) should be strengthened if the solution performs acceptably and reduced if it does not. The resulting RGGR is then employed to determine appropriate loci for the crossover and mutation operators, thereby directing the evolutionary process toward faster and better convergence. We analyze and validate the proposed GRGA approach on a single-objective multimodal optimization problem and further test it on three types of applications: feature selection, text summarization, and dimensionality reduction. Results illustrate that our GARA is effective and promising.
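A hypothetical sketch of the RGGR bookkeeping described above. The abstract specifies neither the exact weight update nor how loci are chosen, so the following are assumptions: edges are keyed by `(locus, value, next_value)`, weights are multiplied by `1 + rate` for an acceptable chain and by `1 - rate` otherwise, and the crossover point is placed right after the weakest link of a parent so that strongly related adjacent genes stay together. The class and function names are illustrative only.

```python
from collections import defaultdict

class RGGR:
    """Hypothetical relationship graph: edge (locus, value, next_value) -> weight."""

    def __init__(self, init_weight: float = 1.0, rate: float = 0.1):
        self.w = defaultdict(lambda: init_weight)  # unseen edges start at init_weight
        self.rate = rate

    @staticmethod
    def edges(chain):
        # a candidate solution is a chain through one node per locus
        return [(i, chain[i], chain[i + 1]) for i in range(len(chain) - 1)]

    def update(self, chain, acceptable: bool):
        # strengthen every edge of an acceptable chain, weaken every edge of an unacceptable one
        factor = 1 + self.rate if acceptable else 1 - self.rate
        for e in self.edges(chain):
            self.w[e] *= factor

    def crossover_locus(self, chain) -> int:
        # cut right after the weakest link so strongly related adjacent genes stay together
        weights = [self.w[e] for e in self.edges(chain)]
        return weights.index(min(weights)) + 1

def one_point_crossover(p1, p2, locus):
    # child takes genes before the locus from p1 and the rest from p2
    return p1[:locus] + p2[locus:]
```

A mutation operator could use the same weights in the opposite way, for example preferring to mutate genes attached to weak edges; that choice is likewise an assumption.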

L2T-DLN: Learning to Teach with Dynamic Loss Network

Oct 30, 2023

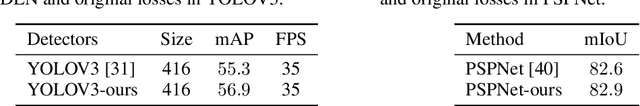

Abstract:With the concept of teaching introduced to the machine learning community, teacher models have begun using dynamic loss functions to teach the training of a student model. The dynamic loss is intended to adapt the loss function to the different phases of student model learning. In existing works, the teacher model 1) determines the loss function based only on the present states of the student model, i.e., disregards the experience of the teacher; and 2) utilizes only the states of the student model, e.g., training iteration number and loss/accuracy on the training/validation sets, while ignoring the states of the loss function. In this paper, we first formulate loss adjustment as a temporal task by designing a teacher model with memory units, which enables student learning to be guided by the experience of the teacher model. Then, with a dynamic loss network, we additionally use the states of the loss to assist teacher learning, enhancing the interactions between the teacher and the student model. Extensive experiments demonstrate that our approach can enhance student learning and improve the performance of various deep models on real-world tasks, including classification, object detection, and semantic segmentation.

LCCo: Lending CLIP to Co-Segmentation

Aug 22, 2023

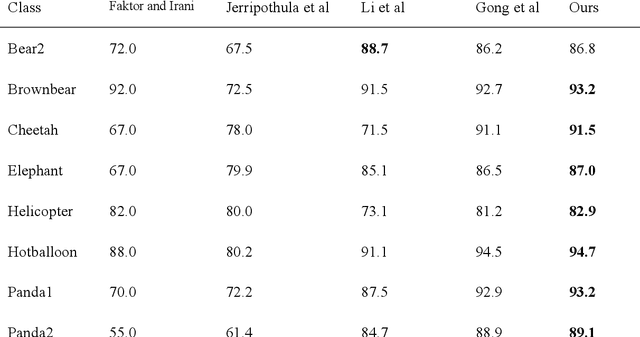

Abstract:This paper studies co-segmenting the common semantic object in a set of images. Existing works either rely on carefully engineered networks to mine the implicit semantic information in visual features or require extra data (i.e., classification labels) for training. In this paper, we leverage the contrastive language-image pre-training framework (CLIP) for the task. With a backbone segmentation network that independently processes each image from the set, we introduce semantics from CLIP into the backbone features, refining them in a coarse-to-fine manner with three key modules: i) an image set feature correspondence module, encoding globally consistent semantic information of the image set; ii) a CLIP interaction module, using CLIP-mined common semantics of the image set to refine the backbone feature; iii) a CLIP regularization module, drawing CLIP towards this co-segmentation task, identifying the best CLIP semantic and using it to regularize the backbone feature. Experiments on four standard co-segmentation benchmark datasets show that our method outperforms state-of-the-art methods.

RL-CoSeg: A Novel Image Co-Segmentation Algorithm with Deep Reinforcement Learning

Apr 12, 2022

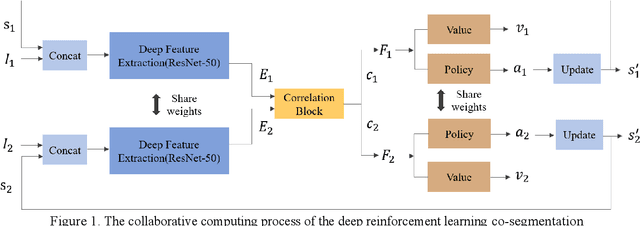

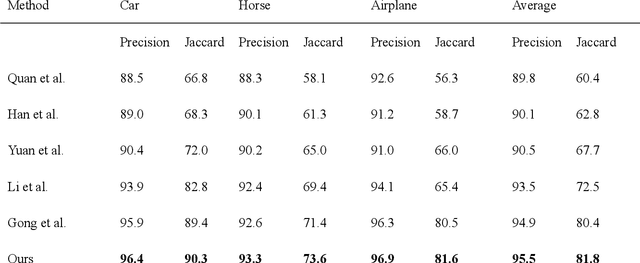

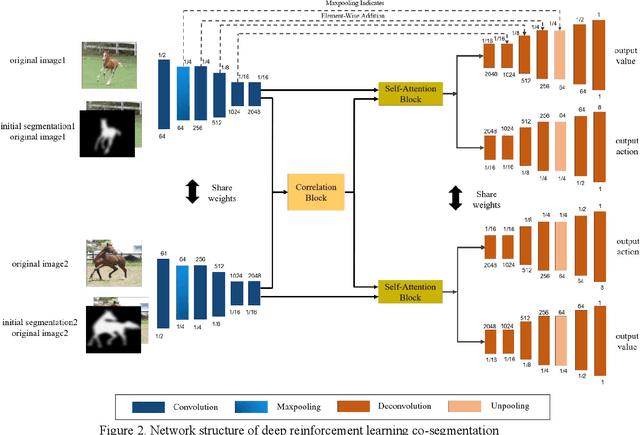

Abstract:This paper proposes an automatic image co-segmentation algorithm based on deep reinforcement learning (RL). Existing co-segmentation methods mainly rely on deep learning, and the foreground edges they obtain are often rough. To obtain more precise foreground edges, we use deep RL to achieve finer segmentation. To the best of our knowledge, this is the first work to apply RL methods to co-segmentation. We define the problem as a Markov Decision Process (MDP) and optimize it by RL with the asynchronous advantage actor-critic (A3C). The RL image co-segmentation network uses the correlation between images to segment common and salient objects from a set of related images. To achieve automatic segmentation, our RL-CoSeg method eliminates the need for user hints. For the image co-segmentation problem, we propose a collaborative RL algorithm based on the A3C model. We propose a Siamese RL co-segmentation network structure to obtain the co-attention of images for co-segmentation. We improve self-attention in the automatic RL algorithm to capture long-distance dependencies and enlarge the receptive field. The image feature information obtained by self-attention compensates for the removed user hints and helps obtain more accurate actions. Experimental results show that our method effectively improves performance on both coarse and fine initial segmentations, and it achieves state-of-the-art performance on the Internet, iCoseg, and MLMR-COS datasets.
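A3C, which RL-CoSeg uses for optimization, updates its actor with advantage estimates computed from n-step returns. The sketch below shows only that standard computation, A_t = R_t − V(s_t) with R_t = r_t + γR_{t+1} bootstrapped from the critic's value of the last state. The co-segmentation states, actions, and rewards of RL-CoSeg are not described in the abstract and are not modeled here; the function name is hypothetical.

```python
def n_step_advantages(rewards, values, bootstrap_value, gamma=0.99):
    """Advantages A_t = R_t - V(s_t) from discounted n-step returns, as used by A3C."""
    returns = []
    R = bootstrap_value                 # V(s_T) from the critic, or 0 at a terminal state
    for r in reversed(rewards):
        R = r + gamma * R               # R_t = r_t + gamma * R_{t+1}
        returns.append(R)
    returns.reverse()                   # back to chronological order
    return [R_t - v for R_t, v in zip(returns, values)]
```

For example, with rewards `[1.0, 0.0, 1.0]`, constant values `0.5`, a terminal bootstrap of `0.0`, and `gamma=0.5`, the returns are `[1.25, 0.5, 1.0]` and the advantages are `[0.75, 0.0, 0.5]`.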

Generating meta-learning tasks to evolve parametric loss for classification learning

Nov 20, 2021

Abstract:The field of meta-learning has seen a dramatic rise in interest in recent years. In existing meta-learning approaches, the learning tasks used to train meta-models are usually collected from public datasets, which makes it difficult to obtain a sufficient number of meta-learning tasks with a large amount of training data. In this paper, we propose a meta-learning approach based on randomly generated meta-learning tasks to obtain a parametric loss for classification learning based on big data. The loss is represented by a deep neural network, called the meta-loss network (MLN). To train the MLN, we construct a large number of classification learning tasks by randomly generating training data, validation data, and a corresponding ground-truth linear classifier. Our approach has two advantages. First, sufficient meta-learning tasks with large amounts of training data can be obtained easily. Second, the ground-truth classifier is given, so the difference between the learned classifier and the ground-truth model can be measured, reflecting the performance of the MLN more precisely than validation accuracy. Based on this difference, we apply an evolution strategy algorithm to find the optimal MLN. The resulting MLN not only leads to satisfactory learning on the generated linear classifier learning tasks used for testing, but also performs very well on generated nonlinear classifier learning tasks and various public classification tasks. Our MLN consistently surpasses cross-entropy (CE) and mean squared error (MSE) in testing accuracy and generalization ability. These results illustrate the possibility of achieving satisfactory meta-learning effects using generated learning tasks.
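A hypothetical generator for the kind of task described above: a random ground-truth linear classifier, training and validation sets labeled by it, and a measure of how far a learned classifier is from the ground truth. The abstract specifies neither the data distribution nor the difference measure, so the Gaussian sampling and the cosine-based gap below are assumptions, as are the function names.

```python
import numpy as np

def make_linear_task(n_train, n_val, dim, rng):
    """Hypothetical task generator: random ground-truth linear classifier plus data it labels."""
    w_true = rng.normal(size=dim)
    b_true = rng.normal()

    def sample(n):
        X = rng.normal(size=(n, dim))                  # assumed Gaussian inputs
        y = (X @ w_true + b_true > 0).astype(int)      # labels from the ground-truth classifier
        return X, y

    return sample(n_train), sample(n_val), (w_true, b_true)

def classifier_gap(w, b, w_true, b_true):
    """1 - cosine similarity of (w, b) vectors: 0 for the same decision rule up to positive scaling."""
    a, t = np.append(w, b), np.append(w_true, b_true)
    return 1.0 - float(a @ t / (np.linalg.norm(a) * np.linalg.norm(t)))
```

The gap is scale-invariant because multiplying `(w, b)` by a positive constant does not change the predicted labels, which is one reason a parameter-space measure can be more informative than validation accuracy alone.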

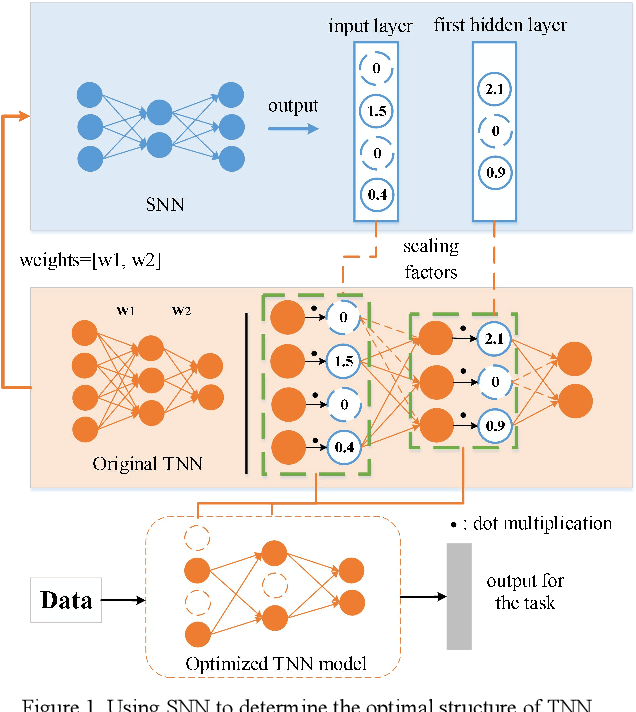

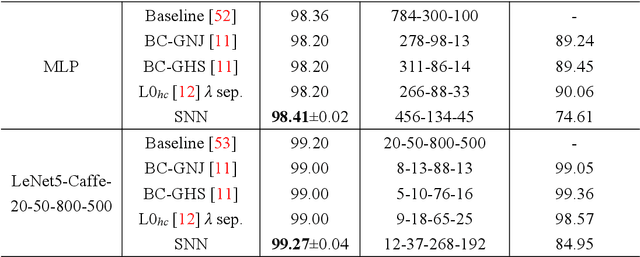

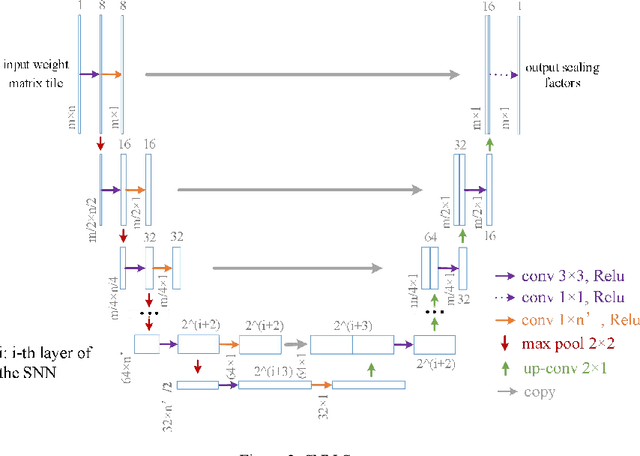

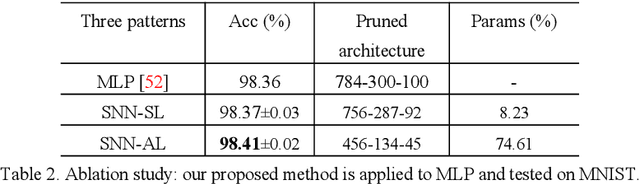

Mining the Weights Knowledge for Optimizing Neural Network Structures

Oct 11, 2021

Abstract:Knowledge embedded in the weights of an artificial neural network can be used to improve the network structure, for example in network compression. However, this knowledge is typically specified by hand, which may be inaccurate and may overlook relevant information. Inspired by how learning works in the mammalian brain, in this paper we mine the knowledge contained in the weights of a neural network toward automatic architecture learning. We introduce a switcher neural network (SNN) that takes as input the weights of a task-specific neural network (TNN for short). By mining the knowledge contained in these weights, the SNN outputs scaling factors that turn off and weight neurons in the TNN. To optimize the structure and the parameters of the TNN simultaneously, the SNN and TNN are learned alternately with stochastic gradient descent under the same performance evaluation of the TNN. We test our method on widely used datasets and popular networks in classification applications. In terms of accuracy, we consistently and significantly outperform baseline networks and other structure learning methods. At the same time, we compress the baseline networks without introducing any sparsity-inducing mechanism; in particular, our method leads to a lower compression rate when dealing with simpler baselines or more difficult tasks. These results demonstrate that our method can produce a more reasonable structure.
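A hypothetical sketch of how scaling factors produced from a layer's weights can both turn off and weight TNN neurons. The SNN architecture is an assumption: a two-layer ReLU network that maps each neuron's incoming weight vector to a non-negative scale, where a scale of zero switches the neuron off. The alternating SGD training of SNN and TNN described above is not shown, and all names are illustrative.

```python
import numpy as np

def switcher_scales(tnn_weights, snn_w1, snn_w2):
    """Hypothetical SNN: map each neuron's incoming weight row to a non-negative scale."""
    h = np.maximum(tnn_weights @ snn_w1, 0.0)          # (n_neurons, hidden), ReLU
    return np.maximum(h @ snn_w2, 0.0).ravel()         # (n_neurons,), 0 switches the neuron off

def gated_layer(x, tnn_weights, scales):
    """TNN layer whose ReLU activations are weighted by the SNN scales."""
    return np.maximum(x @ tnn_weights.T, 0.0) * scales

def switched_off_fraction(scales):
    """Share of neurons turned off, a simple proxy for how much the layer is compressed."""
    return float(np.mean(scales == 0))
```

Because the scales come from a ReLU, exact zeros arise naturally, which is consistent with the abstract's point that compression happens without an explicit sparsity-inducing term.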

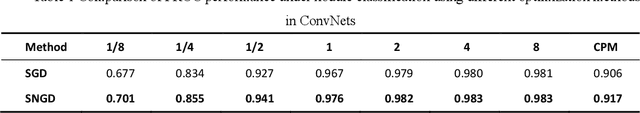

A Novel Structured Natural Gradient Descent for Deep Learning

Sep 21, 2021

Abstract:Natural gradient descent (NGD) provides deep insights and powerful tools for deep neural networks. However, computing the Fisher information matrix becomes increasingly difficult as the network structure grows larger and more complex. This paper proposes a new optimization method whose main idea is to accurately replace natural gradient optimization by reconstructing the network. More specifically, we reconstruct the structure of the deep neural network and optimize the new network using traditional gradient descent (GD). Optimizing the reconstructed network with GD achieves the same effect as optimizing the original network with natural gradient descent. Experimental results show that our optimization method can accelerate the convergence of deep network models and achieve better performance than GD while sharing its computational simplicity.

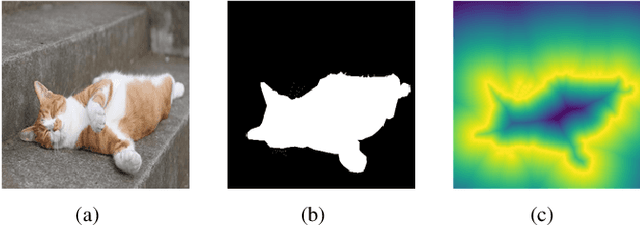

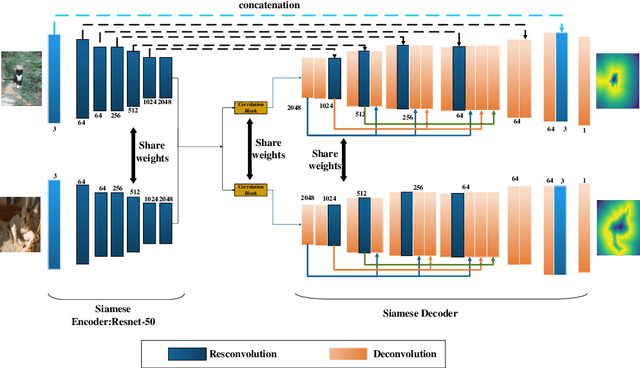

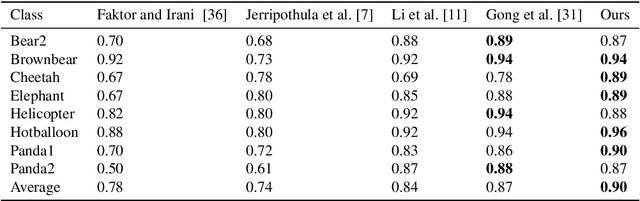

A Dense Siamese U-Net trained with Edge Enhanced 3D IOU Loss for Image Co-segmentation

Aug 17, 2021

Abstract:Image co-segmentation has attracted much attention in the computer vision community. In this paper, we propose a new approach to image co-segmentation that introduces dense connections into the decoder path of a Siamese U-net and presents a new edge enhanced 3D IOU loss measured over distance maps. Considering the rigorous mapping between the signed normalized distance map (SNDM) and the binary segmentation mask, we estimate SNDMs directly from the original images and use them to determine the segmentation results. We apply the Siamese U-net to this problem and improve its effectiveness by densely connecting each layer with subsequent layers in the decoder path. Furthermore, a new learning loss is designed to measure the 3D intersection over union (IOU) between the generated SNDMs and the labeled SNDMs. Experimental results on commonly used image co-segmentation datasets demonstrate the effectiveness of the presented dense structure and the edge enhanced 3D IOU loss of SNDM. To the best of our knowledge, they achieve state-of-the-art performance on the Internet and iCoseg datasets.
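A minimal sketch of an SNDM and a volumetric IoU between two SNDMs. The normalization and the IoU definition are assumptions, since the abstract does not give them: inside distances (to the nearest background pixel) are divided by their maximum and outside distances (to the nearest foreground pixel) by theirs, giving values in (0, 1] and [-1, 0), and the hypothetical `iou_3d` treats each map, shifted to [0, 1], as a height field and takes Σmin / Σmax. The edge-enhancement term is not modeled. Because the sign of the map recovers the mask exactly, the sketch also illustrates the mask/SNDM mapping the abstract relies on.

```python
import numpy as np

def _nearest_dist(points, targets):
    # Euclidean distance from each point to its nearest target (brute force)
    diff = points[:, None, :] - targets[None, :, :]
    return np.sqrt((diff ** 2).sum(-1)).min(axis=1)

def sndm(mask):
    """Hypothetical signed normalized distance map: (0, 1] inside, [-1, 0) outside."""
    fg, bg = np.argwhere(mask > 0), np.argwhere(mask == 0)
    if len(fg) == 0 or len(bg) == 0:
        raise ValueError("mask must contain both foreground and background")
    d_in, d_out = _nearest_dist(fg, bg), _nearest_dist(bg, fg)
    out = np.zeros(mask.shape)
    out[tuple(fg.T)] = d_in / d_in.max()               # positive inside the object
    out[tuple(bg.T)] = -d_out / d_out.max()            # negative outside the object
    return out

def iou_3d(pred_sndm, true_sndm):
    """Volumetric IoU of the two maps viewed as height fields over the image plane."""
    a = (pred_sndm + 1) / 2                            # shift [-1, 1] to [0, 1] so volumes are non-negative
    b = (true_sndm + 1) / 2
    return float(np.minimum(a, b).sum() / np.maximum(a, b).sum())
```

A training loss under these assumptions would be `1 - iou_3d(pred, target)`, and a binary segmentation is read off a predicted map as `pred > 0`.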
