Huiyu Li

Effective Attention-Guided Multi-Scale Medical Network for Skin Lesion Segmentation

Dec 08, 2025

Abstract: In the field of healthcare, precise skin lesion segmentation is crucial for the early detection and accurate diagnosis of skin diseases. Despite significant advances in deep learning for image processing, existing methods have yet to effectively address the challenges of irregular lesion shapes and low contrast. To address these issues, this paper proposes an innovative encoder-decoder network architecture based on multi-scale residual structures, capable of extracting rich feature information from different receptive fields to effectively identify lesion areas. By introducing a Multi-Resolution Multi-Channel Fusion (MRCF) module, our method captures cross-scale features, enhancing the clarity and accuracy of the extracted information. Furthermore, we propose a Cross-Mix Attention Module (CMAM), which redefines the attention scope and dynamically calculates weights across multiple contexts, thus improving the flexibility and depth of feature capture and enabling deeper exploration of subtle features. To overcome the information loss caused by skip connections in traditional U-Net, an External Attention Bridge (EAB) is introduced, facilitating the effective utilization of information in the decoder and compensating for the loss during upsampling. Extensive experimental evaluations on several skin lesion segmentation datasets demonstrate that the proposed model significantly outperforms existing transformer and convolutional neural network-based models, showcasing exceptional segmentation accuracy and robustness.
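The abstract does not spell out how the External Attention Bridge is computed. For orientation only, the NumPy sketch below implements the generic external-attention operation (attention against two small memory matrices with double normalization) on a flattened feature map. The memory size `S`, the random weights, and the function name `external_attention` are illustrative assumptions, not the paper's EAB.

```python
import numpy as np

def external_attention(features, m_k, m_v):
    """Generic external attention over a flattened feature map (illustrative sketch).

    features: (N, d) array, one row per spatial position.
    m_k, m_v: (S, d) memory units (learnable in practice; random here).
    """
    logits = features @ m_k.T                     # (N, S) affinity to each memory slot
    # Double normalization, step 1: softmax over positions (axis 0).
    logits = logits - logits.max(axis=0, keepdims=True)
    attn = np.exp(logits)
    attn = attn / attn.sum(axis=0, keepdims=True)
    # Step 2: L1-normalize each position's weights over the S memory slots.
    attn = attn / attn.sum(axis=1, keepdims=True)
    return attn @ m_v                             # (N, d) refined features

rng = np.random.default_rng(0)
H, W, d, S = 8, 8, 16, 4
fmap = rng.standard_normal((H * W, d))            # stand-in for encoder features
m_k = rng.standard_normal((S, d))
m_v = rng.standard_normal((S, d))
out = external_attention(fmap, m_k, m_v)
print(out.shape)  # (64, 16)
```

Because the memory units are shared across all positions, the cost grows linearly with the number of pixels N rather than quadratically as in self-attention.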

Generative Medical Image Anonymization Based on Latent Code Projection and Optimization

Jan 15, 2025

Abstract: Medical image anonymization aims to protect patient privacy by removing identifying information, while preserving the data utility for downstream tasks. In this paper, we address the medical image anonymization problem with a two-stage solution: latent code projection and optimization. In the projection stage, we design a streamlined encoder to project input images into a latent space and propose a co-training scheme to enhance the projection process. In the optimization stage, we refine the latent code using two deep loss functions, designed specifically for medical images, that address the trade-off between identity protection and data utility. Through a comprehensive set of qualitative and quantitative experiments, we showcase the effectiveness of our approach on the MIMIC-CXR chest X-ray dataset by generating anonymized synthetic images that can serve as a training set for detecting lung pathologies. Source code is available at https://github.com/Huiyu-Li/GMIA.
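The two-stage projection-then-optimization scheme can be illustrated with a toy linear stand-in. In this sketch, which is not the paper's model, a random matrix `W` plays the generator, `A` and `B` are hypothetical utility and identity feature extractors, the projection stage is a least-squares pseudo-inverse instead of the streamlined encoder, and the two deep losses are replaced by a quadratic utility term and a hinge identity term with an assumed margin.

```python
import numpy as np

rng = np.random.default_rng(0)
D, k, p, q = 32, 16, 4, 4                      # image dim, latent dim, utility/identity feature dims
W = rng.standard_normal((D, k)) / np.sqrt(D)   # toy linear "generator": image = W @ z
A = rng.standard_normal((p, D)) / np.sqrt(D)   # hypothetical utility feature extractor
B = rng.standard_normal((q, D)) / np.sqrt(D)   # hypothetical identity feature extractor
x = rng.standard_normal(D)                     # the real image to anonymize
MARGIN, LAM, LR = 5.0, 1.0, 0.1                # assumed hyperparameters

def losses(z):
    img = W @ z
    util = np.sum((A @ img - A @ x) ** 2)      # keep utility features close to the original
    dist = np.linalg.norm(B @ img - B @ x)     # identity distance from the original
    ident = max(0.0, MARGIN - dist) ** 2       # hinge: penalize only if identity is too close
    return util, dist, ident

def grad(z):
    img = W @ z
    g = 2 * (A @ W).T @ (A @ img - A @ x)      # gradient of the utility term
    diff = B @ img - B @ x
    dist = np.linalg.norm(diff)
    if 0 < dist < MARGIN:                      # gradient of the active hinge term
        g += LAM * (-2 * (MARGIN - dist) / dist) * (B @ W).T @ diff
    return g

# Stage 1 (projection): least-squares latent code for x.
z0 = np.linalg.pinv(W) @ x
# Stage 2 (optimization): gradient descent on the combined objective.
z = z0.copy()
for _ in range(2000):
    z -= LR * grad(z)

util0, dist0, _ = losses(z0)
util1, dist1, _ = losses(z)
print(f"utility loss {util0:.3f} -> {util1:.3f}, identity distance {dist0:.3f} -> {dist1:.3f}")
```

The hinge switches the identity term off once the synthetic image is far enough from the original, after which the optimizer only works on preserving utility; this is one simple way to express the privacy/utility trade-off, chosen here for illustration.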

Deep Distance Map Regression Network with Shape-aware Loss for Imbalanced Medical Image Segmentation

Jan 15, 2025

Abstract: Small object segmentation, such as tumor segmentation, is a difficult and critical task in medical image analysis. Although deep learning-based methods have achieved promising performance, they are restricted to using binary segmentation masks. Inspired by the rigorous mapping between a binary segmentation mask and its distance map, we adopt the distance map as a novel ground truth and employ a network to compute it. Specifically, we propose a new segmentation framework that incorporates an existing binary segmentation network and a lightweight regression network (dubbed LR-Net). The LR-Net thus converts the distance map computation into a regression task and leverages the rich information in distance maps. Additionally, we derive a shape-aware loss that employs distance maps as penalty maps to infer the complete shape of an object. We evaluated our approach on the MICCAI 2017 Liver Tumor Segmentation (LiTS) Challenge dataset and a clinical dataset. Experimental results show that our approach outperforms classification-based methods as well as other existing state-of-the-art methods.
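The mapping between a binary mask and its distance map, and the idea of using the distance map as a penalty map, can be sketched as follows. The brute-force signed distance transform (positive inside the object) is only practical for small images, and the weighting `1 + |D| / max|D|` in the hypothetical `shape_aware_bce` is an assumed form; the abstract does not give the paper's loss formula.

```python
import numpy as np

def signed_distance_map(mask):
    """Brute-force signed Euclidean distance map of a 2D binary mask.

    Positive inside the object (distance to the nearest background pixel),
    negative outside (minus the distance to the nearest foreground pixel).
    Assumes the mask contains both foreground and background pixels.
    """
    ys, xs = np.indices(mask.shape)
    coords = np.stack([ys.ravel(), xs.ravel()], axis=1).astype(float)
    fg = coords[mask.ravel() == 1]
    bg = coords[mask.ravel() == 0]
    # Distances from every pixel to every fg / bg pixel (fine for small images).
    d_fg = np.sqrt(((coords[:, None, :] - fg[None, :, :]) ** 2).sum(-1)).min(axis=1)
    d_bg = np.sqrt(((coords[:, None, :] - bg[None, :, :]) ** 2).sum(-1)).min(axis=1)
    sdm = np.where(mask.ravel() == 1, d_bg, -d_fg)
    return sdm.reshape(mask.shape)

def shape_aware_bce(prob, mask, sdm, eps=1e-7):
    """Hypothetical shape-aware loss: BCE weighted by a distance-based penalty map."""
    penalty = 1.0 + np.abs(sdm) / np.abs(sdm).max()   # errors far from the boundary cost more
    prob = np.clip(prob, eps, 1 - eps)
    bce = -(mask * np.log(prob) + (1 - mask) * np.log(1 - prob))
    return float((penalty * bce).mean())

mask = np.zeros((9, 9), dtype=int)
mask[3:6, 3:6] = 1                    # a small 3x3 "tumor"
sdm = signed_distance_map(mask)
print(np.round(sdm, 2))
```

Thresholding the signed map at zero (`sdm > 0`) recovers the mask exactly, which is the property that makes the distance map usable as a regression target in place of the mask.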

* Conference

Detecting subtle macroscopic changes in a finite temperature classical scalar field with machine learning

Nov 21, 2023

Abstract: The ability to detect macroscopic changes is important for probing the behavior of experimental many-body systems from the classical to the quantum realm. Although abrupt changes near phase boundaries can easily be detected, subtle macroscopic changes are much more difficult to detect because they can be obscured by noise. In this study, as a toy model for detecting subtle macroscopic changes in many-body systems, we try to distinguish scalar field samples at different temperatures. We compare different methods for making such distinctions, ranging from a physics method and a statistical method to an AI method. Our findings suggest that the AI method is more sensitive than both the statistical method and the physics method. Our result provides a proof of concept that AI can potentially detect macroscopic changes in many-body systems that elude physical measures.

A Network Resource Allocation Recommendation Method with An Improved Similarity Measure

Jul 07, 2023

Abstract: Recommender systems have been acknowledged as effective tools for managing information overload. Nevertheless, conventional algorithms adopted in such systems primarily emphasize accurate recommendations and consequently overlook other vital aspects such as the coverage, diversity, and novelty of items. As a result, long-tail items receive less exposure. In this paper, to personalize recommendations and allocate recommendation resources more purposefully, a method named PIM+RA is proposed. This method uses a bipartite network that incorporates self-connecting edges and weights. Furthermore, an improved Pearson correlation coefficient is employed for better redistribution. The evaluation of PIM+RA shows significant improvements not only in accuracy but also in the coverage, diversity, and novelty of recommendations. It achieves a better balance in recommendation frequency by giving long-tail items effective exposure, while allowing customized parameters to adjust the bias of the recommendation list.
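PIM+RA builds on resource allocation over a user-item bipartite network. As background only, the sketch below implements the standard two-step mass-diffusion (resource-allocation) scoring; the self-connecting edges, edge weights, and improved Pearson correlation that distinguish PIM+RA are not reproduced, and the toy adjacency matrix is made up.

```python
import numpy as np

def mass_diffusion_scores(adj, user):
    """Two-step resource allocation (mass diffusion) on a user-item bipartite network.

    adj: (n_users, n_items) 0/1 matrix; adj[u, i] = 1 if user u collected item i.
    Returns a score per item for `user`; already-collected items get -inf.
    """
    item_deg = adj.sum(axis=0)                 # k(i): number of users per item
    user_deg = adj.sum(axis=1)                 # k(u): number of items per user
    f = adj[user].astype(float)                # unit resource on each collected item
    # Step 1: each item splits its resource equally among the users who collected it.
    g = adj @ (f / np.maximum(item_deg, 1))
    # Step 2: each user splits what they received equally among their items.
    scores = adj.T @ (g / np.maximum(user_deg, 1))
    scores[adj[user] == 1] = -np.inf           # do not recommend items already collected
    return scores

adj = np.array([
    [1, 1, 0, 0],
    [1, 0, 1, 0],
    [0, 1, 1, 1],
])
scores = mass_diffusion_scores(adj, user=0)
print(scores)
```

For user 0, the uncollected items score 5/12 (item 2) and 1/6 (item 3), so item 2 is recommended first. Plain mass diffusion is known to favor popular items, which is the kind of bias toward head items that PIM+RA's modified redistribution aims to reduce.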

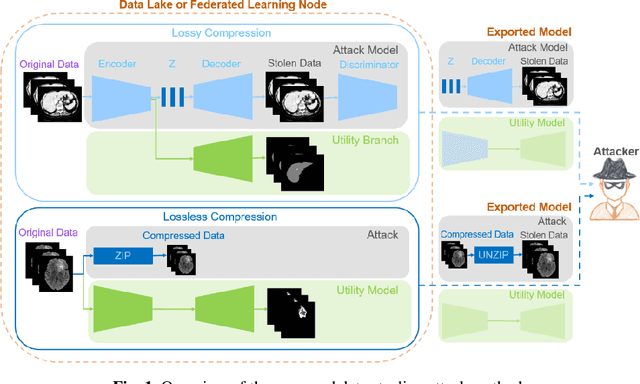

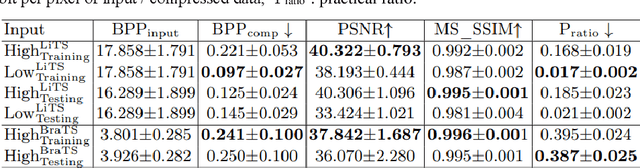

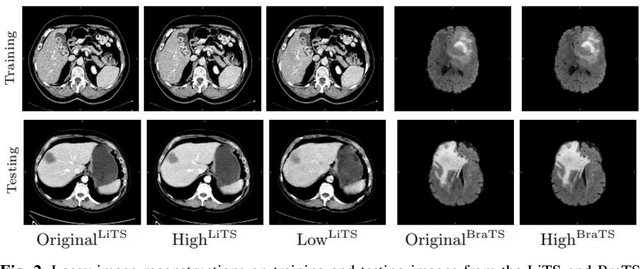

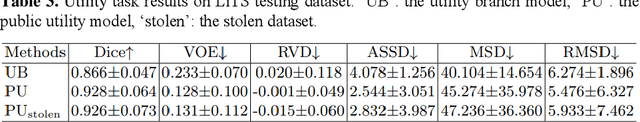

Data Stealing Attack on Medical Images: Is it Safe to Export Networks from Data Lakes?

Jun 07, 2022

Abstract: In privacy-preserving machine learning, it is common that the owner of the learned model does not have any physical access to the data. Instead, the model owner is granted only secured remote access to a data lake, without any ability to retrieve data from it. Yet, the model owner may want to export the trained model periodically from the remote repository, and the question arises whether this poses a risk of data leakage. In this paper, we introduce the concept of a data stealing attack during the export of neural networks. It consists of hiding some information in the exported network that allows images initially stored in the data lake to be reconstructed outside of it. More precisely, we show that it is possible to train a network that can perform lossy image compression and at the same time solve some utility tasks such as image segmentation. The attack then proceeds by exporting the compression decoder network together with some image codes that lead to the image reconstruction outside the data lake. We explore the feasibility of such attacks on databases of CT and MR images, showing that it is possible to obtain perceptually meaningful reconstructions of the target dataset, and that the stolen dataset can in turn be used to solve a broad range of tasks. Comprehensive experiments and analyses show that data stealing attacks should be considered a threat to sensitive imaging data sources.
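The core of the attack, exporting a decoder together with compact codes, can be illustrated with a toy in which PCA stands in for the learned compression network. The synthetic "images", the code size `k`, and the 8-bit quantization are illustrative assumptions, and the jointly trained utility task (e.g. segmentation) described in the paper is omitted.

```python
import numpy as np

rng = np.random.default_rng(0)

# --- Inside the data lake (toy data: 200 smooth 16x16 "images") ---
n, side, k = 200, 16, 8
t = np.linspace(0, 1, side)
images = np.stack([
    np.outer(np.sin(2 * np.pi * (t + rng.random())), np.cos(2 * np.pi * (t + rng.random())))
    for _ in range(n)
]).reshape(n, -1)

mean = images.mean(axis=0)
# PCA via SVD stands in for the learned compression encoder/decoder.
_, _, vt = np.linalg.svd(images - mean, full_matrices=False)
basis = vt[:k]                                # (k, side*side) decoder weights
codes = (images - mean) @ basis.T             # (n, k) latent codes
lo, hi = codes.min(), codes.max()
q_codes = np.round((codes - lo) / (hi - lo) * 255).astype(np.uint8)  # 8-bit quantization

# --- What gets exported: decoder parameters plus compact codes ---
exported = {"mean": mean, "basis": basis, "lo": lo, "hi": hi, "codes": q_codes}

# --- Outside the data lake: rebuild the images from the export alone ---
def reconstruct(e):
    deq = e["codes"].astype(float) / 255 * (e["hi"] - e["lo"]) + e["lo"]
    return deq @ e["basis"] + e["mean"]

recon = reconstruct(exported)
err = np.mean((recon - images) ** 2)
baseline = np.mean((mean - images) ** 2)      # error of guessing the mean image
print(f"reconstruction MSE {err:.6f} vs mean-image baseline {baseline:.6f}")
```

Only the decoder parameters and 8 bytes per image leave the data lake in this toy, yet the images can be rebuilt from them, which is the kind of leakage the paper warns about.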

A Dense Siamese U-Net trained with Edge Enhanced 3D IOU Loss for Image Co-segmentation

Aug 17, 2021

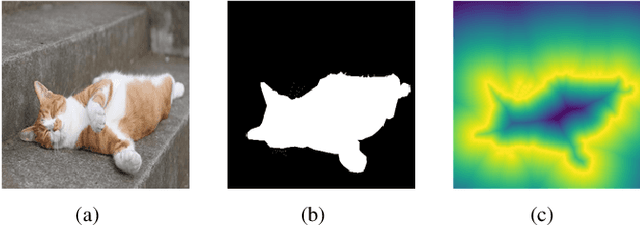

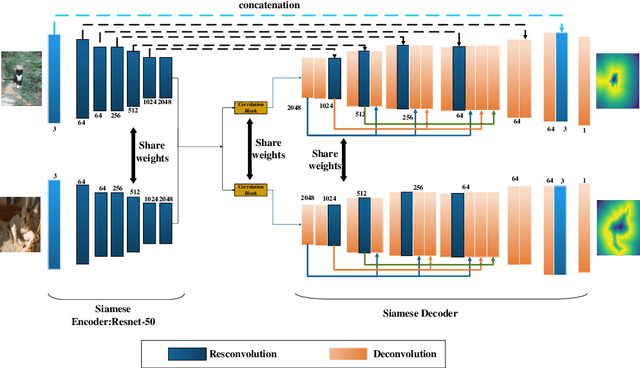

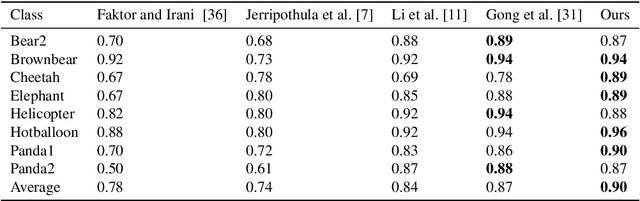

Abstract: Image co-segmentation has attracted a lot of attention in the computer vision community. In this paper, we propose a new approach to image co-segmentation by introducing dense connections into the decoder path of a Siamese U-Net and presenting a new edge-enhanced 3D IOU loss measured over distance maps. Considering the rigorous mapping between the signed normalized distance map (SNDM) and the binary segmentation mask, we estimate the SNDMs directly from the original images and use them to determine the segmentation results. We apply the Siamese U-Net to this problem and improve its effectiveness by densely connecting each layer with subsequent layers in the decoder path. Furthermore, a new learning loss is designed to measure the 3D intersection over union (IOU) between the generated SNDMs and the labeled SNDMs. The experimental results on commonly used datasets for image co-segmentation demonstrate the effectiveness of the presented dense structure and the edge-enhanced 3D IOU loss of SNDM. To the best of our knowledge, they lead to state-of-the-art performance on the Internet and iCoseg datasets.
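The SNDM and a volume-based IoU between two SNDMs can be sketched as follows. The normalization (each side divided by its own maximum distance) and the reading of "3D IOU" as the IoU of the volumes under the two height fields, after shifting them to [0, 1], are assumptions; the edge-enhancement term of the paper's loss is not reproduced.

```python
import numpy as np

def signed_normalized_distance_map(mask):
    """SNDM sketch: signed Euclidean distance to the opposite class, scaled to [-1, 1].

    Positive inside the object, negative outside; each side is divided by its own
    maximum so the map is normalized. Assumes both classes are present.
    """
    coords = np.argwhere(np.ones_like(mask, dtype=bool)).astype(float)  # row-major order
    flat = mask.ravel().astype(bool)

    def nearest(targets):
        d = np.sqrt(((coords[:, None, :] - targets[None, :, :]) ** 2).sum(-1))
        return d.min(axis=1)

    d_in = nearest(coords[~flat])     # used for fg pixels: distance to background
    d_out = nearest(coords[flat])     # used for bg pixels: distance to foreground
    sndm = np.where(flat, d_in / d_in[flat].max(), -d_out / d_out[~flat].max())
    return sndm.reshape(mask.shape)

def soft_volume_iou(sndm_a, sndm_b):
    """Assumed '3D IoU': shift each SNDM to [0, 1], treat it as a height field,
    and compare the volumes under the surfaces (intersection = min, union = max)."""
    a = (sndm_a + 1) / 2
    b = (sndm_b + 1) / 2
    return float(np.minimum(a, b).sum() / np.maximum(a, b).sum())

def iou_loss(pred_sndm, gt_sndm):
    return 1.0 - soft_volume_iou(pred_sndm, gt_sndm)

gt = np.zeros((10, 10), dtype=int)
gt[2:7, 2:7] = 1
shifted = np.zeros((10, 10), dtype=int)
shifted[3:8, 3:8] = 1
gt_map = signed_normalized_distance_map(gt)
print(iou_loss(gt_map, gt_map))  # 0.0 for identical maps
print(iou_loss(signed_normalized_distance_map(shifted), gt_map))
```

Since foreground pixels get strictly positive values and background pixels strictly negative ones, thresholding the SNDM at zero recovers the segmentation mask.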

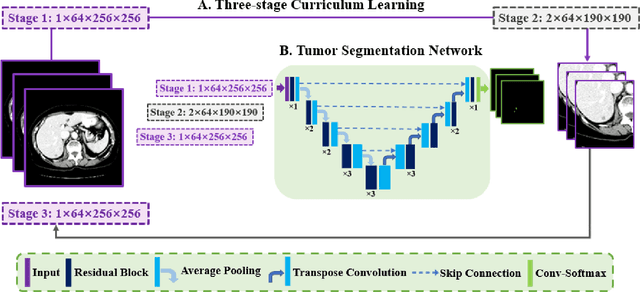

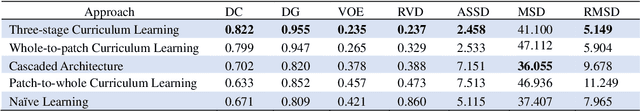

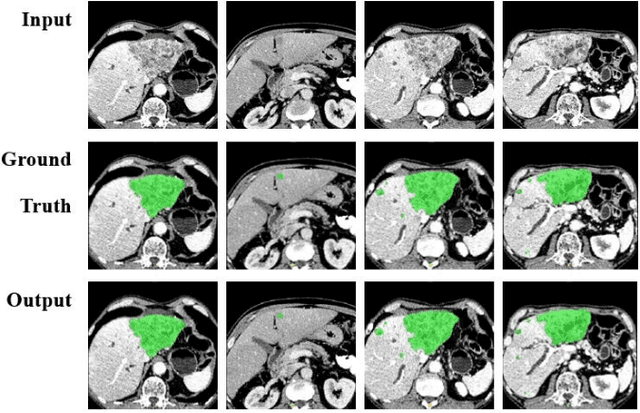

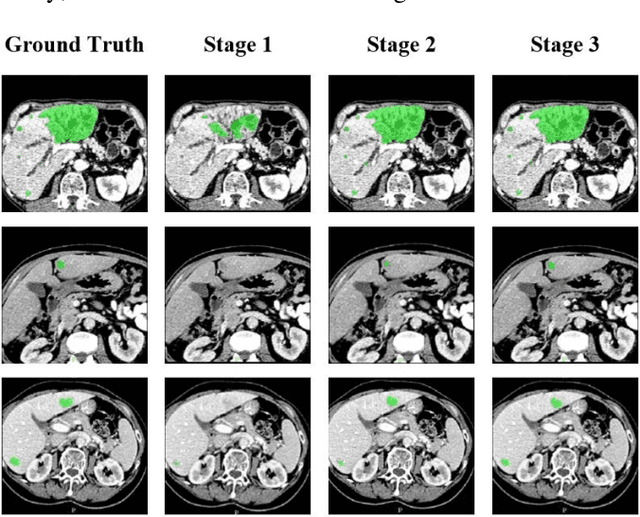

A New Three-stage Curriculum Learning Approach to Deep Network Based Liver Tumor Segmentation

Oct 17, 2019

Abstract: Automatic segmentation of liver tumors in medical images is crucial for computer-aided diagnosis and therapy. It is a challenging task, since the tumors are notoriously small against the background voxels. This paper proposes a new three-stage curriculum learning approach for training deep networks to tackle this small object segmentation problem. The learning in the first stage is performed on the whole input to obtain an initial deep network for tumor segmentation. The second stage then focuses on strengthening tumor-specific features by continuing to train the network on tumor patches. Finally, we retrain the network on the whole input in the third stage, so that the tumor-specific features and the global context can be integrated under the segmentation objective. Benefiting from the proposed learning approach, we need only a single network to segment the tumors directly. We evaluated our approach on the 2017 MICCAI Liver Tumor Segmentation challenge dataset. In the experiments, our approach shows significant improvement over the commonly used cascaded counterpart.
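The three-stage schedule can be sketched as a data generator that feeds one network first whole inputs, then tumor-centered patches, then whole inputs again. The 2D slices, patch size, epoch counts, and the names `tumor_patch` and `three_stage_curriculum` are hypothetical choices for illustration; the paper's actual training settings are not given in the abstract.

```python
import numpy as np

def tumor_patch(image, label, size, rng):
    """Crop a size x size patch around a random tumor pixel, shifted to stay in bounds."""
    ys, xs = np.nonzero(label)
    i = rng.integers(len(ys))
    y0 = int(np.clip(ys[i] - size // 2, 0, image.shape[0] - size))
    x0 = int(np.clip(xs[i] - size // 2, 0, image.shape[1] - size))
    return image[y0:y0 + size, x0:x0 + size], label[y0:y0 + size, x0:x0 + size]

def three_stage_curriculum(images, labels, epochs=(2, 2, 2), patch=8, seed=0):
    """Yield (stage, image, label) training samples for a single network:
    stage 1 = whole inputs, stage 2 = tumor patches, stage 3 = whole inputs again."""
    rng = np.random.default_rng(seed)
    for stage, n_epochs in zip((1, 2, 3), epochs):
        for _ in range(n_epochs):
            for img, lab in zip(images, labels):
                if stage == 2:
                    if lab.any():             # stage 2 only uses slices containing tumor
                        p_img, p_lab = tumor_patch(img, lab, patch, rng)
                        yield stage, p_img, p_lab
                else:
                    yield stage, img, lab

rng = np.random.default_rng(1)
images = [rng.standard_normal((32, 32)) for _ in range(3)]
labels = [np.zeros((32, 32), dtype=int) for _ in range(3)]
labels[0][10:13, 20:23] = 1                   # only the first slice has a (tiny) tumor
samples = list(three_stage_curriculum(images, labels))
# Each sample would be passed to a (hypothetical) train_step(model, img, lab).
print([(s, img.shape) for s, img, _ in samples])
```

Because the same network receives every stage's samples, no second cascaded model is needed at inference time.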
