Xi Guo

InstaDrive: Instance-Aware Driving World Models for Realistic and Consistent Video Generation

Feb 03, 2026

Abstract:Autonomous driving relies on robust models trained on high-quality, large-scale multi-view driving videos. While world models offer a cost-effective solution for generating realistic driving videos, they struggle to maintain instance-level temporal consistency and spatial geometric fidelity. To address these challenges, we propose InstaDrive, a novel framework that enhances driving video realism through two key advancements: (1) Instance Flow Guider, which extracts and propagates instance features across frames to enforce temporal consistency, preserving instance identity over time. (2) Spatial Geometric Aligner, which improves spatial reasoning, ensures precise instance positioning, and explicitly models occlusion hierarchies. By incorporating these instance-aware mechanisms, InstaDrive achieves state-of-the-art video generation quality and enhances downstream autonomous driving tasks on the nuScenes dataset. Additionally, we utilize CARLA's autopilot to procedurally and stochastically simulate rare but safety-critical driving scenarios across diverse maps and regions, enabling rigorous safety evaluation for autonomous systems. Our project page is https://shanpoyang654.github.io/InstaDrive/page.html.

AbductiveMLLM: Boosting Visual Abductive Reasoning Within MLLMs

Jan 06, 2026

Abstract:Visual abductive reasoning (VAR) is a challenging task that requires AI systems to infer the most likely explanation for incomplete visual observations. While recent MLLMs have developed strong general-purpose multimodal reasoning capabilities, they still fall short of humans in abductive inference. To bridge this gap, we draw inspiration from the interplay between verbal and pictorial abduction in human cognition, and propose to strengthen abduction in MLLMs by mimicking such dual-mode behavior. Concretely, we introduce AbductiveMLLM, comprising two synergistic components: REASONER and IMAGINER. The REASONER operates in the verbal domain. It first explores a broad space of possible explanations using a blind LLM and then prunes visually incongruent hypotheses based on cross-modal causal alignment. The remaining hypotheses are introduced into the MLLM as targeted priors, steering its reasoning toward causally coherent explanations. The IMAGINER, on the other hand, further guides MLLMs by emulating human-like pictorial thinking. It conditions a text-to-image diffusion model on both the input video and the REASONER's output embeddings to "imagine" plausible visual scenes that correspond to the verbal explanation, thereby enriching MLLMs' contextual grounding. The two components are trained jointly in an end-to-end manner. Experiments on standard VAR benchmarks show that AbductiveMLLM achieves state-of-the-art performance, consistently outperforming traditional solutions and advanced MLLMs.

Physical Informed Driving World Model

Dec 13, 2024

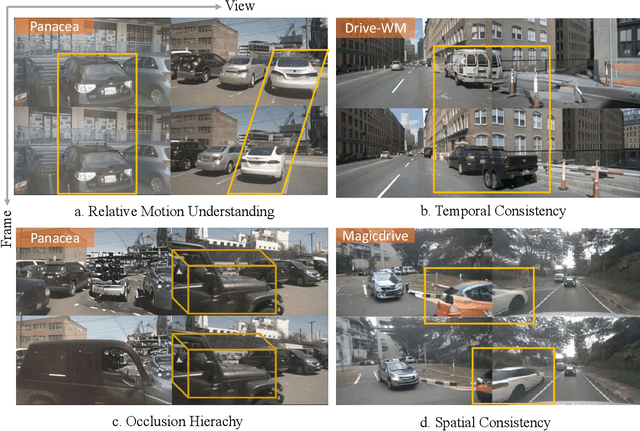

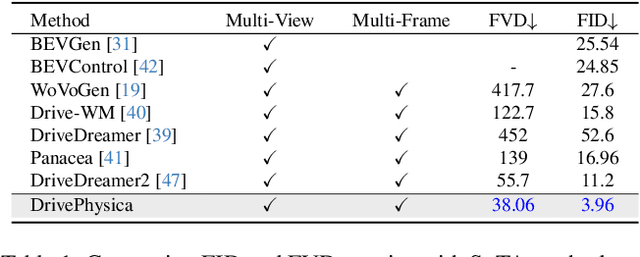

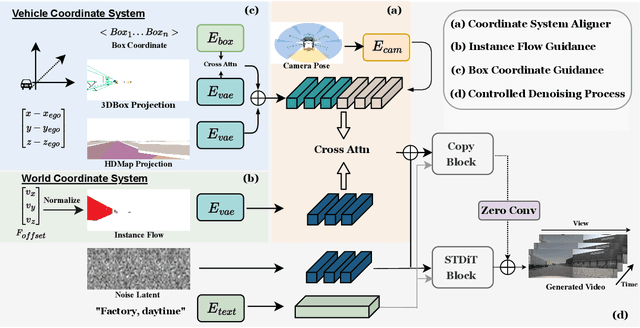

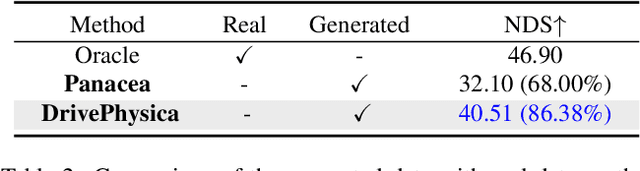

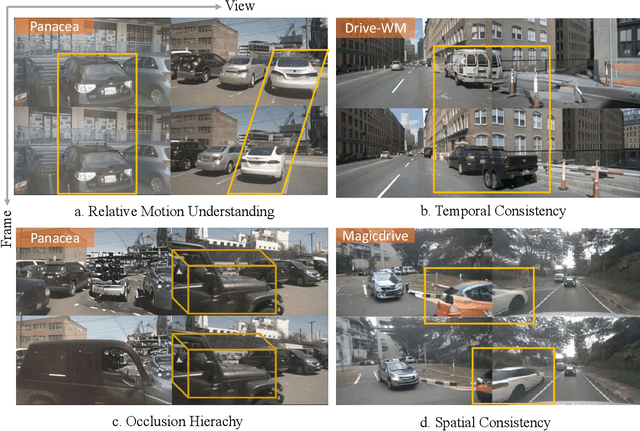

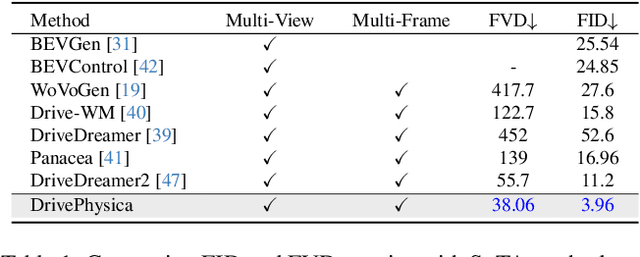

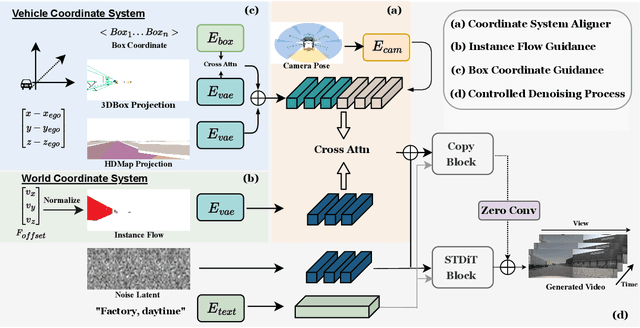

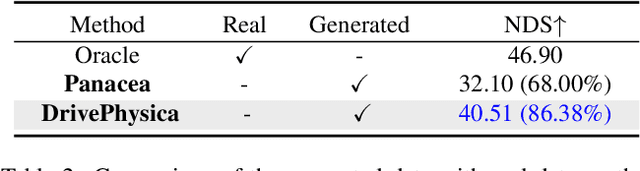

Abstract:Autonomous driving requires robust perception models trained on high-quality, large-scale multi-view driving videos for tasks like 3D object detection, segmentation and trajectory prediction. While world models provide a cost-effective solution for generating realistic driving videos, challenges remain in ensuring these videos adhere to fundamental physical principles, such as relative and absolute motion, spatial relationships such as occlusion and spatial consistency, and temporal consistency. To address these, we propose DrivePhysica, an innovative model designed to generate realistic multi-view driving videos that accurately adhere to essential physical principles through three key advancements: (1) a Coordinate System Aligner module that integrates relative and absolute motion features to enhance motion interpretation, (2) an Instance Flow Guidance module that ensures precise temporal consistency via efficient 3D flow extraction, and (3) a Box Coordinate Guidance module that improves spatial relationship understanding and accurately resolves occlusion hierarchies. Grounded in physical principles, we achieve state-of-the-art performance in driving video generation quality (3.96 FID and 38.06 FVD on the nuScenes dataset) and downstream perception tasks. Our project homepage: https://metadrivescape.github.io/papers_project/DrivePhysica/page.html
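
For reference, the FID figure quoted above is conventionally defined as the Fréchet distance between two Gaussians fitted to Inception-network features of real and generated images. The symbols below are standard notation introduced here for illustration and are not taken from the paper: $\mu_r, \Sigma_r$ are the feature mean and covariance for real images, and $\mu_g, \Sigma_g$ those for generated images.

$$
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2 + \operatorname{Tr}\!\left(\Sigma_r + \Sigma_g - 2\,(\Sigma_r \Sigma_g)^{1/2}\right)
$$

Lower values indicate closer feature distributions. FVD applies the same distance to features extracted by a video network rather than an image network.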

InfinityDrive: Breaking Time Limits in Driving World Models

Dec 02, 2024

Abstract:Autonomous driving systems struggle with complex scenarios due to limited access to diverse, extensive, and out-of-distribution driving data, which are critical for safe navigation. World models offer a promising solution to this challenge; however, current driving world models are constrained by short time windows and limited scenario diversity. To bridge this gap, we introduce InfinityDrive, the first driving world model with exceptional generalization capabilities, delivering state-of-the-art performance in high fidelity, consistency, and diversity with minute-scale video generation. InfinityDrive introduces an efficient spatio-temporal co-modeling module paired with an extended temporal training strategy, enabling high-resolution (576$\times$1024) video generation with consistent spatial and temporal coherence. By incorporating memory injection and retention mechanisms alongside an adaptive memory curve loss to minimize cumulative errors, it achieves consistent video generation lasting over 1500 frames (approximately 2 minutes). Comprehensive experiments on multiple datasets validate InfinityDrive's ability to generate complex and varied scenarios, highlighting its potential as a next-generation driving world model built for the evolving demands of autonomous driving. Our project homepage: https://metadrivescape.github.io/papers_project/InfinityDrive/page.html

GIG: Graph Data Imputation With Graph Differential Dependencies

Oct 21, 2024

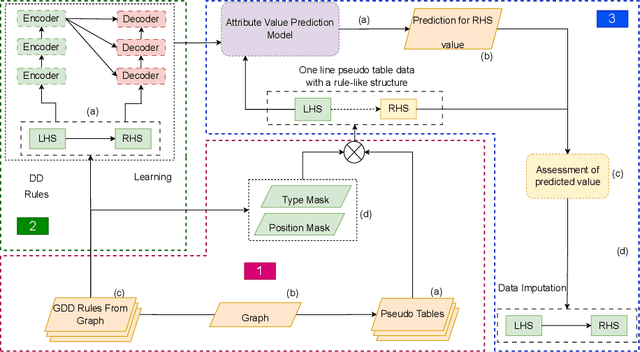

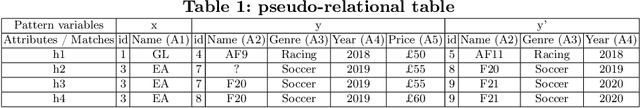

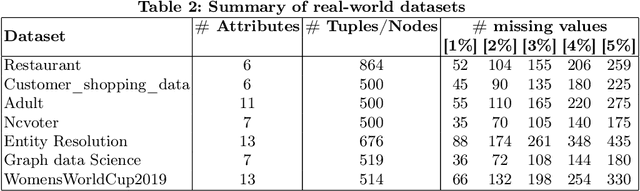

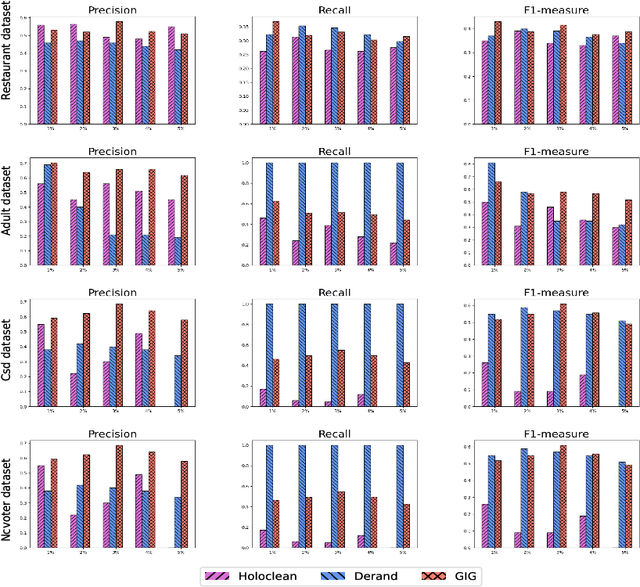

Abstract:Data imputation addresses the challenge of imputing missing values in database instances, ensuring consistency with the overall semantics of the dataset. Although several heuristics that rely on statistical methods and ad-hoc rules have been proposed, these do not generalise well and often lack data context. Consequently, they also lack explainability. Existing techniques also mostly focus on the relational data context, making them unsuitable for wider application contexts such as graph data. In this paper, we propose a graph data imputation approach called GIG, which relies on graph differential dependencies (GDDs). GIG learns the GDDs from a given knowledge graph and uses these rules to train a transformer model, which then predicts the value of missing data within the graph. By leveraging GDDs, GIG incorporates semantic knowledge into the data imputation process, making it more reliable and explainable. Experimental results on seven real-world datasets highlight GIG's effectiveness compared to existing state-of-the-art approaches.

Detection of Sleep Apnea-Hypopnea Events Using Millimeter-wave Radar and Pulse Oximeter

Sep 28, 2024

Abstract:Obstructive Sleep Apnea-Hypopnea Syndrome (OSAHS) is a sleep-related breathing disorder associated with significant morbidity and mortality worldwide. The gold standard for OSAHS diagnosis, polysomnography (PSG), faces challenges in popularization due to its high cost and complexity. Recently, radar has shown potential in detecting sleep apnea-hypopnea events (SAE) with the advantages of low cost and non-contact monitoring. However, existing studies, especially those using deep learning, employ a segment-based classification approach for SAE detection, which makes estimating the number of events difficult. Additionally, radar-based SAE detection is susceptible to interference from body movements and the environment. Oxygen saturation (SpO2) can offer valuable information about OSAHS, but it also has certain limitations and cannot be used alone for diagnosis. In this study, we propose a method, called ROSA, that uses a millimeter-wave radar and a pulse oximeter to detect SAE. It fuses information from both sensors and directly predicts the temporal localization of SAE. Experimental results demonstrate a high degree of consistency (ICC=0.9864) between the AHI from ROSA and that from PSG. This study presents an effective method with a low-load device for the diagnosis of OSAHS.
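
For context, the apnea-hypopnea index (AHI) mentioned above is conventionally the number of apnea and hypopnea events per hour of sleep, which is why temporally localized events matter for estimating it. The following is a minimal sketch of that calculation, not ROSA's implementation: the `(start_s, end_s)` event format, the function name, and the example values are hypothetical, and the 10-second minimum duration is assumed here as the commonly used adult scoring threshold.

```python
def apnea_hypopnea_index(events, total_sleep_time_s, min_duration_s=10.0):
    """Return the number of qualifying events per hour of sleep.

    events: list of (start_s, end_s) tuples, one per detected event
            (hypothetical format chosen for this sketch).
    total_sleep_time_s: total sleep time in seconds.
    min_duration_s: events shorter than this are not counted (assumed threshold).
    """
    if total_sleep_time_s <= 0:
        raise ValueError("total sleep time must be positive")
    counted = [(s, e) for s, e in events if e - s >= min_duration_s]
    return len(counted) / (total_sleep_time_s / 3600.0)


# Illustrative, made-up events over two hours of sleep.
events = [(120.0, 135.0), (900.0, 922.5), (4000.0, 4006.0), (6800.0, 6831.0)]
ahi = apnea_hypopnea_index(events, total_sleep_time_s=2 * 3600)
print(ahi)
```

A segment-based classifier would instead label fixed windows as event or non-event, so adjacent positive windows would have to be merged before a count like this could be taken.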

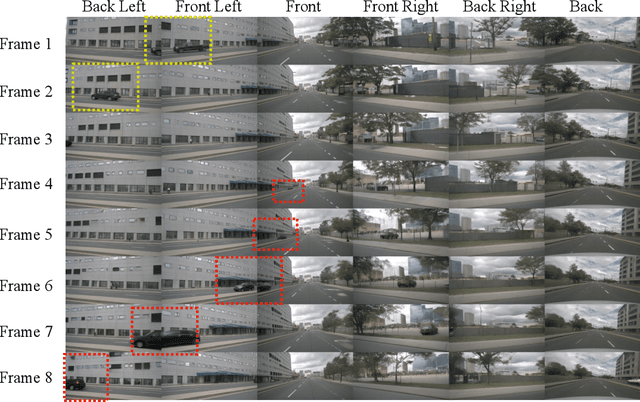

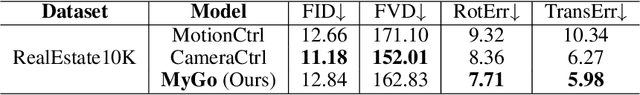

MyGo: Consistent and Controllable Multi-View Driving Video Generation with Camera Control

Sep 11, 2024

Abstract:High-quality driving video generation is crucial for providing training data for autonomous driving models. However, current generative models rarely focus on enhancing camera motion control under multi-view tasks, which is essential for driving video generation. Therefore, we propose MyGo, an end-to-end framework for video generation, introducing motion of onboard cameras as conditions to make progress in camera controllability and multi-view consistency. MyGo employs additional plug-in modules to inject camera parameters into the pre-trained video diffusion model, which retains the extensive knowledge of the pre-trained model as much as possible. Furthermore, we use epipolar constraints and neighbor view information during the generation process of each view to enhance spatial-temporal consistency. Experimental results show that MyGo has achieved state-of-the-art results in both general camera-controlled video generation and multi-view driving video generation tasks, which lays the foundation for more accurate environment simulation in autonomous driving. Project page: https://metadrivescape.github.io/papers_project/MyGo/page.html
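
The epipolar constraint mentioned above can be illustrated with a small geometric sketch. It shows only the constraint itself for two calibrated views, under the assumption that a point maps from camera 1 to camera 2 as `X2 = R @ X1 + t`; it does not show how MyGo uses the constraint during generation, which the abstract does not specify. All names and numeric values are hypothetical.

```python
import math


def skew(t):
    """Cross-product matrix [t]_x, so that skew(t) applied to v equals t x v."""
    tx, ty, tz = t
    return [[0.0, -tz, ty], [tz, 0.0, -tx], [-ty, tx, 0.0]]


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def matvec(a, v):
    return [sum(a[i][k] * v[k] for k in range(3)) for i in range(3)]


def dot(u, v):
    return sum(x * y for x, y in zip(u, v))


def essential_matrix(R, t):
    """E = [t]_x R for the relative pose X2 = R X1 + t."""
    return matmul(skew(t), R)


def epipolar_residual(E, x1, x2):
    """x2^T E x1; zero when x1 and x2 are projections of the same 3D point."""
    return dot(x2, matvec(E, x1))


# Hypothetical relative pose: 30 degree rotation about the y-axis plus a shift.
theta = math.radians(30.0)
R = [[math.cos(theta), 0.0, math.sin(theta)],
     [0.0, 1.0, 0.0],
     [-math.sin(theta), 0.0, math.cos(theta)]]
t = [0.5, 0.0, 0.1]
E = essential_matrix(R, t)

# Project one 3D point into both views (normalized image coordinates).
X1 = [1.0, -0.5, 8.0]
X2 = [a + b for a, b in zip(matvec(R, X1), t)]
x1 = [X1[0] / X1[2], X1[1] / X1[2], 1.0]
x2 = [X2[0] / X2[2], X2[1] / X2[2], 1.0]

residual = epipolar_residual(E, x1, x2)
# Shifting the second observation off its epipolar line breaks the constraint.
residual_bad = epipolar_residual(E, x1, [x2[0], x2[1] + 0.05, 1.0])
print(residual, residual_bad)
```

The residual is zero (up to floating-point error) for a true correspondence and nonzero otherwise, which is what makes it usable as a cross-view consistency signal.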

DriveScape: Towards High-Resolution Controllable Multi-View Driving Video Generation

Sep 11, 2024

Abstract:Recent advancements in generative models have provided promising solutions for synthesizing realistic driving videos, which are crucial for training autonomous driving perception models. However, existing approaches often struggle with multi-view video generation due to the challenges of integrating 3D information while maintaining spatial-temporal consistency and effectively learning from a unified model. In this paper, we propose an end-to-end framework named DriveScape for multi-view, 3D condition-guided video generation. DriveScape not only streamlines the process by integrating camera data to ensure comprehensive spatial-temporal coverage, but also introduces a Bi-Directional Modulated Transformer module to effectively align 3D road structural information. As a result, our approach enables precise control over video generation, significantly enhancing realism and providing a robust solution for generating multi-view driving videos. Our framework achieves state-of-the-art results on the nuScenes dataset, demonstrating impressive generative quality metrics with an FID score of 8.34 and an FVD score of 76.39, as well as superior performance across various perception tasks. This paves the way for more accurate environmental simulations in autonomous driving. Our project homepage: https://metadrivescape.github.io/papers_project/drivescapev1/index.html

SGC-VQGAN: Towards Complex Scene Representation via Semantic Guided Clustering Codebook

Sep 09, 2024

Abstract:Vector quantization (VQ) is a method for deterministically learning features through discrete codebook representations. Recent works have utilized visual tokenizers to discretize visual regions for self-supervised representation learning. However, a notable limitation of these tokenizers is their lack of semantics, as they are derived solely from the pretext task of reconstructing raw image pixels in an auto-encoder paradigm. Additionally, issues like imbalanced codebook distribution and codebook collapse can adversely impact performance due to inefficient codebook utilization. To address these challenges, we introduce SGC-VQGAN, which uses a Semantic Online Clustering method to enhance token semantics through Consistent Semantic Learning. Utilizing inference results from a segmentation model, our approach constructs a temporospatially consistent semantic codebook, addressing issues of codebook collapse and imbalanced token semantics. Our proposed Pyramid Feature Learning pipeline integrates multi-level features to capture both image details and semantics simultaneously. As a result, SGC-VQGAN achieves SOTA performance in both reconstruction quality and various downstream tasks. Its simplicity, requiring no additional parameter learning, enables its direct application in downstream tasks, presenting significant potential.
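
To make the codebook terminology above concrete, the sketch below shows a plain nearest-neighbour vector quantization lookup and a simple codebook utilization measure. It is a generic illustration under stated assumptions, not SGC-VQGAN's semantic clustering method; the function names, the toy 2-D codebook, and the feature values are all hypothetical.

```python
def quantize(vectors, codebook):
    """Map each vector to the index of its nearest codebook entry
    (squared Euclidean distance)."""
    indices = []
    for v in vectors:
        dists = [sum((a - b) ** 2 for a, b in zip(v, c)) for c in codebook]
        indices.append(dists.index(min(dists)))
    return indices


def codebook_utilization(indices, codebook_size):
    """Fraction of codebook entries selected at least once."""
    return len(set(indices)) / codebook_size


# Toy codebook with four entries; the features cluster near the first two.
codebook = [[0.0, 0.0], [1.0, 1.0], [5.0, 5.0], [-4.0, 3.0]]
features = [[0.1, -0.2], [0.9, 1.2], [0.2, 0.1], [1.1, 0.8]]

indices = quantize(features, codebook)
utilization = codebook_utilization(indices, len(codebook))
print(indices, utilization)
```

Here only two of the four entries are ever selected. Persistently low utilization of this kind is the symptom usually described as codebook collapse or an imbalanced codebook distribution.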
