Jiang Hua

GIG: Graph Data Imputation With Graph Differential Dependencies

Oct 21, 2024

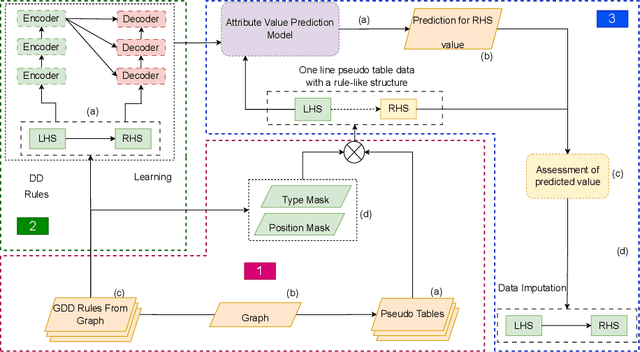

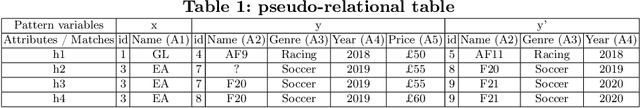

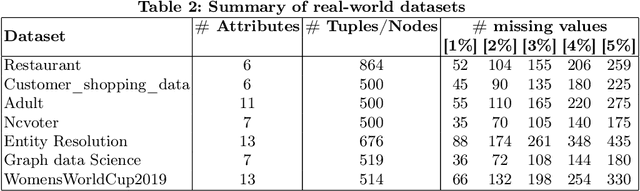

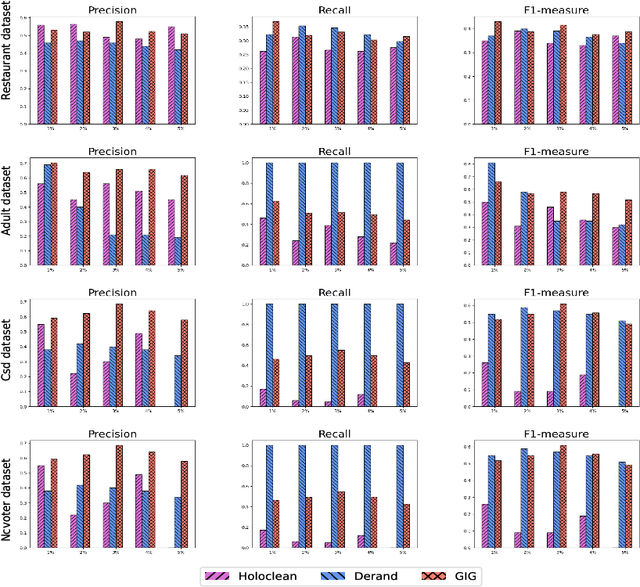

Abstract: Data imputation addresses the challenge of filling in missing values in database instances while ensuring consistency with the overall semantics of the dataset. Several heuristics that rely on statistical methods and ad-hoc rules have been proposed, but they do not generalise well and often lack data context. Consequently, they also lack explainability. Existing techniques also focus mostly on relational data, making them unsuitable for wider application contexts such as graph data. In this paper, we propose a graph data imputation approach called GIG, which relies on graph differential dependencies (GDDs). GIG learns the GDDs from a given knowledge graph and uses these rules to train a transformer model, which then predicts the values of missing data within the graph. By leveraging GDDs, GIG incorporates semantic knowledge into the data imputation process, making it more reliable and explainable. Experimental results on seven real-world datasets highlight GIG's effectiveness compared to existing state-of-the-art approaches.
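To make the idea of a distance-based dependency concrete, the following is a minimal sketch of how a single GDD-like rule might constrain a missing value. It is not the GIG pipeline: the abstract describes learning GDDs and training a transformer on them, whereas this toy copies a value directly from a matching node. The rule, the attribute names, the `distance` function, and the use of a node label in place of a full graph pattern are all assumptions made for illustration.

```python
from typing import Any, Dict, List, Optional

Node = Dict[str, Any]


def distance(a: Any, b: Any) -> float:
    """Toy distance: absolute difference for numbers, 0/1 mismatch otherwise."""
    if isinstance(a, (int, float)) and isinstance(b, (int, float)):
        return abs(a - b)
    return 0.0 if a == b else 1.0


# Hypothetical rule: two "person" nodes with the same "employer" and a
# "birth_year" at most 1 apart are expected to agree on "city".
# A real GDD is scoped by a graph pattern; a node label stands in for it here.
RULE = {
    "label": "person",
    "lhs": {"employer": 0.0, "birth_year": 1.0},  # attribute -> max distance
    "rhs": "city",
}


def impute(target: Node, graph: List[Node], rule: dict) -> Optional[Any]:
    """Borrow rule['rhs'] from the first node that satisfies the rule's left side."""
    if target.get("label") != rule["label"]:
        return None
    for other in graph:
        if other is target or other.get("label") != rule["label"]:
            continue
        if other.get(rule["rhs"]) is None:
            continue  # the donor node must actually have the value
        if all(
            target.get(attr) is not None
            and other.get(attr) is not None
            and distance(target[attr], other[attr]) <= limit
            for attr, limit in rule["lhs"].items()
        ):
            return other[rule["rhs"]]
    return None


nodes = [
    {"label": "person", "employer": "Acme", "birth_year": 1990, "city": "Perth"},
    {"label": "person", "employer": "Globex", "birth_year": 1990, "city": "Oslo"},
    {"label": "person", "employer": "Acme", "birth_year": 1991, "city": None},
]
imputed_city = impute(nodes[2], nodes, RULE)
print(imputed_city)
```

Because the suggested value comes from a named rule and a specific matching node, the reason for the imputation can be traced, which is the kind of explainability the abstract attributes to rule-based semantics.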

OTFDM: A Novel 2D Modulation Waveform Modeling Dot-product Doubly-selective Channel

Apr 04, 2023

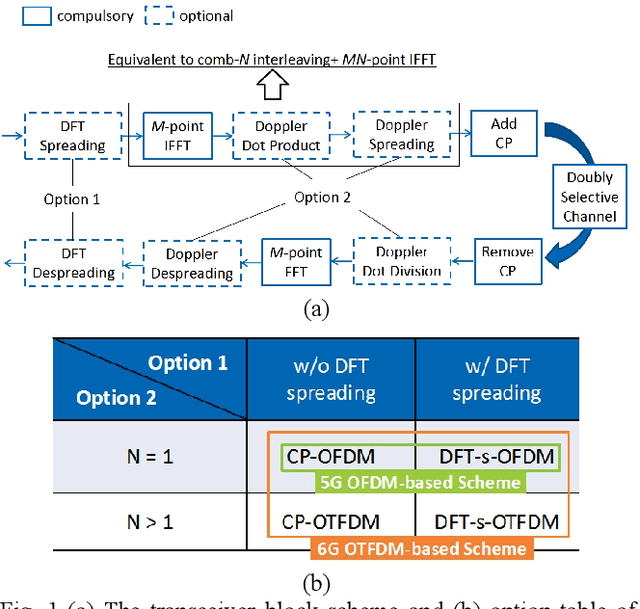

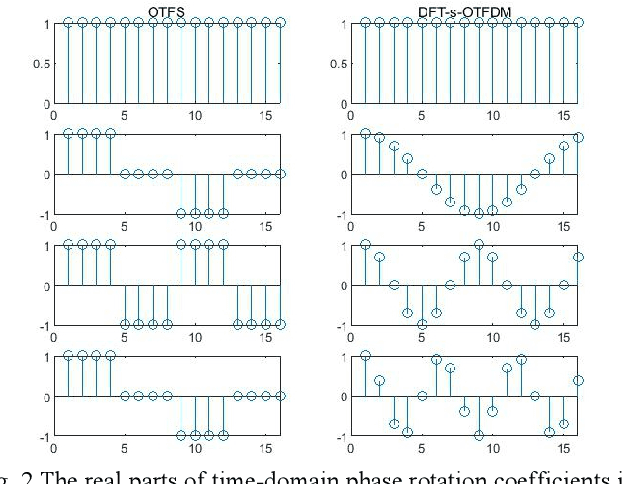

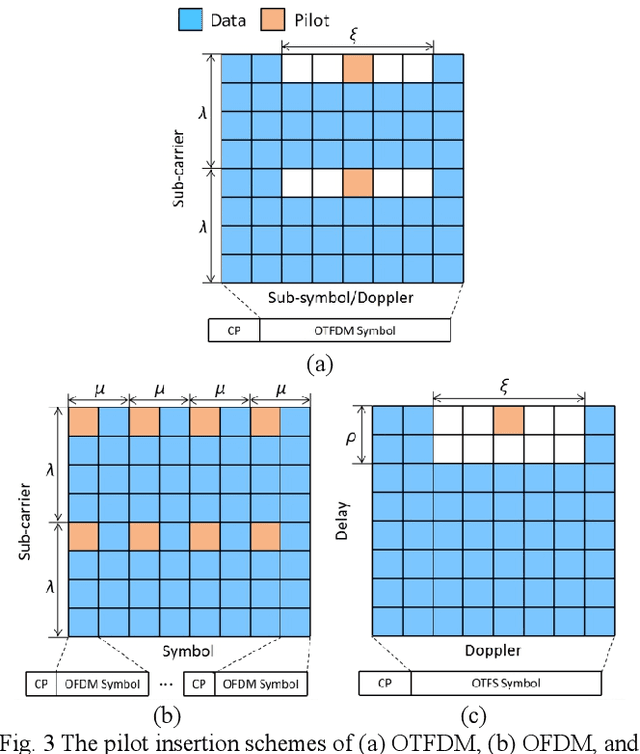

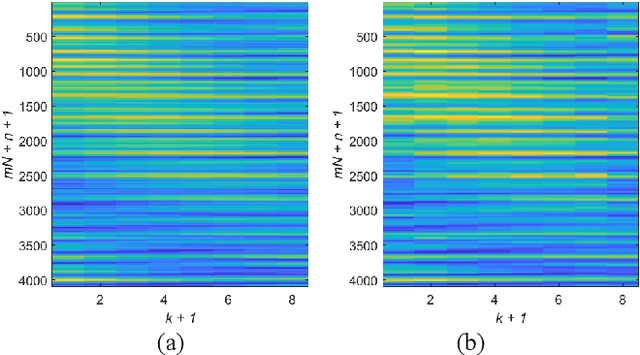

Abstract: Recently, the two-dimensional (2D) modulation waveform orthogonal time-frequency-space (OTFS) has become a popular 6G candidate to replace the existing orthogonal frequency division multiplexing (OFDM). Extensive research on OTFS has made both its advantages and its limitations increasingly clear. The limitations are not easy to overcome because they stem from the on-grid 2D convolution channel model of OTFS. Instead of solving these inherent challenges, this paper proposes a novel 2D modulation waveform named orthogonal time-frequency division multiplexing (OTFDM). OTFDM uses a 2D dot-product channel model to cope with double selectivity. Compared with OTFS, OTFDM supports grid-free channel delay and Doppler and gains a simple and efficient 2D equalization. The concise dot-division equalization can be easily combined with MIMO. Simulation results show that OTFDM can withstand high mobility and greatly outperforms OFDM in doubly-selective channels.
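The appeal of a dot-product channel model can be seen in a small sketch: if each received grid element is the transmitted element multiplied by a single complex gain, equalization reduces to an element-wise division. The example below is illustrative only and is not the OTFDM transceiver from the paper. The grid size, the QPSK symbols, the randomly drawn gains, the absence of noise, and perfect knowledge of the channel are all assumptions.

```python
import cmath
import random

random.seed(0)

ROWS, COLS = 4, 4  # hypothetical 2D grid size
QPSK = [1 + 1j, 1 - 1j, -1 + 1j, -1 - 1j]

# Transmitted 2D grid of symbols.
tx = [[random.choice(QPSK) for _ in range(COLS)] for _ in range(ROWS)]

# Hypothetical per-element channel gains; magnitudes are kept away from zero
# so that the division below is well conditioned.
h = [
    [
        cmath.rect(random.uniform(0.5, 1.5), random.uniform(-cmath.pi, cmath.pi))
        for _ in range(COLS)
    ]
    for _ in range(ROWS)
]

# Dot-product channel: every received element depends on one transmitted
# element only (element-wise product, no 2D convolution). Noise is omitted.
rx = [[h[r][c] * tx[r][c] for c in range(COLS)] for r in range(ROWS)]

# Dot-division equalization: divide each received element by its gain.
eq = [[rx[r][c] / h[r][c] for c in range(COLS)] for r in range(ROWS)]

max_error = max(
    abs(eq[r][c] - tx[r][c]) for r in range(ROWS) for c in range(COLS)
)
print(max_error < 1e-9)
```

Under a 2D convolution model, by contrast, each received element mixes several transmitted elements, so recovering the grid requires a joint deconvolution rather than one division per element.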
