Vladimir Kostic

kooplearn: A Scikit-Learn Compatible Library of Algorithms for Evolution Operator Learning

Dec 24, 2025

Abstract: kooplearn is a machine-learning library that implements linear, kernel, and deep-learning estimators of dynamical operators and their spectral decompositions. kooplearn can model both discrete-time evolution operators (Koopman/Transfer) and continuous-time infinitesimal generators. By learning these operators, users can analyze dynamical systems via spectral methods, derive data-driven reduced-order models, and forecast future states and observables. kooplearn's interface is compliant with the scikit-learn API, facilitating its integration into existing machine learning and data science workflows. Additionally, kooplearn includes curated benchmark datasets to support experimentation, reproducibility, and the fair comparison of learning algorithms. The software is available at https://github.com/Machine-Learning-Dynamical-Systems/kooplearn.

Toward Scalable and Valid Conditional Independence Testing with Spectral Representations

Dec 22, 2025

Abstract: Conditional independence (CI) is central to causal inference, feature selection, and graphical modeling, yet it is untestable in many settings without additional assumptions. Existing CI tests often rely on restrictive structural conditions, limiting their validity on real-world data. Kernel methods using the partial covariance operator offer a more principled approach but suffer from limited adaptivity, slow convergence, and poor scalability. In this work, we explore whether representation learning can help address these limitations. Specifically, we focus on representations derived from the singular value decomposition of the partial covariance operator and use them to construct a simple test statistic, reminiscent of the Hilbert-Schmidt Independence Criterion (HSIC). We also introduce a practical bi-level contrastive algorithm to learn these representations. Our theory links representation learning error to test performance and establishes asymptotic validity and power guarantees. Preliminary experiments suggest that this approach offers a practical and statistically grounded path toward scalable CI testing, bridging kernel-based theory with modern representation learning.
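For readers unfamiliar with HSIC, which the abstract above uses as a point of comparison, the following is a minimal NumPy sketch of the standard biased empirical HSIC with Gaussian kernels. It tests plain (unconditional) dependence only and is not the paper's conditional independence statistic; the function names `gaussian_gram` and `hsic_biased`, the bandwidth, and the synthetic data are assumptions made for illustration.

```python
import numpy as np


def gaussian_gram(x, bandwidth=1.0):
    """Gaussian-kernel Gram matrix for samples stored along the first axis of x."""
    x = np.asarray(x, dtype=float).reshape(len(x), -1)
    sq_norms = np.sum(x**2, axis=1)
    sq_dists = sq_norms[:, None] + sq_norms[None, :] - 2.0 * x @ x.T
    # Clip tiny negative values caused by floating-point cancellation.
    return np.exp(-np.maximum(sq_dists, 0.0) / (2.0 * bandwidth**2))


def hsic_biased(x, y, bandwidth=1.0):
    """Biased empirical HSIC: trace(K H L H) / n^2, with H the centering matrix."""
    n = len(x)
    K = gaussian_gram(x, bandwidth)
    L = gaussian_gram(y, bandwidth)
    H = np.eye(n) - np.ones((n, n)) / n
    return np.trace(K @ H @ L @ H) / n**2


# Illustrative data (an assumption): one dependent pair, one independent pair.
rng = np.random.default_rng(0)
x = rng.normal(size=300)
y_dep = x + 0.1 * rng.normal(size=300)
y_ind = rng.normal(size=300)

hsic_dep = hsic_biased(x, y_dep)
hsic_ind = hsic_biased(x, y_ind)
print(f"HSIC (dependent):   {hsic_dep:.5f}")
print(f"HSIC (independent): {hsic_ind:.5f}")
```

The statistic is larger for the dependent pair than for the independent one; turning it into a test would additionally require a null distribution, for example via permutations.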

From Biased to Unbiased Dynamics: An Infinitesimal Generator Approach

Jun 13, 2024

Abstract: We investigate learning the eigenfunctions of evolution operators for time-reversal invariant stochastic processes, a prime example being the Langevin equation used in molecular dynamics. Many physical or chemical processes described by this equation involve transitions between metastable states separated by high potential barriers that can hardly be crossed during a simulation. To overcome this bottleneck, data are collected via biased simulations that explore the state space more rapidly. We propose a framework for learning from biased simulations rooted in the infinitesimal generator of the process and the associated resolvent operator. We contrast our approach to more common ones based on the transfer operator, showing that it can provably learn the spectral properties of the unbiased system from biased data. In experiments, we highlight the advantages of our method over transfer operator approaches and recent developments based on generator learning, demonstrating its effectiveness in estimating eigenfunctions and eigenvalues. Importantly, we show that even with datasets containing only a few relevant transitions due to sub-optimal biasing, our approach recovers relevant information about the transition mechanism.

Morphological Symmetries in Robotics

Feb 23, 2024

Abstract: We present a comprehensive framework for studying and leveraging morphological symmetries in robotic systems. These are intrinsic properties of the robot's morphology, frequently observed in animal biology and robotics, which stem from the replication of kinematic structures and the symmetrical distribution of mass. We illustrate how these symmetries extend to the robot's state space and both proprioceptive and exteroceptive sensor measurements, resulting in the equivariance of the robot's equations of motion and optimal control policies. Thus, we recognize morphological symmetries as a relevant and previously unexplored physics-informed geometric prior, with significant implications for both data-driven and analytical methods used in modeling, control, estimation and design in robotics. For data-driven methods, we demonstrate that morphological symmetries can enhance the sample efficiency and generalization of machine learning models through data augmentation, or by applying equivariant/invariant constraints on the model's architecture. In the context of analytical methods, we employ abstract harmonic analysis to decompose the robot's dynamics into a superposition of lower-dimensional, independent dynamics. We substantiate our claims with both synthetic and real-world experiments conducted on bipedal and quadrupedal robots. Lastly, we introduce the repository MorphoSymm to facilitate the practical use of the theory and applications outlined in this work.

A randomized algorithm to solve reduced rank operator regression

Dec 28, 2023

Abstract: We present and analyze an algorithm designed for addressing vector-valued regression problems involving possibly infinite-dimensional input and output spaces. The algorithm is a randomized adaptation of reduced rank regression, a technique to optimally learn a low-rank vector-valued function (i.e. an operator) between sampled data via regularized empirical risk minimization with rank constraints. We propose Gaussian sketching techniques both for the primal and dual optimization objectives, yielding Randomized Reduced Rank Regression (R4) estimators that are efficient and accurate. For each of our R4 algorithms we prove that the resulting regularized empirical risk is, in expectation w.r.t. randomness of a sketch, arbitrarily close to the optimal value when hyper-parameters are properly tuned. Numerical experiments illustrate the tightness of our bounds and show advantages in two distinct scenarios: (i) solving a vector-valued regression problem using synthetic and large-scale neuroscience datasets, and (ii) regressing the Koopman operator of a nonlinear stochastic dynamical system.

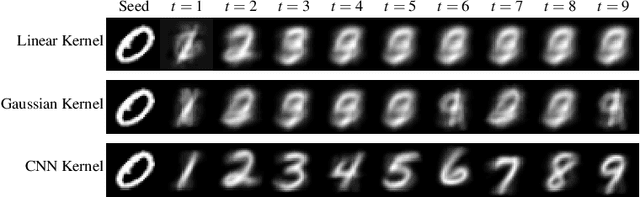

Consistent Long-Term Forecasting of Ergodic Dynamical Systems

Dec 20, 2023

Abstract: We study the evolution of distributions under the action of an ergodic dynamical system, which may be stochastic in nature. By employing tools from Koopman and transfer operator theory one can evolve any initial distribution of the state forward in time, and we investigate how estimators of these operators perform on long-term forecasting. Motivated by the observation that standard estimators may fail at this task, we introduce a learning paradigm that neatly combines classical techniques of eigenvalue deflation from operator theory and feature centering from statistics. This paradigm applies to any operator estimator based on empirical risk minimization, making such estimators satisfy learning bounds which hold uniformly on the entire trajectory of future distributions, and abide by the conservation of mass for each of the forecasted distributions. Numerical experiments illustrate the advantages of our approach in practice.

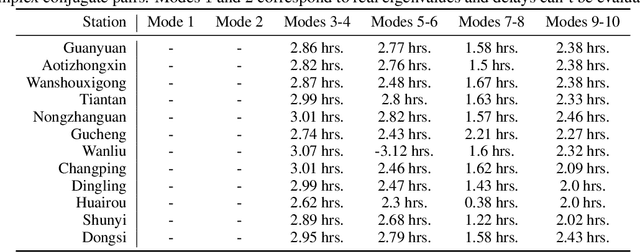

Dynamics Harmonic Analysis of Robotic Systems: Application in Data-Driven Koopman Modelling

Dec 12, 2023

Abstract: We introduce the use of harmonic analysis to decompose the state space of symmetric robotic systems into orthogonal isotypic subspaces. These are lower-dimensional spaces that capture distinct, symmetric, and synergistic motions. For linear dynamics, we characterize how this decomposition leads to a subdivision of the dynamics into independent linear systems on each subspace, a property we term dynamics harmonic analysis (DHA). To exploit this property, we use Koopman operator theory to propose an equivariant deep-learning architecture that leverages the properties of DHA to learn a global linear model of system dynamics. Our architecture, validated on synthetic systems and the dynamics of locomotion of a quadrupedal robot, demonstrates enhanced generalization, sample efficiency, and interpretability, with fewer trainable parameters and lower computational cost.

Koopman Operator Learning: Sharp Spectral Rates and Spurious Eigenvalues

Feb 07, 2023

Abstract: Non-linear dynamical systems can be handily described by the associated Koopman operator, whose action evolves every observable of the system forward in time. Learning the Koopman operator from data is enabled by a number of algorithms. In this work we present nonasymptotic learning bounds for the Koopman eigenvalues and eigenfunctions estimated by two popular algorithms: Extended Dynamic Mode Decomposition (EDMD) and Reduced Rank Regression (RRR). We focus on time-reversal-invariant Markov chains, implying that the Koopman operator is self-adjoint. This includes important examples of stochastic dynamical systems, notably Langevin dynamics. Our spectral learning bounds are driven by the simultaneous control of the operator norm risk of the estimators and a metric distortion associated to the corresponding eigenfunctions. Our analysis indicates that both algorithms have similar variance, but EDMD suffers from a larger bias which might be detrimental to its learning rate. We further argue that a large metric distortion may lead to spurious eigenvalues, a phenomenon which has been empirically observed, and note that metric distortion can be estimated from data. Numerical experiments complement the theoretical findings.
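As a rough illustration of the EDMD estimator named in the abstract above, the sketch below fits a Koopman matrix by least squares on a dictionary of monomials and reads off its eigenvalues. The toy system is a deterministic linear map rather than the reversible stochastic dynamics studied in the paper, and the helper names `monomials_deg2` and `edmd` are assumptions made for this example.

```python
import numpy as np


def monomials_deg2(Z):
    """Dictionary of monomials of degree 1 and 2 in two state variables."""
    z1, z2 = Z[:, 0], Z[:, 1]
    return np.column_stack([z1, z2, z1**2, z1 * z2, z2**2])


def edmd(X, Y, dictionary):
    """Least-squares Koopman matrix K such that dictionary(Y) ~= dictionary(X) @ K."""
    PX, PY = dictionary(X), dictionary(Y)
    K, *_ = np.linalg.lstsq(PX, PY, rcond=None)
    return K


# Toy dynamics (an assumption): x_{t+1} = A x_t with eigenvalues 0.9 and 0.5.
A = np.array([[0.9, 0.2],
              [0.0, 0.5]])
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 2))  # sampled states
Y = X @ A.T                    # their one-step images

K = edmd(X, Y, monomials_deg2)
eigvals = np.sort(np.linalg.eigvals(K).real)
print(eigvals)
```

Because the span of these monomials is invariant under a linear map, the estimated eigenvalues are the eigenvalues of `A` together with their pairwise products. With a dictionary that is not invariant, or with noisy data, the estimate carries the bias and spurious-eigenvalue risks that the abstract discusses.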

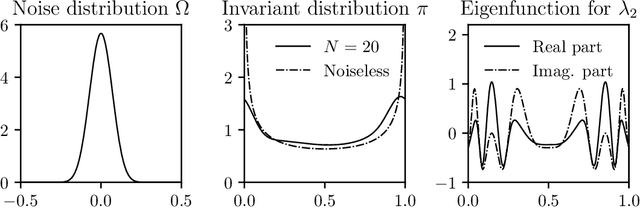

Learning Dynamical Systems via Koopman Operator Regression in Reproducing Kernel Hilbert Spaces

May 27, 2022

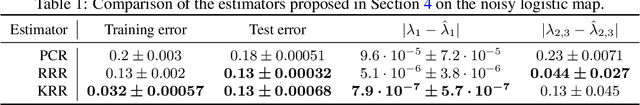

Abstract: We study a class of dynamical systems modelled as Markov chains that admit an invariant distribution via the corresponding transfer, or Koopman, operator. While data-driven algorithms to reconstruct such operators are well known, their relationship with statistical learning is largely unexplored. We formalize a framework to learn the Koopman operator from finite data trajectories of the dynamical system. We consider the restriction of this operator to a reproducing kernel Hilbert space and introduce a notion of risk, from which different estimators naturally arise. We link the risk with the estimation of the spectral decomposition of the Koopman operator. These observations motivate a reduced-rank operator regression (RRR) estimator. We derive learning bounds for the proposed estimator, holding both in i.i.d. and non-i.i.d. settings, the latter in terms of mixing coefficients. Our results suggest RRR might be beneficial over other widely used estimators, as confirmed in numerical experiments both for forecasting and mode decomposition.
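To make the rank constraint behind RRR concrete, here is a minimal sketch of classical finite-dimensional reduced rank regression: ordinary least squares followed by projection onto the leading right singular vectors of the fitted values. It is unregularized and works with explicit feature matrices, so it is only an analogue of the kernel-based operator estimator described in the abstract above; the function name and the synthetic data are assumptions.

```python
import numpy as np


def reduced_rank_regression(X, Y, rank):
    """Minimise ||Y - X B||_F^2 subject to rank(B) <= rank (no regularisation)."""
    # Unconstrained least-squares solution.
    B_ols, *_ = np.linalg.lstsq(X, Y, rcond=None)
    # Leading right singular vectors of the fitted values X @ B_ols.
    _, _, Vt = np.linalg.svd(X @ B_ols, full_matrices=False)
    V_r = Vt[:rank].T
    # Project the OLS coefficients onto the leading output directions.
    return B_ols @ V_r @ V_r.T


# Synthetic data (an assumption): a rank-2 coefficient matrix plus small noise.
rng = np.random.default_rng(0)
n, d_in, d_out, true_rank = 500, 6, 4, 2
B_true = rng.normal(size=(d_in, true_rank)) @ rng.normal(size=(true_rank, d_out))
X = rng.normal(size=(n, d_in))
Y = X @ B_true + 0.01 * rng.normal(size=(n, d_out))

B_hat = reduced_rank_regression(X, Y, rank=true_rank)
print(np.linalg.matrix_rank(B_hat), np.linalg.norm(B_hat - B_true))
```

The projection step follows from the Eckart-Young theorem applied to the fitted values, which is why the truncated estimator is optimal among rank-constrained least-squares solutions in this unregularized setting.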

Convergence of Batch Greenkhorn for Regularized Multimarginal Optimal Transport

Dec 03, 2021

Abstract: In this work we propose a batch version of the Greenkhorn algorithm for multimarginal regularized optimal transport problems. Our framework is general enough to cover, as particular cases, existing algorithms such as the Sinkhorn and Greenkhorn algorithms for the bi-marginal setting, and (greedy) MultiSinkhorn for multimarginal optimal transport. We provide a complete convergence analysis, which is based on the properties of the iterative Bregman projections (IBP) method with greedy control. A global linear rate of convergence and an explicit bound on the iteration complexity are obtained. When specialized to the above-mentioned algorithms, our results give new insights and/or improve existing ones.
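The bi-marginal Sinkhorn algorithm, mentioned in the abstract above as a particular case, can be sketched in a few lines of NumPy. This is the plain alternating-scaling iteration for entropic optimal transport, not the batch Greenkhorn method proposed in the paper (Greenkhorn updates only a greedily chosen row or column per step); the function name, the regularization strength `eps`, the iteration count, and the toy point clouds are assumptions.

```python
import numpy as np


def sinkhorn(a, b, cost, eps=0.5, n_iters=200):
    """Entropic OT plan between histograms a and b via alternating scalings."""
    K = np.exp(-cost / eps)  # Gibbs kernel
    u = np.ones_like(a)
    v = np.ones_like(b)
    for _ in range(n_iters):
        u = a / (K @ v)      # rescale to match the row marginal a
        v = b / (K.T @ u)    # rescale to match the column marginal b
    return u[:, None] * K * v[None, :]


# Toy problem (an assumption): uniform weights on two 1-D point clouds.
rng = np.random.default_rng(0)
x = rng.uniform(size=(5, 1))
y = rng.uniform(size=(7, 1))
cost = (x - y.T) ** 2        # squared-distance cost matrix, shape (5, 7)
a = np.full(5, 1 / 5)
b = np.full(7, 1 / 7)

plan = sinkhorn(a, b, cost)
print(plan.sum(axis=1), plan.sum(axis=0))
```

Each half-step is a Bregman projection onto one marginal constraint, which is the IBP viewpoint the abstract refers to. For small `eps` the kernel entries underflow, and a log-domain implementation would be the safer choice.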
