Tim Taubner

AirSim Drone Racing Lab

Mar 12, 2020

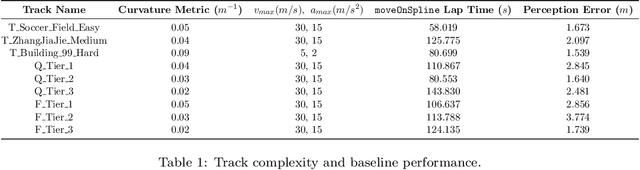

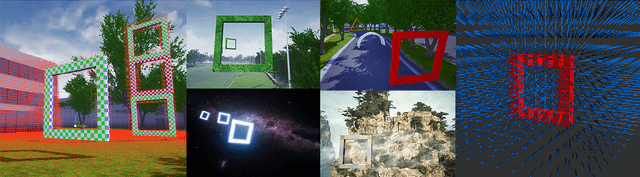

Abstract: Autonomous drone racing is a challenging research problem at the intersection of computer vision, planning, state estimation, and control. We introduce AirSim Drone Racing Lab, a simulation framework for enabling fast prototyping of algorithms for autonomy and enabling machine learning research in this domain, with the goal of reducing the time, money, and risks associated with field robotics. Our framework enables generation of racing tracks in multiple photo-realistic environments and orchestration of drone races. It comes with a suite of gate assets and supports multiple sensor modalities (monocular, depth, neuromorphic events, optical flow), different camera models, and benchmarking of planning, control, computer vision, and learning-based algorithms. We used our framework to host a simulation-based drone racing competition at NeurIPS 2019. The competition binaries are available at our GitHub repository.

Predicting Unobserved Space For Planning via Depth Map Augmentation

Nov 13, 2019

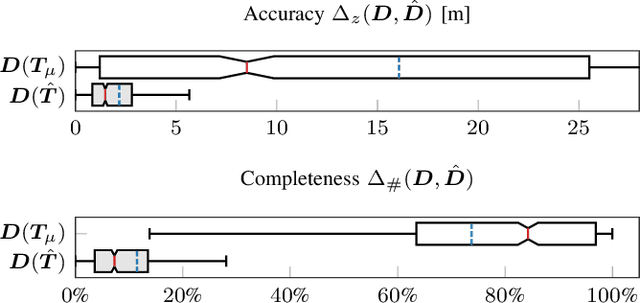

Abstract: Safe and efficient path planning is crucial for autonomous mobile robots. A prerequisite for path planning is to have a comprehensive understanding of the 3D structure of the robot's environment. On MAVs this is commonly achieved using low-cost sensors, such as stereo or RGB-D cameras. These sensors may fail to provide depth measurements in textureless or IR-absorbing areas and have limited effective range. In path planning, this results in inefficient trajectories or failure to recognize a feasible path to the goal, hence significantly impairing the robot's mobility. Recent advances in deep learning enable us to exploit prior experience about the shape of the world and hence to infer complete depth maps from color images and additional sparse depth measurements. In this work, we present an augmented planning system and investigate the effects of employing state-of-the-art depth completion techniques, specifically trained to augment sparse depth maps originating from RGB-D sensors, semi-dense methods, and stereo matchers. We extensively evaluate our approach in online path planning experiments based on simulated data, as well as global path planning experiments based on real-world MAV data. We show that our augmented system, provided with only sparse depth perception, can reach performance on par with ground-truth depth input in simulated online planning experiments. On real-world MAV data the augmented system demonstrates superior performance compared to a planner based on very dense RGB-D depth maps.

Flexible Stereo: Constrained, Non-rigid, Wide-baseline Stereo Vision for Fixed-wing Aerial Platforms

Feb 26, 2018

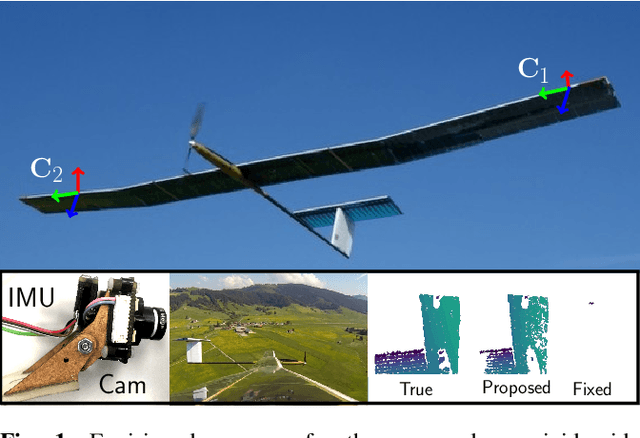

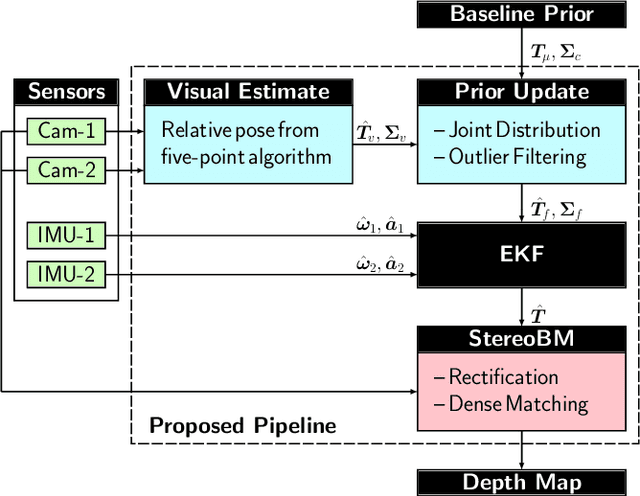

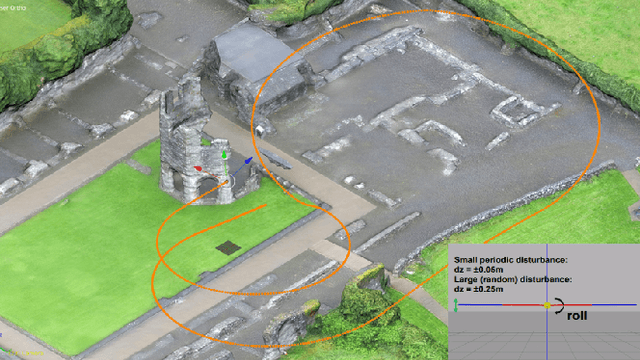

Abstract: This paper proposes a computationally efficient method to estimate the time-varying relative pose between two visual-inertial sensor rigs mounted on the flexible wings of a fixed-wing unmanned aerial vehicle (UAV). The estimated relative poses are used to generate highly accurate depth maps in real time and can be employed for obstacle avoidance in low-altitude flights or landing maneuvers. The approach is structured as follows: Initially, a wing model is identified by fitting a probability density function to measured deviations from the nominal relative baseline transformation. At run-time, the prior knowledge about the wing model is fused in an Extended Kalman filter (EKF) together with relative pose measurements obtained from solving a relative perspective N-point problem (PNP), and the linear accelerations and angular velocities measured by the two inertial measurement units (IMUs), which are rigidly attached to the cameras. Results obtained from extensive synthetic experiments demonstrate that our proposed framework is able to estimate highly accurate baseline transformations and depth maps.
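The two-stage idea in this abstract (fit a density to observed baseline deviations offline, then fuse that prior with a measurement at run-time) can be illustrated with a deliberately simplified sketch. It assumes a single scalar deviation, a Gaussian density, and a linear measurement, so the update reduces to the textbook scalar Kalman step; the paper's EKF over full relative poses and IMU data is not reproduced here. The function names and all numeric values are hypothetical and chosen only for illustration.

```python
from statistics import fmean, variance


def fit_gaussian_prior(deviations):
    """Fit a Gaussian (mean, variance) to measured baseline deviations.

    Assumption: a scalar Gaussian stands in for the wing-model density.
    """
    return fmean(deviations), variance(deviations)


def kalman_update(x, p, z, r):
    """Scalar Kalman measurement update.

    x, p: prior mean and variance; z, r: measurement and its variance.
    Returns the posterior mean and variance.
    """
    k = p / (p + r)            # Kalman gain
    x_new = x + k * (z - x)    # correct the prior with the innovation
    p_new = (1.0 - k) * p      # posterior variance shrinks
    return x_new, p_new


# Made-up deviations (metres) from the nominal baseline, for illustration only.
deviations = [-0.02, 0.01, 0.03, -0.01, 0.00, -0.01]
prior_mean, prior_var = fit_gaussian_prior(deviations)

# Hypothetical relative-pose measurement of the deviation and its variance.
z, r = 0.04, 0.0004
post_mean, post_var = kalman_update(prior_mean, prior_var, z, r)
```

In this toy setting the posterior mean lies between the prior mean and the measurement, and the posterior variance is smaller than both the prior and the measurement variance, which is the benefit of fusing the wing-model prior with each new measurement.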
