Timo Hinzmann

Multi-Resolution Elevation Mapping and Safe Landing Site Detection with Applications to Planetary Rotorcraft

Nov 11, 2021

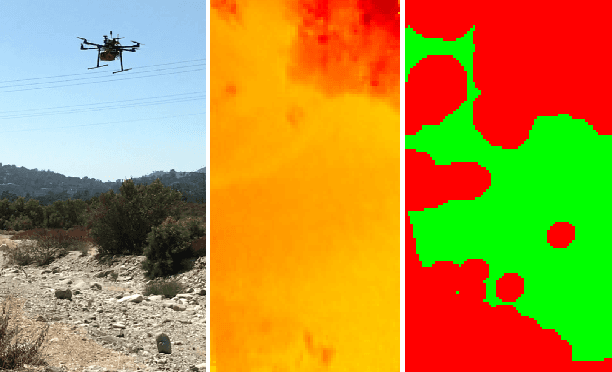

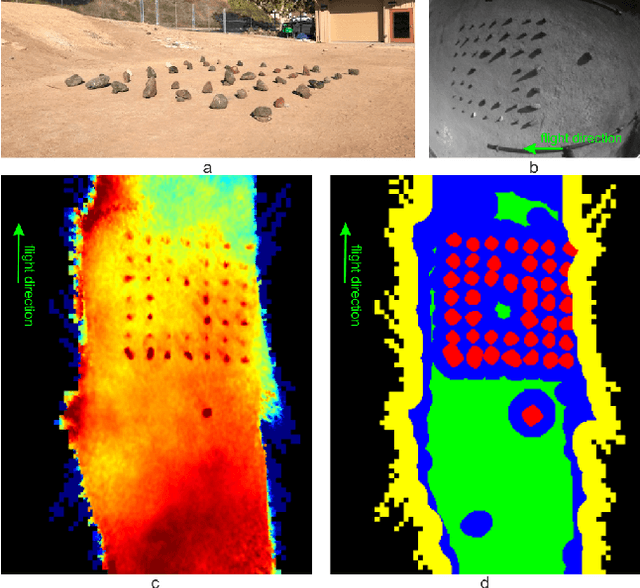

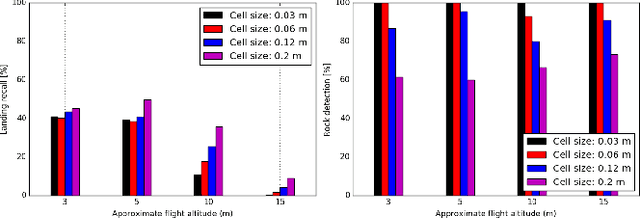

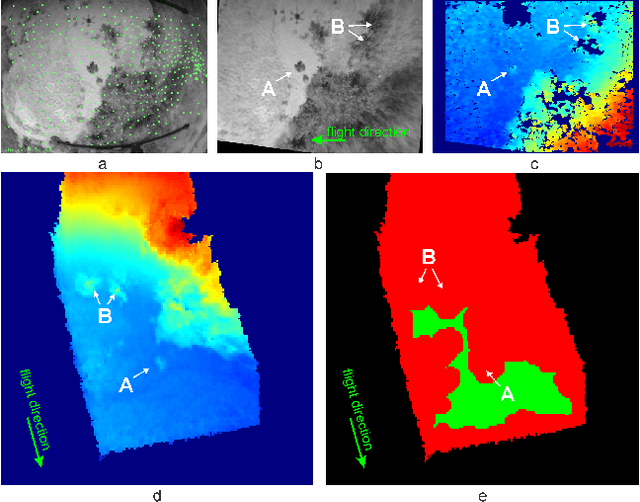

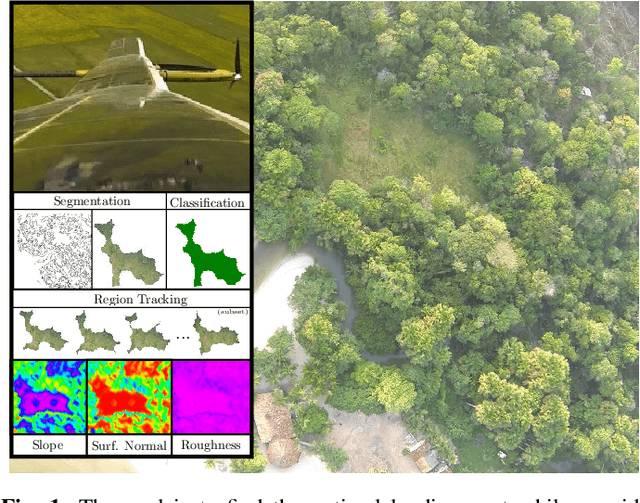

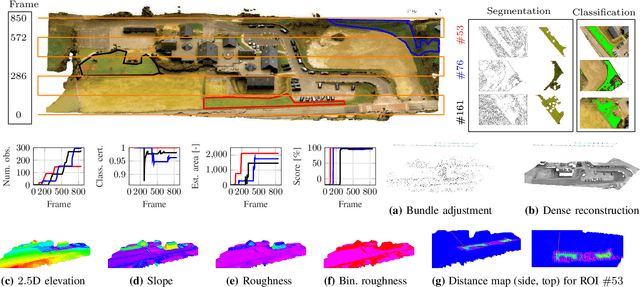

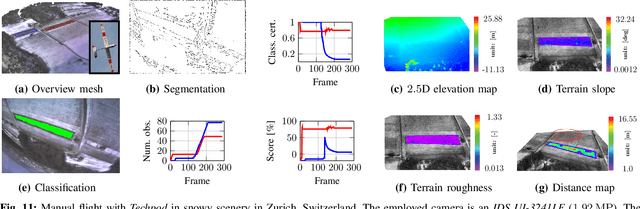

Abstract: In this paper, we propose a resource-efficient approach to provide an autonomous UAV with an on-board perception method to detect safe, hazard-free landing sites during flights over complex 3D terrain. We aggregate 3D measurements acquired from a sequence of monocular images by a Structure-from-Motion approach into a local, robot-centric, multi-resolution elevation map of the overflown terrain, which fuses depth measurements according to their lateral surface resolution (pixel-footprint) in a probabilistic framework based on the concept of dynamic Level of Detail. Map aggregation only requires depth maps and the associated poses, which are obtained from an on-board Visual Odometry algorithm. An efficient landing site detection method then exploits the features of the underlying multi-resolution map to detect safe landing sites based on slope, roughness, and quality of the reconstructed terrain surface. The performance of the mapping and landing site detection modules is analyzed independently and jointly in simulated and real-world experiments to establish the efficacy of the proposed approach.
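To make footprint-driven fusion concrete, here is a minimal sketch. It assumes a quadtree-like map whose level `l` has cell size `finest_cell_m * 2**l`, a pinhole camera, and a per-cell Gaussian height estimate updated by inverse-variance (1D Kalman) fusion. The names `pixel_footprint`, `select_level` and `HeightCell` are hypothetical, and the paper's dynamic Level-of-Detail framework is not reproduced here.

```python
import math


def pixel_footprint(depth_m, focal_px):
    """Approximate lateral ground size (m) covered by one pixel at this depth (pinhole model)."""
    return depth_m / focal_px


def select_level(footprint_m, finest_cell_m, num_levels):
    """Pick the finest level whose cell size (finest_cell_m * 2**level) is at least the footprint."""
    if footprint_m <= finest_cell_m:
        return 0
    # Small tolerance so that exact powers of two are not pushed up a level by round-off.
    level = math.ceil(math.log2(footprint_m / finest_cell_m) - 1e-9)
    return min(level, num_levels - 1)


class HeightCell:
    """One elevation-map cell holding a Gaussian height estimate (mean, variance)."""

    def __init__(self):
        self.height = None
        self.var = math.inf

    def fuse(self, z, var):
        """Fuse a height measurement z with variance var (inverse-variance / 1D Kalman update)."""
        if self.height is None:
            self.height, self.var = z, var
            return
        gain = self.var / (self.var + var)
        self.height += gain * (z - self.height)
        self.var *= 1.0 - gain


# Usage: a 100 m depth seen with a 500 px focal length has a 0.2 m footprint -> level 2 (0.2 m cells).
level = select_level(pixel_footprint(100.0, 500.0), 0.05, 5)
cell = HeightCell()
cell.fuse(1.0, 0.04)
cell.fuse(1.2, 0.04)  # equal variances: height ~1.1, variance 0.02
```

Coarse measurements thus land in coarse cells instead of smearing across many fine ones, which is the intuition behind fusing by pixel footprint.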

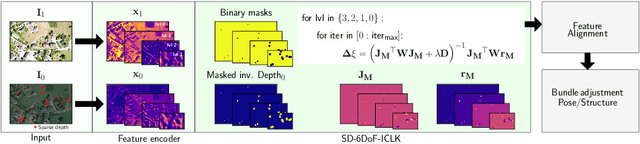

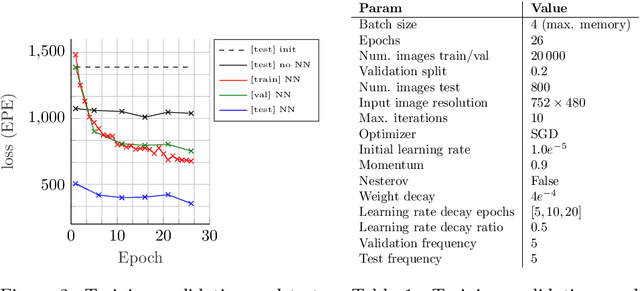

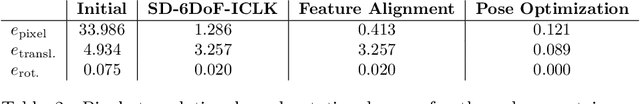

SD-6DoF-ICLK: Sparse and Deep Inverse Compositional Lucas-Kanade Algorithm on SE(3)

Mar 30, 2021

Abstract: This paper introduces SD-6DoF-ICLK, a learning-based Inverse Compositional Lucas-Kanade (ICLK) pipeline that uses sparse depth information to optimize the relative pose that best aligns two images on SE(3). To compute this six Degrees-of-Freedom (DoF) relative transformation, the proposed formulation requires only sparse depth information in one of the images, which is often the only available depth source in visual-inertial odometry or Simultaneous Localization and Mapping (SLAM) pipelines. In an optional subsequent step, the framework further refines feature locations and the relative pose using individual feature alignment and bundle adjustment for pose and structure re-alignment. The resulting sparse point correspondences with subpixel accuracy and refined relative pose can be used for depth map generation, or the image alignment module can be embedded in an odometry or mapping framework. Experiments with rendered imagery show that the forward SD-6DoF-ICLK runs at 145 ms per image pair with a resolution of 752 × 480 pixels each, and vastly outperforms the classical, sparse 6DoF-ICLK algorithm, making it the ideal framework for robust image alignment under severe conditions.
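The defining trick of any inverse compositional Lucas-Kanade variant is that the template's steepest-descent images and Hessian are computed once. Each iteration then only warps the input image and composes the inverse of the update. The sketch below shows the classical, non-learned algorithm for the simplest warp, a 2D translation `W(x; p) = x + p`, using NumPy only. It is not the SE(3), sparse-depth, or learned formulation of SD-6DoF-ICLK, and the helper names `bilinear` and `iclk_translation` are hypothetical.

```python
import numpy as np


def bilinear(img, xs, ys):
    """Sample img at float coordinates (xs = column, ys = row) with bilinear interpolation."""
    h, w = img.shape
    xs = np.clip(xs, 0, w - 1.000001)  # keep x0 + 1 inside the image
    ys = np.clip(ys, 0, h - 1.000001)
    x0, y0 = np.floor(xs).astype(int), np.floor(ys).astype(int)
    ax, ay = xs - x0, ys - y0
    top = (1 - ax) * img[y0, x0] + ax * img[y0, x0 + 1]
    bottom = (1 - ax) * img[y0 + 1, x0] + ax * img[y0 + 1, x0 + 1]
    return (1 - ay) * top + ay * bottom


def iclk_translation(template, image, origin, p0=(0.0, 0.0), iters=50, tol=1e-6):
    """Inverse compositional LK for W(x; p) = x + p.

    Template pixel (r, c) is compared with the image at (origin + (c, r) + p).
    """
    h, w = template.shape
    ys, xs = np.mgrid[0:h, 0:w].astype(float)
    xs += origin[0]
    ys += origin[1]
    # Precomputed once: dW/dp is the identity, so the steepest-descent images are grad(T).
    gy, gx = np.gradient(template)
    sd = np.stack([gx.ravel(), gy.ravel()], axis=1)  # (N, 2)
    H_inv = np.linalg.inv(sd.T @ sd)
    p = np.array(p0, dtype=float)
    for _ in range(iters):
        err = (bilinear(image, xs + p[0], ys + p[1]) - template).ravel()
        dp = H_inv @ (sd.T @ err)
        p -= dp  # inverse composition: W(x; p) o W(x; dp)^-1 = x + p - dp
        if np.linalg.norm(dp) < tol:
            break
    return p


# Usage on a synthetic Gaussian blob: the template is the image region shifted by true_p.
yy, xx = np.mgrid[0:64, 0:64].astype(float)
image = np.exp(-((xx - 32) ** 2 + (yy - 30) ** 2) / (2 * 8.0 ** 2))
true_p = np.array([1.5, -2.0])
origin = (16.0, 16.0)
ty, tx = np.mgrid[0:32, 0:32].astype(float)
template = np.exp(-((tx + origin[0] + true_p[0] - 32) ** 2
                    + (ty + origin[1] + true_p[1] - 30) ** 2) / (2 * 8.0 ** 2))
p_est = iclk_translation(template, image, origin)  # should end up close to true_p
```

Replacing the translation Jacobian with the SE(3) warp Jacobian (which needs depth for the warped points) is what turns this into a 6DoF variant. The learned version replaces raw intensities with network features.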

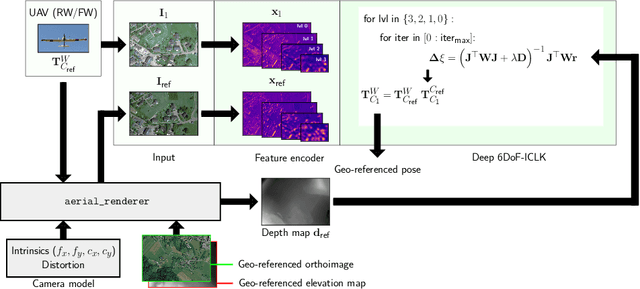

Deep UAV Localization with Reference View Rendering

Aug 11, 2020

Abstract: This paper presents a framework for the localization of Unmanned Aerial Vehicles (UAVs) in unstructured environments with the help of deep learning. A real-time rendering engine is introduced that generates optical and depth images given a six Degrees-of-Freedom (DoF) camera pose, camera model, geo-referenced orthoimage, and elevation map. The rendering engine is embedded into a learning-based six-DoF Inverse Compositional Lucas-Kanade (ICLK) algorithm that is able to robustly align the rendered and real-world image taken by the UAV. To learn the alignment under environmental changes, the architecture is trained using maps spanning multiple years at high resolution. The evaluation shows that the deep 6DoF-ICLK algorithm outperforms its non-trainable counterparts by a large margin. To further support the research in this field, the real-time rendering engine and accompanying datasets are released along with this publication.
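The rendering step takes a 6DoF pose, a camera model, an orthoimage and an elevation map as inputs. A minimal way to see how those inputs combine is point splatting with a z-buffer. The sketch below assumes a pinhole camera matrix `K`, a single-channel orthoimage aligned cell-for-cell with the elevation grid, and hypothetical axis conventions (world x = column × cell size, world y = row × cell size, z = elevation; `R_wc` maps camera axes to world axes, `t_wc` is the camera centre). It is not the paper's engine: a real renderer rasterizes triangles and would not leave holes when a cell projects to less than a pixel.

```python
import numpy as np


def render(dem, ortho, cell_size, K, R_wc, t_wc, width, height):
    """Project every elevation cell into a pinhole camera; return (depth, intensity) images."""
    rows, cols = dem.shape
    r, c = np.mgrid[0:rows, 0:cols]
    pts_w = np.stack([c * cell_size, r * cell_size, dem], axis=-1).reshape(-1, 3)
    intensity = ortho.reshape(-1)
    pts_c = (pts_w - t_wc) @ R_wc  # row-vector form of R_wc^T (p_w - t_wc)
    z = pts_c[:, 2]
    front = z > 1e-6  # keep only points in front of the camera
    pts_c, z, intensity = pts_c[front], z[front], intensity[front]
    u = np.round(K[0, 0] * pts_c[:, 0] / z + K[0, 2]).astype(int)
    v = np.round(K[1, 1] * pts_c[:, 1] / z + K[1, 2]).astype(int)
    inside = (u >= 0) & (u < width) & (v >= 0) & (v < height)
    u, v, z, intensity = u[inside], v[inside], z[inside], intensity[inside]
    depth = np.full((height, width), np.inf)
    np.minimum.at(depth, (v, u), z)  # z-buffer: nearest surface wins, safe for repeated pixels
    image = np.zeros((height, width))
    nearest = z <= depth[v, u]
    image[v[nearest], u[nearest]] = intensity[nearest]
    return depth, image


# Usage: nadir camera 100 m above flat terrain; camera x = world x, camera y = world -y, optical axis down.
dem = np.zeros((200, 200))
ortho = np.tile(np.arange(200.0), (200, 1))  # intensity equals the column index
K = np.array([[100.0, 0.0, 32.0], [0.0, 100.0, 32.0], [0.0, 0.0, 1.0]])
R_wc = np.array([[1.0, 0.0, 0.0], [0.0, -1.0, 0.0], [0.0, 0.0, -1.0]])
t_wc = np.array([100.0, 100.0, 100.0])
depth, img = render(dem, ortho, 1.0, K, R_wc, t_wc, 64, 64)
```

`np.minimum.at` is used instead of plain fancy-index assignment because NumPy does not guarantee which value wins when the same pixel index appears several times in an assignment.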

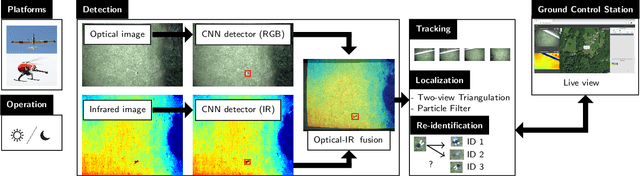

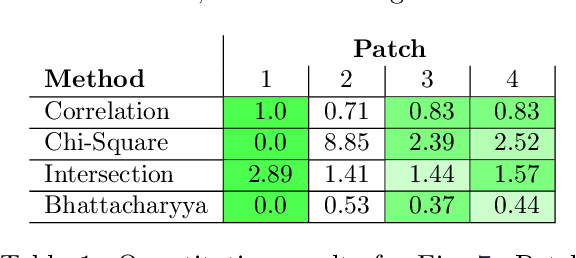

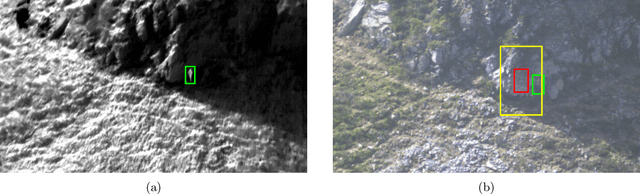

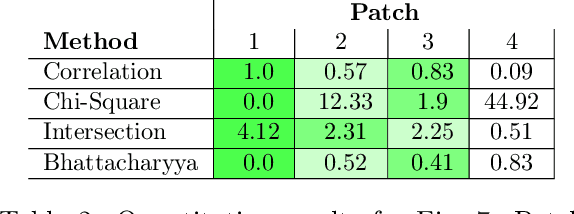

Deep Learning-based Human Detection for UAVs with Optical and Infrared Cameras: System and Experiments

Aug 10, 2020

Abstract: In this paper, we present our deep learning-based human detection system that uses optical (RGB) and long-wave infrared (LWIR) cameras to detect, track, localize, and re-identify humans from UAVs flying at high altitude. In each spectrum, a customized RetinaNet network with ResNet backbone provides human detections, which are subsequently fused to minimize the overall false detection rate. We show that by optimizing the bounding box anchors and augmenting the image resolution, the number of missed detections from high altitudes can be decreased by over 20 percent. Our proposed network is compared to different RetinaNet and YOLO variants, and to a classical optical-infrared human detection framework that uses hand-crafted features. Furthermore, along with the publication of this paper, we release a collection of annotated optical-infrared datasets recorded with different UAVs during search-and-rescue field tests and the source code of the implemented annotation tool.
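The abstract does not specify how the per-spectrum detections are fused, so the following is only one plausible late-fusion rule, shown to illustrate why cross-spectrum agreement lowers false detections. It assumes the RGB and LWIR images are co-registered so that boxes share one pixel frame. RGB and LWIR boxes are paired by IoU, paired detections get a noisy-OR score, and an unpaired detection survives only if it is very confident. The thresholds `iou_thr` and `single_thr` and all function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    box: tuple  # (x1, y1, x2, y2) in a shared, co-registered pixel frame (assumption)
    score: float


def iou(a, b):
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def fuse(rgb, lwir, iou_thr=0.3, single_thr=0.8):
    """Greedy cross-spectrum pairing; unpaired detections must be confident on their own."""
    fused, used = [], set()
    for d in rgb:
        best_j, best_iou = None, 0.0
        for j, e in enumerate(lwir):
            overlap = iou(d.box, e.box)
            if j not in used and overlap > best_iou:
                best_j, best_iou = j, overlap
        if best_j is not None and best_iou >= iou_thr:
            used.add(best_j)
            e = lwir[best_j]
            score = 1.0 - (1.0 - d.score) * (1.0 - e.score)  # noisy-OR of both spectra
            box = tuple((a + b) / 2 for a, b in zip(d.box, e.box))
            fused.append(Detection(box, score))
        elif d.score >= single_thr:
            fused.append(d)
    fused.extend(e for j, e in enumerate(lwir) if j not in used and e.score >= single_thr)
    return fused


# Usage: the first RGB box is confirmed by LWIR; the second, weak and unconfirmed, is dropped.
rgb = [Detection((10, 10, 20, 30), 0.6), Detection((100, 100, 110, 120), 0.5)]
lwir = [Detection((11, 11, 21, 31), 0.7)]
result = fuse(rgb, lwir)
```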

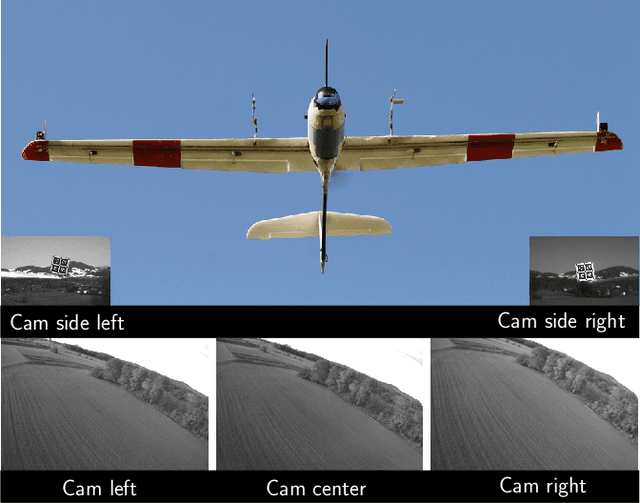

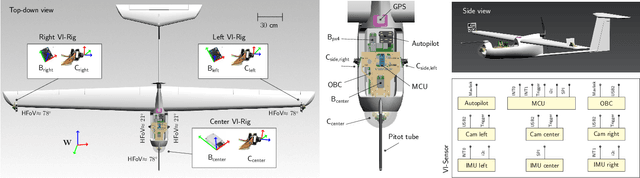

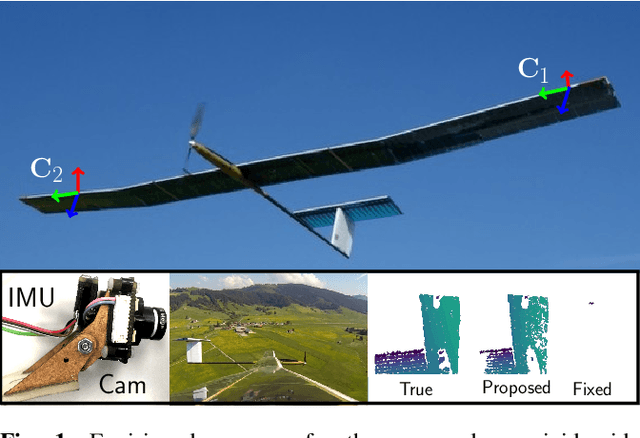

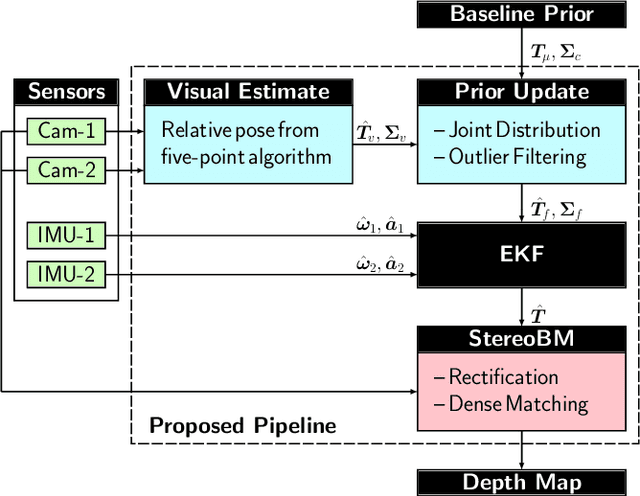

Flexible Trinocular: Non-rigid Multi-Camera-IMU Dense Reconstruction for UAV Navigation and Mapping

Aug 23, 2019

Abstract: In this paper, we propose a visual-inertial framework able to efficiently estimate the camera poses of a non-rigid trinocular baseline for long-range depth estimation on-board a fast-moving aerial platform. The estimation of the time-varying baseline is based on relative inertial measurements, a photometric relative pose optimizer, and a probabilistic wing model fused in an efficient Extended Kalman Filter (EKF) formulation. The estimated depth measurements can be integrated into a geo-referenced global map to render a reconstruction of the environment useful for local replanning algorithms. Based on extensive real-world experiments, we describe the challenges and solutions for obtaining the probabilistic wing model, reliable relative inertial measurements, and vision-based relative pose updates, and demonstrate the computational efficiency and robustness of the overall system under challenging conditions.
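Why a time-varying baseline must be estimated at all follows from the rectified stereo relation Z = f·B/d. Its first-order sensitivity to the baseline is dZ/dB = f/d = Z/B, so a relative baseline error becomes the same relative depth error. The sensitivity to disparity noise, dZ/dd = -f·B/d², grows quadratically with range. The sketch below evaluates both for an assumed rectified pair; the focal length, baseline and disparity values are hypothetical, not taken from the paper.

```python
def depth_from_disparity(f_px, baseline_m, disparity_px):
    """Rectified stereo: Z = f * B / d."""
    return f_px * baseline_m / disparity_px


def depth_error_from_baseline_error(f_px, disparity_px, baseline_err_m):
    """First order: dZ/dB = f / d, so a baseline error propagates linearly into depth."""
    return f_px / disparity_px * baseline_err_m


def depth_error_from_disparity_error(f_px, baseline_m, disparity_px, disparity_err_px):
    """First order: |dZ/dd| = f * B / d**2 = Z**2 / (f * B)."""
    return f_px * baseline_m / disparity_px ** 2 * disparity_err_px


# Hypothetical wide-baseline example: f = 1000 px, B = 2 m, d = 20 px -> Z = 100 m.
z = depth_from_disparity(1000.0, 2.0, 20.0)
err_b = depth_error_from_baseline_error(1000.0, 20.0, 0.01)        # 1 cm (0.5 %) -> 0.5 m
err_d = depth_error_from_disparity_error(1000.0, 2.0, 20.0, 0.25)  # 0.25 px -> 1.25 m
```

With a wing-mounted rig, centimetre-level baseline deflections therefore matter at long range, which is what the fused wing model and relative pose updates are meant to capture.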

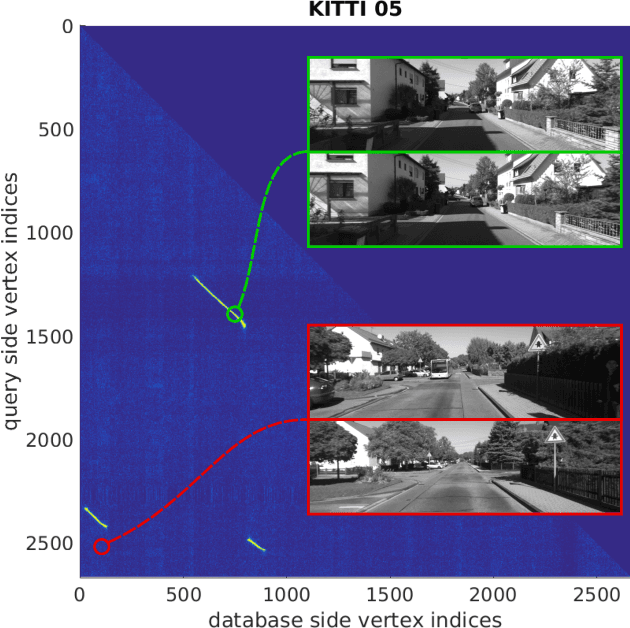

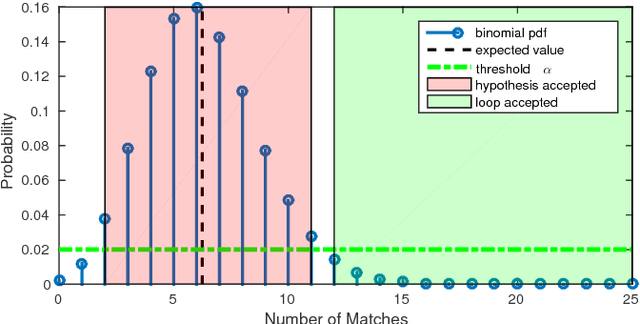

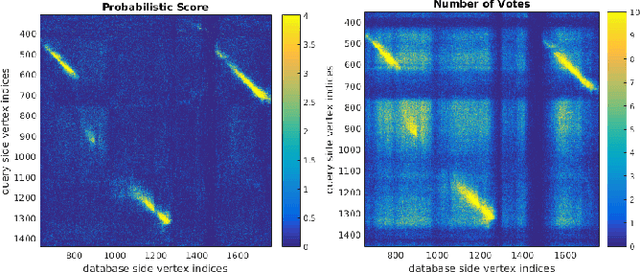

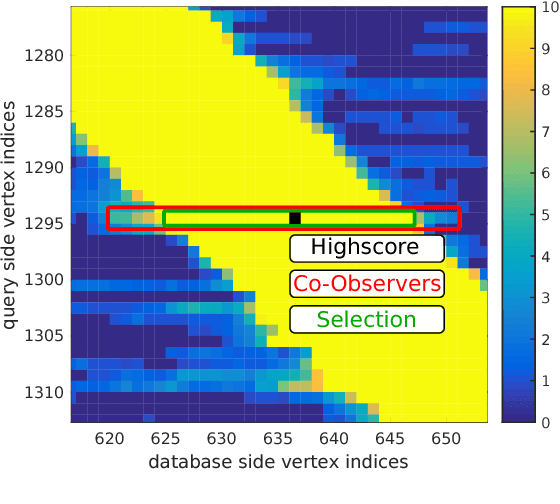

Visual Place Recognition with Probabilistic Vertex Voting

Jun 07, 2018

Abstract: We propose a novel scoring concept for visual place recognition based on nearest neighbor descriptor voting and demonstrate how the algorithm naturally emerges from the problem formulation. Based on the observation that the number of votes for matching places can be evaluated using a binomial distribution model, loop closures can be detected with high precision. By casting the problem into a probabilistic framework, we not only remove the need for commonly employed heuristic parameters but also provide a powerful score to classify matching and non-matching places. We present methods for both a 2D-2D pose-graph vertex matching and a 2D-3D landmark matching based on the above scoring. The approach maintains accuracy while being efficient enough for online application through the use of compact (low-dimensional) descriptors and fast nearest neighbor retrieval techniques. The proposed methods are evaluated on several challenging datasets in varied environments, showing state-of-the-art results with high precision and high recall.

* 8 pages
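The binomial idea can be illustrated as a significance test. If the query's n descriptors each cast one nearest-neighbor vote, and votes landed on places purely by chance, the number of votes a place receives would follow Binomial(n, p_place). A place whose observed vote count is extremely unlikely under that chance model is then declared a match. The sketch below assumes `p_place` is the share of database descriptors belonging to that place and uses a hypothetical threshold `alpha`; the paper's exact score and null model may differ.

```python
from math import comb


def vote_tail_probability(num_votes, votes_for_place, p_place):
    """P(X >= votes_for_place) for X ~ Binomial(num_votes, p_place): chance of this many votes by luck."""
    n, k, p = num_votes, votes_for_place, p_place
    return sum(comb(n, i) * p ** i * (1.0 - p) ** (n - i) for i in range(k, n + 1))


def is_match(num_votes, votes_for_place, descriptors_in_place, descriptors_total, alpha=1e-6):
    """Declare a match if the observed votes are very unlikely under random voting."""
    p_place = descriptors_in_place / descriptors_total  # assumed chance-vote probability
    return vote_tail_probability(num_votes, votes_for_place, p_place) < alpha


# Usage: 100 query votes, a place owning 1 % of the database descriptors.
strong = is_match(100, 20, 10, 1000)  # 20 votes where ~1 is expected by chance -> match
weak = is_match(100, 2, 10, 1000)     # 2 votes happen by chance about a quarter of the time
```

Because the threshold is a probability rather than a raw vote count, the same `alpha` works for places with many or few descriptors. This reflects the abstract's point about removing heuristic parameters.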

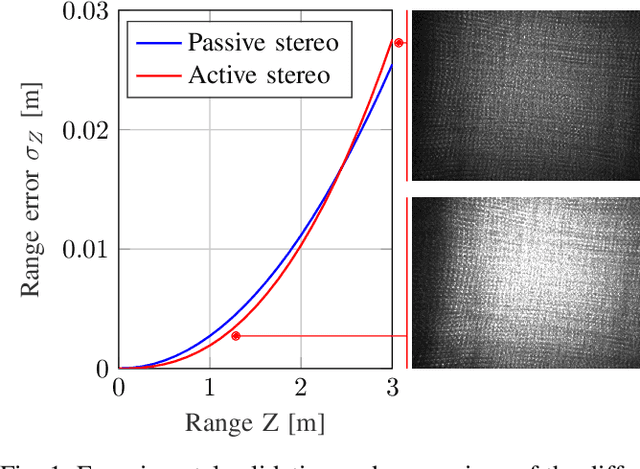

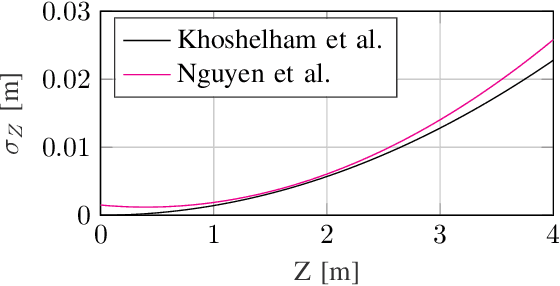

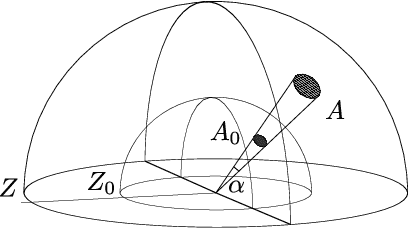

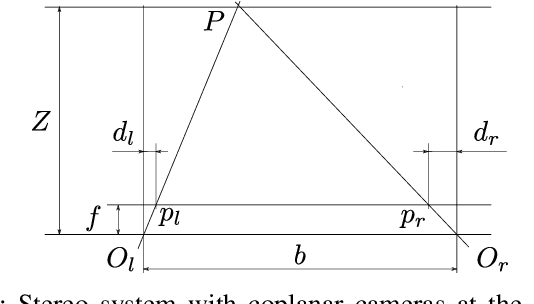

Cubic Range Error Model for Stereo Vision with Illuminators

Mar 11, 2018

Abstract: Low-cost depth sensors, such as stereo camera setups with illuminators, are of particular interest for numerous applications ranging from robotics and transportation to mixed and augmented reality. The ability to quantify noise is crucial for these applications, e.g., when the sensor is used for map generation or to develop a sensor scheduling policy in a multi-sensor setup. Range error models provide uncertainty estimates and help weight the data correctly when range measurements are taken from different vantage points or with different sensors. This weighting is important for fusing range data into a map in a meaningful way, i.e., so that high-confidence data is relied on most heavily. Such a model is derived in this work. We propose that the range error for stereo systems with integrated illuminators is cubic in range and validate the proposed model experimentally with an off-the-shelf structured light stereo system. The experiments confirm the validity of the model, with a measured exponent between 2.4 and 2.6; the deviation from the cubic exponent is attributed to our model considering only shot noise. These results simplify the application of this type of sensor in robotics. The proposed error model is relevant to any stereo system with low ambient light where the main light source is located at the camera system. Among others, this is the case for structured light stereo systems and night stereo systems with headlights.
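One way a cubic law can arise, assuming (as the abstract suggests) that shot noise dominates and the illuminator is the only light source, is as follows. The standard first-order stereo propagation gives σ_Z = Z²/(f·B)·σ_d. The illuminator's returned signal falls off roughly as 1/Z², so the shot-noise-limited SNR scales as 1/Z and the disparity noise σ_d grows roughly linearly with Z. Multiplying the two gives σ_Z ∝ Z³. This reasoning and all parameter values below are assumptions for illustration, not the paper's derivation. The `fit_power_law` helper shows how an exponent such as the reported 2.4 to 2.6 could be estimated from (range, error) pairs by a straight-line fit in log-log space. The data here are synthetic.

```python
import numpy as np


def stereo_range_std(z, f_px, baseline_m, sigma_d_ref_px, z_ref_m):
    """Range std. dev. when disparity noise grows linearly with range.

    sigma_z = z**2 / (f * B) * sigma_d is the usual first-order stereo propagation;
    sigma_d = sigma_d_ref_px * z / z_ref_m is the assumed shot-noise-limited illuminator case.
    """
    sigma_d = sigma_d_ref_px * (z / z_ref_m)
    return z ** 2 / (f_px * baseline_m) * sigma_d


def fit_power_law(z, sigma):
    """Fit sigma = k * z**n by linear least squares on (log z, log sigma); returns (n, k)."""
    n, log_k = np.polyfit(np.log(z), np.log(sigma), 1)
    return n, np.exp(log_k)


# Synthetic, noise-free ranges (metres) and hypothetical sensor parameters, not measured data.
z = np.linspace(0.5, 4.0, 20)
sigma = stereo_range_std(z, f_px=400.0, baseline_m=0.05, sigma_d_ref_px=0.1, z_ref_m=1.0)
n, k = fit_power_law(z, sigma)  # recovers the exponent 3 of the synthetic model
```

On real measurements, additional noise sources that do not scale with the illuminator signal (read noise, ambient light) would pull the fitted exponent below 3.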

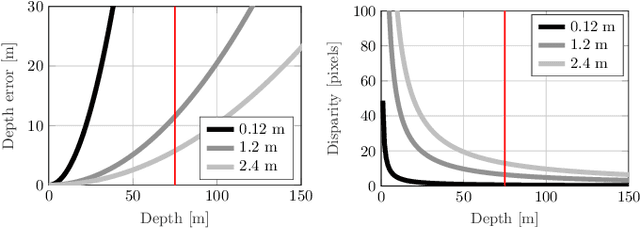

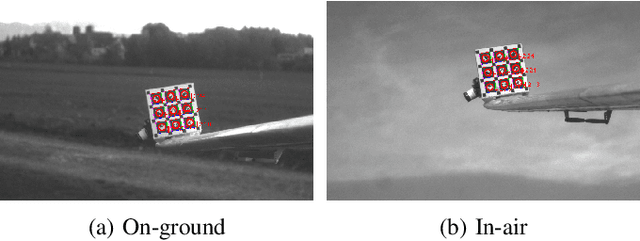

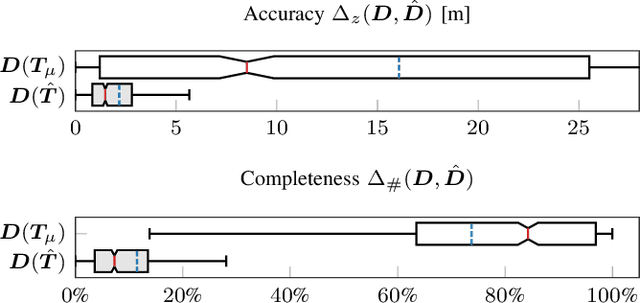

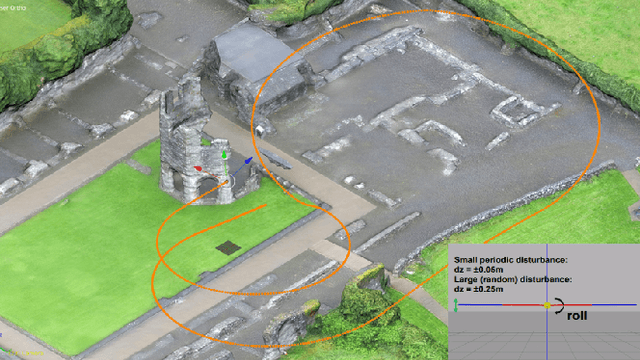

Flexible Stereo: Constrained, Non-rigid, Wide-baseline Stereo Vision for Fixed-wing Aerial Platforms

Feb 26, 2018

Abstract: This paper proposes a computationally efficient method to estimate the time-varying relative pose between two visual-inertial sensor rigs mounted on the flexible wings of a fixed-wing unmanned aerial vehicle (UAV). The estimated relative poses are used to generate highly accurate depth maps in real time and can be employed for obstacle avoidance in low-altitude flights or landing maneuvers. The approach is structured as follows: initially, a wing model is identified by fitting a probability density function to measured deviations from the nominal relative baseline transformation. At run time, the prior knowledge about the wing model is fused in an Extended Kalman Filter (EKF) together with relative pose measurements obtained from solving a relative Perspective-n-Point (PnP) problem, and with the linear accelerations and angular velocities measured by the two inertial measurement units (IMUs), which are rigidly attached to the cameras. Results obtained from extensive synthetic experiments demonstrate that our proposed framework is able to estimate highly accurate baseline transformations and depth maps.
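The two stages described above, fitting a density to baseline deviations and then fusing that prior with a vision-based measurement, can be sketched with a Gaussian and a single linear Kalman update. This is a deliberately simplified stand-in for the paper's EKF. It assumes the state is only the 3D translation deviation of the baseline, that the PnP-derived measurement observes that deviation directly (H = I), and it omits orientation and the IMU propagation. `fit_wing_model` and `kalman_update` are hypothetical names.

```python
import numpy as np


def fit_wing_model(deviations):
    """Fit a Gaussian (mean, covariance) to N x 3 samples of baseline translation deviation."""
    mu = deviations.mean(axis=0)
    cov = np.cov(deviations, rowvar=False)
    return mu, cov


def kalman_update(x, P, z, R):
    """Linear Kalman update with H = I: the measurement observes the deviation directly."""
    S = P + R                      # innovation covariance
    K = P @ np.linalg.inv(S)       # Kalman gain
    x_post = x + K @ (z - x)
    P_post = (np.eye(len(x)) - K) @ P
    return x_post, P_post


# Usage with toy deviation samples (metres); prior and measurement are equally uncertain here.
samples = np.array([[0.01, 0, 0], [-0.01, 0, 0], [0, 0.01, 0],
                    [0, -0.01, 0], [0, 0, 0.01], [0, 0, -0.01]])
mu, cov = fit_wing_model(samples)       # mean 0, covariance 4e-5 * I
z_pnp = np.array([0.004, 0.0, 0.0])     # hypothetical PnP-derived deviation
x_post, P_post = kalman_update(mu, cov, z_pnp, 4e-5 * np.eye(3))
```

With equal prior and measurement covariances, the posterior lands halfway between them and its variance halves. In the full filter, the wing model keeps the estimate plausible when vision-based updates are missing or unreliable.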

Free LSD: Prior-Free Visual Landing Site Detection for Autonomous Planes

Feb 25, 2018

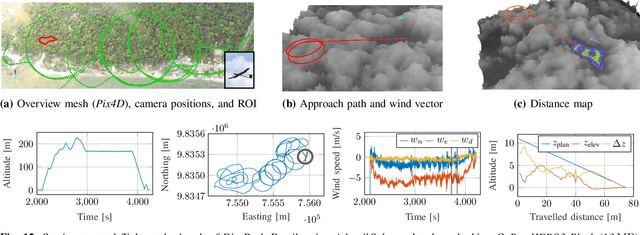

Abstract: Full autonomy for fixed-wing unmanned aerial vehicles (UAVs) requires the capability to autonomously detect potential landing sites in unknown and unstructured terrain, allowing for self-governed mission completion or handling of emergency situations. In this work, we propose a perception system addressing this challenge by detecting landing sites based on their texture and geometric shape without using any prior knowledge about the environment. The proposed method considers hazards within the landing region, such as terrain roughness and slope, surrounding obstacles that obscure the landing approach path, and the local wind field that is estimated by the on-board EKF. The latter makes the proposed method applicable to small-scale autonomous planes without landing gear. A safe approach path is computed based on the UAV dynamics, the expected state estimation and actuator uncertainty, and the on-board computed elevation map. The proposed framework has been successfully tested on photo-realistic synthetic datasets and in challenging real-world environments.
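Slope and roughness, the two terrain hazards named above, are commonly computed by fitting a plane to an elevation patch. The slope is the tilt of that plane, and roughness is how far the terrain departs from it. The sketch below assumes a regular grid with square cells, uses the maximum absolute plane residual as the roughness measure, and applies hypothetical thresholds (`max_slope_deg`, `max_roughness_m`). It does not model the approach-path obstacles, wind, or texture cues that the paper also considers.

```python
import numpy as np


def slope_and_roughness(patch, cell_size):
    """Least-squares plane z = a*x + b*y + d over the patch; return (slope in degrees, max |residual|)."""
    rows, cols = patch.shape
    r, c = np.mgrid[0:rows, 0:cols]
    x = (c * cell_size).ravel()
    y = (r * cell_size).ravel()
    z = patch.ravel()
    A = np.column_stack([x, y, np.ones_like(x)])
    coeffs, *_ = np.linalg.lstsq(A, z, rcond=None)
    a, b, _ = coeffs
    slope_deg = np.degrees(np.arctan(np.hypot(a, b)))  # tilt of the plane normal from vertical
    roughness = np.max(np.abs(z - A @ coeffs))         # worst deviation from the fitted plane
    return slope_deg, roughness


def is_safe(patch, cell_size, max_slope_deg=10.0, max_roughness_m=0.1):
    """Hypothetical acceptance test: both hazards must be below their thresholds."""
    slope_deg, roughness = slope_and_roughness(patch, cell_size)
    return bool(slope_deg <= max_slope_deg and roughness <= max_roughness_m)


# Usage: an 11 x 11 patch of 0.5 m cells rising 10 cm per metre (about 5.7 degrees).
rr, cc = np.mgrid[0:11, 0:11]
tilted = 0.1 * cc * 0.5
bumpy = tilted.copy()
bumpy[5, 5] += 0.5  # a 0.5 m boulder in the middle
```

Here `is_safe(tilted, 0.5)` accepts the gentle, smooth slope, while `is_safe(bumpy, 0.5)` rejects the same slope once the boulder pushes the residual far above 0.1 m.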