Deep UAV Localization with Reference View Rendering


Aug 11, 2020

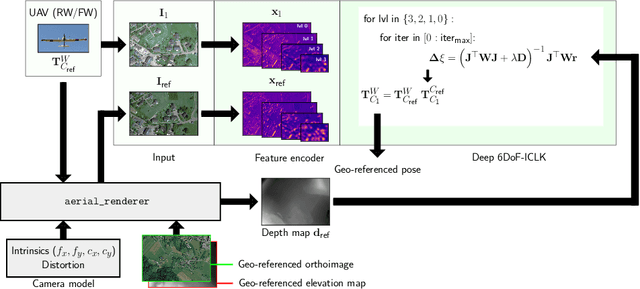

This paper presents a framework for localizing Unmanned Aerial Vehicles (UAVs) in unstructured environments using deep learning. It introduces a real-time rendering engine that generates optical and depth images from a six Degrees-of-Freedom (DoF) camera pose, a camera model, a geo-referenced orthoimage, and an elevation map. The rendering engine is embedded into a learning-based six-DoF Inverse Compositional Lucas-Kanade (ICLK) algorithm that robustly aligns the rendered image with the real-world image taken by the UAV. To learn the alignment under environmental changes, the architecture is trained on high-resolution maps spanning multiple years. The evaluation shows that the deep 6DoF-ICLK algorithm outperforms its non-trainable counterparts by a large margin. To support further research in this field, the real-time rendering engine and accompanying datasets are released along with this publication.
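To give a feel for the alignment step, the following is a minimal sketch of the classical, non-trainable inverse compositional Lucas-Kanade idea, reduced to a pure 2D translation between a "rendered" template and an "observed" image. It is not the paper's learned six-DoF method, and it does not use the released rendering engine. The function names (`make_scene`, `bilinear_sample`, `iclk_translation`) and the synthetic Gaussian-blob scene are assumptions made purely for illustration.

```python
import numpy as np


def make_scene(tx, ty, size=64):
    """Hypothetical synthetic scene: two Gaussian blobs shifted by (tx, ty)."""
    ys, xs = np.mgrid[0:size, 0:size].astype(float)
    x, y = xs - tx, ys - ty
    blob_a = np.exp(-((x - 32.0) ** 2 + (y - 32.0) ** 2) / (2 * 8.0 ** 2))
    blob_b = np.exp(-((x - 20.0) ** 2 + (y - 40.0) ** 2) / (2 * 6.0 ** 2))
    return blob_a + 0.5 * blob_b


def bilinear_sample(img, xs, ys):
    """Sample img at float pixel coordinates, clamping to the image border."""
    h, w = img.shape
    xs = np.clip(xs, 0, w - 1)
    ys = np.clip(ys, 0, h - 1)
    x0 = np.clip(np.floor(xs).astype(int), 0, w - 2)
    y0 = np.clip(np.floor(ys).astype(int), 0, h - 2)
    ax, ay = xs - x0, ys - y0
    top = (1 - ax) * img[y0, x0] + ax * img[y0, x0 + 1]
    bot = (1 - ax) * img[y0 + 1, x0] + ax * img[y0 + 1, x0 + 1]
    return (1 - ay) * top + ay * bot


def iclk_translation(template, image, iters=50, tol=1e-6):
    """Estimate p such that image(x + p) matches template(x).

    Translation-only inverse compositional Lucas-Kanade: the template
    gradient and the Gauss-Newton Hessian are computed once, outside the loop.
    """
    h, w = template.shape
    ys, xs = np.mgrid[0:h, 0:w].astype(float)
    # np.gradient returns the derivative along rows (y) first, then columns (x).
    gy, gx = np.gradient(template)
    # For a pure translation the warp Jacobian is the identity, so the
    # steepest-descent images are just the template gradients (N x 2).
    sd = np.stack([gx.ravel(), gy.ravel()], axis=1)
    hess_inv = np.linalg.inv(sd.T @ sd)
    p = np.zeros(2)
    for _ in range(iters):
        warped = bilinear_sample(image, xs + p[0], ys + p[1])
        err = (warped - template).ravel()
        dp = hess_inv @ (sd.T @ err)
        # Inverse compositional update: compose with the inverse increment.
        p = p - dp
        if np.linalg.norm(dp) < tol:
            break
    return p


if __name__ == "__main__":
    template = make_scene(0.0, 0.0)   # stands in for the rendered view
    image = make_scene(2.5, -1.5)     # stands in for the UAV image
    print(iclk_translation(template, image))
```

On this synthetic pair the estimate should land close to the applied shift of (2.5, -1.5) pixels. The point of the inverse compositional form is that the gradient and Hessian depend only on the template, so they are computed once. A six-DoF variant would replace the identity warp Jacobian with one derived from the camera model and depth image.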
