Tim Schreiter

Collecting Human Motion Data in Large and Occlusion-Prone Environments using Ultra-Wideband Localization

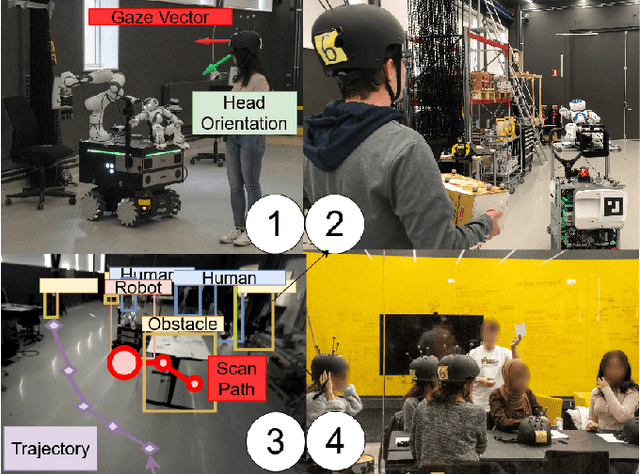

May 09, 2025

Abstract:With robots increasingly integrating into human environments, understanding and predicting human motion is essential for safe and efficient interactions. Modern human motion and activity prediction approaches require high quality and quantity of data for training and evaluation, usually collected from motion capture systems, onboard or stationary sensors. Setting up these systems is challenging due to the intricate setup of hardware components, extensive calibration procedures, occlusions, and substantial costs. These constraints make deploying such systems in new and large environments difficult and limit their usability for in-the-wild measurements. In this paper we investigate the possibility of applying the novel Ultra-Wideband (UWB) localization technology as a scalable alternative for human motion capture in crowded and occlusion-prone environments. We include additional sensing modalities such as eye-tracking, onboard robot LiDAR and radar sensors, and record motion capture data as ground truth for evaluation and comparison. The environment imitates a museum setup, with up to four active participants navigating toward random goals in a natural way, and offers more than 130 minutes of multi-modal data. Our investigation provides a step toward scalable and accurate motion data collection beyond vision-based systems, laying a foundation for evaluating sensing modalities like UWB in larger and complex environments like warehouses, airports, or convention centers.

Multimodal Interaction and Intention Communication for Industrial Robots

Feb 25, 2025

Abstract:Successful adoption of industrial robots will strongly depend on their ability to safely and efficiently operate in human environments, engage in natural communication, understand their users, and express intentions intuitively while avoiding unnecessary distractions. To achieve this advanced level of Human-Robot Interaction (HRI), robots need to acquire and incorporate knowledge of their users' tasks and environment and adopt multimodal communication approaches with expressive cues that combine speech, movement, gazes, and other modalities. This paper presents several methods to design, enhance, and evaluate expressive HRI systems for non-humanoid industrial robots. We present the concept of a small anthropomorphic robot communicating as a proxy for its non-humanoid host, such as a forklift. We developed a multimodal and LLM-enhanced communication framework for this robot and evaluated it in several lab experiments, using gaze tracking and motion capture to quantify how users perceive the robot and measure the task progress.

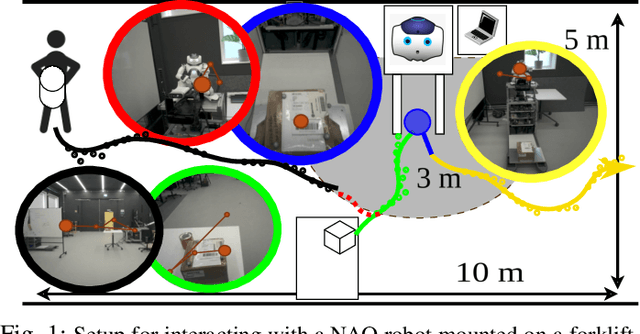

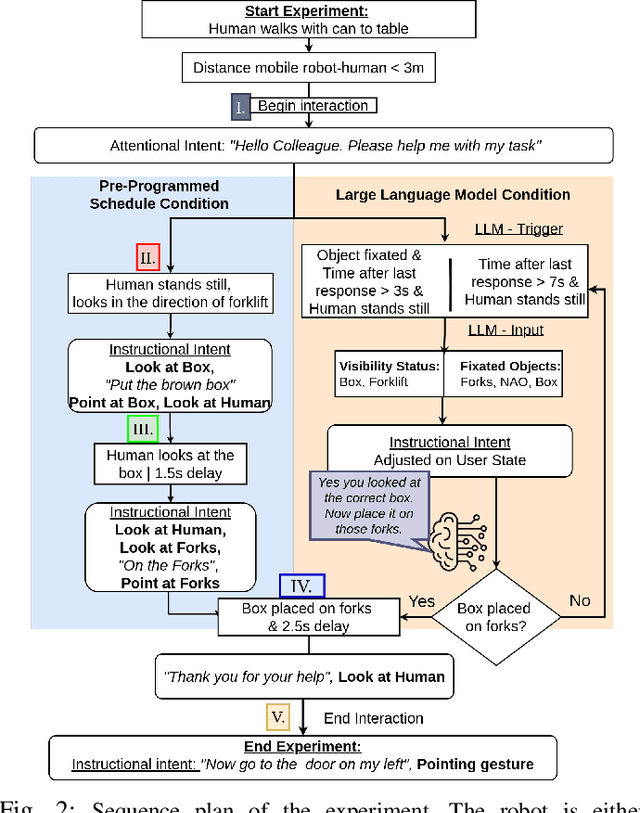

Evaluating Efficiency and Engagement in Scripted and LLM-Enhanced Human-Robot Interactions

Jan 21, 2025

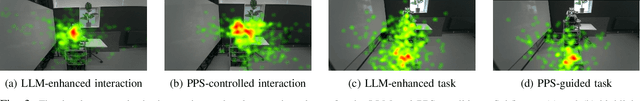

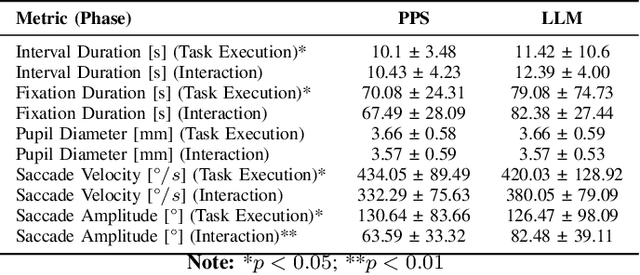

Abstract:To achieve natural and intuitive interaction with people, HRI frameworks combine a wide array of methods for human perception, intention communication, human-aware navigation and collaborative action. In practice, when encountering unpredictable behavior of people or unexpected states of the environment, these frameworks may lack the ability to dynamically recognize such states, adapt and recover to resume the interaction. Large Language Models (LLMs), owing to their advanced reasoning capabilities and context retention, present a promising solution for enhancing robot adaptability. This potential, however, may not directly translate to improved interaction metrics. This paper considers a representative interaction with an industrial robot involving approach, instruction, and object manipulation, implemented in two conditions: (1) fully scripted and (2) including LLM-enhanced responses. We use gaze tracking and questionnaires to measure the participants' task efficiency, engagement, and robot perception. The results indicate higher subjective ratings for the LLM condition, but objective metrics show that the scripted condition performs comparably, particularly in efficiency and focus during simple tasks. We also note that the scripted condition may have an edge over LLM-enhanced responses in terms of response latency and energy consumption, especially for trivial and repetitive interactions.

THÖR-MAGNI Act: Actions for Human Motion Modeling in Robot-Shared Industrial Spaces

Dec 18, 2024

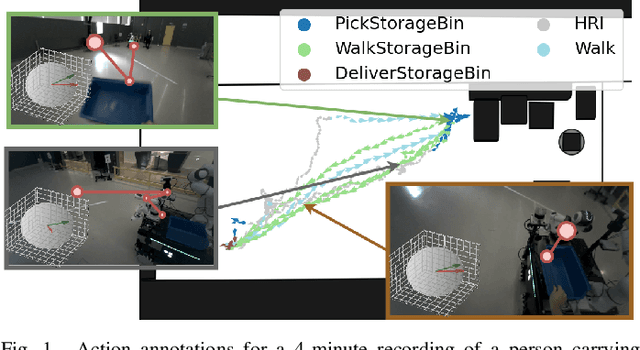

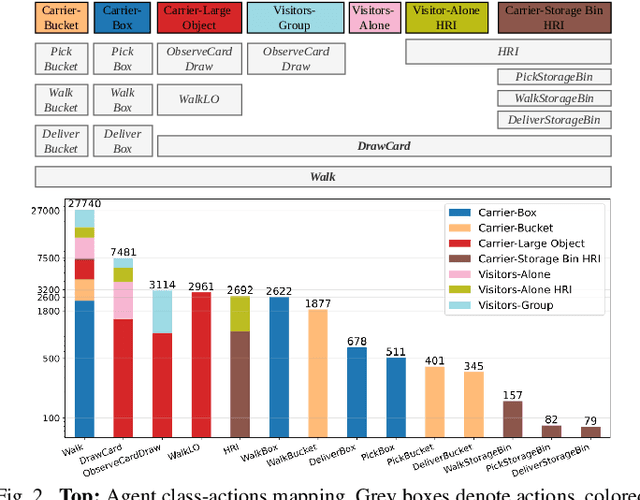

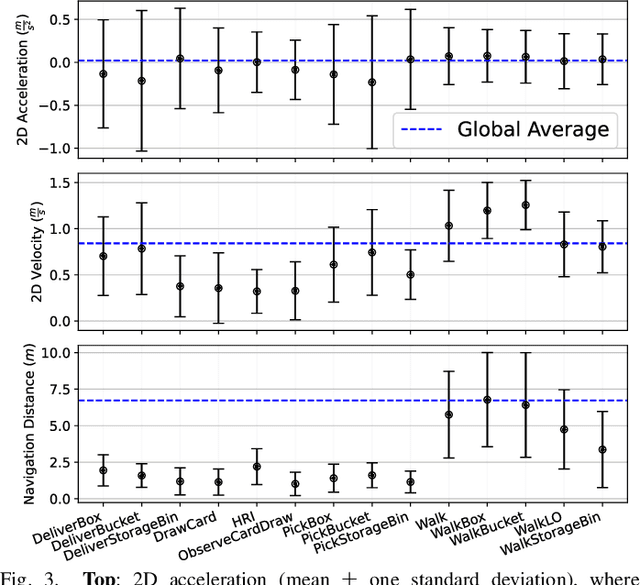

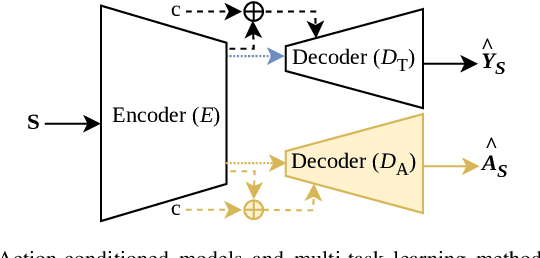

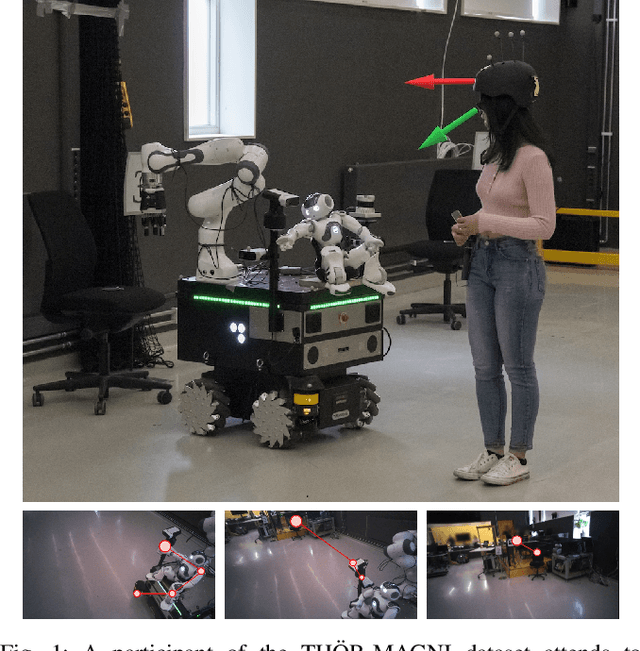

Abstract:Accurate human activity and trajectory prediction are crucial for ensuring safe and reliable human-robot interactions in dynamic environments, such as industrial settings, with mobile robots. Datasets with fine-grained action labels for moving people in industrial environments with mobile robots are scarce, as most existing datasets focus on social navigation in public spaces. This paper introduces the THÖR-MAGNI Act dataset, a substantial extension of the THÖR-MAGNI dataset, which captures participant movements alongside robots in diverse semantic and spatial contexts. THÖR-MAGNI Act provides 8.3 hours of manually labeled participant actions derived from egocentric videos recorded via eye-tracking glasses. These actions, aligned with the provided THÖR-MAGNI motion cues, follow a long-tailed distribution with diversified acceleration, velocity, and navigation distance profiles. We demonstrate the utility of THÖR-MAGNI Act for two tasks: action-conditioned trajectory prediction and joint action and trajectory prediction. We propose two efficient transformer-based models that outperform the baselines to address these tasks. These results underscore the potential of THÖR-MAGNI Act to develop predictive models for enhanced human-robot interaction in complex environments.

Bidirectional Intent Communication: A Role for Large Foundation Models

Aug 20, 2024

Abstract:Integrating multimodal foundation models has significantly enhanced autonomous agents' language comprehension, perception, and planning capabilities. However, while existing works adopt a *task-centric* approach with minimal human interaction, applying these models to developing assistive *user-centric* robots that can interact and cooperate with humans remains underexplored. This paper introduces "Bident", a framework designed to integrate robots seamlessly into shared spaces with humans. Bident enhances the interactive experience by incorporating multimodal inputs like speech and user gaze dynamics. Furthermore, Bident supports verbal utterances and physical actions like gestures, making it versatile for bidirectional human-robot interactions. Potential applications include personalized education, where robots can adapt to individual learning styles and paces, and healthcare, where robots can offer personalized support, companionship, and everyday assistance in the home and workplace environments.

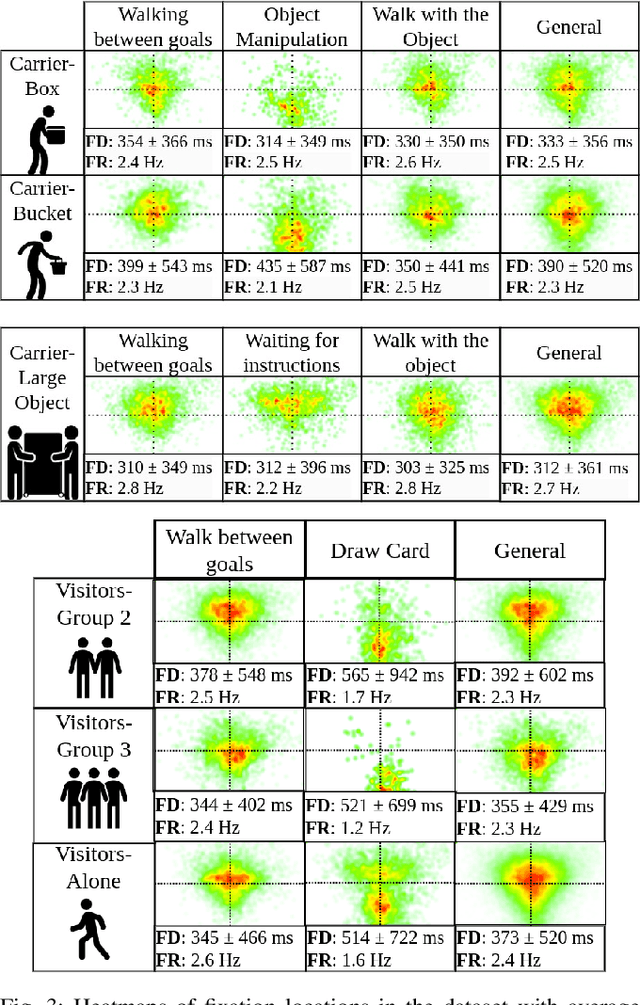

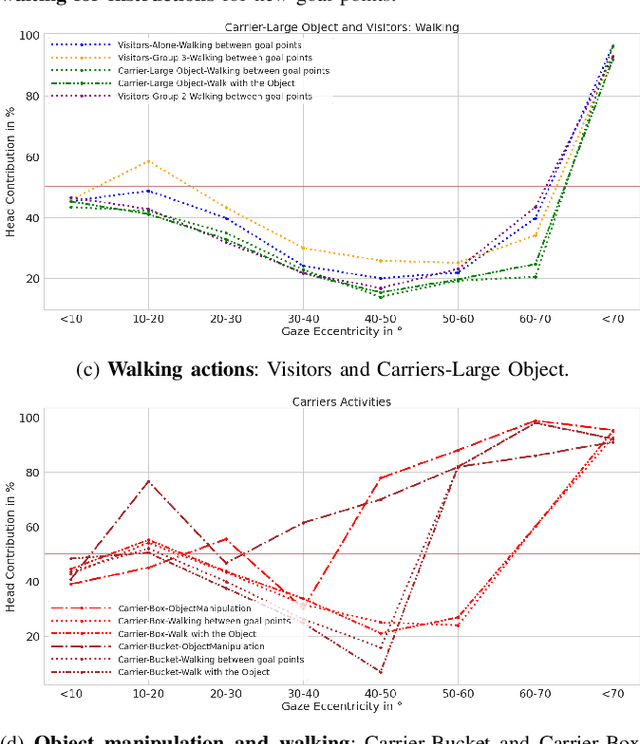

Human Gaze and Head Rotation during Navigation, Exploration and Object Manipulation in Shared Environments with Robots

Jun 10, 2024

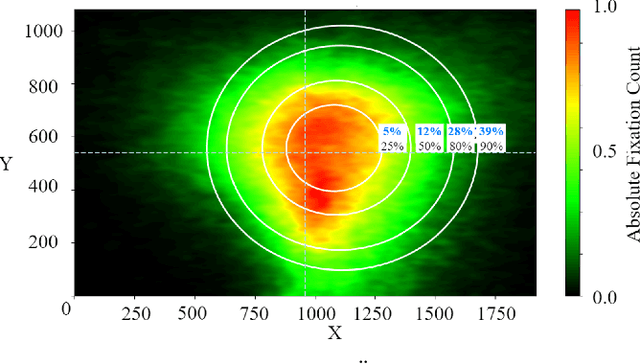

Abstract:The human gaze is an important cue to signal intention, attention, distraction, and the regions of interest in the immediate surroundings. Gaze tracking can transform how robots perceive, understand, and react to people, enabling new modes of robot control, interaction, and collaboration. In this paper, we use gaze tracking data from a rich dataset of human motion (THÖR-MAGNI) to investigate the coordination between gaze direction and head rotation of humans engaged in various indoor activities involving navigation, interaction with objects, and collaboration with a mobile robot. In particular, we study the spread and central bias of fixations in diverse activities and examine the correlation between gaze direction and head rotation. We introduce various human motion metrics to enhance the understanding of gaze behavior in dynamic interactions. Finally, we apply semantic object labeling to decompose the gaze distribution into activity-relevant regions.

* This is the final accepted version of the manuscript, to be published in the 2024 33rd IEEE International Conference on Robot and Human Interactive Communication (RO-MAN). Copyright 2024 IEEE. Personal use of this material is permitted. Permission from IEEE must be obtained for all other uses.

THÖR-MAGNI: A Large-scale Indoor Motion Capture Recording of Human Movement and Robot Interaction

Mar 14, 2024

Abstract:We present a new large dataset of indoor human and robot navigation and interaction, called THÖR-MAGNI, that is designed to facilitate research on social navigation: e.g., modelling and predicting human motion, analyzing goal-oriented interactions between humans and robots, and investigating visual attention in a social interaction context. THÖR-MAGNI was created to fill a gap in available datasets for human motion analysis and HRI. This gap is characterized by a lack of comprehensive inclusion of exogenous factors and essential target agent cues, which hinders the development of robust models capable of capturing the relationship between contextual cues and human behavior in different scenarios. Unlike existing datasets, THÖR-MAGNI includes a broader set of contextual features and offers multiple scenario variations to facilitate factor isolation. The dataset includes many social human-human and human-robot interaction scenarios, rich context annotations, and multi-modal data, such as walking trajectories, gaze tracking data, and lidar and camera streams recorded from a mobile robot. We also provide a set of tools for visualization and processing of the recorded data. THÖR-MAGNI is, to the best of our knowledge, unique in the amount and diversity of sensor data collected in a contextualized and socially dynamic environment, capturing natural human-robot interactions.

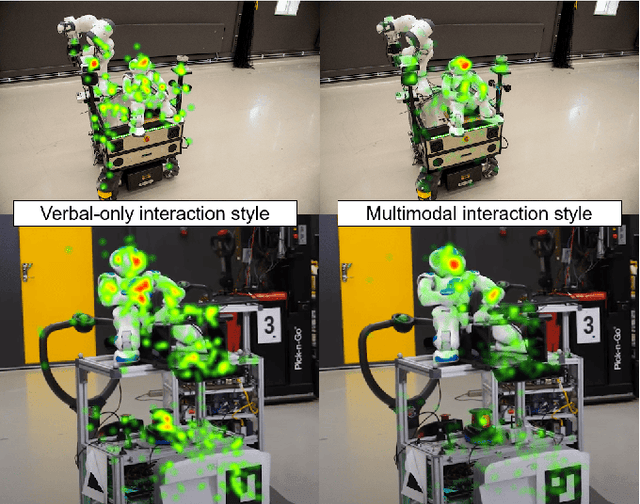

Advantages of Multimodal versus Verbal-Only Robot-to-Human Communication with an Anthropomorphic Robotic Mock Driver

Jul 03, 2023

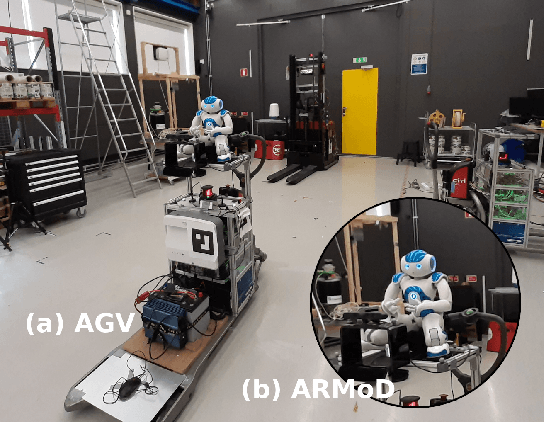

Abstract:Robots are increasingly used in shared environments with humans, making effective communication a necessity for successful human-robot interaction. In our work, we study a crucial component: active communication of robot intent. Here, we present an anthropomorphic solution where a humanoid robot communicates the intent of its host robot acting as an "Anthropomorphic Robotic Mock Driver" (ARMoD). We evaluate this approach in two experiments in which participants work alongside a mobile robot on various tasks, while the ARMoD communicates a need for human attention, when required, or gives instructions to collaborate on a joint task. The experiments feature two interaction styles of the ARMoD: a verbal-only mode using only speech and a multimodal mode, additionally including robotic gaze and pointing gestures to support communication and register intent in space. Our results show that the multimodal interaction style, including head movements and eye gaze as well as pointing gestures, leads to more natural fixation behavior. Participants naturally identified and fixated longer on the areas relevant for intent communication, and reacted faster to instructions in collaborative tasks. Our research further indicates that the ARMoD intent communication improves engagement and social interaction with mobile robots in workplace settings.

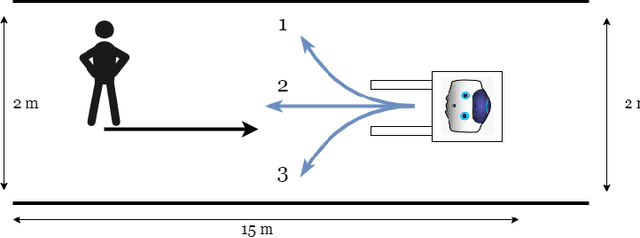

The Effect of Anthropomorphism on Trust in an Industrial Human-Robot Interaction

Sep 01, 2022

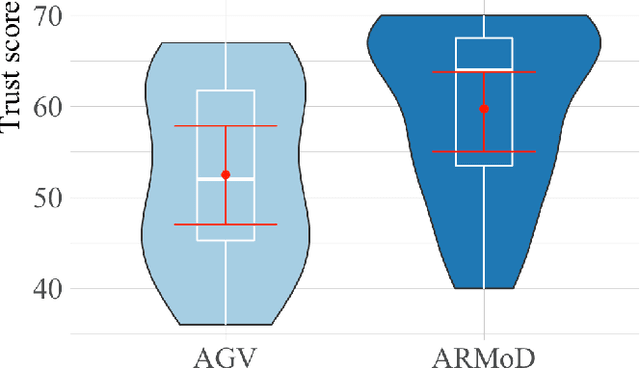

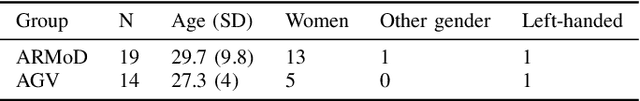

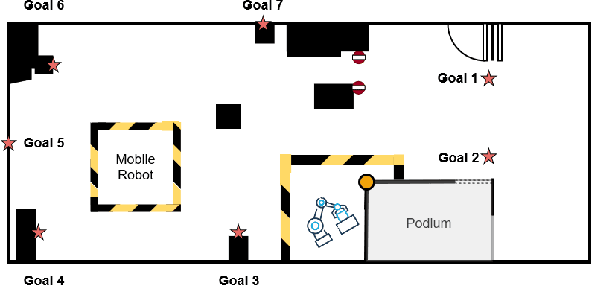

Abstract:Robots are increasingly deployed in spaces shared with humans, including home settings and industrial environments. In these environments, the interaction between humans and robots (HRI) is crucial for safety, legibility, and efficiency. A key factor in HRI is trust, which modulates the acceptance of the system. Anthropomorphism has been shown to modulate trust development in a robot, but robots in industrial environments are not usually anthropomorphic. We designed a simple interaction in an industrial environment in which an anthropomorphic mock driver (ARMoD) robot simulates driving an autonomous guided vehicle (AGV). The task consisted of a human crossing paths with the AGV, with or without the ARMoD mounted on the top, in a narrow corridor. The human and the system needed to negotiate trajectories when crossing paths, meaning that the human had to attend to the trajectory of the robot to avoid a collision with it. There was a significant increase in the reported trust scores in the condition where the ARMoD was present, showing that the presence of an anthropomorphic robot is enough to modulate trust, even in limited interactions such as the one we present here.

The Magni Human Motion Dataset: Accurate, Complex, Multi-Modal, Natural, Semantically-Rich and Contextualized

Aug 31, 2022

Abstract:Rapid development of social robots stimulates active research in human motion modeling, interpretation and prediction, proactive collision avoidance, human-robot interaction and co-habitation in shared spaces. Modern approaches to this end require high quality datasets for training and evaluation. However, the majority of available datasets suffer from either inaccurate tracking data or unnatural, scripted behavior of the tracked people. This paper attempts to fill this gap by providing high quality tracking information from motion capture, eye-gaze trackers and on-board robot sensors in a semantically-rich environment. To induce natural behavior of the recorded participants, we utilise loosely scripted task assignment, which leads the participants to navigate through the dynamic laboratory environment in a natural and purposeful way. The motion dataset presented in this paper sets a high quality standard, as the realistic and accurate data is enhanced with semantic information, enabling development of new algorithms which rely not only on the tracking information but also on contextual cues of the moving agents and of the static and dynamic environment.
