Tiancheng Li

Reinforcing the Diffusion Chain of Lateral Thought with Diffusion Language Models

May 15, 2025

Abstract:We introduce the Diffusion Chain of Lateral Thought (DCoLT), a reasoning framework for diffusion language models. DCoLT treats each intermediate step in the reverse diffusion process as a latent "thinking" action and optimizes the entire reasoning trajectory to maximize the reward on the correctness of the final answer with outcome-based Reinforcement Learning (RL). Unlike traditional Chain-of-Thought (CoT) methods that follow a causal, linear thinking process, DCoLT allows bidirectional, non-linear reasoning with no strict rule on grammatical correctness in its intermediate steps of thought. We implement DCoLT on two representative Diffusion Language Models (DLMs). First, we choose SEDD as a representative continuous-time discrete diffusion model, where its concrete score derives a probabilistic policy to maximize the RL reward over the entire sequence of intermediate diffusion steps. We further consider the discrete-time masked diffusion language model LLaDA, and find that the order in which tokens are predicted and unmasked plays an essential role in optimizing its RL action, which results from the ranking-based Unmasking Policy Module (UPM) defined by the Plackett-Luce model. Experiments on both math and code generation tasks show that, using only public data and 16 H800 GPUs, DCoLT-reinforced DLMs outperform other DLMs trained by SFT, RL, or both. Notably, DCoLT-reinforced LLaDA boosts its reasoning accuracy by +9.8%, +5.7%, +11.4%, and +19.5% on GSM8K, MATH, MBPP, and HumanEval, respectively.
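To make the ranking-based unmasking idea concrete, the following is a minimal sketch of sampling a partial ranking from a Plackett-Luce model and computing its log-probability, which is the quantity a policy-gradient method would need. It is not the paper's UPM: the `scores` list, the function names, and the choice to unmask `k` positions per step are assumptions made for illustration only.

```python
import math
import random

def sample_unmask_order(scores, k, rng):
    """Sample the top-k of a Plackett-Luce ranking; return (order, log_prob).

    scores: hypothetical per-position ranking scores (assumed to come
            from some policy head; not specified in the abstract).
    k:      number of masked positions to unmask at this step.
    """
    remaining = list(range(len(scores)))
    order, log_prob = [], 0.0
    for _ in range(k):
        # Softmax over the positions that have not been picked yet.
        m = max(scores[i] for i in remaining)
        weights = [math.exp(scores[i] - m) for i in remaining]
        total = sum(weights)
        pick = rng.choices(range(len(remaining)), weights=weights)[0]
        log_prob += math.log(weights[pick] / total)
        order.append(remaining.pop(pick))
    return order, log_prob

def order_log_prob(scores, order):
    """Log-probability of a given partial ranking under Plackett-Luce."""
    remaining = set(range(len(scores)))
    log_prob = 0.0
    for i in order:
        m = max(scores[j] for j in remaining)
        log_z = m + math.log(sum(math.exp(scores[j] - m) for j in remaining))
        log_prob += scores[i] - log_z
        remaining.remove(i)
    return log_prob
```

Calling `sample_unmask_order(scores, k, random.Random(0))` returns the chosen positions in the order they were drawn, together with the log-probability of that draw; `order_log_prob` recomputes the same value for a stored trajectory.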

From Target Tracking to Targeting Track -- Part II: Regularized Polynomial Trajectory Optimization

Feb 22, 2025

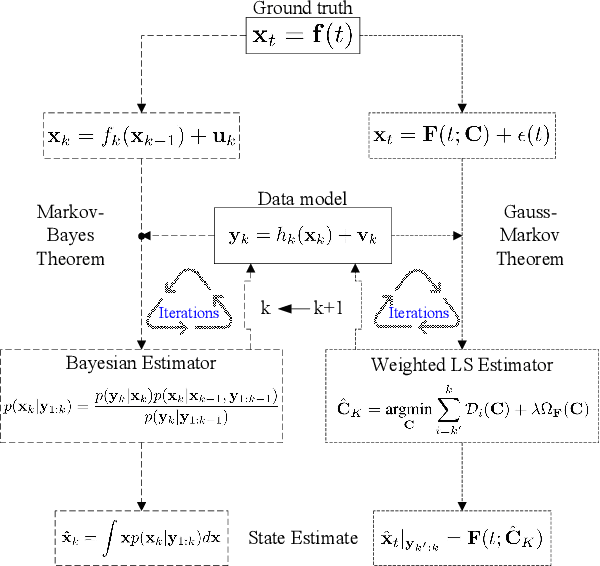

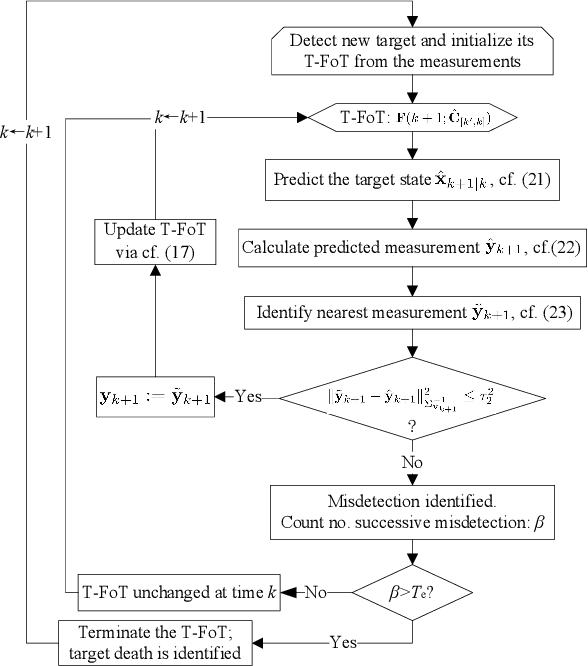

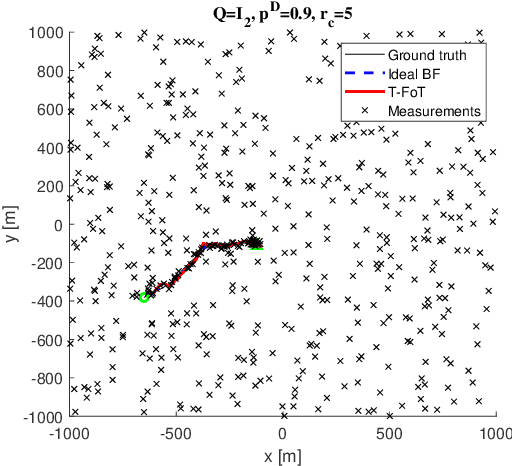

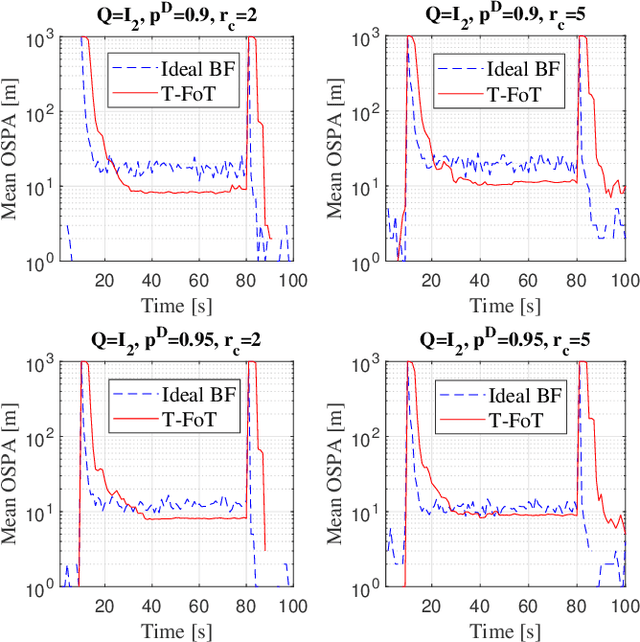

Abstract:Target tracking entails the estimation of the evolution of the target state over time, namely the target trajectory. Different from the classical state space model, our series of studies, including this paper, model the collection of the target state as a stochastic process (SP) that is further decomposed into a deterministic part, which represents the trend of the trajectory, and a residual SP representing the residual fitting error. Subsequently, the tracking problem is formulated as a learning problem regarding the trajectory SP, for which a key part is to estimate the trajectory function of time (T-FoT) that best fits the time series of measurements. For this purpose, we consider the polynomial T-FoT and address the regularized polynomial T-FoT optimization by employing two distinct regularization strategies that seek a trade-off between accuracy and simplicity. One limits the order of the polynomial, and the best choice is then determined by grid search in a narrow, bounded range, while the other adopts $\ell_0$ norm regularization, for which the hybrid Newton solver is employed. Simulation results obtained in both single and multiple maneuvering target scenarios demonstrate the effectiveness of our approaches.
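The first regularization strategy (bounding the polynomial order and grid-searching over it) can be sketched as follows. This is an illustrative toy for a scalar trajectory, not the paper's estimator: the cost `squared error + lam * order`, the default `max_order` and `lam`, and all function names are assumptions.

```python
def polyfit_ls(ts, zs, order):
    """Least-squares coefficients c[0] + c[1]*t + ... via the normal equations."""
    n = order + 1
    # Build A^T A and A^T z for the Vandermonde design matrix A.
    ata = [[sum(t ** (i + j) for t in ts) for j in range(n)] for i in range(n)]
    atz = [sum(z * t ** i for t, z in zip(ts, zs)) for i in range(n)]
    aug = [row + [b] for row, b in zip(ata, atz)]
    # Gaussian elimination with partial pivoting.
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        for r in range(col + 1, n):
            f = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= f * aug[col][c]
    # Back substitution.
    coef = [0.0] * n
    for r in reversed(range(n)):
        s = sum(aug[r][c] * coef[c] for c in range(r + 1, n))
        coef[r] = (aug[r][n] - s) / aug[r][r]
    return coef

def evaluate(coef, t):
    """Evaluate the fitted polynomial trajectory at time t."""
    return sum(c * t ** i for i, c in enumerate(coef))

def select_order(ts, zs, max_order=4, lam=0.5):
    """Grid search over orders 0..max_order with an assumed order penalty."""
    best = None
    for order in range(max_order + 1):
        coef = polyfit_ls(ts, zs, order)
        sse = sum((evaluate(coef, t) - z) ** 2 for t, z in zip(ts, zs))
        cost = sse + lam * order  # accuracy vs. simplicity trade-off
        if best is None or cost < best[0]:
            best = (cost, order, coef)
    return best[1], best[2]
```

The normal-equation solver is only adequate for the low orders and short windows used here; a production fit would use a numerically stabler routine such as a QR-based least-squares solver.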

Self-Guidance: Boosting Flow and Diffusion Generation on Their Own

Dec 08, 2024

Abstract:Proper guidance strategies are essential to get optimal generation results without re-training diffusion and flow-based text-to-image models. However, existing guidance methods require either specific training or strong inductive biases of neural network architectures, potentially limiting their applications. To address these issues, in this paper, we introduce Self-Guidance (SG), a strong diffusion guidance that neither needs specific training nor requires certain forms of neural network architectures. Different from previous approaches, Self-Guidance calculates the guidance vectors by measuring the difference between the velocities of two successive diffusion timesteps. Therefore, SG can be readily applied to both conditional and unconditional models with flexible network architectures. We conduct intensive experiments on both text-to-image and text-to-video generation across flexible architectures, including UNet-based models and diffusion transformer-based models. On current state-of-the-art diffusion models such as Stable Diffusion 3.5 and FLUX, SG significantly boosts image generation performance in terms of FID and Human Preference Score. Moreover, we find that SG has a surprisingly positive effect on the generation of high-quality human body parts such as hands, faces, and arms, showing strong potential to overcome traditional challenges in human body generation with minimal effort. We will release our implementation of SG on SD 3.5 and FLUX models along with this paper.

Schedule On the Fly: Diffusion Time Prediction for Faster and Better Image Generation

Dec 02, 2024

Abstract:Diffusion and flow models have achieved remarkable successes in various applications such as text-to-image generation. However, these models typically rely on the same predetermined denoising schedules during inference for each prompt, which potentially limits the inference efficiency as well as the flexibility when handling different prompts. In this paper, we argue that the optimal noise schedule should adapt to each inference instance, and introduce the Time Prediction Diffusion Model (TPDM) to accomplish this. TPDM employs a plug-and-play Time Prediction Module (TPM) that predicts the next noise level based on current latent features at each denoising step. We train the TPM using reinforcement learning, aiming to maximize a reward that discounts the final image quality by the number of denoising steps. With such an adaptive scheduler, TPDM not only generates high-quality images that are aligned closely with human preferences but also adjusts the number of denoising steps and time on the fly, enhancing both performance and efficiency. We train TPDMs on multiple diffusion model benchmarks. With Stable Diffusion 3 Medium architecture, TPDM achieves an aesthetic score of 5.44 and a human preference score (HPS) of 29.59, while using around 50% fewer denoising steps to achieve better performance. We will release our best model alongside this paper.

Partial-to-Full Registration based on Gradient-SDF for Computer-Assisted Orthopedic Surgery

Oct 04, 2024

Abstract:In computer-assisted orthopedic surgery (CAOS), accurate pre-operative to intra-operative bone registration is an essential and critical requirement for providing navigational guidance. This registration process is challenging since the intra-operative 3D points are sparse, only partially overlap with the pre-operative model, and are disturbed by noise and outliers. The commonly used method in current state-of-the-art orthopedic robotic systems is bony-landmark-based registration, but it is very time-consuming for the surgeons. To address these issues, we propose a novel partial-to-full registration framework based on gradient-SDF for CAOS. The simulation experiments using bone models from publicly available datasets and the phantom experiments performed under both optical tracking and electromagnetic tracking systems demonstrate that the proposed method can provide more accurate results than standard benchmarks and is robust to 90% outliers. Importantly, our method achieves convergence in less than 1 second in real scenarios and mean target registration error values as low as 2.198 mm for the entire bone model. Finally, it only requires random acquisition of points for registration by moving a surgical probe over the bone surface without correspondence with any specific bony landmarks, thus showing significant potential clinical value.

InstructRL4Pix: Training Diffusion for Image Editing by Reinforcement Learning

Jun 14, 2024

Abstract:Instruction-based image editing has made great progress in using natural human language to manipulate the visual content of images. However, existing models are limited by the quality of the dataset and cannot accurately localize editing regions in images with complex object relationships. In this paper, we propose the Reinforcement Learning Guided Image Editing Method (InstructRL4Pix) to train a diffusion model to generate images that are guided by the attention maps of the target object. Our method maximizes the output of the reward model by calculating the distance between attention maps as a reward function and fine-tuning the diffusion model using proximal policy optimization (PPO). We evaluate our model in object insertion, removal, replacement, and transformation. Experimental results show that InstructRL4Pix breaks through the limitations of traditional datasets and uses unsupervised learning to optimize editing goals and achieve accurate image editing based on natural human commands.

Arithmetic Average Density Fusion -- Part IV: Distributed Heterogeneous Fusion of RFS and LRFS Filters via Variational Approximation

Jan 31, 2024

Abstract:This paper, the fourth part of a series of papers on the arithmetic average (AA) density fusion approach and its application for target tracking, addresses the intricate challenge of distributed heterogeneous multisensor multitarget tracking, where each inter-connected sensor operates a probability hypothesis density (PHD) filter, a multiple Bernoulli (MB) filter or a labeled MB (LMB) filter and they cooperate with each other via information fusion. Earlier papers in this series have proven that the proper AA fusion of these filters is all exactly built on averaging their respective unlabeled/labeled PHDs. Based on this finding, two PHD-AA fusion approaches are proposed via variational minimization of the upper bound of the Kullback-Leibler divergence between the local and multi-filter averaged PHDs subject to cardinality consensus based on the Gaussian mixture implementation, enabling heterogeneous filter cooperation. One focuses solely on fitting the weights of the local Gaussian components (L-GCs), while the other simultaneously fits all the parameters of the L-GCs at each sensor, both seeking average consensus on the unlabeled PHD, irrespective of the specific posterior form of the local filters. For the distributed peer-to-peer communication, both the classic consensus and flooding paradigms have been investigated. Simulations have demonstrated the effectiveness and flexibility of the proposed approaches in both homogeneous and heterogeneous scenarios.
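As background for the "averaging PHDs" idea, here is a minimal sketch of plain arithmetic-average fusion of Gaussian-mixture PHDs. It is not the variational weight- or parameter-fitting approach proposed in the paper; it only shows the averaged PHD that such fitting would target. Scalar Gaussians, the `(weight, mean, variance)` tuple layout, and the function names are assumptions for illustration.

```python
def aa_fuse(local_mixtures, fusion_weights):
    """Arithmetic-average fusion of Gaussian-mixture PHDs.

    local_mixtures: one list per sensor of (weight, mean, variance) tuples.
    fusion_weights: non-negative sensor weights that sum to one.
    """
    if abs(sum(fusion_weights) - 1.0) > 1e-9:
        raise ValueError("fusion weights must sum to one")
    fused = []
    for omega, mixture in zip(fusion_weights, local_mixtures):
        # Averaging densities keeps every component unchanged and only
        # rescales its weight by the sensor's fusion weight.
        fused.extend((omega * w, mean, var) for w, mean, var in mixture)
    return fused

def expected_cardinality(mixture):
    """The integral of a GM-PHD equals the sum of its component weights."""
    return sum(w for w, _, _ in mixture)
```

Because the fused mixture is a weighted union of the local components, its expected target count is the same weighted average of the local counts; in practice the union would then be pruned and merged to keep the number of components bounded.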

Heterogeneous Unlabeled and Labeled RFS Filter Fusion for Scalable Multisensor Multitarget Tracking

Mar 12, 2023

Abstract:This paper proposes a heterogeneous density fusion approach to scalable multisensor multitarget tracking where the local, inter-connected sensors run different types of random finite set (RFS) filters according to their respective capacity and need. They result in heterogeneous multitarget densities that are to be fused with each other in a proper manner for more robust and accurate detection and localization of the targets. Our recent work has exposed a key common property of effective arithmetic average (AA) fusion approaches to both unlabeled and labeled RFS filters, which are all built on averaging their relevant unlabeled/labeled probability hypothesis densities (PHDs). Thanks to this, this paper proposes the first ever heterogeneous unlabeled and labeled RFS filter cooperation approach based on Gaussian mixture implementations where the local Gaussian components (L-GCs) are so optimized that the resulting unlabeled PHDs best fit their AA, regardless of the specific type of the local densities. To this end, a computationally efficient, approximate approach is proposed which only revises the weights of the L-GCs, keeping the other parameters of the L-GCs unchanged. In particular, the PHD filter and the unlabeled and labeled multi-Bernoulli (MB/LMB) filters are considered. Simulations have demonstrated the effectiveness of the proposed approach for both homogeneous and heterogeneous fusion of the PHD-MB-LMB filters in different configurations.

Multi-sensor Suboptimal Fusion Student's $t$ Filter

Apr 23, 2022

Abstract:A multi-sensor fusion Student's $t$ filter is proposed for time-series recursive estimation in the presence of heavy-tailed process and measurement noises. Derived from an information-theoretic optimization, the approach extends the single-sensor Student's $t$ Kalman filter based on the suboptimal arithmetic average (AA) fusion approach. To ensure computationally efficient, closed-form $t$ density recursion, reasonable approximations have been used in both local-sensor filtering and inter-sensor fusion calculation. The overall framework accommodates any Gaussian-oriented fusion approach such as the covariance intersection (CI). Simulation demonstrates the effectiveness of the proposed multi-sensor AA fusion-based $t$ filter in dealing with outliers as compared with the classic Gaussian estimator, and the advantage of the AA fusion in comparison with the CI approach and the augmented measurement fusion.

A Computationally Efficient Approach to Non-cooperative Target Detection and Tracking with Almost No A-priori Information

Apr 20, 2021

Abstract:This paper addresses the problem of real-time detection and tracking of a non-cooperative target in the challenging scenario with almost no a-priori information about target birth, death, dynamics and detection probability. Furthermore, there are false and missing data at unknown yet low rates in the measurements. The only information given in advance is about the target-measurement model and the constraint that there is no more than one target in the scenario. To solve these challenges, we model the movement of the target by using a trajectory function of time (T-FoT). Data-driven T-FoT initiation and termination strategies are proposed for identifying the (re-)appearance and disappearance of the target. During the existence of the target, real target measurements are distinguished from clutter if the target indeed exists and is detected, in order to update the T-FoT at each scan, for which we design a least-squares estimator. Simulations using either linear or nonlinear systems are conducted to demonstrate the effectiveness of our approach in comparison with the Bayes optimal Bernoulli filters. The results show that our approach is comparable to the perfectly-modeled filters and even outperforms them in some cases, while requiring much less a-priori information and computing much faster.
