Theodor Cimpeanu

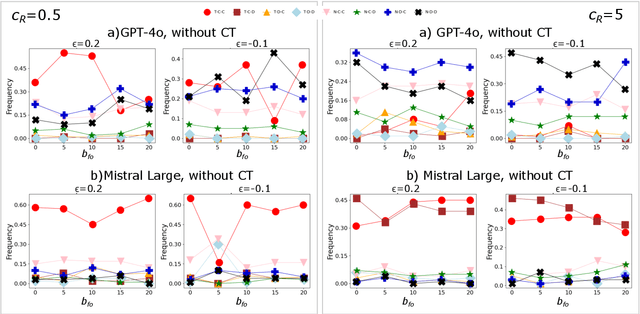

Do LLMs trust AI regulation? Emerging behaviour of game-theoretic LLM agents

Apr 11, 2025

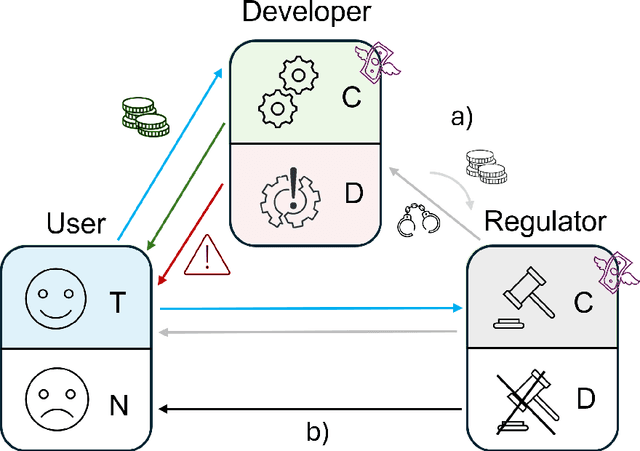

Abstract: There is general agreement that fostering trust and cooperation within the AI development ecosystem is essential to promote the adoption of trustworthy AI systems. By embedding Large Language Model (LLM) agents within an evolutionary game-theoretic framework, this paper investigates the complex interplay between AI developers, regulators and users, modelling their strategic choices under different regulatory scenarios. Evolutionary game theory (EGT) is used to quantitatively model the dilemmas faced by each actor, and LLMs provide additional degrees of complexity and nuances and enable repeated games and incorporation of personality traits. Our research identifies emerging behaviours of strategic AI agents, which tend to adopt more "pessimistic" (not trusting and defective) stances than pure game-theoretic agents. We observe that, in case of full trust by users, incentives are effective to promote effective regulation; however, conditional trust may deteriorate the "social pact". Establishing a virtuous feedback between users' trust and regulators' reputation thus appears to be key to nudge developers towards creating safe AI. However, the level at which this trust emerges may depend on the specific LLM used for testing. Our results thus provide guidance for AI regulation systems, and help predict the outcome of strategic LLM agents, should they be used to aid regulation itself.

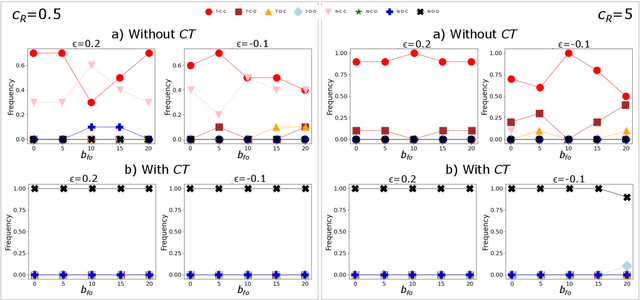

Media and responsible AI governance: a game-theoretic and LLM analysis

Mar 12, 2025

Abstract: This paper investigates the complex interplay between AI developers, regulators, users, and the media in fostering trustworthy AI systems. Using evolutionary game theory and large language models (LLMs), we model the strategic interactions among these actors under different regulatory regimes. The research explores two key mechanisms for achieving responsible governance, safe AI development and adoption of safe AI: incentivising effective regulation through media reporting, and conditioning user trust on commentariats' recommendation. The findings highlight the crucial role of the media in providing information to users, potentially acting as a form of "soft" regulation by investigating developers or regulators, as a substitute to institutional AI regulation (which is still absent in many regions). Both game-theoretic analysis and LLM-based simulations reveal conditions under which effective regulation and trustworthy AI development emerge, emphasising the importance of considering the influence of different regulatory regimes from an evolutionary game-theoretic perspective. The study concludes that effective governance requires managing incentives and costs for high quality commentaries.

Multi-Agent Risks from Advanced AI

Feb 19, 2025

Abstract: The rapid development of advanced AI agents and the imminent deployment of many instances of these agents will give rise to multi-agent systems of unprecedented complexity. These systems pose novel and under-explored risks. In this report, we provide a structured taxonomy of these risks by identifying three key failure modes (miscoordination, conflict, and collusion) based on agents' incentives, as well as seven key risk factors (information asymmetries, network effects, selection pressures, destabilising dynamics, commitment problems, emergent agency, and multi-agent security) that can underpin them. We highlight several important instances of each risk, as well as promising directions to help mitigate them. By anchoring our analysis in a range of real-world examples and experimental evidence, we illustrate the distinct challenges posed by multi-agent systems and their implications for the safety, governance, and ethics of advanced AI.

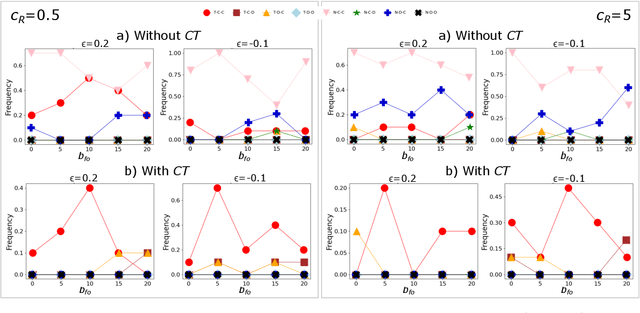

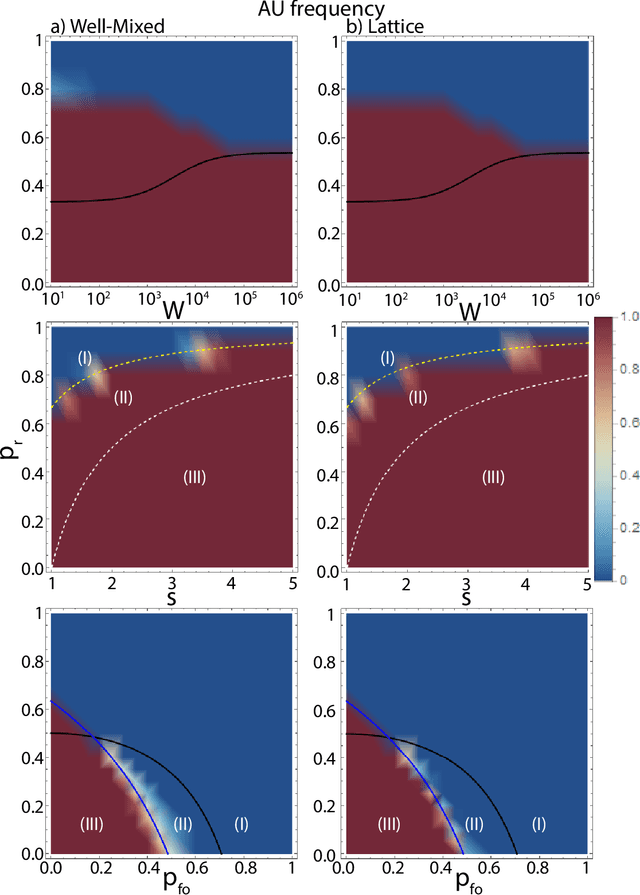

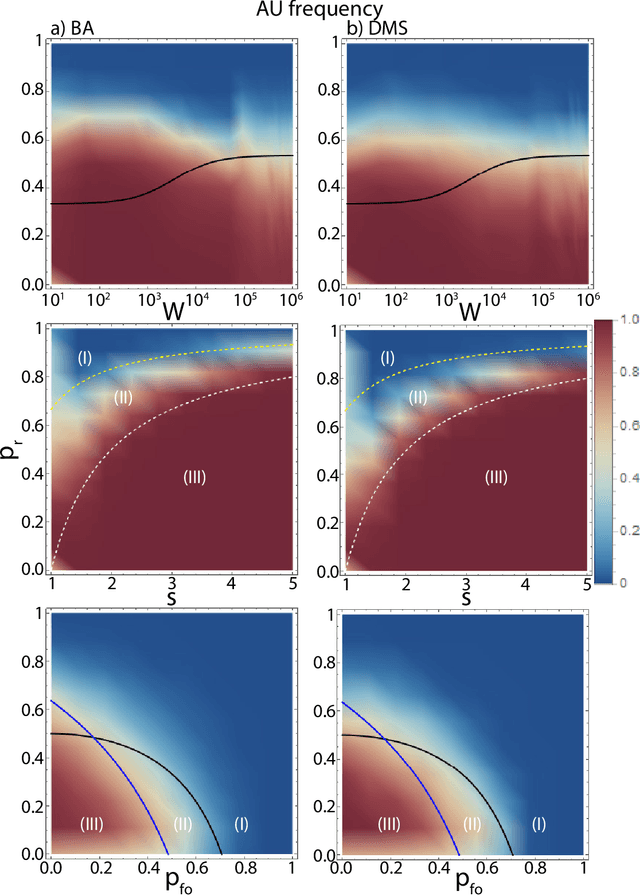

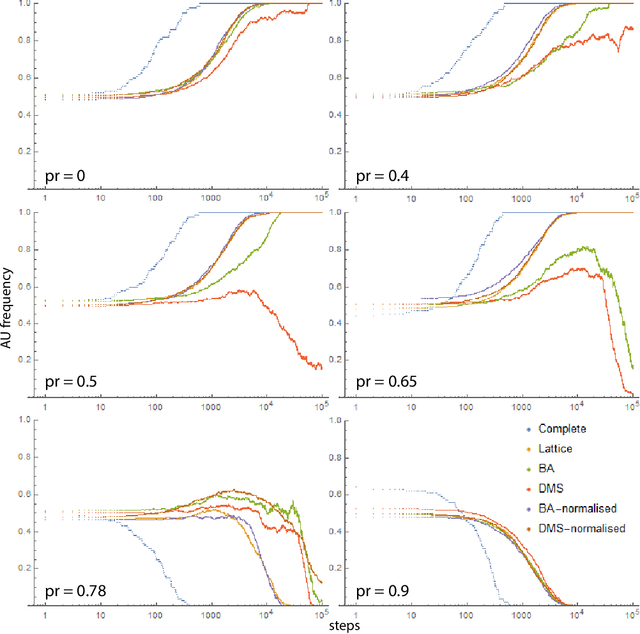

Trust AI Regulation? Discerning users are vital to build trust and effective AI regulation

Mar 14, 2024

Abstract: There is general agreement that some form of regulation is necessary both for AI creators to be incentivised to develop trustworthy systems, and for users to actually trust those systems. But there is much debate about what form these regulations should take and how they should be implemented. Most work in this area has been qualitative, and has not been able to make formal predictions. Here, we propose that evolutionary game theory can be used to quantitatively model the dilemmas faced by users, AI creators, and regulators, and provide insights into the possible effects of different regulatory regimes. We show that creating trustworthy AI and user trust requires regulators to be incentivised to regulate effectively. We demonstrate the effectiveness of two mechanisms that can achieve this. The first is where governments can recognise and reward regulators that do a good job. In that case, if the AI system is not too risky for users then some level of trustworthy development and user trust evolves. We then consider an alternative solution, where users can condition their trust decision on the effectiveness of the regulators. This leads to effective regulation, and consequently the development of trustworthy AI and user trust, provided that the cost of implementing regulations is not too high. Our findings highlight the importance of considering the effect of different regulatory regimes from an evolutionary game theoretic perspective.

Co-evolution of Social and Non-Social Guilt

Feb 20, 2023

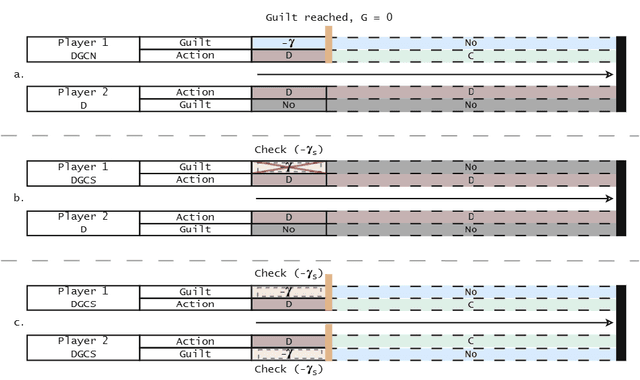

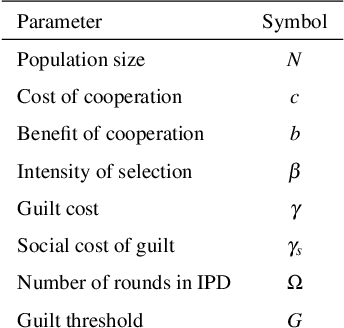

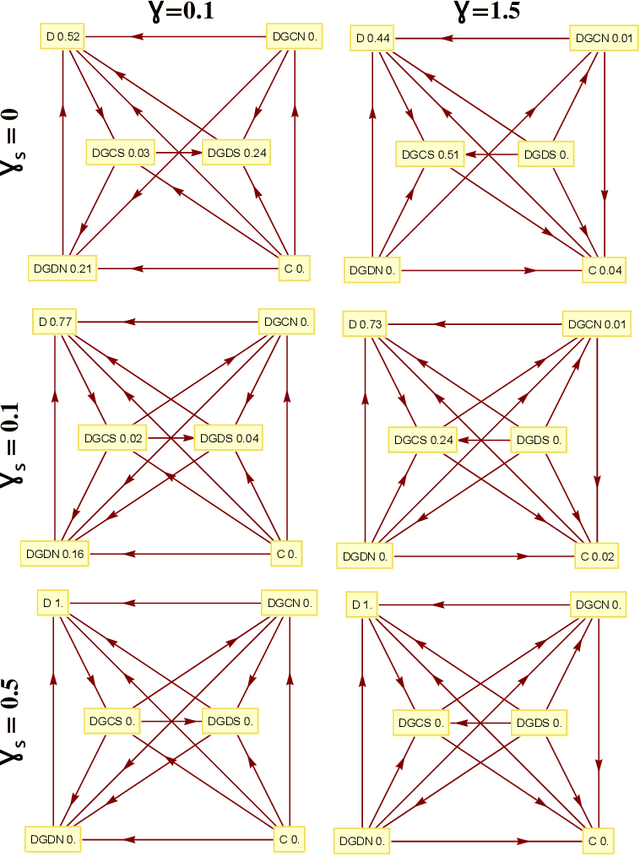

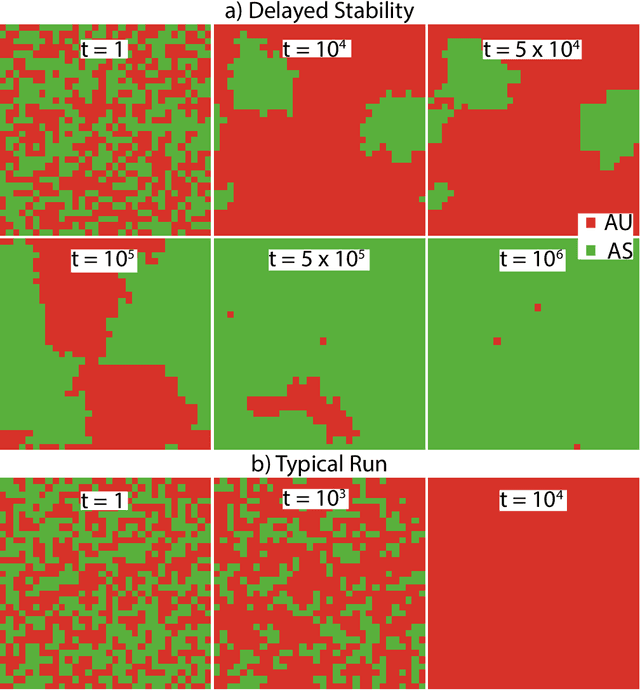

Abstract: Building ethical machines may involve bestowing upon them the emotional capacity to self-evaluate and repent on their actions. While reparative measures, such as apologies, are often considered as possible strategic interactions, the explicit evolution of the emotion of guilt as a behavioural phenotype is not yet well understood. Here, we study the co-evolution of social and non-social guilt of homogeneous or heterogeneous populations, including well-mixed, lattice and scale-free networks. Socially aware guilt comes at a cost, as it requires agents to make demanding efforts to observe and understand the internal state and behaviour of others, while non-social guilt only requires the awareness of the agents' own state and hence incurs no social cost. Those choosing to be non-social are however more sensitive to exploitation by other agents due to their social unawareness. Resorting to methods from evolutionary game theory, we study analytically, and through extensive numerical and agent-based simulations, whether and how such social and non-social guilt can evolve and deploy, depending on the underlying structure of the populations, or systems, of agents. The results show that, in both lattice and scale-free networks, emotional guilt prone strategies are dominant for a larger range of the guilt and social costs incurred, compared to the well-mixed population setting, leading therefore to significantly higher levels of cooperation for a wider range of the costs. In structured population settings, both social and non-social guilt can evolve and deploy through clustering with emotional prone strategies, allowing them to be protected from exploiters, especially in case of non-social (less costly) strategies. Overall, our findings provide important insights into the design and engineering of self-organised and distributed cooperative multi-agent systems.

Social Diversity Reduces the Complexity and Cost of Fostering Fairness

Nov 18, 2022

Abstract: Institutions and investors are constantly faced with the challenge of appropriately distributing endowments. No budget is limitless and optimising overall spending without sacrificing positive outcomes has been approached and resolved using several heuristics. To date, prior works have failed to consider how to encourage fairness in a population where social diversity is ubiquitous, and in which investors can only partially observe the population. Herein, by incorporating social diversity in the Ultimatum game through heterogeneous graphs, we investigate the effects of several interference mechanisms which assume incomplete information and flexible standards of fairness. We quantify the role of diversity and show how it reduces the need for information gathering, allowing us to relax a strict, costly interference process. Furthermore, we find that the influence of certain individuals, expressed by different network centrality measures, can be exploited to further reduce spending if minimal fairness requirements are lowered. Our results indicate that diversity changes and opens up novel mechanisms available to institutions wishing to promote fairness. Overall, our analysis provides novel insights to guide institutional policies in socially diverse complex systems.

AI Development Race Can Be Mediated on Heterogeneous Networks

Dec 30, 2020

Abstract: The field of Artificial Intelligence (AI) has been introducing a certain level of anxiety in research, business and also policy. Tensions are further heightened by an AI race narrative which makes many stakeholders fear that they might be missing out. Whether real or not, a belief in this narrative may be detrimental as some stakeholders will feel obliged to cut corners on safety precautions or ignore societal consequences. Starting from a game-theoretical model describing an idealised technology race in a well-mixed world, here we investigate how different interaction structures among race participants can alter collective choices and requirements for regulatory actions. Our findings indicate that, when participants portray a strong diversity in terms of connections and peer-influence (e.g., when scale-free networks shape interactions among parties), the conflicts that exist in homogeneous settings are significantly reduced, thereby lessening the need for regulatory actions. Furthermore, our results suggest that technology governance and regulation may profit from the world's patent heterogeneity and inequality among firms and nations to design and implement meticulous interventions on a minority of participants capable of influencing an entire population towards an ethical and sustainable use of AI.
