Alessandro Di Stefano

When Numbers Start Talking: Implicit Numerical Coordination Among LLM-Based Agents

Jan 07, 2026

Abstract:LLM-based agents increasingly operate in multi-agent environments where strategic interaction and coordination are required. While existing work has largely focused on individual agents or on interacting agents sharing explicit communication, less is known about how interacting agents coordinate implicitly. In particular, agents may engage in covert communication, relying on indirect or non-linguistic signals embedded in their actions rather than on explicit messages. This paper presents a game-theoretic study of covert communication in LLM-driven multi-agent systems. We analyse interactions across four canonical game-theoretic settings under different communication regimes, including explicit, restricted, and absent communication. Considering heterogeneous agent personalities and both one-shot and repeated games, we characterise when covert signals emerge and how they shape coordination and strategic outcomes.

KERAIA: An Adaptive and Explainable Framework for Dynamic Knowledge Representation and Reasoning

May 07, 2025

Abstract:In this paper, we introduce KERAIA, a novel framework and software platform for symbolic knowledge engineering designed to address the persistent challenges of representing, reasoning with, and executing knowledge in dynamic, complex, and context-sensitive environments. The central research question that motivates this work is: How can unstructured, often tacit, human expertise be effectively transformed into computationally tractable algorithms that AI systems can efficiently utilise? KERAIA seeks to bridge this gap by building on foundational concepts such as Minsky's frame-based reasoning and K-lines, while introducing significant innovations. These include Clouds of Knowledge for dynamic aggregation, Dynamic Relations (DRels) for context-sensitive inheritance, explicit Lines of Thought (LoTs) for traceable reasoning, and Cloud Elaboration for adaptive knowledge transformation. This approach moves beyond the limitations of traditional, often static, knowledge representation paradigms. KERAIA is designed with Explainable AI (XAI) as a core principle, ensuring transparency and interpretability, particularly through the use of LoTs. The paper details the framework's architecture, the KSYNTH representation language, and the General Purpose Paradigm Builder (GPPB) to integrate diverse inference methods within a unified structure. We validate KERAIA's versatility, expressiveness, and practical applicability through detailed analysis of multiple case studies spanning naval warfare simulation, industrial diagnostics in water treatment plants, and strategic decision-making in the game of RISK. Furthermore, we provide a comparative analysis against established knowledge representation paradigms (including ontologies, rule-based systems, and knowledge graphs) and discuss the implementation aspects and computational considerations of the KERAIA platform.

FAIRGAME: a Framework for AI Agents Bias Recognition using Game Theory

Apr 22, 2025

Abstract:Letting AI agents interact in multi-agent applications adds a layer of complexity to the interpretability and prediction of AI outcomes, with profound implications for their trustworthy adoption in research and society. Game theory offers powerful models to capture and interpret strategic interaction among agents, but requires the support of reproducible, standardized and user-friendly IT frameworks to enable comparison and interpretation of results. To this end, we present FAIRGAME, a Framework for AI Agents Bias Recognition using Game Theory. We describe its implementation and usage, and we employ it to uncover biased outcomes in popular games among AI agents, depending on the employed Large Language Model (LLM) and used language, as well as on the personality trait or strategic knowledge of the agents. Overall, FAIRGAME allows users to reliably and easily simulate their desired games and scenarios and compare the results across simulation campaigns and with game-theoretic predictions, enabling the systematic discovery of biases, the anticipation of emerging behavior out of strategic interplays, and empowering further research into strategic decision-making using LLM agents.

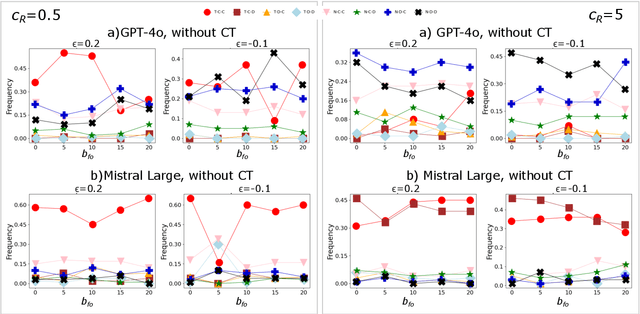

Do LLMs trust AI regulation? Emerging behaviour of game-theoretic LLM agents

Apr 11, 2025

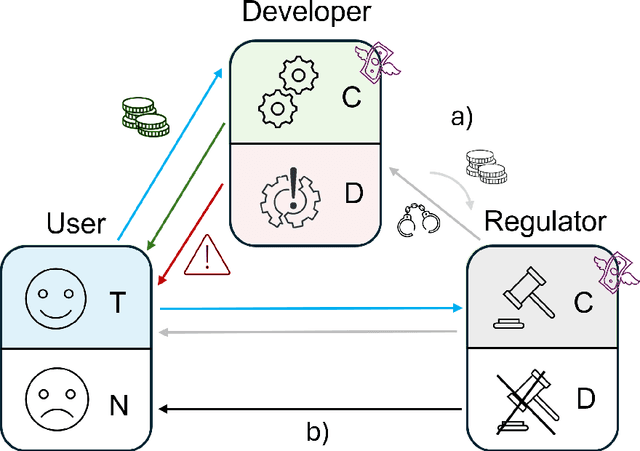

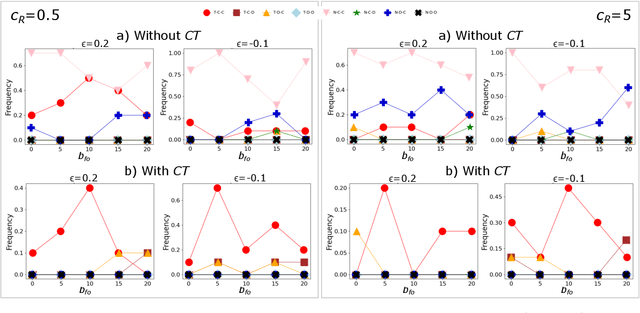

Abstract:There is general agreement that fostering trust and cooperation within the AI development ecosystem is essential to promote the adoption of trustworthy AI systems. By embedding Large Language Model (LLM) agents within an evolutionary game-theoretic framework, this paper investigates the complex interplay between AI developers, regulators and users, modelling their strategic choices under different regulatory scenarios. Evolutionary game theory (EGT) is used to quantitatively model the dilemmas faced by each actor, and LLMs provide additional degrees of complexity and nuances and enable repeated games and incorporation of personality traits. Our research identifies emerging behaviours of strategic AI agents, which tend to adopt more "pessimistic" (not trusting and defective) stances than pure game-theoretic agents. We observe that, in case of full trust by users, incentives are effective to promote effective regulation; however, conditional trust may deteriorate the "social pact". Establishing a virtuous feedback between users' trust and regulators' reputation thus appears to be key to nudge developers towards creating safe AI. However, the level at which this trust emerges may depend on the specific LLM used for testing. Our results thus provide guidance for AI regulation systems, and help predict the outcome of strategic LLM agents, should they be used to aid regulation itself.

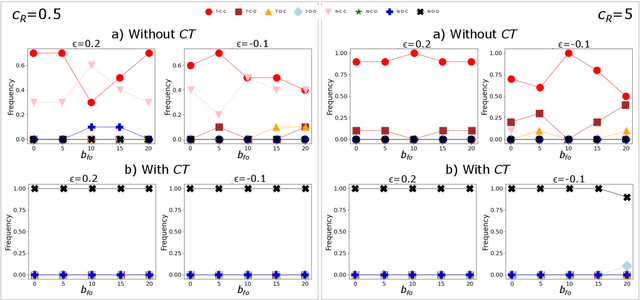

Media and responsible AI governance: a game-theoretic and LLM analysis

Mar 12, 2025

Abstract:This paper investigates the complex interplay between AI developers, regulators, users, and the media in fostering trustworthy AI systems. Using evolutionary game theory and large language models (LLMs), we model the strategic interactions among these actors under different regulatory regimes. The research explores two key mechanisms for achieving responsible governance, safe AI development and adoption of safe AI: incentivising effective regulation through media reporting, and conditioning user trust on commentariats' recommendation. The findings highlight the crucial role of the media in providing information to users, potentially acting as a form of "soft" regulation by investigating developers or regulators, as a substitute for institutional AI regulation (which is still absent in many regions). Both game-theoretic analysis and LLM-based simulations reveal conditions under which effective regulation and trustworthy AI development emerge, emphasising the importance of considering the influence of different regulatory regimes from an evolutionary game-theoretic perspective. The study concludes that effective governance requires managing incentives and costs for high-quality commentaries.

Quantifying detection rates for dangerous capabilities: a theoretical model of dangerous capability evaluations

Dec 19, 2024

Abstract:We present a quantitative model for tracking dangerous AI capabilities over time. Our goal is to help the policy and research community visualise how dangerous capability testing can give us an early warning about approaching AI risks. We first use the model to provide a novel introduction to dangerous capability testing and how this testing can directly inform policy. Decision makers in AI labs and government often set policy that is sensitive to the estimated danger of AI systems, and may wish to set policies that condition on the crossing of a set threshold for danger. The model helps us to reason about these policy choices. We then run simulations to illustrate how we might fail to test for dangerous capabilities. To summarise, failures in dangerous capability testing may manifest in two ways: higher bias in our estimates of AI danger, or larger lags in threshold monitoring. We highlight two drivers of these failure modes: uncertainty around dynamics in AI capabilities and competition between frontier AI labs. Effective AI policy demands that we address these failure modes and their drivers. Even if the optimal targeting of resources is challenging, we show how delays in testing can harm AI policy. We offer preliminary recommendations for building an effective testing ecosystem for dangerous capabilities and advise on a research agenda.

Trust AI Regulation? Discerning users are vital to build trust and effective AI regulation

Mar 14, 2024

Abstract:There is general agreement that some form of regulation is necessary both for AI creators to be incentivised to develop trustworthy systems, and for users to actually trust those systems. But there is much debate about what form these regulations should take and how they should be implemented. Most work in this area has been qualitative, and has not been able to make formal predictions. Here, we propose that evolutionary game theory can be used to quantitatively model the dilemmas faced by users, AI creators, and regulators, and provide insights into the possible effects of different regulatory regimes. We show that creating trustworthy AI and user trust requires regulators to be incentivised to regulate effectively. We demonstrate the effectiveness of two mechanisms that can achieve this. The first is where governments can recognise and reward regulators that do a good job. In that case, if the AI system is not too risky for users then some level of trustworthy development and user trust evolves. We then consider an alternative solution, where users can condition their trust decision on the effectiveness of the regulators. This leads to effective regulation, and consequently the development of trustworthy AI and user trust, provided that the cost of implementing regulations is not too high. Our findings highlight the importance of considering the effect of different regulatory regimes from an evolutionary game theoretic perspective.

Both eyes open: Vigilant Incentives help Regulatory Markets improve AI Safety

Mar 06, 2023

Abstract:In the context of rapid discoveries by leaders in AI, governments must consider how to design regulation that matches the increasing pace of new AI capabilities. Regulatory Markets for AI is a proposal designed with adaptability in mind. It involves governments setting outcome-based targets for AI companies to achieve, which they can show by purchasing services from a market of private regulators. We use an evolutionary game theory model to explore the role governments can play in building a Regulatory Market for AI systems that deters reckless behaviour. We warn that it is alarmingly easy to stumble on incentives which would prevent Regulatory Markets from achieving this goal. These 'Bounty Incentives' only reward private regulators for catching unsafe behaviour. We argue that AI companies will likely learn to tailor their behaviour to how much effort regulators invest, discouraging regulators from innovating. Instead, we recommend that governments always reward regulators, except when they find that those regulators failed to detect unsafe behaviour that they should have. These 'Vigilant Incentives' could encourage private regulators to find innovative ways to evaluate cutting-edge AI systems.

Social Diversity Reduces the Complexity and Cost of Fostering Fairness

Nov 18, 2022

Abstract:Institutions and investors are constantly faced with the challenge of appropriately distributing endowments. No budget is limitless and optimising overall spending without sacrificing positive outcomes has been approached and resolved using several heuristics. To date, prior works have failed to consider how to encourage fairness in a population where social diversity is ubiquitous, and in which investors can only partially observe the population. Herein, by incorporating social diversity in the Ultimatum game through heterogeneous graphs, we investigate the effects of several interference mechanisms which assume incomplete information and flexible standards of fairness. We quantify the role of diversity and show how it reduces the need for information gathering, allowing us to relax a strict, costly interference process. Furthermore, we find that the influence of certain individuals, expressed by different network centrality measures, can be exploited to further reduce spending if minimal fairness requirements are lowered. Our results indicate that diversity changes and opens up novel mechanisms available to institutions wishing to promote fairness. Overall, our analysis provides novel insights to guide institutional policies in socially diverse complex systems.

GRADE: Graph Dynamic Embedding

Jul 16, 2020

Abstract:Representation learning of static and more recently dynamically evolving graphs has gained noticeable attention. Existing approaches for modelling graph dynamics focus extensively on the evolution of individual nodes independently of the evolution of mesoscale community structures. As a result, current methods do not provide useful tools to study and cannot explicitly capture temporal community dynamics. To address this challenge, we propose GRADE - a probabilistic model that learns to generate evolving node and community representations by imposing a random walk prior over their trajectories. Our model also learns node community membership which is updated between time steps via a transition matrix. At each time step link generation is performed by first assigning node membership from a distribution over the communities, and then sampling a neighbor from a distribution over the nodes for the assigned community. We parametrize the node and community distributions with neural networks and learn their parameters via variational inference. Experiments demonstrate GRADE meets or outperforms baselines in dynamic link prediction, shows favourable performance on dynamic community detection, and identifies coherent and interpretable evolving communities.
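The two-step link generation described in the GRADE abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the function name `sample_link` and the fixed probability tables `node_to_comm` and `comm_to_node` are hypothetical stand-ins for the distributions that the paper parametrizes with neural networks, and the temporal transition matrix is left out entirely.

```python
import random


def sample_link(source, node_to_comm, comm_to_node, rng):
    """Sample one link for `source` using the two-step process.

    node_to_comm[i][k]: assumed probability that node i picks community k.
    comm_to_node[k][j]: assumed probability that community k emits node j.
    """
    # Step 1: assign the source node to a community.
    communities = list(range(len(comm_to_node)))
    z = rng.choices(communities, weights=node_to_comm[source], k=1)[0]
    # Step 2: sample a neighbor from that community's distribution over nodes.
    nodes = list(range(len(comm_to_node[z])))
    neighbor = rng.choices(nodes, weights=comm_to_node[z], k=1)[0]
    return z, neighbor


# Toy example: 4 nodes, 2 communities (all values are illustrative).
rng = random.Random(0)
node_to_comm = [[0.9, 0.1], [0.8, 0.2], [0.1, 0.9], [0.2, 0.8]]
comm_to_node = [[0.5, 0.5, 0.0, 0.0], [0.0, 0.0, 0.5, 0.5]]
links = [sample_link(0, node_to_comm, comm_to_node, rng) for _ in range(5)]
```

In this toy setup each community places all of its mass on two nodes, so a sampled neighbor always belongs to the community drawn in the first step.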
