Simon T. Powers

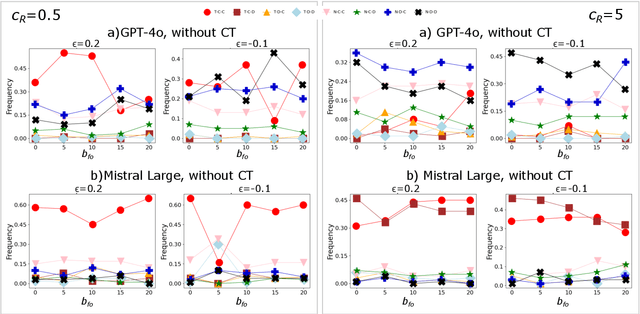

Do LLMs trust AI regulation? Emerging behaviour of game-theoretic LLM agents

Apr 11, 2025

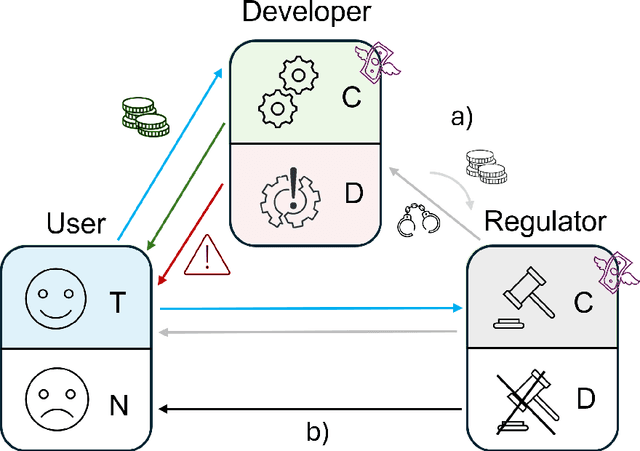

Abstract: There is general agreement that fostering trust and cooperation within the AI development ecosystem is essential to promote the adoption of trustworthy AI systems. By embedding Large Language Model (LLM) agents within an evolutionary game-theoretic framework, this paper investigates the complex interplay between AI developers, regulators and users, modelling their strategic choices under different regulatory scenarios. Evolutionary game theory (EGT) is used to quantitatively model the dilemmas faced by each actor, and LLMs provide additional degrees of complexity and nuances and enable repeated games and incorporation of personality traits. Our research identifies emerging behaviours of strategic AI agents, which tend to adopt more "pessimistic" (not trusting and defective) stances than pure game-theoretic agents. We observe that, in case of full trust by users, incentives are effective to promote effective regulation; however, conditional trust may deteriorate the "social pact". Establishing a virtuous feedback between users' trust and regulators' reputation thus appears to be key to nudge developers towards creating safe AI. However, the level at which this trust emerges may depend on the specific LLM used for testing. Our results thus provide guidance for AI regulation systems, and help predict the outcome of strategic LLM agents, should they be used to aid regulation itself.

Media and responsible AI governance: a game-theoretic and LLM analysis

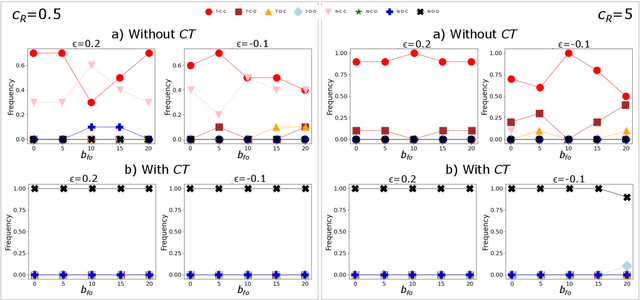

Mar 12, 2025

Abstract: This paper investigates the complex interplay between AI developers, regulators, users, and the media in fostering trustworthy AI systems. Using evolutionary game theory and large language models (LLMs), we model the strategic interactions among these actors under different regulatory regimes. The research explores two key mechanisms for achieving responsible governance, safe AI development and adoption of safe AI: incentivising effective regulation through media reporting, and conditioning user trust on commentariats' recommendation. The findings highlight the crucial role of the media in providing information to users, potentially acting as a form of "soft" regulation by investigating developers or regulators, as a substitute to institutional AI regulation (which is still absent in many regions). Both game-theoretic analysis and LLM-based simulations reveal conditions under which effective regulation and trustworthy AI development emerge, emphasising the importance of considering the influence of different regulatory regimes from an evolutionary game-theoretic perspective. The study concludes that effective governance requires managing incentives and costs for high quality commentaries.

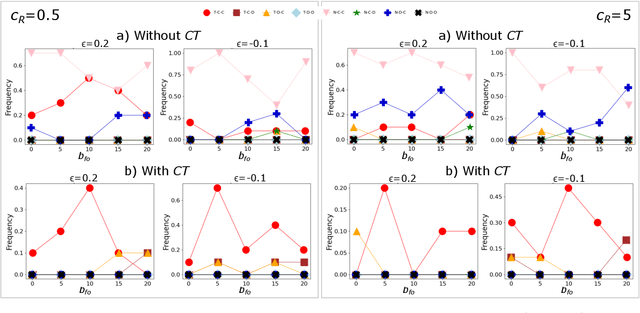

Trust AI Regulation? Discerning users are vital to build trust and effective AI regulation

Mar 14, 2024

Abstract: There is general agreement that some form of regulation is necessary both for AI creators to be incentivised to develop trustworthy systems, and for users to actually trust those systems. But there is much debate about what form these regulations should take and how they should be implemented. Most work in this area has been qualitative, and has not been able to make formal predictions. Here, we propose that evolutionary game theory can be used to quantitatively model the dilemmas faced by users, AI creators, and regulators, and provide insights into the possible effects of different regulatory regimes. We show that creating trustworthy AI and user trust requires regulators to be incentivised to regulate effectively. We demonstrate the effectiveness of two mechanisms that can achieve this. The first is where governments can recognise and reward regulators that do a good job. In that case, if the AI system is not too risky for users then some level of trustworthy development and user trust evolves. We then consider an alternative solution, where users can condition their trust decision on the effectiveness of the regulators. This leads to effective regulation, and consequently the development of trustworthy AI and user trust, provided that the cost of implementing regulations is not too high. Our findings highlight the importance of considering the effect of different regulatory regimes from an evolutionary game theoretic perspective.

Evolved Open-Endedness in Cultural Evolution: A New Dimension in Open-Ended Evolution Research

Mar 24, 2022

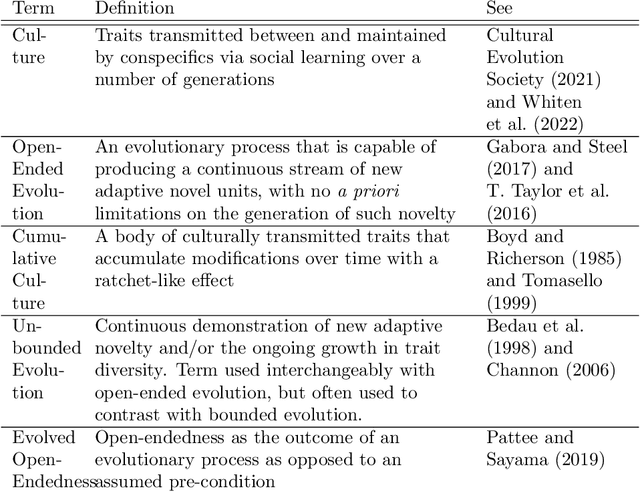

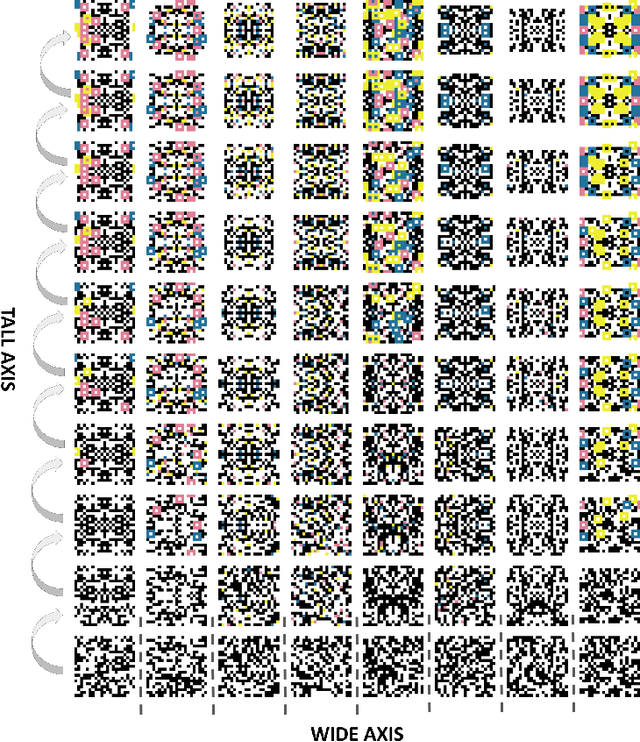

Abstract: The goal of Artificial Life research, as articulated by Chris Langton, is "to contribute to theoretical biology by locating life-as-we-know-it within the larger picture of life-as-it-could-be" (1989, p.1). The study and pursuit of open-ended evolution in artificial evolutionary systems exemplifies this goal. However, open-ended evolution research is hampered by two fundamental issues; the struggle to replicate open-endedness in an artificial evolutionary system, and the fact that we only have one system (genetic evolution) from which to draw inspiration. Here we argue that cultural evolution should be seen not only as another real-world example of an open-ended evolutionary system, but that the unique qualities seen in cultural evolution provide us with a new perspective from which we can assess the fundamental properties of, and ask new questions about, open-ended evolutionary systems, especially in regard to evolved open-endedness and transitions from bounded to unbounded evolution. Here we provide an overview of culture as an evolutionary system, highlight the interesting case of human cultural evolution as an open-ended evolutionary system, and contextualise cultural evolution under the framework of (evolved) open-ended evolution. We go on to provide a set of new questions that can be asked once we consider cultural evolution within the framework of open-ended evolution, and introduce new insights that we may be able to gain about evolved open-endedness as a result of asking these questions.

When to (or not to) trust intelligent machines: Insights from an evolutionary game theory analysis of trust in repeated games

Jul 22, 2020

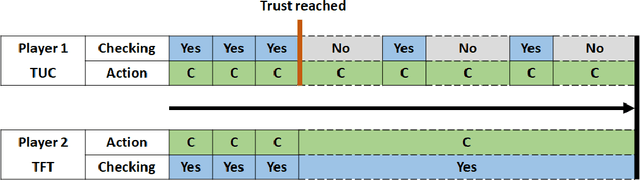

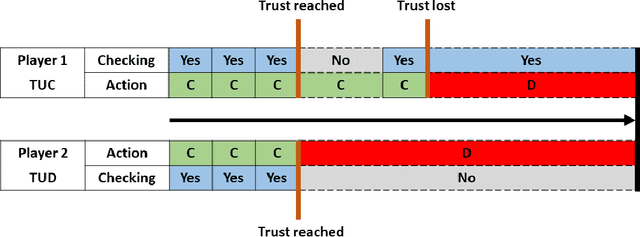

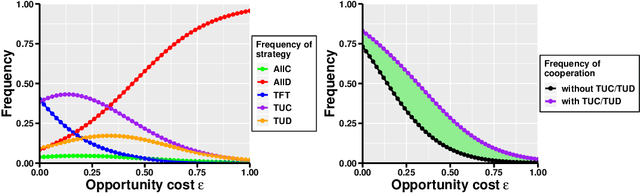

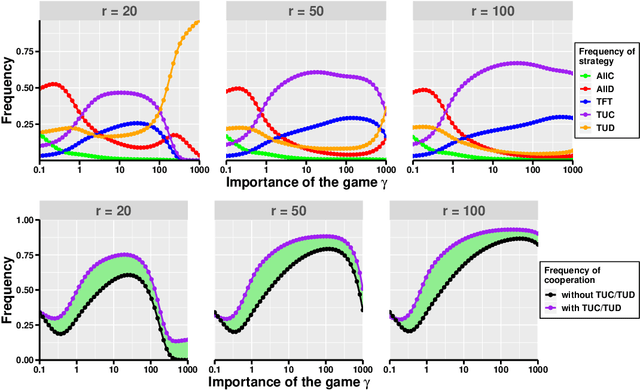

Abstract: The actions of intelligent agents, such as chatbots, recommender systems, and virtual assistants are typically not fully transparent to the user. Consequently, using such an agent involves the user exposing themselves to the risk that the agent may act in a way opposed to the user's goals. It is often argued that people use trust as a cognitive shortcut to reduce the complexity of such interactions. Here we formalise this by using the methods of evolutionary game theory to study the viability of trust-based strategies in repeated games. These are reciprocal strategies that cooperate as long as the other player is observed to be cooperating. Unlike classic reciprocal strategies, once mutual cooperation has been observed for a threshold number of rounds they stop checking their co-player's behaviour every round, and instead only check with some probability. By doing so, they reduce the opportunity cost of verifying whether the action of their co-player was actually cooperative. We demonstrate that these trust-based strategies can outcompete strategies that are always conditional, such as Tit-for-Tat, when the opportunity cost is non-negligible. We argue that this cost is likely to be greater when the interaction is between people and intelligent agents, because of the reduced transparency of the agent. Consequently, we expect people to use trust-based strategies more frequently in interactions with intelligent agents. Our results provide new, important insights into the design of mechanisms for facilitating interactions between humans and intelligent agents, where trust is an essential factor.
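The trust-based strategy described in this abstract can be sketched in a few lines of Python. This is only an illustrative reading of the abstract, not the paper's model: the function name `trust_based_play`, the parameters `threshold` and `check_prob`, and the rule that the player falls back to Tit-for-Tat-style behaviour after observing a defection are all assumptions made for this sketch.

```python
import random


def trust_based_play(opponent_moves, threshold=3, check_prob=0.25, rng=None):
    """Play a trust-based strategy against a fixed sequence of opponent moves.

    Moves are "C" (cooperate) or "D" (defect). All names and default values
    here are hypothetical; they are not taken from the paper.
    Returns (my_moves, checks), where checks counts the rounds in which the
    co-player's action was actually verified.
    """
    rng = rng or random.Random(0)
    my_moves = []
    checks = 0
    streak = 0          # consecutive rounds of observed mutual cooperation
    cooperating = True  # start by cooperating, as Tit-for-Tat does

    for opp in opponent_moves:
        my_move = "C" if cooperating else "D"
        my_moves.append(my_move)

        # Before trust is established, check every round; afterwards,
        # check only with probability check_prob.
        trusting = streak >= threshold
        check = (not trusting) or rng.random() < check_prob

        if check:
            checks += 1
            if opp == "C" and my_move == "C":
                streak += 1
            else:
                streak = 0  # assumption: any observed lapse resets trust
            cooperating = (opp == "C")  # reciprocate the observed move
        # If the round is not checked, the player keeps cooperating blindly.

    return my_moves, checks


# Against a consistent cooperator, only the first `threshold` rounds are checked.
moves, checks = trust_based_play(["C"] * 10, threshold=3, check_prob=0.0)
print(moves, checks)
```

Each skipped check saves the verification cost but leaves the player open to exploitation, which is the trade-off the abstract refers to: with `check_prob=0.0`, an opponent who defects after trust is established is never detected in this sketch.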

A hybrid artificial immune system and Self Organising Map for network intrusion detection

Aug 02, 2012

Abstract: Network intrusion detection is the problem of detecting unauthorised use of, or access to, computer systems over a network. Two broad approaches exist to tackle this problem: anomaly detection and misuse detection. An anomaly detection system is trained only on examples of normal connections, and thus has the potential to detect novel attacks. However, many anomaly detection systems simply report the anomalous activity, rather than analysing it further in order to report higher-level information that is of more use to a security officer. On the other hand, misuse detection systems recognise known attack patterns, thereby allowing them to provide more detailed information about an intrusion. However, such systems cannot detect novel attacks. A hybrid system is presented in this paper with the aim of combining the advantages of both approaches. Specifically, anomalous network connections are initially detected using an artificial immune system. Connections that are flagged as anomalous are then categorised using a Kohonen Self Organising Map, allowing higher-level information, in the form of cluster membership, to be extracted. Experimental results on the KDD 1999 Cup dataset show a low false positive rate and a detection and classification rate for Denial-of-Service and User-to-Root attacks that is higher than those in a sample of other works.

* Post-print of accepted manuscript. 32 pages and 3 figures
