The Anh Han

More at Stake: How Payoff and Language Shape LLM Agent Strategies in Cooperation Dilemmas

Jan 27, 2026

Abstract:As LLMs increasingly act as autonomous agents in interactive and multi-agent settings, understanding their strategic behavior is critical for safety, coordination, and AI-driven social and economic systems. We investigate how payoff magnitude and linguistic context shape LLM strategies in repeated social dilemmas, using a payoff-scaled Prisoner's Dilemma to isolate sensitivity to incentive strength. Across models and languages, we observe consistent behavioral patterns, including incentive-sensitive conditional strategies and cross-linguistic divergence. To interpret these dynamics, we train supervised classifiers on canonical repeated-game strategies and apply them to LLM decisions, revealing systematic, model- and language-dependent behavioral intentions, with linguistic framing sometimes matching or exceeding architectural effects. Our results provide a unified framework for auditing LLMs as strategic agents and highlight cooperation biases with direct implications for AI governance and multi-agent system design.

When Numbers Start Talking: Implicit Numerical Coordination Among LLM-Based Agents

Jan 07, 2026

Abstract:LLM-based agents increasingly operate in multi-agent environments where strategic interaction and coordination are required. While existing work has largely focused on individual agents or on interacting agents sharing explicit communication, less is known about how interacting agents coordinate implicitly. In particular, agents may engage in covert communication, relying on indirect or non-linguistic signals embedded in their actions rather than on explicit messages. This paper presents a game-theoretic study of covert communication in LLM-driven multi-agent systems. We analyse interactions across four canonical game-theoretic settings under different communication regimes, including explicit, restricted, and absent communication. Considering heterogeneous agent personalities and both one-shot and repeated games, we characterise when covert signals emerge and how they shape coordination and strategic outcomes.

Social welfare optimisation in well-mixed and structured populations

Dec 14, 2025

Abstract:Research on promoting cooperation among autonomous, self-regarding agents has often focused on the bi-objective optimisation problem: minimising the total incentive cost while maximising the frequency of cooperation. However, the optimal value of social welfare under such constraints remains largely unexplored. In this work, we hypothesise that achieving maximal social welfare is not guaranteed at the minimal incentive cost required to drive agents to a desired cooperative state. To address this gap, we adopt a single-objective approach focused on maximising social welfare, building upon foundational evolutionary game theory models that examined cost efficiency in finite populations, in both well-mixed and structured population settings. Our analytical model and agent-based simulations show how different interference strategies, including rewarding local versus global behavioural patterns, affect social welfare and dynamics of cooperation. Our results reveal a significant gap in the per-individual incentive cost between optimising for pure cost efficiency or cooperation frequency and optimising for maximal social welfare. Overall, our findings indicate that incentive design, policy, and benchmarking in multi-agent systems and human societies should prioritise welfare-centric objectives over proxy targets of cost or cooperation frequency.

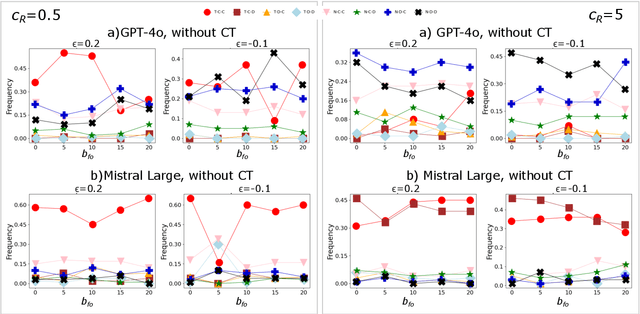

Understanding LLM Agent Behaviours via Game Theory: Strategy Recognition, Biases and Multi-Agent Dynamics

Dec 11, 2025

Abstract:As Large Language Models (LLMs) increasingly operate as autonomous decision-makers in interactive and multi-agent systems and human societies, understanding their strategic behaviour has profound implications for safety, coordination, and the design of AI-driven social and economic infrastructures. Assessing such behaviour requires methods that capture not only what LLMs output, but the underlying intentions that guide their decisions. In this work, we extend the FAIRGAME framework to systematically evaluate LLM behaviour in repeated social dilemmas through two complementary advances: a payoff-scaled Prisoner's Dilemma isolating sensitivity to incentive magnitude, and an integrated multi-agent Public Goods Game with dynamic payoffs and multi-agent histories. These environments reveal consistent behavioural signatures across models and languages, including incentive-sensitive cooperation, cross-linguistic divergence and end-game alignment toward defection. To interpret these patterns, we train traditional supervised classification models on canonical repeated-game strategies and apply them to FAIRGAME trajectories, showing that LLMs exhibit systematic, model- and language-dependent behavioural intentions, with linguistic framing at times exerting effects as strong as architectural differences. Together, these findings provide a unified methodological foundation for auditing LLMs as strategic agents and reveal systematic cooperation biases with direct implications for AI governance, collective decision-making, and the design of safe multi-agent systems.

KERAIA: An Adaptive and Explainable Framework for Dynamic Knowledge Representation and Reasoning

May 07, 2025

Abstract:In this paper, we introduce KERAIA, a novel framework and software platform for symbolic knowledge engineering designed to address the persistent challenges of representing, reasoning with, and executing knowledge in dynamic, complex, and context-sensitive environments. The central research question that motivates this work is: How can unstructured, often tacit, human expertise be effectively transformed into computationally tractable algorithms that AI systems can efficiently utilise? KERAIA seeks to bridge this gap by building on foundational concepts such as Minsky's frame-based reasoning and K-lines, while introducing significant innovations. These include Clouds of Knowledge for dynamic aggregation, Dynamic Relations (DRels) for context-sensitive inheritance, explicit Lines of Thought (LoTs) for traceable reasoning, and Cloud Elaboration for adaptive knowledge transformation. This approach moves beyond the limitations of traditional, often static, knowledge representation paradigms. KERAIA is designed with Explainable AI (XAI) as a core principle, ensuring transparency and interpretability, particularly through the use of LoTs. The paper details the framework's architecture, the KSYNTH representation language, and the General Purpose Paradigm Builder (GPPB) to integrate diverse inference methods within a unified structure. We validate KERAIA's versatility, expressiveness, and practical applicability through detailed analysis of multiple case studies spanning naval warfare simulation, industrial diagnostics in water treatment plants, and strategic decision-making in the game of RISK. Furthermore, we provide a comparative analysis against established knowledge representation paradigms (including ontologies, rule-based systems, and knowledge graphs) and discuss the implementation aspects and computational considerations of the KERAIA platform.

FAIRGAME: a Framework for AI Agents Bias Recognition using Game Theory

Apr 22, 2025

Abstract:Letting AI agents interact in multi-agent applications adds a layer of complexity to the interpretability and prediction of AI outcomes, with profound implications for their trustworthy adoption in research and society. Game theory offers powerful models to capture and interpret strategic interaction among agents, but requires the support of reproducible, standardized and user-friendly IT frameworks to enable comparison and interpretation of results. To this end, we present FAIRGAME, a Framework for AI Agents Bias Recognition using Game Theory. We describe its implementation and usage, and we employ it to uncover biased outcomes in popular games among AI agents, depending on the employed Large Language Model (LLM) and used language, as well as on the personality trait or strategic knowledge of the agents. Overall, FAIRGAME allows users to reliably and easily simulate their desired games and scenarios and compare the results across simulation campaigns and with game-theoretic predictions, enabling the systematic discovery of biases, the anticipation of emerging behavior out of strategic interplays, and empowering further research into strategic decision-making using LLM agents.

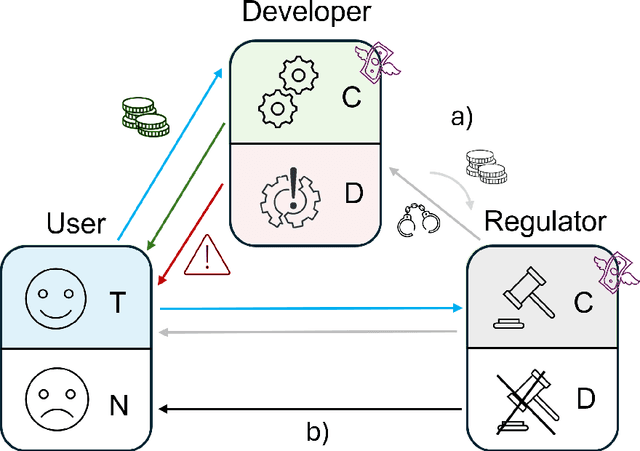

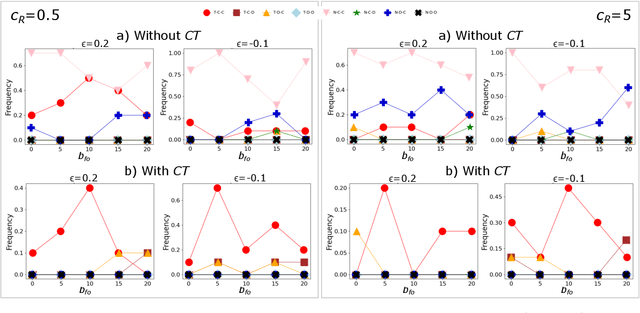

Do LLMs trust AI regulation? Emerging behaviour of game-theoretic LLM agents

Apr 11, 2025

Abstract:There is general agreement that fostering trust and cooperation within the AI development ecosystem is essential to promote the adoption of trustworthy AI systems. By embedding Large Language Model (LLM) agents within an evolutionary game-theoretic framework, this paper investigates the complex interplay between AI developers, regulators and users, modelling their strategic choices under different regulatory scenarios. Evolutionary game theory (EGT) is used to quantitatively model the dilemmas faced by each actor, and LLMs provide additional degrees of complexity and nuances and enable repeated games and incorporation of personality traits. Our research identifies emerging behaviours of strategic AI agents, which tend to adopt more "pessimistic" (not trusting and defective) stances than pure game-theoretic agents. We observe that, in case of full trust by users, incentives are effective to promote effective regulation; however, conditional trust may deteriorate the "social pact". Establishing a virtuous feedback between users' trust and regulators' reputation thus appears to be key to nudge developers towards creating safe AI. However, the level at which this trust emerges may depend on the specific LLM used for testing. Our results thus provide guidance for AI regulation systems, and help predict the outcome of strategic LLM agents, should they be used to aid regulation itself.

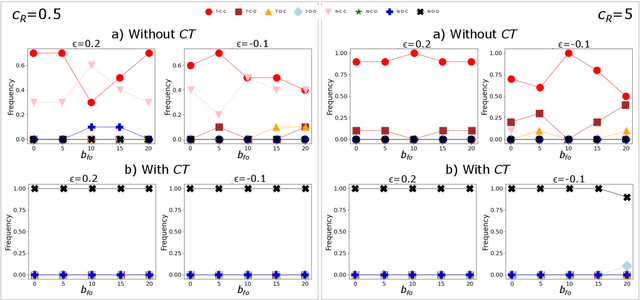

Media and responsible AI governance: a game-theoretic and LLM analysis

Mar 12, 2025

Abstract:This paper investigates the complex interplay between AI developers, regulators, users, and the media in fostering trustworthy AI systems. Using evolutionary game theory and large language models (LLMs), we model the strategic interactions among these actors under different regulatory regimes. The research explores two key mechanisms for achieving responsible governance, safe AI development and adoption of safe AI: incentivising effective regulation through media reporting, and conditioning user trust on commentariats' recommendation. The findings highlight the crucial role of the media in providing information to users, potentially acting as a form of "soft" regulation by investigating developers or regulators, as a substitute for institutional AI regulation (which is still absent in many regions). Both game-theoretic analysis and LLM-based simulations reveal conditions under which effective regulation and trustworthy AI development emerge, emphasising the importance of considering the influence of different regulatory regimes from an evolutionary game-theoretic perspective. The study concludes that effective governance requires managing incentives and costs for high-quality commentaries.

Multi-Agent Risks from Advanced AI

Feb 19, 2025

Abstract:The rapid development of advanced AI agents and the imminent deployment of many instances of these agents will give rise to multi-agent systems of unprecedented complexity. These systems pose novel and under-explored risks. In this report, we provide a structured taxonomy of these risks by identifying three key failure modes (miscoordination, conflict, and collusion) based on agents' incentives, as well as seven key risk factors (information asymmetries, network effects, selection pressures, destabilising dynamics, commitment problems, emergent agency, and multi-agent security) that can underpin them. We highlight several important instances of each risk, as well as promising directions to help mitigate them. By anchoring our analysis in a range of real-world examples and experimental evidence, we illustrate the distinct challenges posed by multi-agent systems and their implications for the safety, governance, and ethics of advanced AI.

Benchmarking Classical, Deep, and Generative Models for Human Activity Recognition

Jan 14, 2025

Abstract:Human Activity Recognition (HAR) has gained significant importance with the growing use of sensor-equipped devices and large datasets. This paper evaluates the performance of three categories of models: classical machine learning, deep learning architectures, and Restricted Boltzmann Machines (RBMs) using five key benchmark datasets of HAR (UCI-HAR, OPPORTUNITY, PAMAP2, WISDM, and Berkeley MHAD). We assess various models, including Decision Trees, Random Forests, Convolutional Neural Networks (CNN), and Deep Belief Networks (DBNs), using metrics such as accuracy, precision, recall, and F1-score for a comprehensive comparison. The results show that CNN models offer superior performance across all datasets, especially on the Berkeley MHAD. Classical models like Random Forest do well on smaller datasets but face challenges with larger, more complex data. RBM-based models also show notable potential, particularly for feature learning. This paper offers a detailed comparison to help researchers choose the most suitable model for HAR tasks.
