Tao Gu

HoRD: Robust Humanoid Control via History-Conditioned Reinforcement Learning and Online Distillation

Feb 05, 2026

Abstract:Humanoid robots can suffer significant performance drops under small changes in dynamics, task specifications, or environment setup. We propose HoRD, a two-stage learning framework for robust humanoid control under domain shift. First, we train a high-performance teacher policy via history-conditioned reinforcement learning, where the policy infers latent dynamics context from recent state-action trajectories to adapt online to diverse randomized dynamics. Second, we perform online distillation to transfer the teacher's robust control capabilities into a transformer-based student policy that operates on sparse root-relative 3D joint keypoint trajectories. By combining history-conditioned adaptation with online distillation, HoRD enables a single policy to adapt zero-shot to unseen domains without per-domain retraining. Extensive experiments show HoRD outperforms strong baselines in robustness and transfer, especially under unseen domains and external perturbations. Code and project page are available at https://tonywang-0517.github.io/hord/.

Energy and Memory-Efficient Federated Learning With Ordered Layer Freezing

Dec 29, 2025

Abstract:Federated Learning (FL) has emerged as a privacy-preserving paradigm for training machine learning models across distributed edge devices in the Internet of Things (IoT). By keeping data local and coordinating model training through a central server, FL effectively addresses privacy concerns and reduces communication overhead. However, the limited computational power, memory, and bandwidth of IoT edge devices pose significant challenges to the efficiency and scalability of FL, especially when training deep neural networks. Various FL frameworks have been proposed to reduce computation and communication overheads through dropout or layer freezing. However, these approaches often sacrifice accuracy or neglect memory constraints. To this end, we introduce Federated Learning with Ordered Layer Freezing (FedOLF). FedOLF consistently freezes layers in a predefined order before training, significantly reducing computation and memory requirements. To further reduce communication and energy costs, we incorporate Tensor Operation Approximation (TOA), a lightweight alternative to conventional quantization that better preserves model accuracy. Experimental results demonstrate that over non-iid data, FedOLF achieves at least 0.3%, 6.4%, 5.81%, 4.4%, 6.27% and 1.29% higher accuracy than existing works on EMNIST (with CNN), CIFAR-10 (with AlexNet), CIFAR-100 (with ResNet20 and ResNet44), and CINIC-10 (with ResNet20 and ResNet44), respectively, along with higher energy efficiency and lower memory footprint.

Subspace-Based Super-Resolution Sensing for Bi-Static ISAC with Clock Asynchronism

May 15, 2025

Abstract:Bi-static sensing is an attractive configuration for integrated sensing and communications (ISAC) systems; however, clock asynchronism between widely separated transmitters and receivers introduces time-varying time offsets (TO) and phase offsets (PO), posing significant challenges. This paper introduces a signal-subspace-based framework that estimates decoupled angles, delays, and complex gain sequences (CGS), i.e., the target-reflected signals, for multiple dynamic target paths. The proposed framework begins with a novel TO alignment algorithm, leveraging signal subspace or covariance, to mitigate TO variations across temporal snapshots, enabling coherent delay-domain analysis. Subsequently, subspace-based methods are developed to compensate for TO residuals and to perform joint angle-delay estimation. Finally, leveraging the high resolution in the joint angle-delay domain, the framework compensates for the PO and estimates the CGS for each target. The framework can be applied to both single-antenna and multi-antenna systems. Extensive simulations and experiments using commercial Wi-Fi devices demonstrate that the proposed framework significantly surpasses existing solutions in parameter estimation accuracy and delay resolution. Notably, it uniquely achieves a super-resolution in the delay domain, with a probability-of-resolution curve tightly approaching that in synchronized systems.

Magic Clothing: Controllable Garment-Driven Image Synthesis

Apr 15, 2024

Abstract:We propose Magic Clothing, a latent diffusion model (LDM)-based network architecture for an unexplored garment-driven image synthesis task. Aiming at generating customized characters wearing the target garments with diverse text prompts, the image controllability is the most critical issue, i.e., to preserve the garment details and maintain faithfulness to the text prompts. To this end, we introduce a garment extractor to capture the detailed garment features, and employ self-attention fusion to incorporate them into the pretrained LDMs, ensuring that the garment details remain unchanged on the target character. Then, we leverage the joint classifier-free guidance to balance the control of garment features and text prompts over the generated results. Meanwhile, the proposed garment extractor is a plug-in module applicable to various finetuned LDMs, and it can be combined with other extensions like ControlNet and IP-Adapter to enhance the diversity and controllability of the generated characters. Furthermore, we design Matched-Points-LPIPS (MP-LPIPS), a robust metric for evaluating the consistency of the target image to the source garment. Extensive experiments demonstrate that our Magic Clothing achieves state-of-the-art results under various conditional controls for garment-driven image synthesis. Our source code is available at https://github.com/ShineChen1024/MagicClothing.

Performance Bounds for Passive Sensing in Asynchronous ISAC Systems -- Appendices

Mar 09, 2024

Abstract:This document contains the appendices for our paper titled "Performance Bounds for Passive Sensing in Asynchronous ISAC Systems." The appendices include rigorous derivations of key formulas, detailed proofs of the theorems and propositions introduced in the paper, and details of the algorithm tested in the numerical simulation for validation. These appendices aim to support and elaborate on the findings and methodologies presented in the main text. All external references to equations, theorems, and so forth, are directed towards the corresponding elements within the main paper.

OOTDiffusion: Outfitting Fusion based Latent Diffusion for Controllable Virtual Try-on

Mar 07, 2024

Abstract:We present OOTDiffusion, a novel network architecture for realistic and controllable image-based virtual try-on (VTON). We leverage the power of pretrained latent diffusion models, designing an outfitting UNet to learn the garment detail features. Without a redundant warping process, the garment features are precisely aligned with the target human body via the proposed outfitting fusion in the self-attention layers of the denoising UNet. In order to further enhance the controllability, we introduce outfitting dropout to the training process, which enables us to adjust the strength of the garment features through classifier-free guidance. Our comprehensive experiments on the VITON-HD and Dress Code datasets demonstrate that OOTDiffusion efficiently generates high-quality try-on results for arbitrary human and garment images, which outperforms other VTON methods in both realism and controllability, indicating an impressive breakthrough in virtual try-on. Our source code is available at https://github.com/levihsu/OOTDiffusion.

FLrce: Efficient Federated Learning with Relationship-based Client Selection and Early-Stopping Strategy

Oct 15, 2023

Abstract:Federated learning (FL) has achieved great popularity in broad areas as a powerful interface to offer intelligent services to customers while maintaining data privacy. Nevertheless, FL faces communication and computation bottlenecks due to limited bandwidth and resource constraints of edge devices. To comprehensively address the bottlenecks, the technique of dropout is introduced, where resource-constrained edge devices are allowed to collaboratively train a subset of the global model parameters. However, dropout impedes the learning efficiency of FL under unbalanced local data distributions. As a result, FL requires more rounds to achieve appropriate accuracy, consuming more communication and computation resources. In this paper, we present FLrce, an efficient FL framework with a relationship-based client selection and early-stopping strategy. FLrce accelerates the FL process by selecting clients with more significant effects, enabling the global model to converge to a high accuracy in fewer rounds. FLrce also leverages an early stopping mechanism to terminate FL in advance to save communication and computation resources. Experimental results show that FLrce increases the communication and computation efficiency by 6% to 73.9% and 20% to 79.5%, respectively, while maintaining competitive accuracy.

An Ontology-based Context Model in Intelligent Environments

Mar 06, 2020

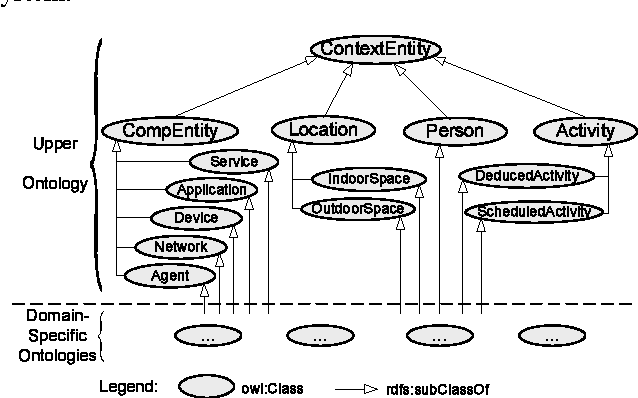

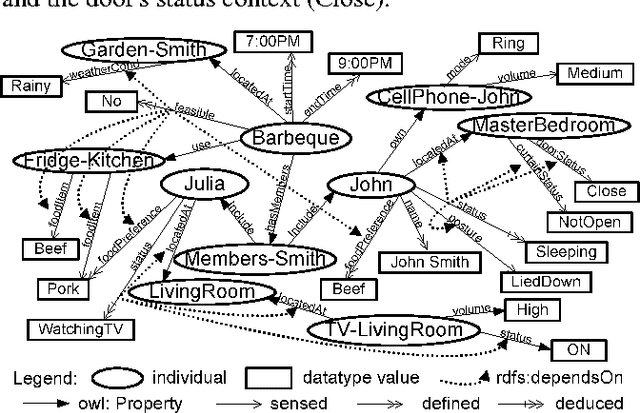

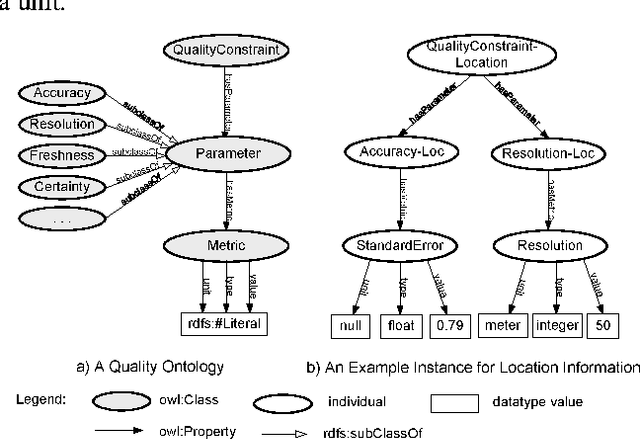

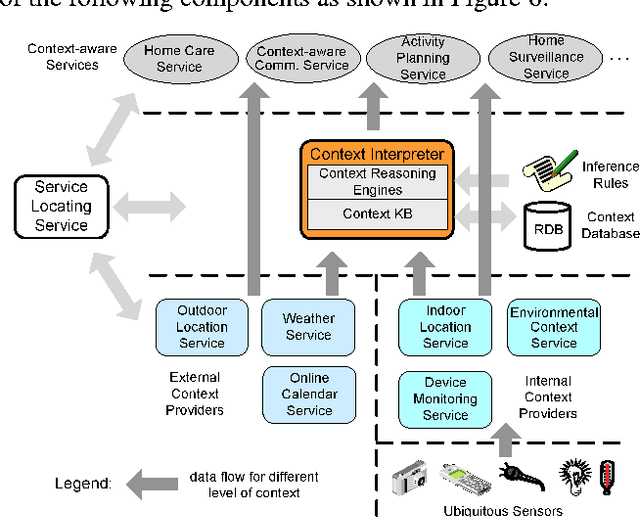

Abstract:Computing is becoming increasingly mobile and pervasive; these changes imply that applications and services must be aware of and adapt to their changing contexts in highly dynamic environments. Today, building context-aware systems is a complex task due to the lack of appropriate infrastructure support in intelligent environments. A context-aware infrastructure requires an appropriate context model to represent, manipulate and access context information. In this paper, we propose a formal context model based on ontology using OWL to address issues including semantic context representation, context reasoning and knowledge sharing, context classification, context dependency and quality of context. The main benefit of this model is the ability to reason about various contexts. Based on our context model, we also present a Service-Oriented Context-Aware Middleware (SOCAM) architecture for building context-aware services.

MDLdroid: a ChainSGD-reduce Approach to Mobile Deep Learning for Personal Mobile Sensing

Feb 15, 2020

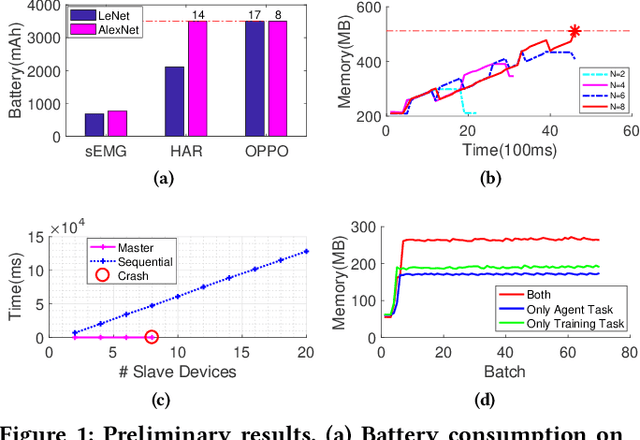

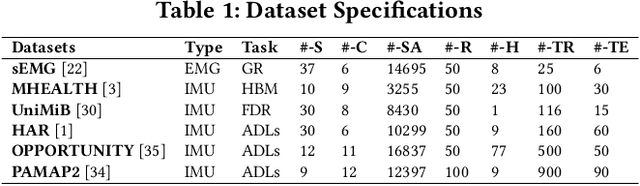

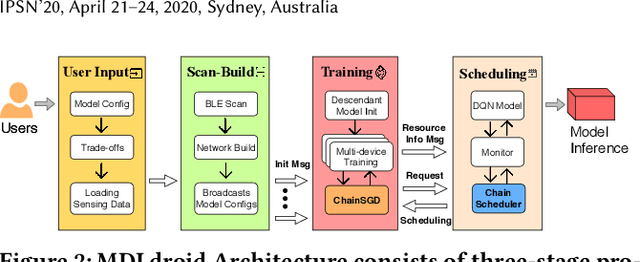

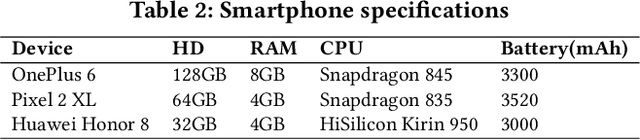

Abstract:Personal mobile sensing is fast permeating our daily lives to enable activity monitoring, healthcare and rehabilitation. Combined with deep learning, these applications have achieved significant success in recent years. Different from conventional cloud-based paradigms, running deep learning on devices offers several advantages including data privacy preservation and low-latency response for both model inference and update. Since data collection is costly in reality, Google's Federated Learning offers not only complete data privacy but also better model robustness based on multiple user data. However, personal mobile sensing applications are mostly user-specific and highly affected by environment. As a result, continuous local changes may seriously affect the performance of a global model generated by Federated Learning. In addition, deploying Federated Learning on a local server, e.g., edge server, may quickly reach the bottleneck due to resource constraints and serious failure caused by attacks. Towards pushing deep learning on devices, we present MDLdroid, a novel decentralized mobile deep learning framework to enable resource-aware on-device collaborative learning for personal mobile sensing applications. To address resource limitation, we propose a ChainSGD-reduce approach which includes a novel chain-directed Synchronous Stochastic Gradient Descent algorithm to effectively reduce overhead among multiple devices. We also design an agent-based multi-goal reinforcement learning mechanism to balance resources in a fair and efficient manner. Our evaluations show that our model training on off-the-shelf mobile devices is 2x to 3.5x faster than single-device training, and 1.5x faster than the master-slave approach.

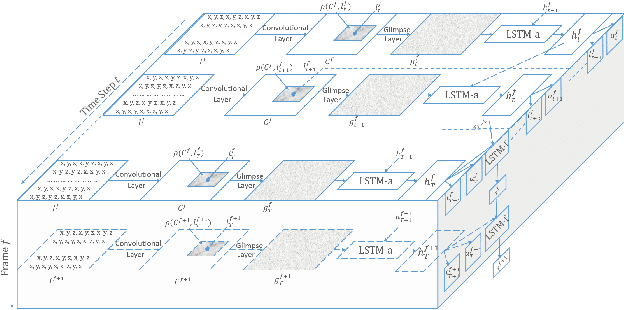

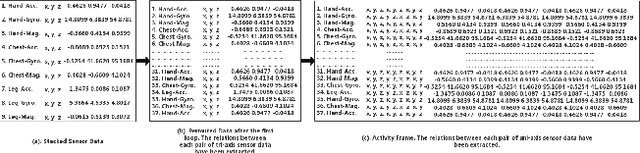

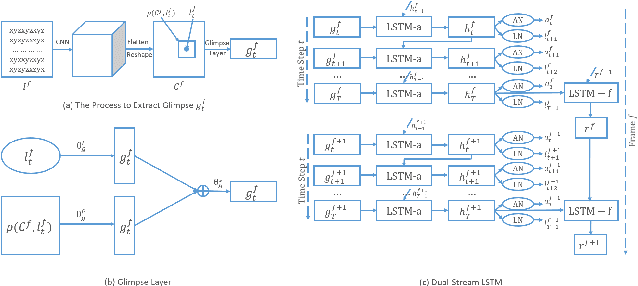

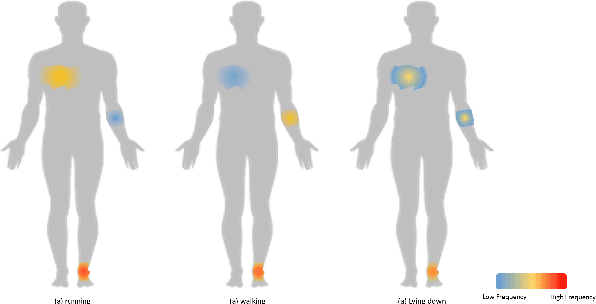

Interpretable Parallel Recurrent Neural Networks with Convolutional Attentions for Multi-Modality Activity Modeling

May 17, 2018

Abstract:Multimodal features play a key role in wearable sensor-based human activity recognition (HAR). Selecting the most salient features adaptively is a promising way to maximize the effectiveness of multimodal sensor data. In this regard, we propose a "collect fully and select wisely" principle as well as an interpretable parallel recurrent model with convolutional attentions to improve the recognition performance. We first collect modality features and the relations between each pair of features to generate activity frames, and then introduce an attention mechanism to select the most prominent regions from activity frames precisely. The selected frames not only maximize the utilization of valid features but also reduce the number of features to be computed effectively. We further analyze the accuracy and interpretability of the proposed model based on extensive experiments. The results show that our model achieves competitive performance on two benchmarked datasets and works well in real life scenarios.
