MDLdroid: a ChainSGD-reduce Approach to Mobile Deep Learning for Personal Mobile Sensing

Feb 15, 2020

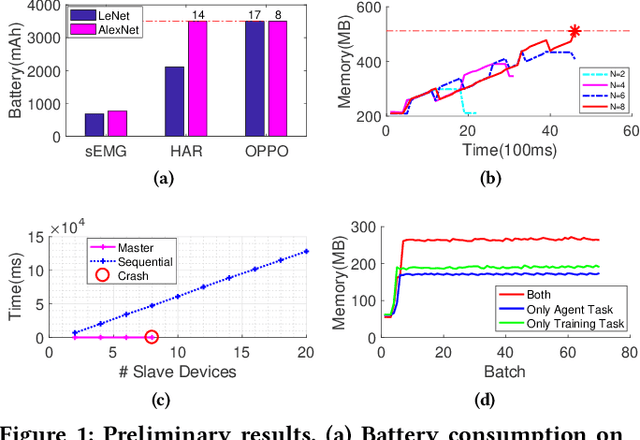

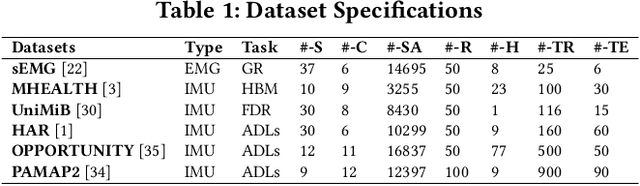

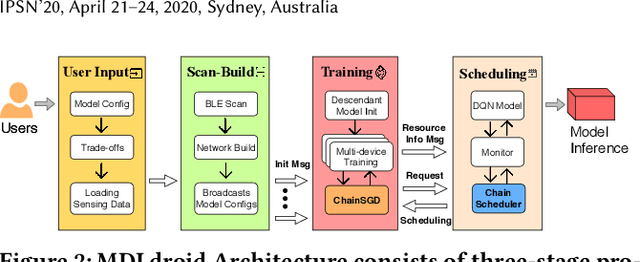

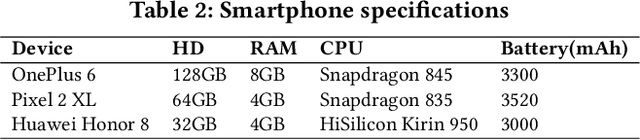

Personal mobile sensing is rapidly permeating our daily lives, enabling activity monitoring, healthcare, and rehabilitation. Combined with deep learning, these applications have achieved significant success in recent years. Unlike conventional cloud-based paradigms, running deep learning on devices offers several advantages, including data privacy preservation and low-latency response for both model inference and model updates. Since data collection is costly in practice, Google's Federated Learning offers not only complete data privacy but also better model robustness, because the model is trained on data from multiple users. However, personal mobile sensing applications are mostly user-specific and strongly affected by the environment. As a result, continuous local changes may seriously degrade the performance of a global model produced by Federated Learning. In addition, a Federated Learning deployment on a local server, e.g., an edge server, may quickly hit a bottleneck because of resource constraints, and the server is exposed to serious failure under attack. To push deep learning onto devices, we present MDLdroid, a novel decentralized mobile deep learning framework that enables resource-aware on-device collaborative learning for personal mobile sensing applications. To address resource limitations, we propose a ChainSGD-reduce approach, which includes a novel chain-directed Synchronous Stochastic Gradient Descent algorithm that effectively reduces overhead among multiple devices. We also design an agent-based multi-goal reinforcement learning mechanism to balance resources in a fair and efficient manner. Our evaluations show that model training on off-the-shelf mobile devices is 2x to 3.5x faster than single-device training and 1.5x faster than the master-slave approach.
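The abstract does not spell out how ChainSGD-reduce works, so the following is only a minimal sketch of a generic chain-style synchronous gradient reduce, not the paper's algorithm. It assumes each device holds a gradient vector of the same length, that a running sum is passed device to device along the chain, and that the averaged gradient is then passed back. The function names `chain_reduce_average` and `sgd_step` are hypothetical, and the device-to-device transfers are simulated in a single process.

```python
from typing import List


def chain_reduce_average(local_grads: List[List[float]]) -> List[List[float]]:
    """Average per-device gradients by passing a running sum along a chain.

    Illustrative assumption: devices are ordered 0..n-1 and each one only
    talks to its immediate neighbour. This is not MDLdroid's actual protocol.
    """
    if not local_grads:
        raise ValueError("need at least one device")
    n = len(local_grads)
    dim = len(local_grads[0])

    # Forward pass: each device adds its gradient to the running sum
    # received from the previous device, then forwards the result.
    running = [0.0] * dim
    for grad in local_grads:
        if len(grad) != dim:
            raise ValueError("all gradients must have the same length")
        running = [r + g for r, g in zip(running, grad)]

    # The last device in the chain holds the full sum and computes the mean.
    averaged = [r / n for r in running]

    # Backward pass: the mean travels back so every device ends up with
    # the same gradient, which keeps the update synchronous.
    return [list(averaged) for _ in range(n)]


def sgd_step(weights: List[float], grad: List[float], lr: float) -> List[float]:
    """Apply one plain SGD update: w <- w - lr * g."""
    return [w - lr * g for w, g in zip(weights, grad)]


if __name__ == "__main__":
    grads = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]  # three simulated devices
    synced = chain_reduce_average(grads)
    print(synced[0])
    print(sgd_step([1.0, 1.0], synced[0], lr=0.1))
```

In this sketch a chain of n devices needs 2(n-1) neighbour-to-neighbour transfers per step, and no single device has to receive gradients from all the others at once, which is the intuition for why a chain layout can ease the load compared with a master-slave layout.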
