Tanghui Jia

UltraShape 1.0: High-Fidelity 3D Shape Generation via Scalable Geometric Refinement

Dec 24, 2025

Abstract:In this report, we introduce UltraShape 1.0, a scalable 3D diffusion framework for high-fidelity 3D geometry generation. The proposed approach adopts a two-stage generation pipeline: a coarse global structure is first synthesized and then refined to produce detailed, high-quality geometry. To support reliable 3D generation, we develop a comprehensive data processing pipeline that includes a novel watertight processing method and high-quality data filtering. This pipeline improves the geometric quality of publicly available 3D datasets by removing low-quality samples, filling holes, and thickening thin structures, while preserving fine-grained geometric details. To enable fine-grained geometry refinement, we decouple spatial localization from geometric detail synthesis in the diffusion process. We achieve this by performing voxel-based refinement at fixed spatial locations, where voxel queries derived from coarse geometry provide explicit positional anchors encoded via RoPE, allowing the diffusion model to focus on synthesizing local geometric details within a reduced, structured solution space. Our model is trained exclusively on publicly available 3D datasets, achieving strong geometric quality despite limited training resources. Extensive evaluations demonstrate that UltraShape 1.0 performs competitively with existing open-source methods in both data processing quality and geometry generation. All code and trained models will be released to support future research.

Open-Sora Plan: Open-Source Large Video Generation Model

Nov 28, 2024

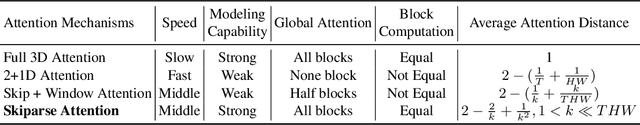

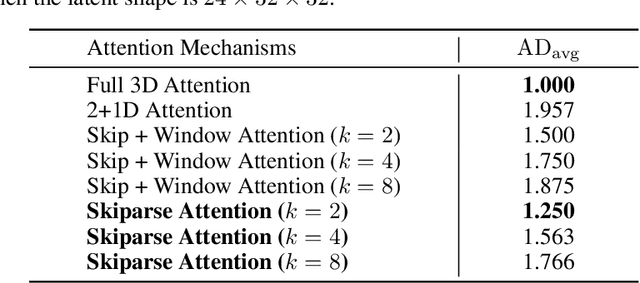

Abstract:We introduce Open-Sora Plan, an open-source project that aims to contribute a large generation model for generating desired high-resolution videos with long durations based on various user inputs. Our project comprises multiple components for the entire video generation process, including a Wavelet-Flow Variational Autoencoder, a Joint Image-Video Skiparse Denoiser, and various condition controllers. Moreover, many assistant strategies for efficient training and inference are designed, and a multi-dimensional data curation pipeline is proposed for obtaining desired high-quality data. Benefiting from efficient thoughts, our Open-Sora Plan achieves impressive video generation results in both qualitative and quantitative evaluations. We hope our careful design and practical experience can inspire the video generation research community. All our codes and model weights are publicly available at https://github.com/PKU-YuanGroup/Open-Sora-Plan.

Envision3D: One Image to 3D with Anchor Views Interpolation

Mar 13, 2024

Abstract:We present Envision3D, a novel method for efficiently generating high-quality 3D content from a single image. Recent methods that extract 3D content from multi-view images generated by diffusion models show great potential. However, it is still challenging for diffusion models to generate dense multi-view consistent images, which is crucial for the quality of 3D content extraction. To address this issue, we propose a novel cascade diffusion framework, which decomposes the challenging dense views generation task into two tractable stages, namely anchor views generation and anchor views interpolation. In the first stage, we train the image diffusion model to generate global consistent anchor views conditioning on image-normal pairs. Subsequently, leveraging our video diffusion model fine-tuned on consecutive multi-view images, we conduct interpolation on the previous anchor views to generate extra dense views. This framework yields dense, multi-view consistent images, providing comprehensive 3D information. To further enhance the overall generation quality, we introduce a coarse-to-fine sampling strategy for the reconstruction algorithm to robustly extract textured meshes from the generated dense images. Extensive experiments demonstrate that our method is capable of generating high-quality 3D content in terms of texture and geometry, surpassing previous image-to-3D baseline methods.

Scene-Generalizable Interactive Segmentation of Radiance Fields

Aug 09, 2023

Abstract:Existing methods for interactive segmentation in radiance fields entail scene-specific optimization and thus cannot generalize across different scenes, which greatly limits their applicability. In this work we make the first attempt at Scene-Generalizable Interactive Segmentation in Radiance Fields (SGISRF) and propose a novel SGISRF method, which can perform 3D object segmentation for novel (unseen) scenes represented by radiance fields, guided by only a few interactive user clicks in a given set of multi-view 2D images. In particular, the proposed SGISRF focuses on addressing three crucial challenges with three specially designed techniques. First, we devise the Cross-Dimension Guidance Propagation to encode the scarce 2D user clicks into informative 3D guidance representations. Second, the Uncertainty-Eliminated 3D Segmentation module is designed to achieve efficient yet effective 3D segmentation. Third, the Concealment-Revealed Supervised Learning scheme is proposed to reveal and correct the concealed 3D segmentation errors resulting from supervision in 2D space with only 2D mask annotations. Extensive experiments on two challenging real-world benchmarks covering diverse scenes demonstrate 1) the effectiveness and scene-generalizability of the proposed method, and 2) favorable performance compared to classical methods requiring scene-specific optimization.
