T. Patrick Xiao

Scalable extensions to given-data Sobol' index estimators

Sep 11, 2025Abstract:Given-data methods for variance-based sensitivity analysis have significantly advanced the feasibility of Sobol' index computation for computationally expensive models and models with many inputs. However, the limitations of existing methods still preclude their application to models with an extremely large number of inputs. In this work, we present practical extensions to the existing given-data Sobol' index method, which allow variance-based sensitivity analysis to be efficiently performed on large models such as neural networks, which have $>10^4$ parameterizable inputs. For models of this size, holding all input-output evaluations simultaneously in memory -- as required by existing methods -- can quickly become impractical. These extensions also support nonstandard input distributions with many repeated values, which are not amenable to equiprobable partitions employed by existing given-data methods. Our extensions include a general definition of the given-data Sobol' index estimator with arbitrary partition, a streaming algorithm to process input-output samples in batches, and a heuristic to filter out small indices that are indistinguishable from zero indices due to statistical noise. We show that the equiprobable partition employed in existing given-data methods can introduce significant bias into Sobol' index estimates even at large sample sizes and provide numerical analyses that demonstrate why this can occur. We also show that our streaming algorithm can achieve comparable accuracy and runtimes with lower memory requirements, relative to current methods which process all samples at once. We demonstrate our novel developments on two application problems in neural network modeling.

Analog Bayesian neural networks are insensitive to the shape of the weight distribution

Jan 09, 2025Abstract:Recent work has demonstrated that Bayesian neural networks (BNN's) trained with mean field variational inference (MFVI) can be implemented in analog hardware, promising orders of magnitude energy savings compared to the standard digital implementations. However, while Gaussians are typically used as the variational distribution in MFVI, it is difficult to precisely control the shape of the noise distributions produced by sampling analog devices. This paper introduces a method for MFVI training using real device noise as the variational distribution. Furthermore, we demonstrate empirically that the predictive distributions from BNN's with the same weight means and variances converge to the same distribution, regardless of the shape of the variational distribution. This result suggests that analog device designers do not need to consider the shape of the device noise distribution when hardware-implementing BNNs performing MFVI.

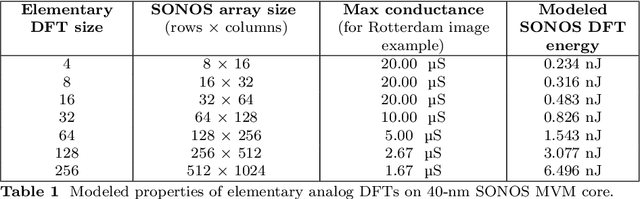

Analog fast Fourier transforms for scalable and efficient signal processing

Sep 27, 2024

Abstract:Edge devices are being deployed at increasing volumes to sense and act on information from the physical world. The discrete Fourier transform (DFT) is often necessary to make this sensed data suitable for further processing $\unicode{x2013}$ such as by artificial intelligence (AI) algorithms $\unicode{x2013}$ and for transmission over communication networks. Analog in-memory computing has been shown to be a fast and energy-efficient solution for processing edge AI workloads, but not for Fourier transforms. This is because of the existence of the fast Fourier transform (FFT) algorithm, which enormously reduces the complexity of the DFT but has so far belonged only to digital processors. Here, we show that the FFT can be mapped to analog in-memory computing systems, enabling them to efficiently scale to arbitrarily large Fourier transforms without requiring large sizes or large numbers of non-volatile memory arrays. We experimentally demonstrate analog FFTs on 1D audio and 2D image signals, using a large-scale charge-trapping memory array with precisely tunable, low-conductance analog states. The scalability of both the new analog FFT approach and the charge-trapping memory device is leveraged to compute a 65,536-point analog DFT, a scale that is otherwise inaccessible by analog systems and which is $>$1000$\times$ larger than any previous analog DFT demonstration. The analog FFT also provides more numerically precise DFTs with greater tolerance to device and circuit non-idealities than a direct matrix-vector multiplication approach. We show that the extension of the FFT algorithm to analog in-memory processors leads to design considerations that differ markedly from digital implementations, and that analog Fourier transforms have a substantial power efficiency advantage at all size scales over FFTs implemented on state-of-the-art digital hardware.

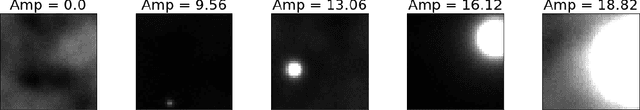

An out-of-distribution discriminator based on Bayesian neural network epistemic uncertainty

Oct 18, 2022

Abstract:Neural networks have revolutionized the field of machine learning with increased predictive capability. In addition to improving the predictions of neural networks, there is a simultaneous demand for reliable uncertainty quantification on estimates made by machine learning methods such as neural networks. Bayesian neural networks (BNNs) are an important type of neural network with built-in capability for quantifying uncertainty. This paper discusses aleatoric and epistemic uncertainty in BNNs and how they can be calculated. With an example dataset of images where the goal is to identify the amplitude of an event in the image, it is shown that epistemic uncertainty tends to be lower in images which are well-represented in the training dataset and tends to be high in images which are not well-represented. An algorithm for out-of-distribution (OoD) detection with BNN epistemic uncertainty is introduced along with various experiments demonstrating factors influencing the OoD detection capability in a BNN. The OoD detection capability with epistemic uncertainty is shown to be comparable to the OoD detection in the discriminator network of a generative adversarial network (GAN) with comparable network architecture.

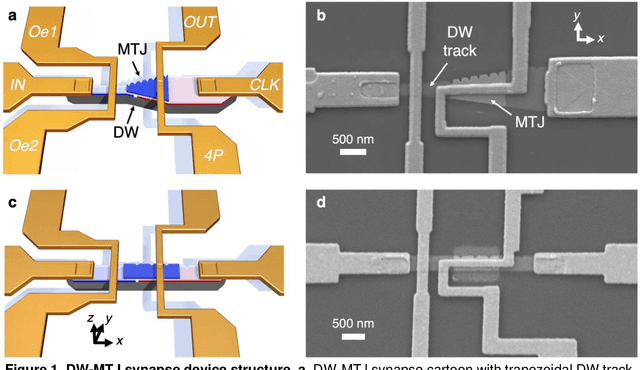

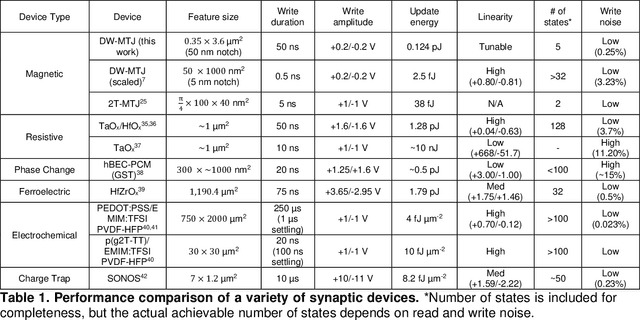

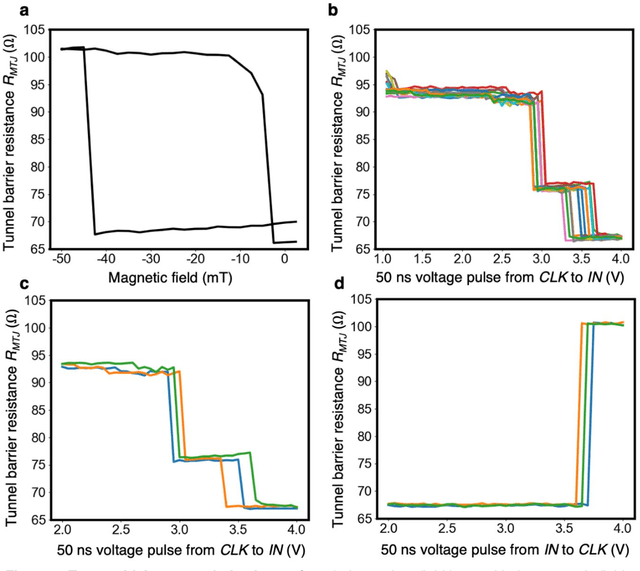

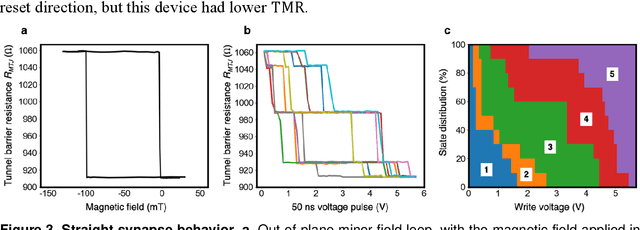

Shape-Dependent Multi-Weight Magnetic Artificial Synapses for Neuromorphic Computing

Nov 22, 2021

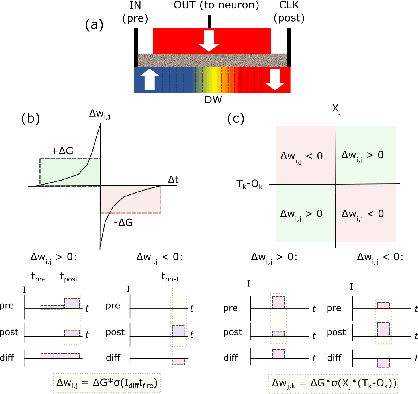

Abstract:In neuromorphic computing, artificial synapses provide a multi-weight conductance state that is set based on inputs from neurons, analogous to the brain. Additional properties of the synapse beyond multiple weights can be needed, and can depend on the application, requiring the need for generating different synapse behaviors from the same materials. Here, we measure artificial synapses based on magnetic materials that use a magnetic tunnel junction and a magnetic domain wall. By fabricating lithographic notches in a domain wall track underneath a single magnetic tunnel junction, we achieve 4-5 stable resistance states that can be repeatably controlled electrically using spin orbit torque. We analyze the effect of geometry on the synapse behavior, showing that a trapezoidal device has asymmetric weight updates with high controllability, while a straight device has higher stochasticity, but with stable resistance levels. The device data is input into neuromorphic computing simulators to show the usefulness of application-specific synaptic functions. Implementing an artificial neural network applied on streamed Fashion-MNIST data, we show that the trapezoidal magnetic synapse can be used as a metaplastic function for efficient online learning. Implementing a convolutional neural network for CIFAR-100 image recognition, we show that the straight magnetic synapse achieves near-ideal inference accuracy, due to the stability of its resistance levels. This work shows multi-weight magnetic synapses are a feasible technology for neuromorphic computing and provides design guidelines for emerging artificial synapse technologies.

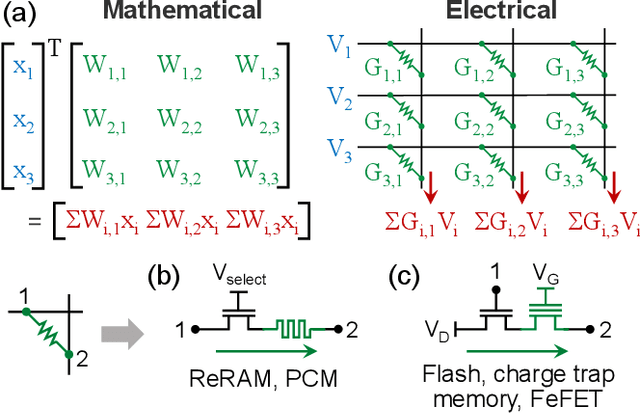

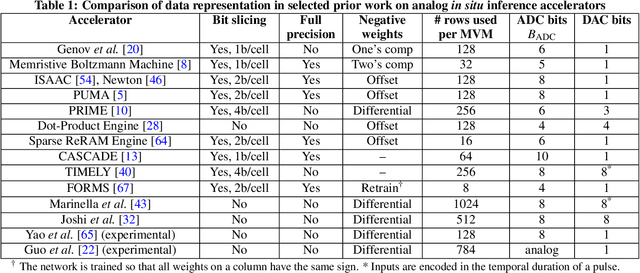

On the Accuracy of Analog Neural Network Inference Accelerators

Sep 12, 2021

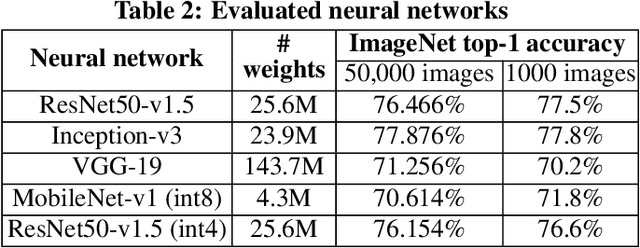

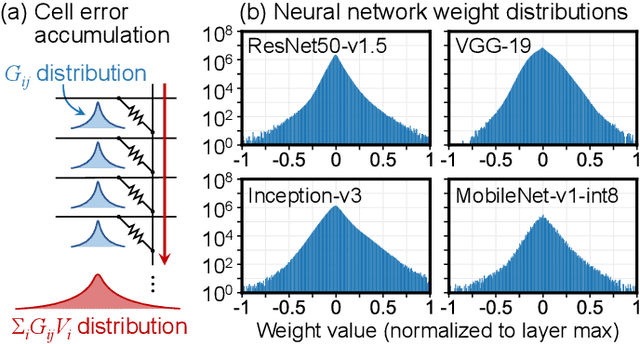

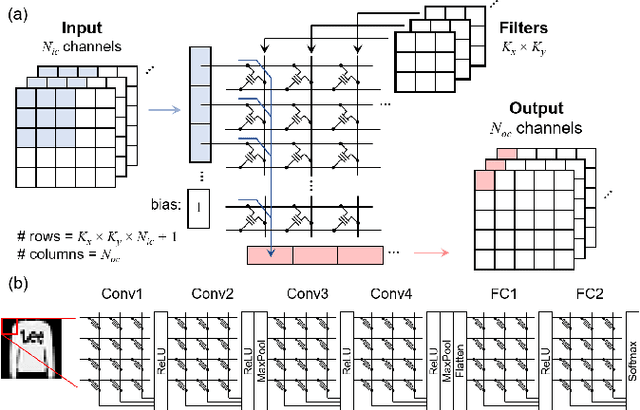

Abstract:Specialized accelerators have recently garnered attention as a method to reduce the power consumption of neural network inference. A promising category of accelerators utilizes nonvolatile memory arrays to both store weights and perform $\textit{in situ}$ analog computation inside the array. While prior work has explored the design space of analog accelerators to optimize performance and energy efficiency, there is seldom a rigorous evaluation of the accuracy of these accelerators. This work shows how architectural design decisions, particularly in mapping neural network parameters to analog memory cells, influence inference accuracy. When evaluated using ResNet50 on ImageNet, the resilience of the system to analog non-idealities - cell programming errors, analog-to-digital converter resolution, and array parasitic resistances - all improve when analog quantities in the hardware are made proportional to the weights in the network. Moreover, contrary to the assumptions of prior work, nearly equivalent resilience to cell imprecision can be achieved by fully storing weights as analog quantities, rather than spreading weight bits across multiple devices, often referred to as bit slicing. By exploiting proportionality, analog system designers have the freedom to match the precision of the hardware to the needs of the algorithm, rather than attempting to guarantee the same level of precision in the intermediate results as an equivalent digital accelerator. This ultimately results in an analog accelerator that is more accurate, more robust to analog errors, and more energy-efficient.

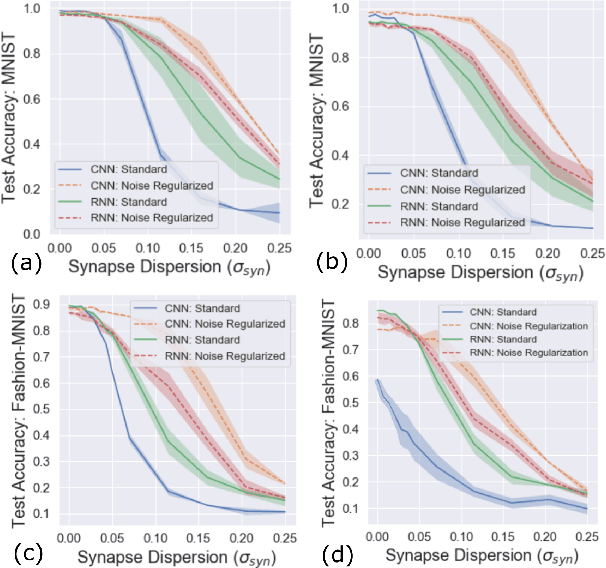

Device-aware inference operations in SONOS nonvolatile memory arrays

Apr 02, 2020

Abstract:Non-volatile memory arrays can deploy pre-trained neural network models for edge inference. However, these systems are affected by device-level noise and retention issues. Here, we examine damage caused by these effects, introduce a mitigation strategy, and demonstrate its use in fabricated array of SONOS (Silicon-Oxide-Nitride-Oxide-Silicon) devices. On MNIST, fashion-MNIST, and CIFAR-10 tasks, our approach increases resilience to synaptic noise and drift. We also show strong performance can be realized with ADCs of 5-8 bits precision.

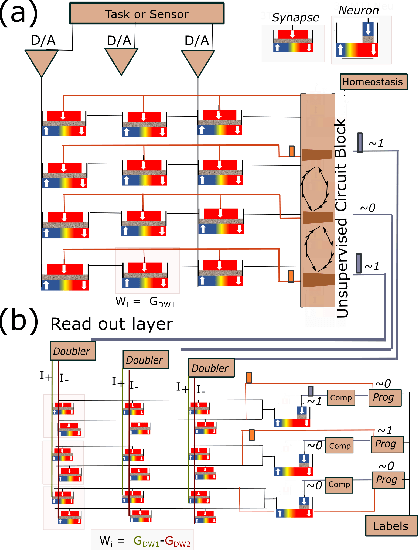

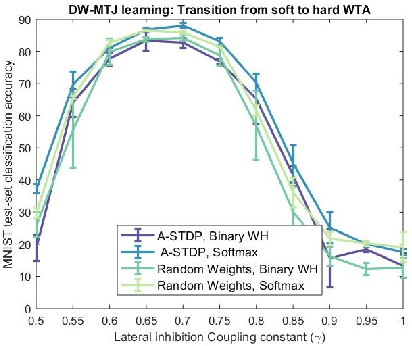

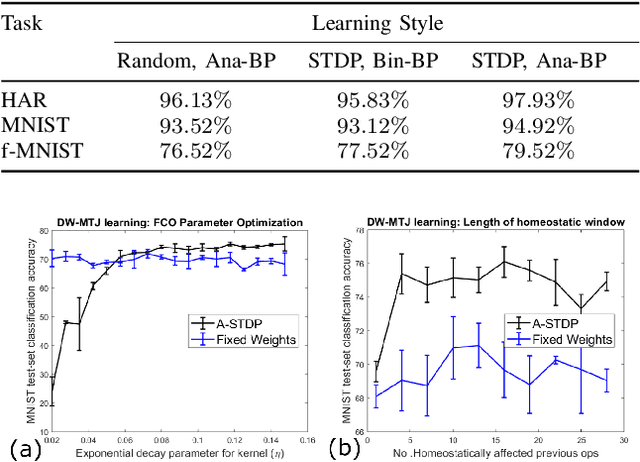

Plasticity-Enhanced Domain-Wall MTJ Neural Networks for Energy-Efficient Online Learning

Mar 04, 2020

Abstract:Machine learning implements backpropagation via abundant training samples. We demonstrate a multi-stage learning system realized by a promising non-volatile memory device, the domain-wall magnetic tunnel junction (DW-MTJ). The system consists of unsupervised (clustering) as well as supervised sub-systems, and generalizes quickly (with few samples). We demonstrate interactions between physical properties of this device and optimal implementation of neuroscience-inspired plasticity learning rules, and highlight performance on a suite of tasks. Our energy analysis confirms the value of the approach, as the learning budget stays below 20 $\mu J$ even for large tasks used typically in machine learning.

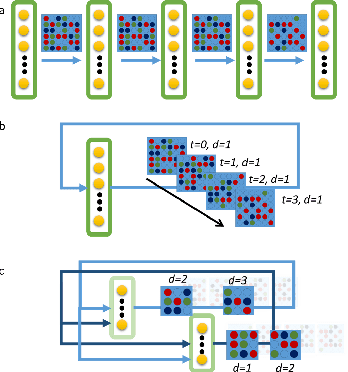

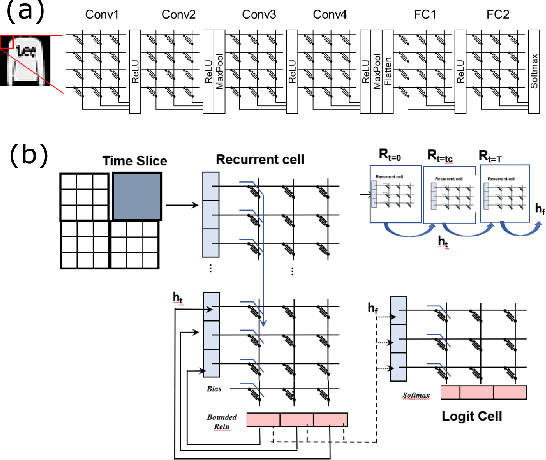

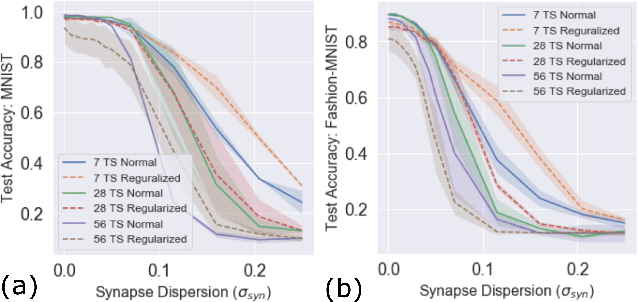

Evaluating complexity and resilience trade-offs in emerging memory inference machines

Feb 25, 2020

Abstract:Neuromorphic-style inference only works well if limited hardware resources are maximized properly, e.g. accuracy continues to scale with parameters and complexity in the face of potential disturbance. In this work, we use realistic crossbar simulations to highlight that compact implementations of deep neural networks are unexpectedly susceptible to collapse from multiple system disturbances. Our work proposes a middle path towards high performance and strong resilience utilizing the Mosaics framework, and specifically by re-using synaptic connections in a recurrent neural network implementation that possesses a natural form of noise-immunity.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge