Samuel Liu

Stochastic Domain Wall-Magnetic Tunnel Junction Artificial Neurons for Noise-Resilient Spiking Neural Networks

Apr 10, 2023

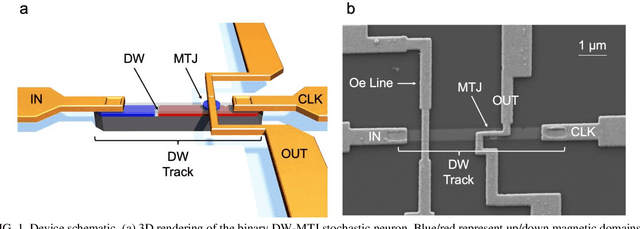

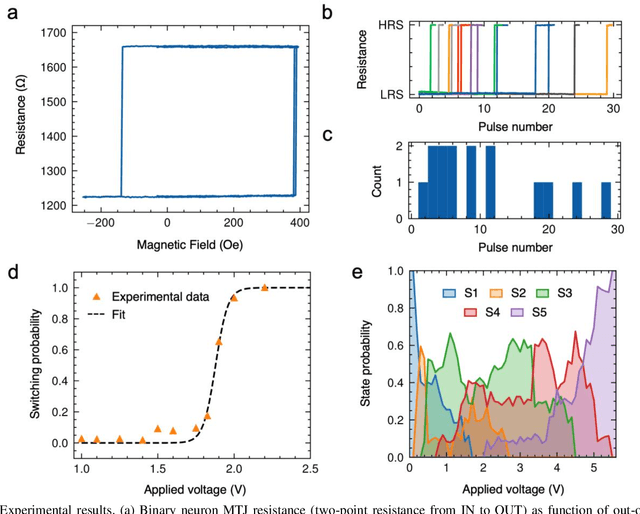

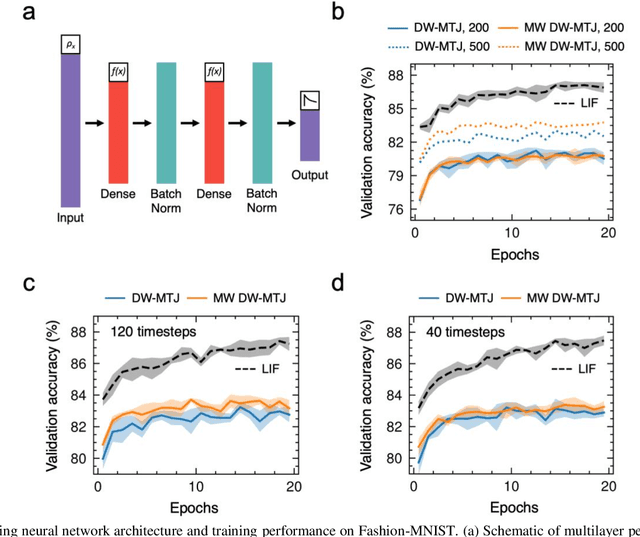

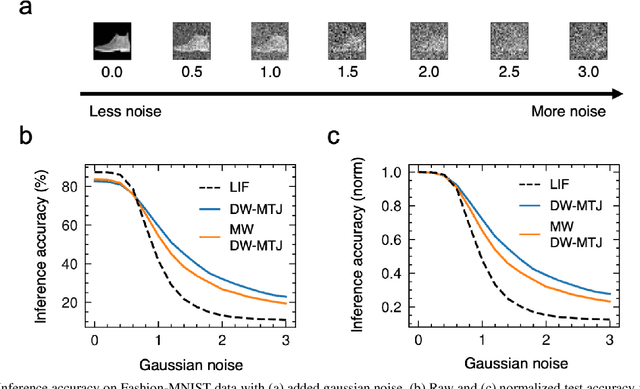

Abstract: The spatiotemporal nature of neuronal behavior in spiking neural networks (SNNs) makes SNNs promising for edge applications that require high energy efficiency. To realize SNNs in hardware, spintronic neuron implementations can bring advantages of scalability and energy efficiency. Domain wall (DW) based magnetic tunnel junction (MTJ) devices are well suited for probabilistic neural networks given their intrinsic integrate-and-fire behavior with tunable stochasticity. Here, we present a scaled DW-MTJ neuron with voltage-dependent firing probability. The measured behavior was used to simulate an SNN that attains comparable accuracy during learning to an equivalent, but more complicated, multi-weight (MW) DW-MTJ device. The validation accuracy during training was also shown to be comparable to an ideal leaky integrate-and-fire (LIF) device. However, during inference, the binary DW-MTJ neuron outperformed the other devices after Gaussian noise was introduced to the Fashion-MNIST classification task. This work shows that DW-MTJ devices can be used to construct noise-resilient networks suitable for neuromorphic computing on the edge.
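As a rough illustration of what a binary neuron with voltage-dependent firing probability means, the sketch below maps a drive voltage to a firing probability and samples a 0/1 spike. The sigmoid shape and the `v_half` and `slope` parameters are assumptions chosen for illustration; they are not the measured device characteristic from the paper.

```python
import math
import random


def firing_probability(voltage, v_half=1.0, slope=10.0):
    """Hypothetical sigmoid mapping from drive voltage to firing probability.

    v_half is the voltage at which the neuron fires half the time and
    slope sets how sharply the probability rises. Both are illustrative
    placeholders, not fitted device parameters.
    """
    return 1.0 / (1.0 + math.exp(-slope * (voltage - v_half)))


def stochastic_neuron(voltages, rng, v_half=1.0, slope=10.0):
    """Return one binary spike (0 or 1) per input voltage."""
    return [
        1 if rng.random() < firing_probability(v, v_half, slope) else 0
        for v in voltages
    ]


def firing_rate(voltage, trials, rng):
    """Estimate the firing rate at a fixed voltage over repeated trials."""
    spikes = stochastic_neuron([voltage] * trials, rng)
    return sum(spikes) / trials
```

Calling `firing_rate(v, 1000, random.Random(0))` for a few voltages around `v_half` shows the output moving from mostly silent to mostly firing as the voltage increases.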

Shape-Dependent Multi-Weight Magnetic Artificial Synapses for Neuromorphic Computing

Nov 22, 2021

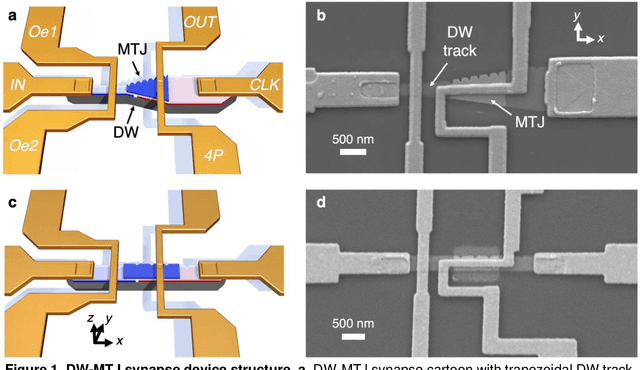

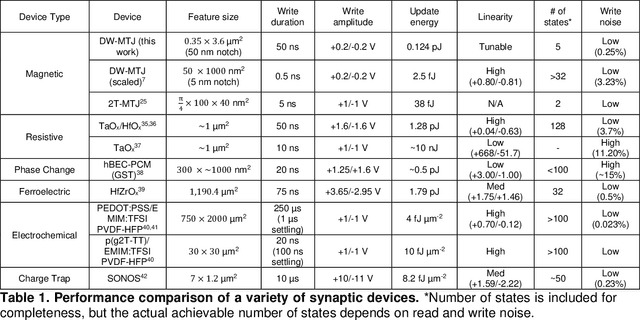

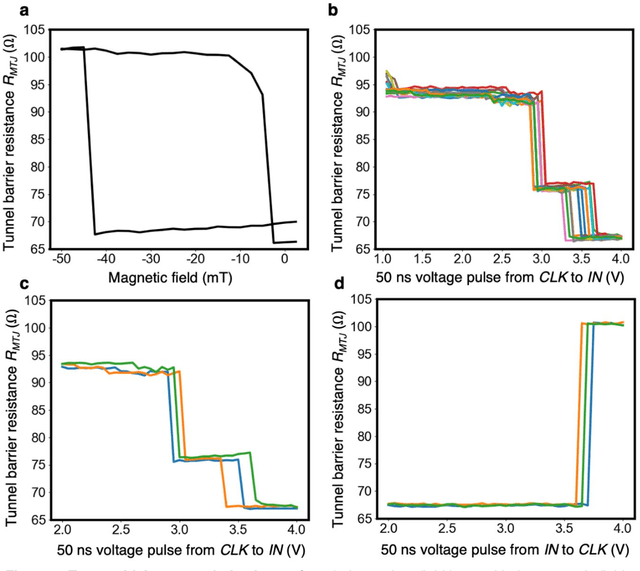

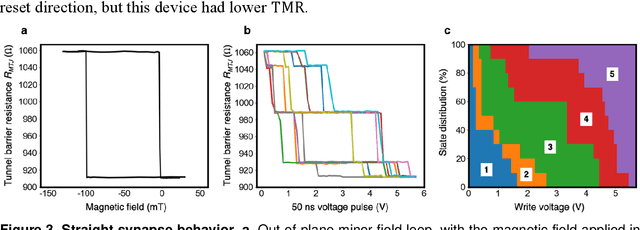

Abstract: In neuromorphic computing, artificial synapses provide a multi-weight conductance state that is set based on inputs from neurons, analogous to the brain. Properties beyond multiple weights may also be needed, depending on the application, which makes it necessary to generate different synapse behaviors from the same materials. Here, we measure artificial synapses based on magnetic materials that use a magnetic tunnel junction and a magnetic domain wall. By fabricating lithographic notches in a domain wall track underneath a single magnetic tunnel junction, we achieve 4-5 stable resistance states that can be repeatably controlled electrically using spin-orbit torque. We analyze the effect of geometry on the synapse behavior, showing that a trapezoidal device has asymmetric weight updates with high controllability, while a straight device has higher stochasticity, but with stable resistance levels. The device data is input into neuromorphic computing simulators to show the usefulness of application-specific synaptic functions. Implementing an artificial neural network applied to streamed Fashion-MNIST data, we show that the trapezoidal magnetic synapse can be used as a metaplastic function for efficient online learning. Implementing a convolutional neural network for CIFAR-100 image recognition, we show that the straight magnetic synapse achieves near-ideal inference accuracy, due to the stability of its resistance levels. This work shows multi-weight magnetic synapses are a feasible technology for neuromorphic computing and provides design guidelines for emerging artificial synapse technologies.

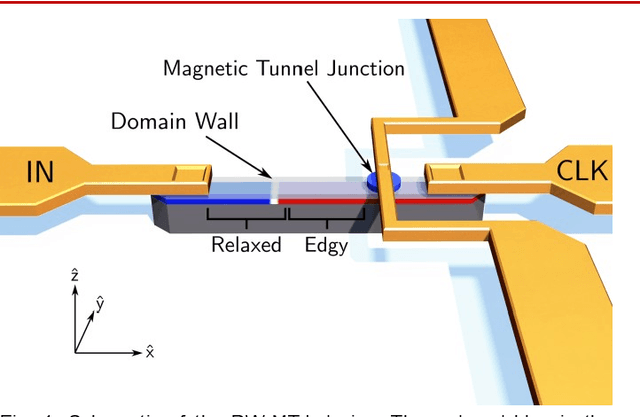

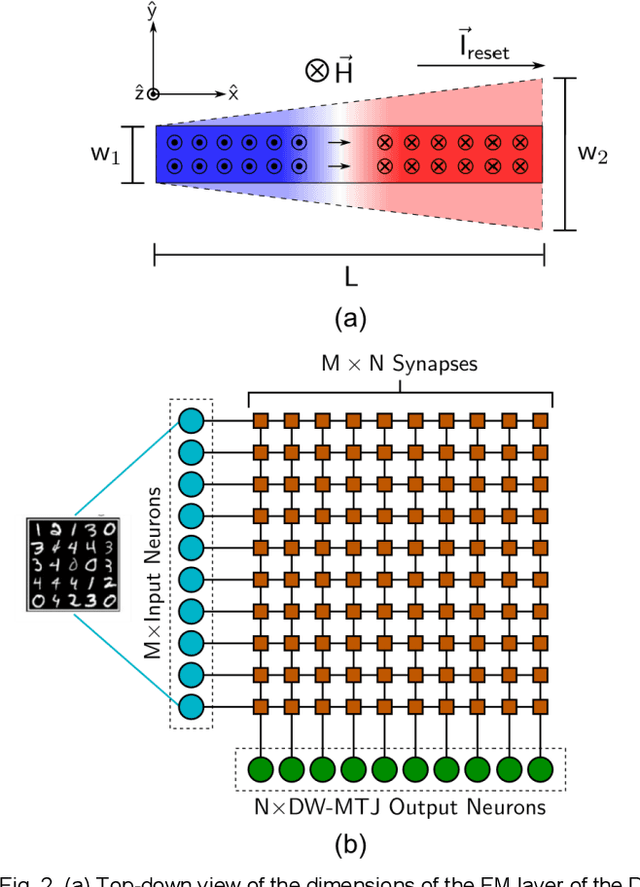

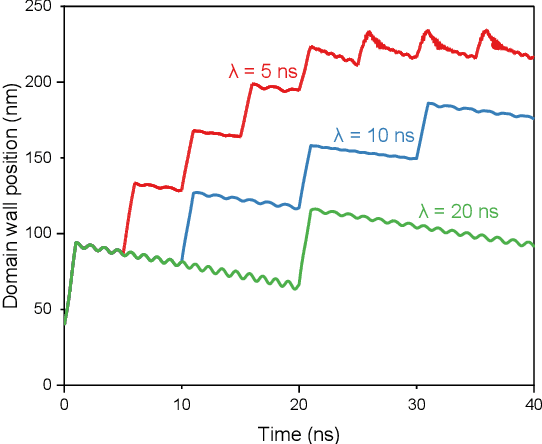

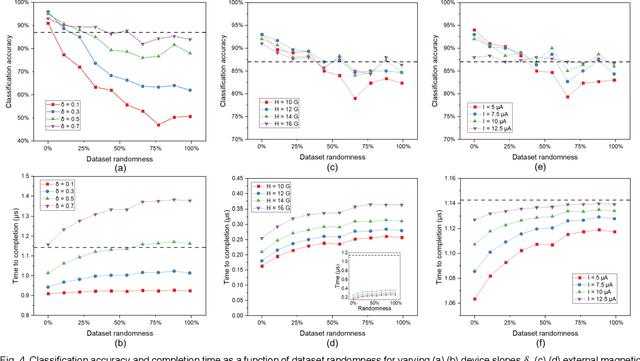

Controllable reset behavior in domain wall-magnetic tunnel junction artificial neurons for task-adaptable computation

Jan 08, 2021

Abstract: Neuromorphic computing with spintronic devices has been of interest due to the limitations of CMOS-driven von Neumann computing. Domain wall-magnetic tunnel junction (DW-MTJ) devices have been shown to be able to intrinsically capture biological neuron behavior. Edgy-relaxed behavior, where a frequently firing neuron experiences a lower action potential threshold, may provide additional artificial neuronal functionality when executing repeated tasks. In this study, we demonstrate that this behavior can be implemented in DW-MTJ artificial neurons via three alternative mechanisms: shape anisotropy, magnetic field, and current-driven soft reset. Using micromagnetics and analytical device modeling to classify the Optdigits handwritten digit dataset, we show that edgy-relaxed behavior improves both classification accuracy and classification rate for ordered datasets while sacrificing little to no accuracy for a randomized dataset. This work establishes methods by which artificial spintronic neurons can be flexibly adapted to datasets.

PAC Learning Guarantees Under Covariate Shift

Dec 16, 2018

Abstract: We consider the Domain Adaptation problem, also known as the covariate shift problem, where the distributions that generate the training and test data differ while retaining the same labeling function. This problem occurs across a large range of practical applications, and is related to the more general challenge of transfer learning. Most recent work on the topic focuses on optimization techniques that are specific to an algorithm or practical use case rather than a more general approach. The sparse literature attempting to provide general bounds seems to suggest that efficient learning even under strong assumptions is not possible for covariate shift. Our main contribution is to recontextualize these results by showing that any Probably Approximately Correct (PAC) learnable concept class is still PAC learnable under covariate shift conditions with only a polynomial increase in the number of training samples. This approach essentially demonstrates that the Domain Adaptation learning problem is as hard as the underlying PAC learning problem, provided some conditions over the training and test distributions. We also present bounds for the rejection sampling algorithm, justifying it as a solution to the Domain Adaptation problem in certain scenarios.
