Joseph S. Friedman

Online unsupervised Hebbian learning in deep photonic neuromorphic networks

Jan 29, 2026

Abstract:While software implementations of neural networks have driven significant advances in computation, the von Neumann architecture imposes fundamental limitations on speed and energy efficiency. Neuromorphic networks, with structures inspired by the brain's architecture, offer a compelling solution with the potential to approach the extreme energy efficiency of neurobiological systems. Photonic neuromorphic networks (PNNs) are particularly attractive because they leverage the inherent advantages of light, namely high parallelism, low latency, and exceptional energy efficiency. Previous PNN demonstrations have largely focused on device-level functionalities or system-level implementations reliant on supervised learning and inefficient optical-electrical-optical (OEO) conversions. Here, we introduce a purely photonic deep PNN architecture that enables online, unsupervised learning. We propose a local feedback mechanism operating entirely in the optical domain that implements a Hebbian learning rule using non-volatile phase-change material synapses. We experimentally demonstrate this approach on a non-trivial letter recognition task using a commercially available fiber-optic platform and achieve a 100 percent recognition rate, showcasing an all-optical solution for efficient, real-time information processing. This work unlocks the potential of photonic computing for complex artificial intelligence applications by enabling direct, high-throughput processing of optical information without intermediate OEO signal conversions.

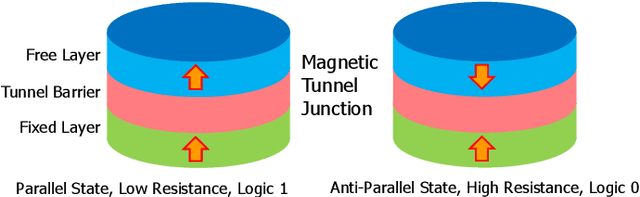

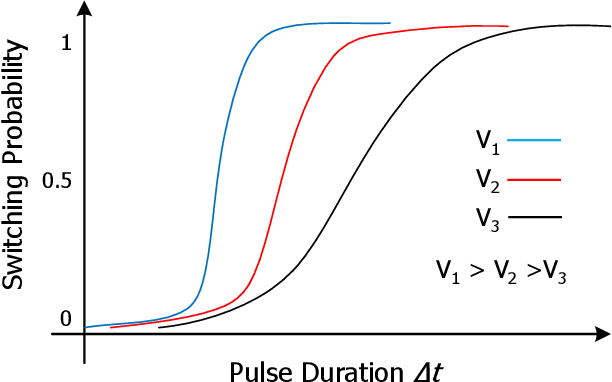

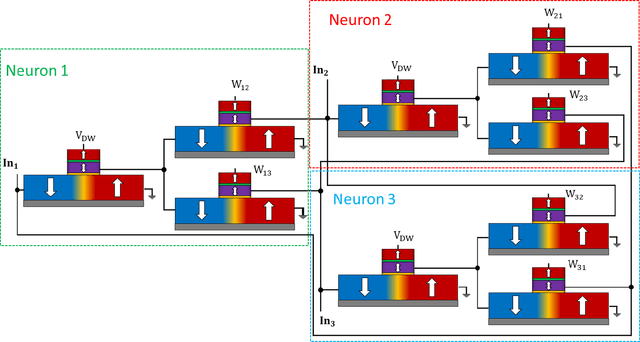

Neuromorphic Hebbian learning with magnetic tunnel junction synapses

Aug 21, 2023

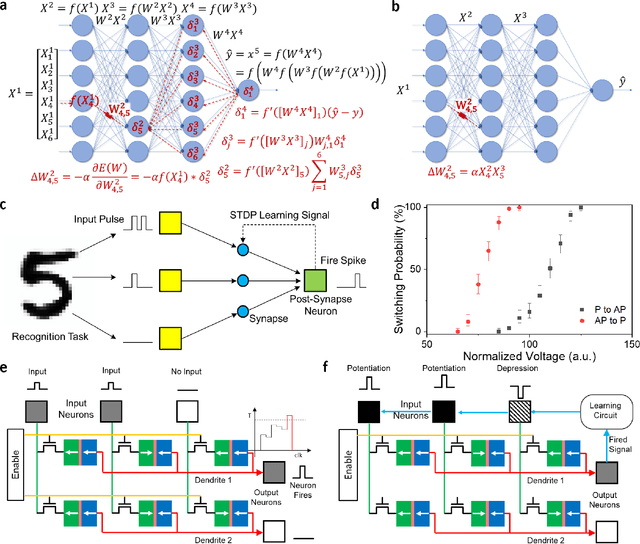

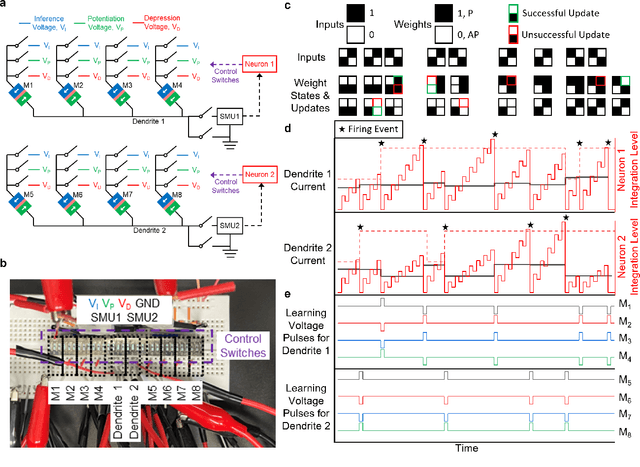

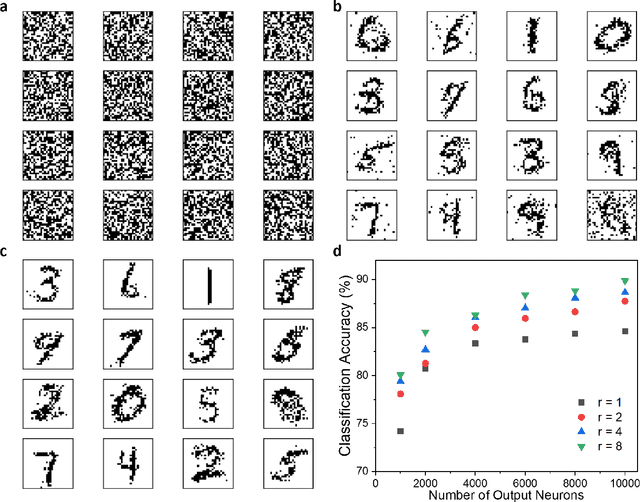

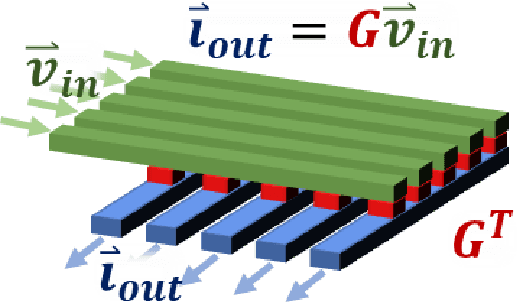

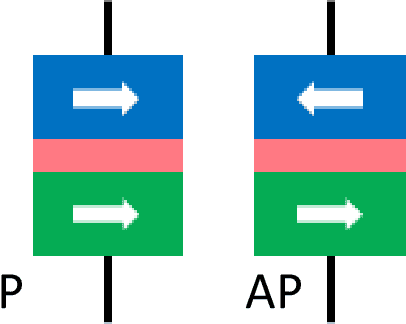

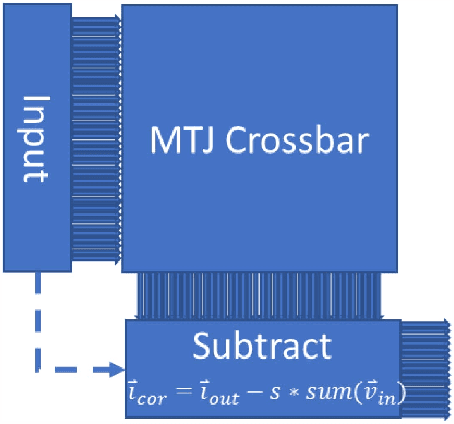

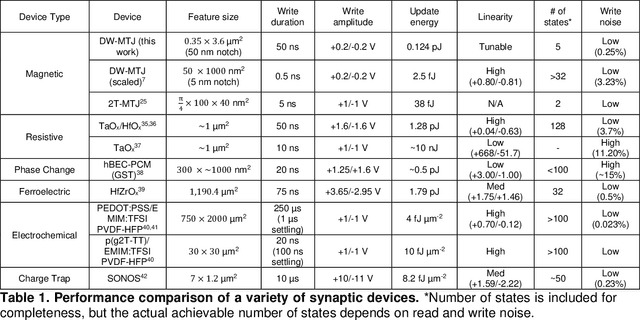

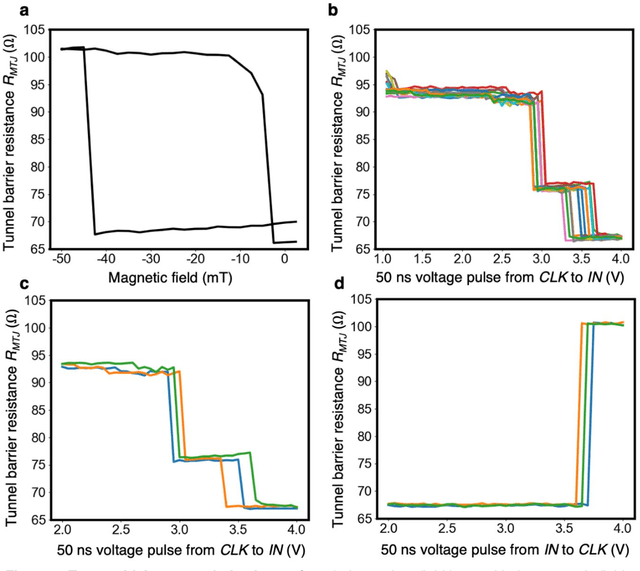

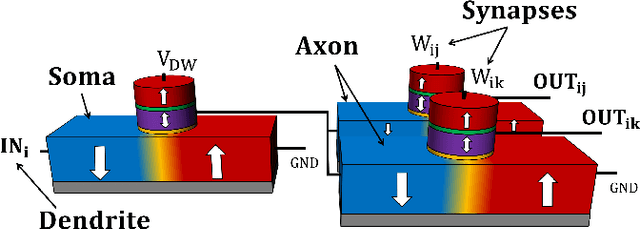

Abstract:Neuromorphic computing aims to mimic both the function and structure of biological neural networks to provide artificial intelligence with extreme efficiency. Conventional approaches store synaptic weights in non-volatile memory devices with analog resistance states, permitting in-memory computation of neural network operations while avoiding the costs associated with transferring synaptic weights from a memory array. However, the use of analog resistance states for storing weights in neuromorphic systems is impeded by stochastic writing, weights drifting over time through stochastic processes, and limited endurance that reduces the precision of synapse weights. Here we propose and experimentally demonstrate neuromorphic networks that provide high-accuracy inference thanks to the binary resistance states of magnetic tunnel junctions (MTJs), while leveraging the analog nature of their stochastic spin-transfer torque (STT) switching for unsupervised Hebbian learning. We performed the first experimental demonstration of a neuromorphic network directly implemented with MTJ synapses, for both inference and spike-timing-dependent plasticity learning. We also demonstrated through simulation that the proposed system for unsupervised Hebbian learning with stochastic STT-MTJ synapses can achieve competitive accuracies for MNIST handwritten digit recognition. By appropriately applying neuromorphic principles through hardware-aware design, the proposed STT-MTJ neuromorphic learning networks provide a pathway toward artificial intelligence hardware that learns autonomously with extreme efficiency.

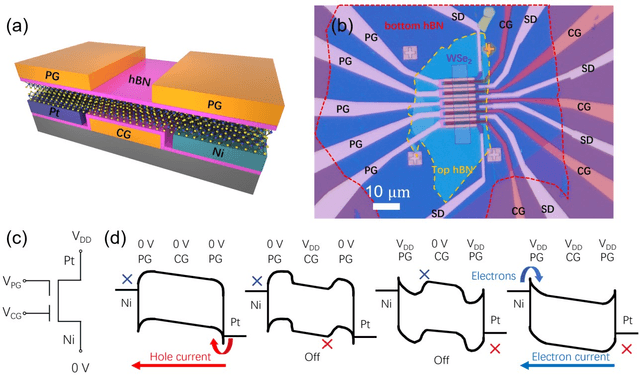

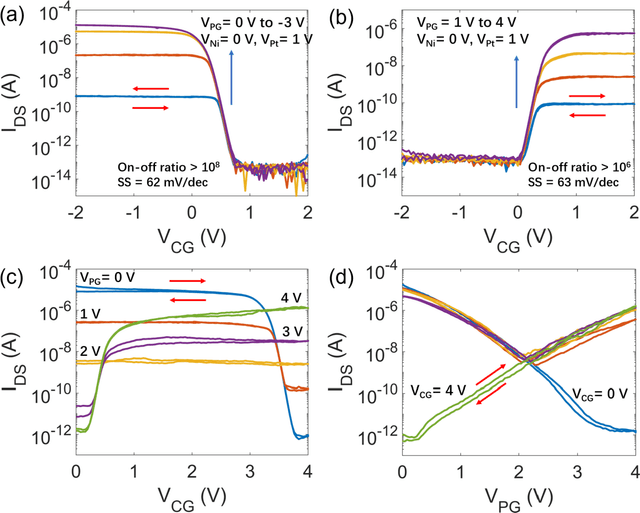

Cascaded Logic Gates Based on High-Performance Ambipolar Dual-Gate WSe2 Thin Film Transistors

May 02, 2023

Abstract:Ambipolar dual-gate transistors based on two-dimensional (2D) materials, such as graphene, carbon nanotubes, black phosphorus, and certain transition metal dichalcogenides (TMDs), enable reconfigurable logic circuits with suppressed off-state current. These circuits achieve the same logical output as CMOS with fewer transistors and offer greater flexibility in design. The primary challenge lies in the cascadability and power consumption of these logic gates with static CMOS-like connections. In this article, high-performance ambipolar dual-gate transistors based on tungsten diselenide (WSe2) are fabricated. A high on-off ratio of 10^8 and 10^6, a low off-state current of 100 to 300 fA, a negligible hysteresis, and an ideal subthreshold swing of 62 and 63 mV/dec are measured in the p- and n-type transport, respectively. For the first time, we demonstrate cascadable and cascaded logic gates using ambipolar TMD transistors with minimal static power consumption, including inverters, XOR, NAND, NOR, and buffers made by cascaded inverters. A thorough study of both the control gate and polarity gate behavior is conducted, which has previously been lacking. The noise margin of the logic gates is measured and analyzed. The large noise margin enables the implementation of VT-drop circuits, a type of logic with reduced transistor number and simplified circuit design. Finally, the speed performance of the VT-drop and other circuits built from dual-gate devices is qualitatively analyzed. This work lays the foundation for future developments in the field of ambipolar dual-gate TMD transistors, showing their potential for low-power, high-speed and more flexible logic circuits.

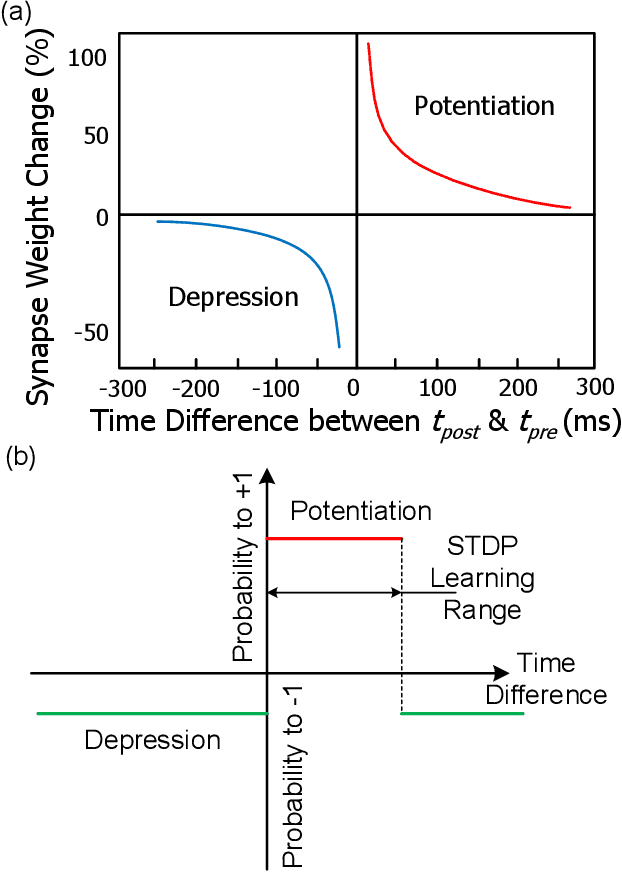

Synchronous Unsupervised STDP Learning with Stochastic STT-MRAM Switching

Dec 10, 2021

Abstract:The use of analog resistance states for storing weights in neuromorphic systems is impeded by fabrication imprecision and device stochasticity that limit the precision of synapse weights. This challenge can be resolved by emulating analog behavior with the stochastic switching of the binary states of spin-transfer torque magnetoresistive random-access memory (STT-MRAM). However, previous approaches based on STT-MRAM operate in an asynchronous manner that is difficult to implement experimentally. This paper proposes a synchronous spiking neural network system with clocked circuits that perform unsupervised learning leveraging the stochastic switching of STT-MRAM. The proposed system enables a single-layer network to achieve 90% inference accuracy on the MNIST dataset.
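The idea of emulating an analog weight with the stochastic switching of a binary device can be illustrated with a minimal software sketch. This is not the circuit from the paper: the update rule, the function names `stochastic_hebbian_step` and `train`, and the single switching probability `p_switch` are all assumptions chosen for illustration. On each clock cycle in which the output neuron fires, every binary synapse switches toward the state of its input with probability `p_switch`, so the expected weight moves gradually even though each device only ever holds 0 or 1.

```python
import random


def stochastic_hebbian_step(weights, pre_spikes, post_spike, p_switch, rng):
    """One clocked update of a row of binary synapses (illustrative rule).

    weights:    list of 0/1 device states
    pre_spikes: list of 0/1 input spikes for this clock cycle
    post_spike: 0/1 output spike for this clock cycle
    p_switch:   assumed probability that a write pulse switches a device
    rng:        random.Random instance, passed in for reproducibility
    """
    if not post_spike:
        # No output spike in this cycle: no write pulses are applied.
        return list(weights)
    new_weights = []
    for w, pre in zip(weights, pre_spikes):
        if rng.random() < p_switch:
            # Potentiate if the input was active, depress otherwise.
            new_weights.append(1 if pre else 0)
        else:
            # The write attempt failed; the device keeps its state.
            new_weights.append(w)
    return new_weights


def train(pattern, cycles, p_switch, seed=0):
    """Present the same pattern repeatedly, with the output neuron firing."""
    rng = random.Random(seed)
    weights = [0] * len(pattern)
    for _ in range(cycles):
        weights = stochastic_hebbian_step(weights, pattern, 1, p_switch, rng)
    return weights


if __name__ == "__main__":
    pattern = [1, 0, 1, 1, 0]
    print(train(pattern, cycles=200, p_switch=0.1))
```

Under this assumed rule, the probability that a given synapse has reached its target state after n firing cycles is 1 - (1 - p_switch)^n, which is what plays the role of a slowly changing analog weight.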

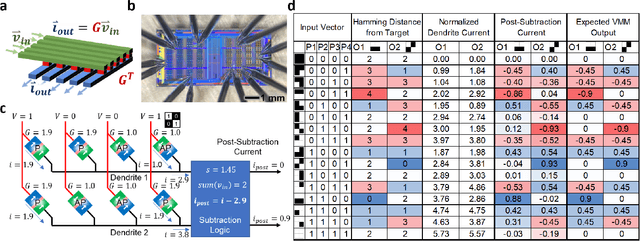

Experimental Demonstration of Neuromorphic Network with STT MTJ Synapses

Dec 09, 2021

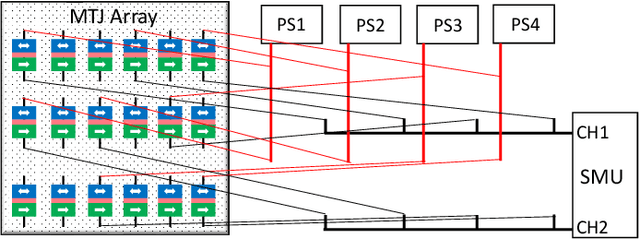

Abstract:We present the first experimental demonstration of a neuromorphic network with magnetic tunnel junction (MTJ) synapses, which performs image recognition via vector-matrix multiplication. We also simulate a large MTJ network performing MNIST handwritten digit recognition, demonstrating that MTJ crossbars can match memristor accuracy while providing increased precision, stability, and endurance.

Shape-Dependent Multi-Weight Magnetic Artificial Synapses for Neuromorphic Computing

Nov 22, 2021

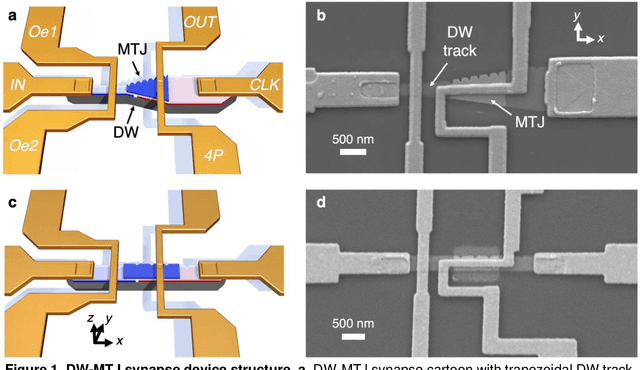

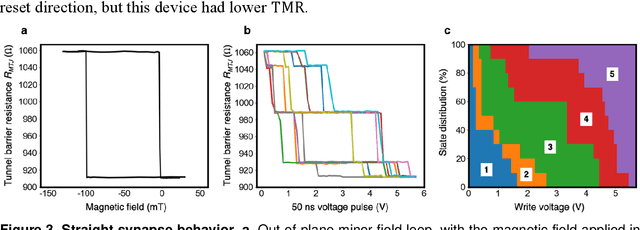

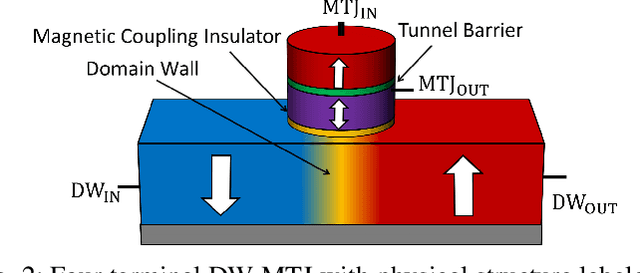

Abstract:In neuromorphic computing, artificial synapses provide a multi-weight conductance state that is set based on inputs from neurons, analogous to the brain. Additional properties of the synapse beyond multiple weights can be needed, and can depend on the application, which requires generating different synapse behaviors from the same materials. Here, we measure artificial synapses based on magnetic materials that use a magnetic tunnel junction and a magnetic domain wall. By fabricating lithographic notches in a domain wall track underneath a single magnetic tunnel junction, we achieve 4-5 stable resistance states that can be repeatably controlled electrically using spin orbit torque. We analyze the effect of geometry on the synapse behavior, showing that a trapezoidal device has asymmetric weight updates with high controllability, while a straight device has higher stochasticity, but with stable resistance levels. The device data is input into neuromorphic computing simulators to show the usefulness of application-specific synaptic functions. Implementing an artificial neural network applied on streamed Fashion-MNIST data, we show that the trapezoidal magnetic synapse can be used as a metaplastic function for efficient online learning. Implementing a convolutional neural network for CIFAR-100 image recognition, we show that the straight magnetic synapse achieves near-ideal inference accuracy, due to the stability of its resistance levels. This work shows multi-weight magnetic synapses are a feasible technology for neuromorphic computing and provides design guidelines for emerging artificial synapse technologies.

High-Speed CMOS-Free Purely Spintronic Asynchronous Recurrent Neural Network

Jul 05, 2021

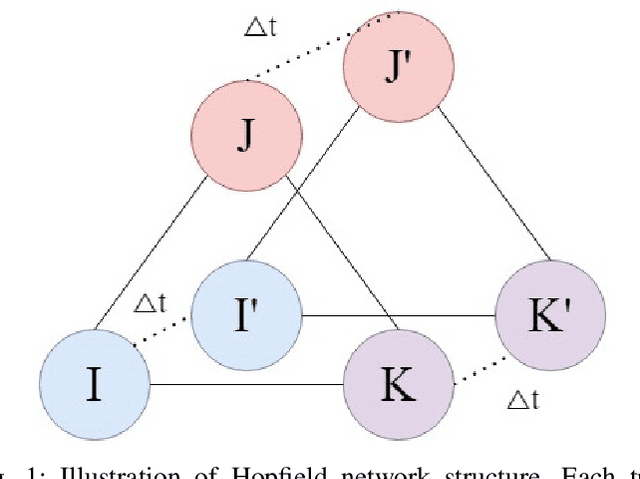

Abstract:Neuromorphic computing systems overcome the limitations of traditional von Neumann computing architectures. These computing systems can be further improved upon by using emerging technologies that are more efficient than CMOS for neural computation. Recent research has demonstrated that memristors and spintronic devices in various neural network designs boost efficiency and speed. This paper presents a biologically inspired fully spintronic neuron used in a fully spintronic Hopfield RNN. The network is used to solve tasks, and the results are compared against those of current Hopfield neuromorphic architectures which use emerging technologies.
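For readers unfamiliar with Hopfield networks, the following is a minimal software sketch of the textbook model with asynchronous (one neuron at a time) updates. It says nothing about the spintronic hardware in the paper; the function names `train_hopfield` and `recall`, the Hebbian outer-product storage rule, and the example pattern are assumptions chosen for illustration.

```python
def train_hopfield(patterns):
    """Build a weight matrix from +1/-1 patterns with the Hebbian outer-product rule."""
    n = len(patterns[0])
    weights = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    weights[i][j] += p[i] * p[j] / n
    return weights


def recall(weights, state, sweeps=5):
    """Asynchronously update neurons in index order for a fixed number of sweeps."""
    s = list(state)
    n = len(s)
    for _ in range(sweeps):
        for i in range(n):
            # Local field on neuron i from the current states of all neurons.
            field = sum(weights[i][j] * s[j] for j in range(n))
            s[i] = 1 if field >= 0 else -1
    return s


if __name__ == "__main__":
    stored = [1, -1, 1, -1, 1, -1, 1, 1]
    w = train_hopfield([stored])
    noisy = list(stored)
    noisy[2] = -noisy[2]  # corrupt one element of the stored pattern
    print(recall(w, noisy) == stored)
```

With a single stored pattern and one corrupted element, every neuron's local field still has the sign of the stored value, so the network settles back to the stored pattern within the first sweep.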

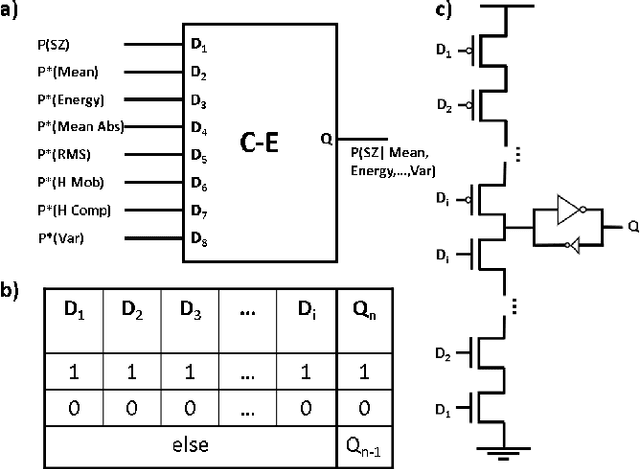

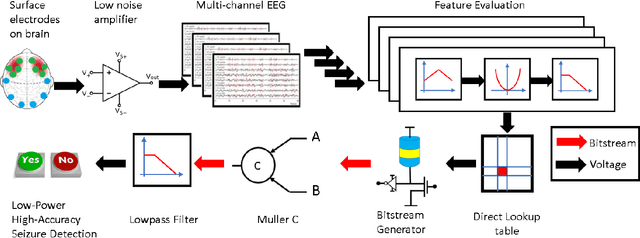

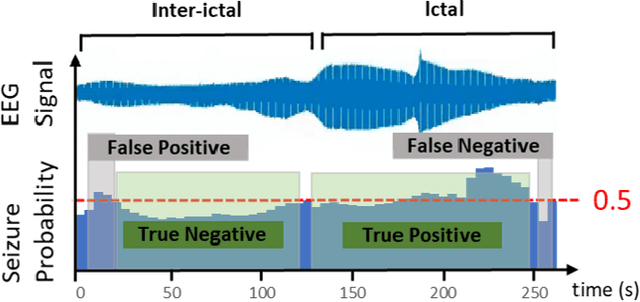

Analog Seizure Detection for Implanted Responsive Neurostimulation

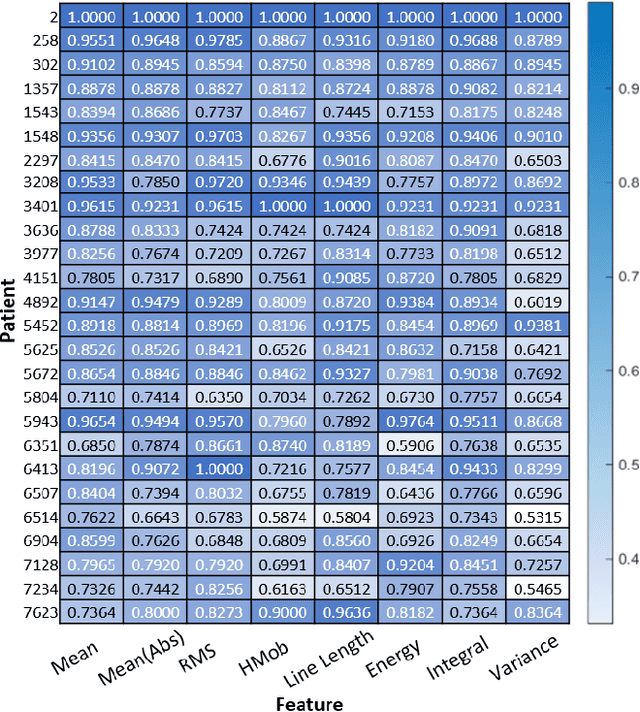

Jun 11, 2021

Abstract:Epilepsy can be treated with medication; however, 30% of epileptic patients are still drug resistant. Devices like responsive neurostimulation systems are implanted in select patients who may not be amenable to surgical resection. However, state-of-the-art devices suffer from low accuracy and high sensitivity. We propose a novel patient-specific seizure detection system based on naïve Bayesian inference using Müller C-elements. The system improves upon the current leading neurostimulation device, NeuroPace's RNS, by implementing analog signal processing for feature extraction, minimizing the power consumption compared to the digital counterpart. Preliminary simulations were performed in MATLAB, demonstrating that through integrating multiple channels and features, up to 98% detection accuracy for individual patients can be achieved. Similarly, power calculations were performed, demonstrating that the system uses 6.5 µW per channel, which when compared to the state-of-the-art NeuroPace system would increase battery life by up to 50%.

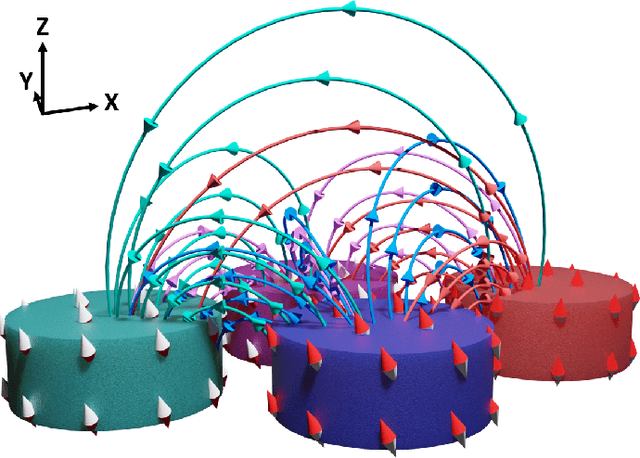

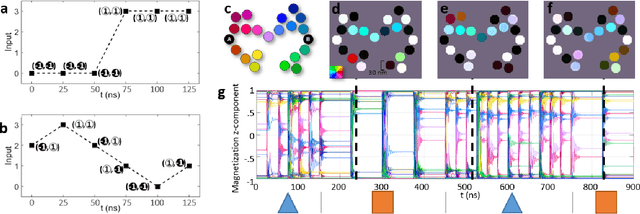

Frustrated Arrays of Nanomagnets for Efficient Reservoir Computing

Mar 16, 2021

Abstract:We simulated our nanomagnet reservoir computer (NMRC) design on benchmark tasks, demonstrating NMRC's high memory content and expressibility. In support of the feasibility of this method, we fabricated a frustrated nanomagnet reservoir layer. Using this structure, we describe a low-power, low-area system with an area-energy-delay product $10^7$ lower than conventional RC systems, that is therefore promising for size, weight, and power (SWaP) constrained applications.

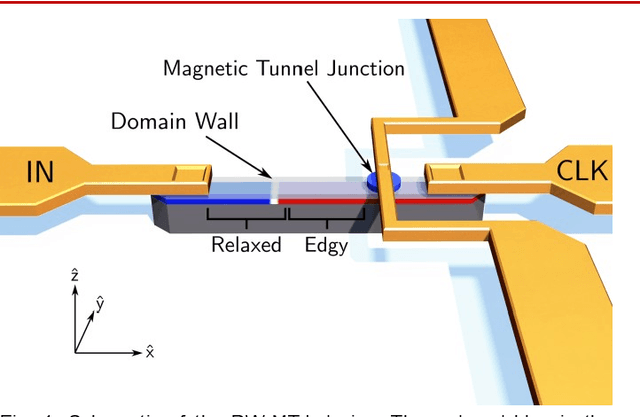

Controllable reset behavior in domain wall-magnetic tunnel junction artificial neurons for task-adaptable computation

Jan 08, 2021

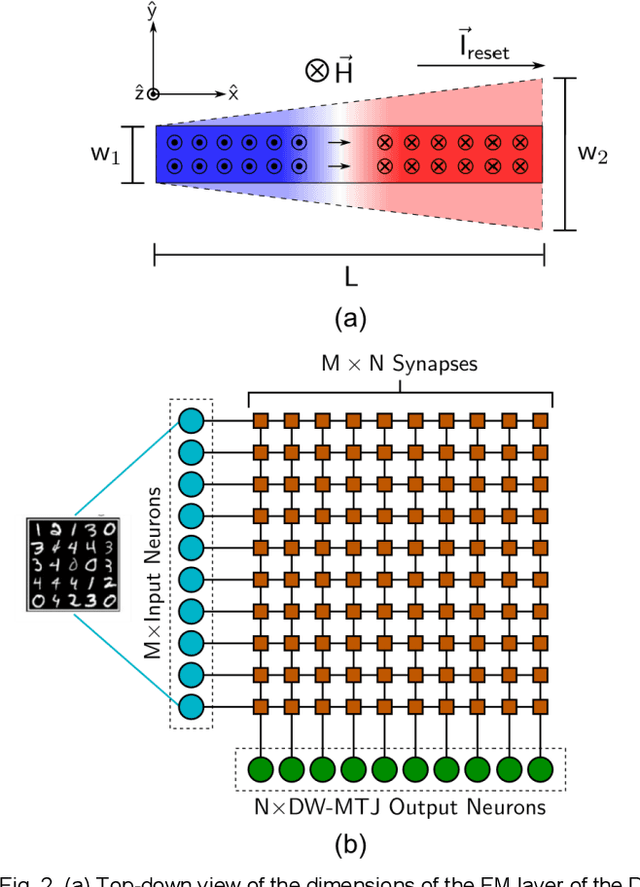

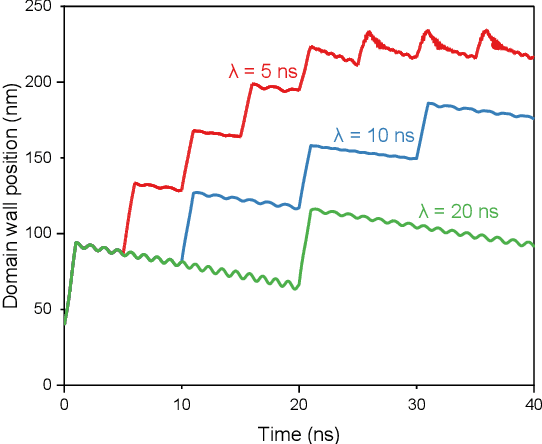

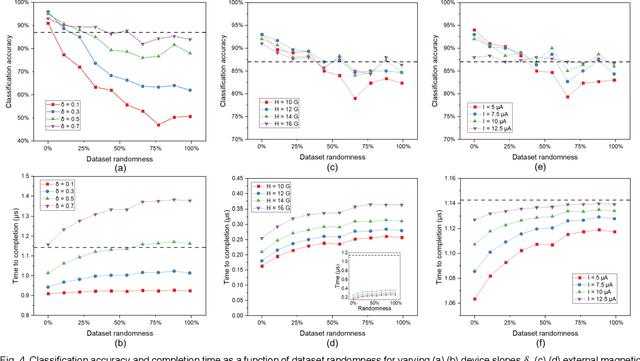

Abstract:Neuromorphic computing with spintronic devices has been of interest due to the limitations of CMOS-driven von Neumann computing. Domain wall-magnetic tunnel junction (DW-MTJ) devices have been shown to be able to intrinsically capture biological neuron behavior. Edgy-relaxed behavior, where a frequently firing neuron experiences a lower action potential threshold, may provide additional artificial neuronal functionality when executing repeated tasks. In this study, we demonstrate that this behavior can be implemented in DW-MTJ artificial neurons via three alternative mechanisms: shape anisotropy, magnetic field, and current-driven soft reset. Using micromagnetics and analytical device modeling to classify the Optdigits handwritten digit dataset, we show that edgy-relaxed behavior improves both classification accuracy and classification rate for ordered datasets while sacrificing little to no accuracy for a randomized dataset. This work establishes methods by which artificial spintronic neurons can be flexibly adapted to datasets.
