Subhabrata Majumdar

Consistency in Language Models: Current Landscape, Challenges, and Future Directions

May 01, 2025

Abstract: The hallmark of effective language use lies in consistency -- expressing similar meanings in similar contexts and avoiding contradictions. While human communication naturally demonstrates this principle, state-of-the-art language models struggle to maintain reliable consistency across different scenarios. This paper examines the landscape of consistency research in AI language systems, exploring both formal consistency (including logical rule adherence) and informal consistency (such as moral and factual coherence). We analyze current approaches to measure aspects of consistency, and identify critical research gaps in standardization of definitions, multilingual assessment, and methods to improve consistency. Our findings point to an urgent need for robust benchmarks to measure consistency, and for interdisciplinary approaches to ensure it when language models are applied to domain-specific tasks, while preserving their utility and adaptability.

Improving Consistency in Large Language Models through Chain of Guidance

Feb 21, 2025

Abstract: Consistency is a fundamental dimension of trustworthiness in Large Language Models (LLMs). For humans to be able to trust LLM-based applications, their outputs should be consistent when prompted with inputs that carry the same meaning or intent. Despite this need, there is no known mechanism to control and guide LLMs to be more consistent at inference time. In this paper, we introduce a novel alignment strategy to maximize semantic consistency in LLM outputs. Our proposal is based on Chain of Guidance (CoG), a multistep prompting technique that generates highly consistent outputs from LLMs. For closed-book question-answering (Q&A) tasks, when compared to direct prompting, the outputs generated using CoG show improved consistency. While other approaches like template-based responses and majority voting may offer alternative paths to consistency, our work focuses on exploring the potential of guided prompting. We use synthetic data sets comprised of consistent input-output pairs to fine-tune LLMs to produce consistent and correct outputs. Our fine-tuned models are more than twice as consistent compared to base models and show strong generalization capabilities by producing consistent outputs over datasets not used in the fine-tuning process.

Embedding-based classifiers can detect prompt injection attacks

Oct 29, 2024

Abstract: Large Language Models (LLMs) are seeing significant adoption in every type of organization due to their exceptional generative capabilities. However, LLMs are found to be vulnerable to various adversarial attacks, particularly prompt injection attacks, which trick them into producing harmful or inappropriate content. Adversaries execute such attacks by crafting malicious prompts to deceive the LLMs. In this paper, we propose a novel approach based on embedding-based Machine Learning (ML) classifiers to protect LLM-based applications against this severe threat. We leverage three commonly used embedding models to generate embeddings of malicious and benign prompts and utilize ML classifiers to predict whether an input prompt is malicious. Out of several traditional ML methods, we achieve the best performance with classifiers built using Random Forest and XGBoost. Our classifiers outperform state-of-the-art prompt injection classifiers available in open-source implementations, which use encoder-only neural networks.

Representation noising effectively prevents harmful fine-tuning on LLMs

May 23, 2024

Abstract: Releasing open-source large language models (LLMs) presents a dual-use risk since bad actors can easily fine-tune these models for harmful purposes. Even without the open release of weights, weight stealing and fine-tuning APIs make closed models vulnerable to harmful fine-tuning attacks (HFAs). While safety measures like preventing jailbreaks and improving safety guardrails are important, such measures can easily be reversed through fine-tuning. In this work, we propose Representation Noising (RepNoise), a defence mechanism that is effective even when attackers have access to the weights and the defender no longer has any control. RepNoise works by removing information about harmful representations such that it is difficult to recover them during fine-tuning. Importantly, our defence is also able to generalize across different subsets of harm that have not been seen during the defence process. Our method does not degrade the general capability of LLMs and retains the ability to train the model on harmless tasks. We provide empirical evidence that the effectiveness of our defence lies in its "depth": the degree to which information about harmful representations is removed across all layers of the LLM.

Semantic Consistency for Assuring Reliability of Large Language Models

Aug 17, 2023

Abstract: Large Language Models (LLMs) exhibit remarkable fluency and competence across various natural language tasks. However, recent research has highlighted their sensitivity to variations in input prompts. To deploy LLMs in a safe and reliable manner, it is crucial for their outputs to be consistent when prompted with expressions that carry the same meaning or intent. While some existing work has explored how state-of-the-art LLMs address this issue, their evaluations have been confined to assessing lexical equality of single- or multi-word answers, overlooking the consistency of generative text sequences. For a more comprehensive understanding of the consistency of LLMs in open-ended text generation scenarios, we introduce a general measure of semantic consistency, and formulate multiple versions of this metric to evaluate the performance of various LLMs. Our proposal demonstrates significantly higher consistency and stronger correlation with human evaluations of output consistency than traditional metrics based on lexical consistency. Finally, we propose a novel prompting strategy, called Ask-to-Choose (A2C), to enhance semantic consistency. When evaluated for closed-book question answering based on answer variations from the TruthfulQA benchmark, A2C increases accuracy metrics for pretrained and finetuned LLMs by up to 47%, and semantic consistency metrics for instruction-tuned models by up to 7-fold.
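The abstract does not spell out the metric itself, so the following is only a minimal sketch of one generic way to score consistency across outputs produced from paraphrased prompts: the mean pairwise agreement of the outputs. The function names (`pairwise_consistency`, `token_jaccard`) are hypothetical, and the default similarity is a purely lexical token-overlap stand-in; a semantic measure (for example, embedding similarity or an entailment score) would be passed in through the `sim` parameter instead.

```python
from itertools import combinations
from typing import Callable, Sequence


def token_jaccard(a: str, b: str) -> float:
    """Stand-in similarity (an assumption): Jaccard overlap of lowercase tokens."""
    tokens_a, tokens_b = set(a.lower().split()), set(b.lower().split())
    if not tokens_a and not tokens_b:
        return 1.0  # two empty outputs are treated as identical
    return len(tokens_a & tokens_b) / len(tokens_a | tokens_b)


def pairwise_consistency(
    outputs: Sequence[str],
    sim: Callable[[str, str], float] = token_jaccard,
) -> float:
    """Mean similarity over all unordered pairs of outputs.

    `outputs` holds one model response per paraphrase of the same question.
    Returns 1.0 when there are fewer than two outputs (nothing to disagree with).
    """
    pairs = list(combinations(outputs, 2))
    if not pairs:
        return 1.0
    return sum(sim(a, b) for a, b in pairs) / len(pairs)


if __name__ == "__main__":
    responses = ["the sky is blue", "the sky is blue", "sky blue"]
    print(round(pairwise_consistency(responses), 3))
```

Keeping the similarity function pluggable mirrors the distinction the abstract draws: the aggregation stays the same, while the choice of `sim` decides whether the score reflects lexical or semantic agreement.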

Network Security Modelling with Distributional Data

Nov 24, 2022

Abstract: We investigate the detection of botnet command and control (C2) hosts in massive IP traffic using machine learning methods. To this end, we use NetFlow data -- the industry standard for monitoring of IP traffic -- and ML models using two sets of features: conventional NetFlow variables and distributional features based on NetFlow variables. In addition to using static summaries of NetFlow features, we use quantiles of their IP-level distributions as input features in predictive models to predict whether an IP belongs to known botnet families. These models are used to develop intrusion detection systems to predict traffic traces identified with malicious attacks. The results are validated by matching predictions to existing denylists of published malicious IP addresses and deep packet inspection. The usage of our proposed novel distributional features, combined with techniques that enable modelling complex input feature spaces, results in highly accurate predictions by our trained models.
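As a rough illustration of what "quantiles of IP-level distributions" could look like as model inputs, the sketch below groups per-flow values by IP address and summarizes each group by its quantile cut points. The record layout (`(ip, value)` pairs), the function name `quantile_features`, and the choice of quartiles are all assumptions made for illustration; the abstract does not specify them.

```python
import statistics
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def quantile_features(
    flows: Iterable[Tuple[str, float]], n: int = 4
) -> Dict[str, List[float]]:
    """Summarize each IP's flow values by its n-quantile cut points.

    `flows` yields hypothetical (ip, value) pairs, e.g. bytes per flow.
    Each IP maps to n - 1 cut points (quartiles when n == 4).
    """
    values_by_ip: Dict[str, List[float]] = defaultdict(list)
    for ip, value in flows:
        values_by_ip[ip].append(value)

    features: Dict[str, List[float]] = {}
    for ip, values in values_by_ip.items():
        if len(values) < 2:
            # A single observation has a degenerate distribution:
            # every cut point is that one value.
            features[ip] = [float(values[0])] * (n - 1)
        else:
            features[ip] = statistics.quantiles(values, n=n, method="inclusive")
    return features


if __name__ == "__main__":
    sample = [("10.0.0.1", b) for b in [5, 1, 3, 2, 4]] + [("10.0.0.2", 7)]
    print(quantile_features(sample))
```

The resulting per-IP vectors could then be concatenated with conventional static summaries before being passed to a classifier.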

Measuring Reliability of Large Language Models through Semantic Consistency

Nov 10, 2022

Abstract: While large pretrained language models (PLMs) demonstrate incredible fluency and performance on many natural language tasks, recent work has shown that well-performing PLMs are very sensitive to what prompts are fed into them. Even when prompts are semantically identical, language models may give very different answers. When considering safe and trustworthy deployments of PLMs, we would like their outputs to be consistent under prompts that mean the same thing or convey the same intent. While some work has looked into how state-of-the-art PLMs address this need, it has been limited to evaluating lexical equality of single- or multi-word answers and does not address consistency of generative text sequences. In order to understand consistency of PLMs under text generation settings, we develop a measure of semantic consistency that allows the comparison of open-ended text outputs. We implement several versions of this consistency metric to evaluate the performance of a number of PLMs on paraphrased versions of questions in the TruthfulQA dataset. We find that our proposed metrics are considerably more consistent than traditional metrics embodying lexical consistency, and also correlate with human evaluation of output consistency to a higher degree.

Towards Algorithmic Fairness in Space-Time: Filling in Black Holes

Nov 08, 2022

Abstract: New technologies and the availability of geospatial data have drawn attention to spatio-temporal biases present in society. For example: the COVID-19 pandemic highlighted disparities in the availability of broadband service and its role in the digital divide; the environmental justice movement in the United States has raised awareness of health implications for minority populations stemming from historical redlining practices; and studies have found varying quality and coverage in the collection and sharing of open-source geospatial data. Despite the extensive literature on machine learning (ML) fairness, few algorithmic strategies have been proposed to mitigate such biases. In this paper we highlight the unique challenges for quantifying and addressing spatio-temporal biases, through the lens of use cases presented in the scientific literature and media. We envision a roadmap of ML strategies that need to be developed or adapted to quantify and overcome these challenges -- including transfer learning, active learning, and reinforcement learning techniques. Further, we discuss the potential role of ML in providing guidance to policy makers on issues related to spatial fairness.

Feature Selection using e-values

Jun 16, 2022

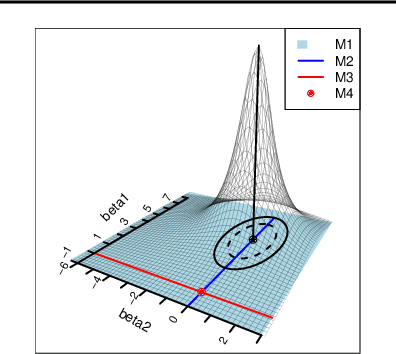

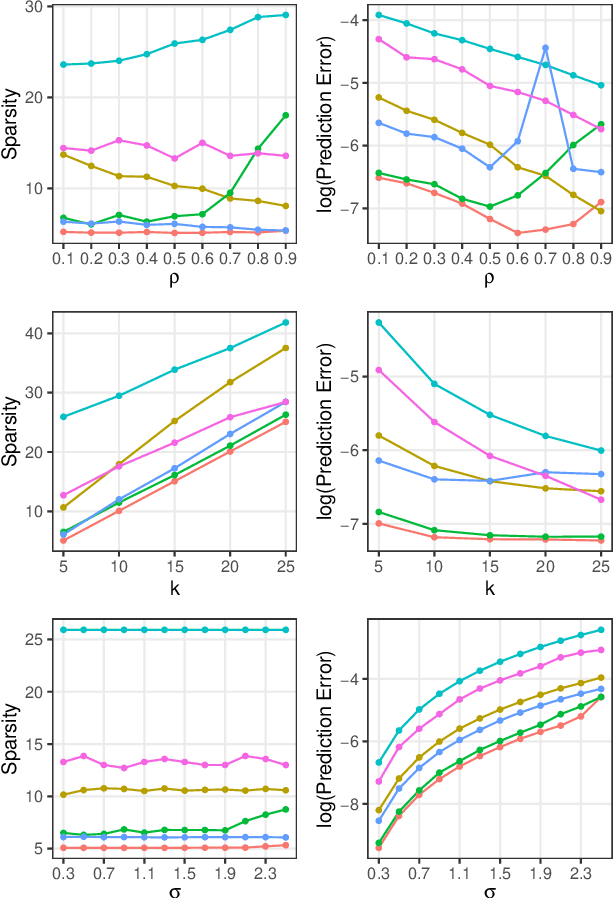

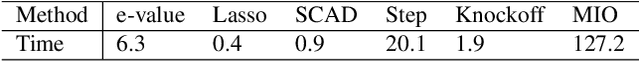

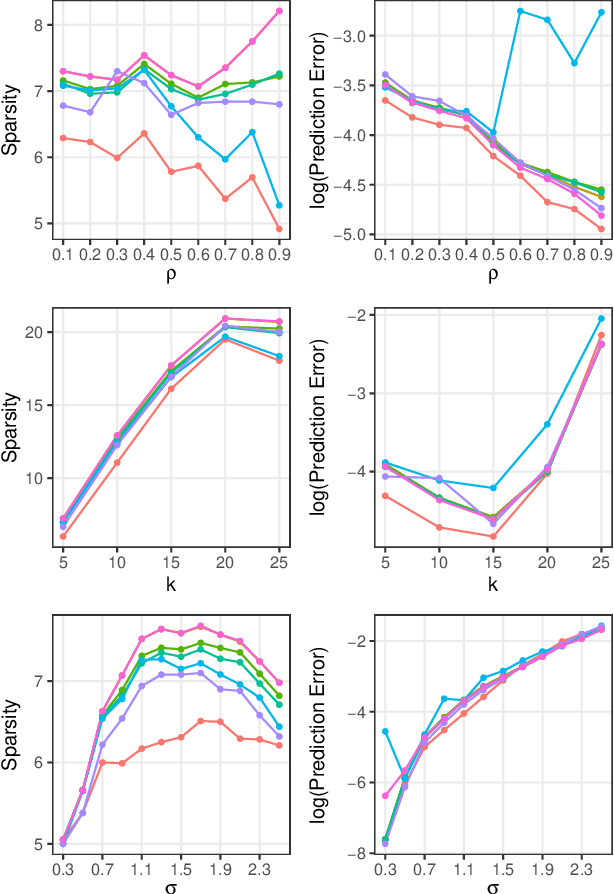

Abstract: In the context of supervised parametric models, we introduce the concept of e-values. An e-value is a scalar quantity that represents the proximity of the sampling distribution of parameter estimates in a model trained on a subset of features to that of the model trained on all features (i.e. the full model). Under general conditions, a rank ordering of e-values separates models that contain all essential features from those that do not. The e-values are applicable to a wide range of parametric models. We use data depths and a fast resampling-based algorithm to implement a feature selection procedure using e-values, providing consistency results. For a $p$-dimensional feature space, this procedure requires fitting only the full model and evaluating $p+1$ models, as opposed to the traditional requirement of fitting and evaluating $2^p$ models. Through experiments across several model settings and synthetic and real datasets, we establish the e-values method as a promising general alternative to existing model-specific methods of feature selection.
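To make the gap between $p+1$ and $2^p$ concrete, the small sketch below simply tabulates both counts for a few feature-space sizes. It only illustrates the arithmetic stated in the abstract; it does not implement e-values, and the helper name `models_to_evaluate` is hypothetical.

```python
def models_to_evaluate(p: int):
    """Return (p + 1, 2 ** p): the two model counts quoted in the abstract
    for a p-dimensional feature space."""
    if p < 0:
        raise ValueError("p must be non-negative")
    return p + 1, 2 ** p


if __name__ == "__main__":
    for p in (5, 10, 20):
        linear, exhaustive = models_to_evaluate(p)
        print(f"p={p}: p+1 = {linear}, 2^p = {exhaustive}")
```

Already at $p = 20$, the exhaustive search covers 1,048,576 candidate models against 21 evaluations.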

Scaling New Peaks: A Viewership-centric Approach to Automated Content Curation

Aug 09, 2021

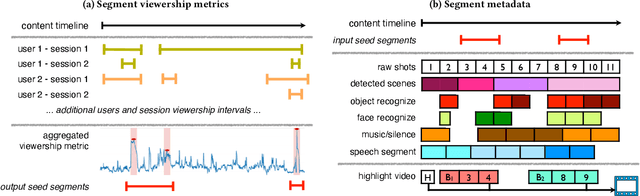

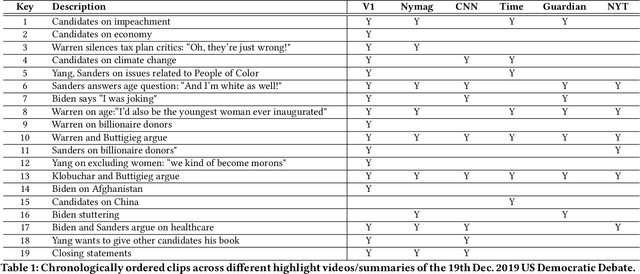

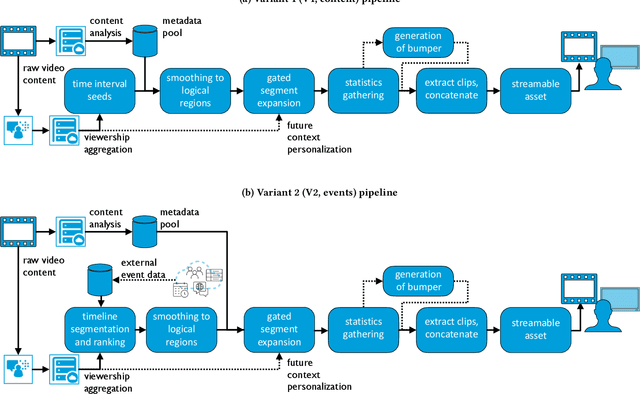

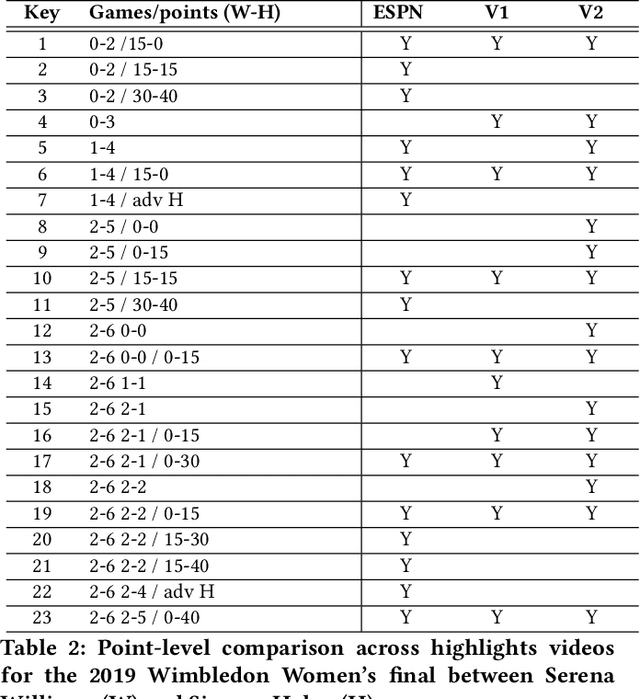

Abstract: Summarizing video content is important for video streaming services to engage the user in a limited time span. To this end, current methods involve manual curation or using passive interest cues to annotate potential high-interest segments to form the basis of summarized videos; these methods are costly and unreliable. We propose a viewership-driven, automated method that accommodates a range of segment identification goals. Using satellite television viewership data as a source of ground truth for viewer interest, we apply statistical anomaly detection on a timeline of viewership metrics to identify 'seed' segments of high viewer interest. These segments are post-processed using empirical rules and several sources of content metadata, e.g. shot boundaries, adding in personalization aspects to produce the final highlights video. To demonstrate the flexibility of our approach, we present two case studies, on the United States Democratic Presidential Debate on 19th December 2019, and Wimbledon Women's Final 2019. We perform qualitative comparisons with their publicly available highlights, as well as early vs. late viewership comparisons for insights into possible media and social influence on viewing behavior.
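The abstract does not name the anomaly detector used, so the sketch below substitutes a simple z-score rule purely for illustration: time steps whose viewership sits well above the timeline mean are flagged, and contiguous flagged steps are merged into 'seed' segments. The function name `seed_segments` and the threshold value are assumptions.

```python
import statistics
from typing import List, Sequence, Tuple


def seed_segments(
    viewership: Sequence[float], z_threshold: float = 2.0
) -> List[Tuple[int, int]]:
    """Return (start, end) index pairs (inclusive) of contiguous time steps
    whose z-score exceeds `z_threshold`.

    A z-score rule is a stand-in assumption, not the paper's stated method.
    """
    mean = statistics.fmean(viewership)
    std = statistics.pstdev(viewership)
    if std == 0:
        return []  # a flat timeline has no unusually high points

    segments: List[Tuple[int, int]] = []
    start = None
    for i, value in enumerate(viewership):
        is_high = (value - mean) / std > z_threshold
        if is_high and start is None:
            start = i  # a new segment begins
        elif not is_high and start is not None:
            segments.append((start, i - 1))  # the segment ended one step ago
            start = None
    if start is not None:
        segments.append((start, len(viewership) - 1))
    return segments


if __name__ == "__main__":
    timeline = [100.0] * 20
    timeline[8] = timeline[9] = 300.0  # a two-step spike in viewership
    print(seed_segments(timeline))
```

In a pipeline like the one described, segments found this way would then be adjusted with content metadata such as shot boundaries before being assembled into a highlights video.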
