Stephen Bates

Conformal Prediction for Generative Models via Adaptive Cluster-Based Density Estimation

Jan 29, 2026

Abstract:Conditional generative models map input variables to complex, high-dimensional distributions, enabling realistic sample generation in a diverse set of domains. A critical challenge with these models is the absence of calibrated uncertainty, which undermines trust in individual outputs for high-stakes applications. To address this issue, we propose a systematic conformal prediction approach tailored to conditional generative models, leveraging density estimation on model-generated samples. We introduce a novel method called CP4Gen, which utilizes clustering-based density estimation to construct prediction sets that are less sensitive to outliers, more interpretable, and of lower structural complexity than existing methods. Extensive experiments on synthetic datasets and real-world applications, including climate emulation tasks, demonstrate that CP4Gen consistently achieves superior performance in terms of prediction set volume and structural simplicity. Our approach offers practitioners a powerful tool for uncertainty estimation associated with conditional generative models, particularly in scenarios demanding rigorous and interpretable prediction sets.

Thought calibration: Efficient and confident test-time scaling

May 23, 2025

Abstract:Reasoning large language models achieve impressive test-time scaling by thinking for longer, but this performance gain comes at significant compute cost. Directly limiting test-time budget hurts overall performance, but not all problems are equally difficult. We propose thought calibration to decide dynamically when thinking can be terminated. To calibrate our decision rule, we view a language model's growing body of thoughts as a nested sequence of reasoning trees, where the goal is to identify the point at which novel reasoning plateaus. We realize this framework through lightweight probes that operate on top of the language model's hidden representations, which are informative of both the reasoning structure and overall consistency of response. Based on three reasoning language models and four datasets, thought calibration preserves model performance with up to a 60% reduction in thinking tokens on in-distribution data, and up to 20% in out-of-distribution data.

Conformal Prediction with Corrupted Labels: Uncertain Imputation and Robust Re-weighting

May 07, 2025

Abstract:We introduce a framework for robust uncertainty quantification in situations where labeled training data are corrupted, through noisy or missing labels. We build on conformal prediction, a statistical tool for generating prediction sets that cover the test label with a pre-specified probability. The validity of conformal prediction, however, holds under the i.i.d. assumption, which does not hold in our setting due to the corruptions in the data. To account for this distribution shift, the privileged conformal prediction (PCP) method proposed leveraging privileged information (PI) -- additional features available only during training -- to re-weight the data distribution, yielding valid prediction sets under the assumption that the weights are accurate. In this work, we analyze the robustness of PCP to inaccuracies in the weights. Our analysis indicates that PCP can still yield valid uncertainty estimates even when the weights are poorly estimated. Furthermore, we introduce uncertain imputation (UI), a new conformal method that does not rely on weight estimation. Instead, we impute corrupted labels in a way that preserves their uncertainty. Our approach is supported by theoretical guarantees and validated empirically on both synthetic and real benchmarks. Finally, we show that these techniques can be integrated into a triply robust framework, ensuring statistically valid predictions as long as at least one underlying method is valid.
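For readers unfamiliar with the baseline this abstract builds on, the following is a minimal sketch of standard split conformal prediction for regression under the usual exchangeability assumption. It is not the PCP or UI method from the paper; the function name `split_conformal_interval`, the absolute-residual score, and the argument names are illustrative assumptions.

```python
import numpy as np


def split_conformal_interval(cal_pred, cal_y, test_pred, alpha=0.1):
    """Sketch of split conformal intervals using absolute-residual scores.

    cal_pred, cal_y: predictions and true labels on a held-out calibration set.
    test_pred: point predictions for new inputs.
    alpha: target miscoverage level (intervals aim for 1 - alpha coverage).
    """
    scores = np.abs(np.asarray(cal_y, dtype=float) - np.asarray(cal_pred, dtype=float))
    n = scores.size
    # Finite-sample correction: take the ceil((n + 1)(1 - alpha))-th smallest score.
    k = int(np.ceil((n + 1) * (1 - alpha)))
    if k > n:
        # Too few calibration points for this alpha: the interval is the whole line.
        q = np.inf
    else:
        q = np.sort(scores)[k - 1]
    test_pred = np.asarray(test_pred, dtype=float)
    return test_pred - q, test_pred + q
```

The coverage guarantee of this construction relies on calibration and test points being exchangeable, which is the assumption that corrupted labels break.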

Lipschitz-Driven Inference: Bias-corrected Confidence Intervals for Spatial Linear Models

Feb 09, 2025

Abstract:Linear models remain ubiquitous in modern spatial applications - including climate science, public health, and economics - due to their interpretability, speed, and reproducibility. While practitioners generally report a form of uncertainty, popular spatial uncertainty quantification methods do not jointly handle model misspecification and distribution shift - despite both being essentially always present in spatial problems. In the present paper, we show that existing methods for constructing confidence (or credible) intervals in spatial linear models fail to provide correct coverage due to unaccounted-for bias. In contrast to classical methods that rely on an i.i.d. assumption that is inappropriate in spatial problems, in the present work we instead make a spatial smoothness (Lipschitz) assumption. We are then able to propose a new confidence-interval construction that accounts for bias in the estimation procedure. We demonstrate that our new method achieves nominal coverage via both theory and experiments. Code to reproduce experiments is available at https://github.com/DavidRBurt/Lipschitz-Driven-Inference.

Contextual Online Decision Making with Infinite-Dimensional Functional Regression

Jan 30, 2025

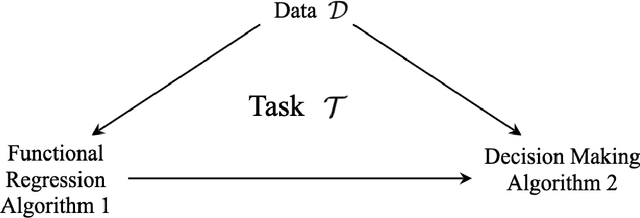

Abstract:Contextual sequential decision-making problems play a crucial role in machine learning, encompassing a wide range of downstream applications such as bandits, sequential hypothesis testing and online risk control. These applications often require different statistical measures, including expectation, variance and quantiles. In this paper, we provide a universal admissible algorithm framework for dealing with all kinds of contextual online decision-making problems that directly learns the whole underlying unknown distribution instead of focusing on individual statistics. This is much more difficult because the dimension of the regression is uncountably infinite, and any existing linear contextual bandits algorithm will result in infinite regret. To overcome this issue, we propose an efficient infinite-dimensional functional regression oracle for contextual cumulative distribution functions (CDFs), where each data point is modeled as a combination of context-dependent CDF basis functions. Our analysis reveals that the decay rate of the eigenvalue sequence of the design integral operator governs the regression error rate and, consequently, the utility regret rate. Specifically, when the eigenvalue sequence exhibits a polynomial decay of order $\frac{1}{\gamma}\ge 1$, the utility regret is bounded by $\tilde{\mathcal{O}}\Big(T^{\frac{3\gamma+2}{2(\gamma+2)}}\Big)$. By setting $\gamma=0$, this recovers the existing optimal regret rate for contextual bandits with finite-dimensional regression and is optimal under a stronger exponential decay assumption. Additionally, we provide a numerical method to compute the eigenvalue sequence of the integral operator, enabling the practical implementation of our framework.
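As a quick check of the rate quoted in this abstract (our arithmetic, not a statement taken from the paper), substituting $\gamma=0$ into the exponent gives the familiar square-root rate, while $\gamma=1$, the slowest polynomial decay permitted by $\frac{1}{\gamma}\ge 1$, gives $T^{5/6}$:

$$\frac{3\gamma+2}{2(\gamma+2)}\bigg|_{\gamma=0}=\frac{2}{4}=\frac{1}{2}\;\Rightarrow\;\tilde{\mathcal{O}}\big(T^{1/2}\big),\qquad \frac{3\gamma+2}{2(\gamma+2)}\bigg|_{\gamma=1}=\frac{5}{6}\;\Rightarrow\;\tilde{\mathcal{O}}\big(T^{5/6}\big).$$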

Prediction-Powered Inference with Imputed Covariates and Nonuniform Sampling

Jan 30, 2025

Abstract:Machine learning models are increasingly used to produce predictions that serve as input data in subsequent statistical analyses. For example, computer vision predictions of economic and environmental indicators based on satellite imagery are used in downstream regressions; similarly, language models are widely used to approximate human ratings and opinions in social science research. However, failure to properly account for errors in the machine learning predictions renders standard statistical procedures invalid. Prior work uses what we call the Predict-Then-Debias estimator to give valid confidence intervals when machine learning algorithms impute missing variables, assuming a small complete sample from the population of interest. We expand the scope by introducing bootstrap confidence intervals that apply when the complete data is a nonuniform (i.e., weighted, stratified, or clustered) sample and to settings where an arbitrary subset of features is imputed. Importantly, the method can be applied to many settings without requiring additional calculations. We prove that these confidence intervals are valid under no assumptions on the quality of the machine learning model and are no wider than the intervals obtained by methods that do not use machine learning predictions.

Sharp Results for Hypothesis Testing with Risk-Sensitive Agents

Dec 21, 2024

Abstract:Statistical protocols are often used for decision-making involving multiple parties, each with their own incentives, private information, and ability to influence the distributional properties of the data. We study a game-theoretic version of hypothesis testing in which a statistician, also known as a principal, interacts with strategic agents that can generate data. The statistician seeks to design a testing protocol with controlled error, while the data-generating agents, guided by their utility and prior information, choose whether or not to opt in based on expected utility maximization. This strategic behavior affects the data observed by the statistician and, consequently, the associated testing error. We analyze this problem for general concave and monotonic utility functions and prove an upper bound on the Bayes false discovery rate (FDR). Underlying this bound is a form of prior elicitation: we show how an agent's choice to opt in implies a certain upper bound on their prior null probability. Our FDR bound is unimprovable in a strong sense, achieving equality at a single point for an individual agent and at any countable number of points for a population of agents. We also demonstrate that our testing protocols exhibit a desirable maximin property when the principal's utility is considered. To illustrate the qualitative predictions of our theory, we examine the effects of risk aversion, reward stochasticity, and signal-to-noise ratio, as well as the implications for the Food and Drug Administration's testing protocols.

Theoretical Foundations of Conformal Prediction

Nov 18, 2024

Abstract:This book is about conformal prediction and related inferential techniques that build on permutation tests and exchangeability. These techniques are useful in a diverse array of tasks, including hypothesis testing and providing uncertainty quantification guarantees for machine learning systems. Much of the current interest in conformal prediction is due to its ability to integrate into complex machine learning workflows, solving the problem of forming prediction sets without any assumptions on the form of the data generating distribution. Since contemporary machine learning algorithms have generally proven difficult to analyze directly, conformal prediction's main appeal is its ability to provide formal, finite-sample guarantees when paired with such methods. The goal of this book is to teach the reader about the fundamental technical arguments that arise when researching conformal prediction and related questions in distribution-free inference. Many of these proof strategies, especially the more recent ones, are scattered among research papers, making it difficult for researchers to understand where to look, which results are important, and how exactly the proofs work. We hope to bridge this gap by curating what we believe to be some of the most important results in the literature and presenting their proofs in a unified language, with illustrations, and with an eye towards pedagogy.

Quantifying uncertainty in area and regression coefficient estimation from remote sensing maps

Jul 18, 2024

Abstract:Remote sensing map products are used to obtain estimates of environmental quantities, such as deforested area or the effect of conservation zones on deforestation. However, the quality of map products varies, and - because maps are outputs of complex machine learning algorithms that take in a variety of remotely sensed variables as inputs - errors are difficult to characterize. Without capturing the biases that may be present, naive calculations of population-level estimates from such maps are statistically invalid. In this paper, we compare several uncertainty quantification methods - stratification, Olofsson area estimation method, and prediction-powered inference - that combine a small amount of randomly sampled ground truth data with large-scale remote sensing map products to generate statistically valid estimates. Applying these methods across four remote sensing use cases in area and regression coefficient estimation, we find that they result in estimates that are more reliable than naively using the map product as if it were 100% accurate and have lower uncertainty than using only the ground truth and ignoring the map product. Prediction-powered inference uses ground truth data to correct for bias in the map product estimate and (unlike stratification) does not require us to choose a map product before sampling. This is the first work to (1) apply prediction-powered inference to remote sensing estimation tasks, and (2) perform uncertainty quantification on remote sensing regression coefficients without assumptions on the structure of map product errors. To improve the utility of machine learning-generated remote sensing maps for downstream applications, we recommend that map producers provide a holdout ground truth dataset to be used for calibration in uncertainty quantification alongside their maps.
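The bias-correction idea described in this abstract can be illustrated for the simplest case, estimating a population mean. The sketch below follows the standard prediction-powered mean estimator with a normal-approximation interval; it is not the paper's pipeline, and the function name `ppi_mean`, its arguments, and the default `z=1.96` are illustrative assumptions.

```python
import numpy as np


def ppi_mean(y_labeled, pred_labeled, pred_unlabeled, z=1.96):
    """Sketch of a prediction-powered estimate of a mean.

    y_labeled: ground-truth values on the small labeled sample.
    pred_labeled: map/model predictions on that same labeled sample.
    pred_unlabeled: map/model predictions on the large unlabeled sample.
    z: normal critical value (1.96 gives an approximate 95% interval).
    """
    y_labeled = np.asarray(y_labeled, dtype=float)
    pred_labeled = np.asarray(pred_labeled, dtype=float)
    pred_unlabeled = np.asarray(pred_unlabeled, dtype=float)

    # Rectifier: how far the predictions are from the truth on labeled data.
    rectifier = y_labeled - pred_labeled
    # Naive prediction-based mean, corrected by the average rectifier.
    estimate = pred_unlabeled.mean() + rectifier.mean()
    # Variance combines the two independent samples.
    se = np.sqrt(
        pred_unlabeled.var(ddof=1) / pred_unlabeled.size
        + rectifier.var(ddof=1) / rectifier.size
    )
    return estimate, (estimate - z * se, estimate + z * se)
```

When the predictions are accurate, the rectifier has small variance and the interval is driven by the large unlabeled sample; when they are poor, the interval widens but the estimate stays centered on the truth.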

Data-Adaptive Tradeoffs among Multiple Risks in Distribution-Free Prediction

Mar 28, 2024

Abstract:Decision-making pipelines are generally characterized by tradeoffs among various risk functions. It is often desirable to manage such tradeoffs in a data-adaptive manner. As we demonstrate, if this is done naively, state-of-the-art uncertainty quantification methods can lead to significant violations of putative risk guarantees. To address this issue, we develop methods that permit valid control of risk when threshold and tradeoff parameters are chosen adaptively. Our methodology supports monotone and nearly-monotone risks, but otherwise makes no distributional assumptions. To illustrate the benefits of our approach, we carry out numerical experiments on synthetic data and the large-scale vision dataset MS-COCO.
