Sriram Baireddy

Conditional Synthetic Food Image Generation

Mar 16, 2023

Abstract: Generative Adversarial Networks (GANs) have been widely investigated for image synthesis based on their powerful representation learning ability. In this work, we explore StyleGAN and its application to synthetic food image generation. Despite the impressive performance of GANs for natural image generation, food images suffer from high intra-class diversity and inter-class similarity, resulting in overfitting and visual artifacts in synthetic images. Therefore, we aim to explore the capability and improve the performance of GAN methods for food image generation. Specifically, we first choose StyleGAN3 as the baseline method to generate synthetic food images and analyze the performance. Then, we identify two issues that can cause performance degradation on food images during the training phase: (1) inter-class feature entanglement when training on multiple food classes and (2) loss of high-resolution detail during image downsampling. To address both issues, we propose to train one food category at a time to avoid feature entanglement and leverage image patches cropped from high-resolution datasets to retain fine details. We evaluate our method on the Food-101 dataset and show improved quality of generated synthetic food images compared with the baseline. Finally, we demonstrate the great potential of improving the performance of downstream tasks, such as food image classification, by including high-quality synthetic training samples for data augmentation.
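The patch-based idea in this abstract (cropping patches from high-resolution images rather than downsampling whole images) can be illustrated with a minimal sketch. The abstract does not say how patches are selected, so uniform random cropping is an assumption here, and the function name `random_patches` and its parameters are hypothetical.

```python
import numpy as np

def random_patches(image, patch_size, n_patches, rng=None):
    """Crop n_patches square patches from an H x W x C image.

    Assumption: patches are sampled uniformly at random. The abstract
    only says patches are cropped from high-resolution images.
    """
    rng = np.random.default_rng() if rng is None else rng
    height, width = image.shape[:2]
    if patch_size > min(height, width):
        raise ValueError("patch_size exceeds image dimensions")
    patches = []
    for _ in range(n_patches):
        # Pick the top-left corner so the patch stays inside the image.
        top = int(rng.integers(0, height - patch_size + 1))
        left = int(rng.integers(0, width - patch_size + 1))
        patches.append(image[top:top + patch_size, left:left + patch_size])
    return np.stack(patches)
```

Each patch keeps the original pixel resolution, which is the property the abstract relies on to retain fine detail.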

High-Resolution UAV Image Generation for Sorghum Panicle Detection

May 08, 2022

Abstract: The number of panicles (or heads) of Sorghum plants is an important phenotypic trait for plant development and grain yield estimation. The use of Unmanned Aerial Vehicles (UAVs) makes it possible to collect and analyze Sorghum images on a large scale. Deep learning can provide methods for estimating phenotypic traits from UAV images but requires a large amount of labeled data. The lack of training data due to the labor-intensive ground truthing of UAV images causes a major bottleneck in developing methods for Sorghum panicle detection and counting. In this paper, we present an approach that uses synthetic training images from generative adversarial networks (GANs) for data augmentation to enhance the performance of Sorghum panicle detection and counting. Our method can generate synthetic high-resolution UAV RGB images with panicle labels by using image-to-image translation GANs with a limited ground truth dataset of real UAV RGB images. The results show improvements in panicle detection and counting using our data augmentation approach.

Leaf Tar Spot Detection Using RGB Images

May 02, 2022

Abstract: Tar spot disease is a fungal disease that appears as a series of black circular spots containing spores on corn leaves. Tar spot has proven to be an impactful disease in terms of reducing crop yield. To quantify disease progression, experts usually have to visually phenotype leaves from the plant. This process is very time-consuming and is difficult to incorporate into any high-throughput phenotyping system. Deep neural networks could provide quick, automated tar spot detection with sufficient ground truth. However, manually labeling tar spots in images to serve as ground truth is also tedious and time-consuming. In this paper, we first describe an approach that uses automated image analysis tools to generate ground truth images that are then used for training a Mask R-CNN. We show that a Mask R-CNN can be used effectively to detect tar spots in close-up images of leaf surfaces. We additionally show that the Mask R-CNN can also be used for in-field images of whole leaves to capture the number of tar spots and the area of the leaf infected by the disease.

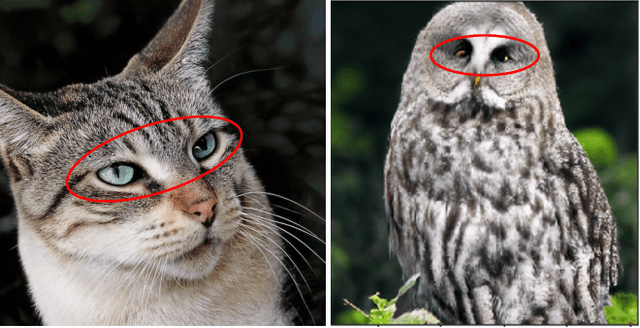

An Overview of Recent Work in Media Forensics: Methods and Threats

Apr 26, 2022

Abstract: In this paper, we review recent work in media forensics for digital images, video, audio (specifically speech), and documents. For each data modality, we discuss synthesis and manipulation techniques that can be used to create and modify digital media. We then review technological advancements for detecting and quantifying such manipulations. Finally, we consider open issues and suggest directions for future research.

Improving Building Segmentation for Off-Nadir Satellite Imagery

Sep 08, 2021

Abstract: Automatic building segmentation is an important task for satellite imagery analysis and scene understanding. Most existing segmentation methods focus on the case where the images are taken from directly overhead (i.e., low off-nadir/viewing angle). These methods often fail to provide accurate results on satellite images with larger off-nadir angles due to the higher noise level and lower spatial resolution. In this paper, we propose a method that is able to provide accurate building segmentation for satellite imagery captured from a large range of off-nadir angles. Based on Bayesian deep learning, we explicitly design our method to learn the data noise via aleatoric and epistemic uncertainty modeling. Satellite image metadata (e.g., off-nadir angle and ground sample distance) is also used in our model to further improve the result. We show that with uncertainty modeling and metadata injection, our method achieves better performance than the baseline method, especially for noisy images taken from large off-nadir angles.

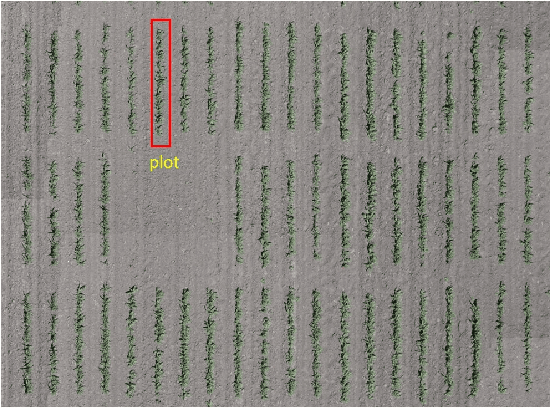

Field-Based Plot Extraction Using UAV RGB Images

Sep 01, 2021

Abstract: Unmanned Aerial Vehicles (UAVs) have become popular for use in plant phenotyping of field-based crops, such as maize and sorghum, due to their ability to acquire high-resolution data over field trials. Field experiments, which may comprise thousands of plants, are planted according to experimental designs to evaluate varieties or management practices. For many types of phenotyping analysis, we examine smaller groups of plants known as "plots." In this paper, we propose a new plot extraction method that segments a UAV image into plots. We demonstrate that our method achieves higher plot extraction accuracy than existing approaches.

Panicle Counting in UAV Images For Estimating Flowering Time in Sorghum

Jul 15, 2021

Abstract: Flowering time (time to flower after planting) is important for estimating plant development and grain yield for many crops including sorghum. Flowering time of sorghum can be approximated by counting the number of panicles (clusters of grains on a branch) across multiple dates. Traditional manual methods for panicle counting are time-consuming and tedious. In this paper, we propose a method for estimating flowering time and rapidly counting panicles using RGB images acquired by an Unmanned Aerial Vehicle (UAV). We evaluate three different deep neural network structures for panicle counting and location. Experimental results demonstrate that our method is able to accurately detect panicles and estimate sorghum flowering time.

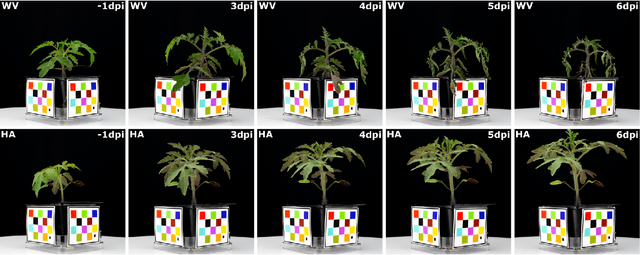

Image-Based Plant Wilting Estimation

May 27, 2021

Abstract: Many plants become limp or droop through heat, loss of water, or disease. This is also known as wilting. In this paper, we examine plant wilting caused by bacterial infection. In particular, we want to design a metric for wilting based on images of the plant. A quantifiable wilting metric will be useful in studying bacterial wilt and identifying resistance genes. Since there is no standard way to estimate wilting, it is common to use ad hoc visual scores. This is very subjective and requires expert knowledge of the plants and the disease mechanism. Our solution consists of using various wilting metrics acquired from RGB images of the plants. We also designed several experiments to demonstrate that our metrics are effective at estimating wilting in plants.

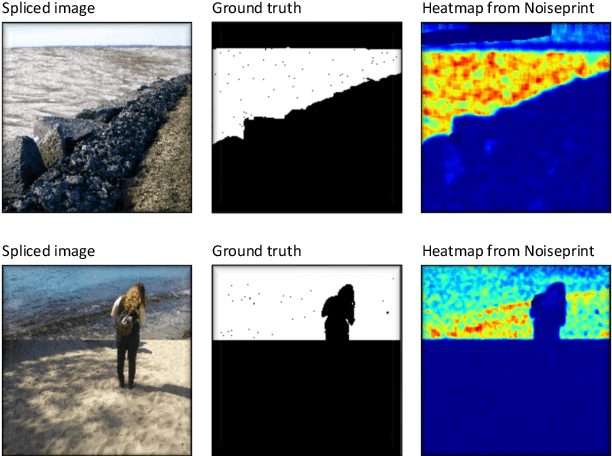

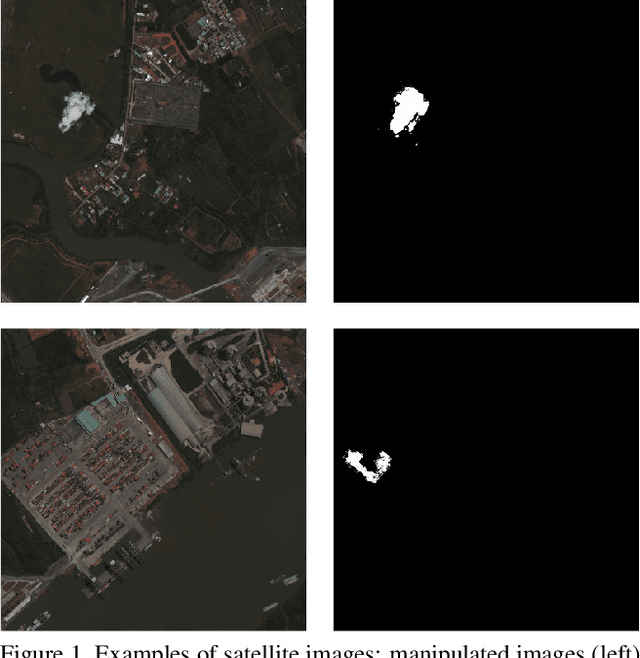

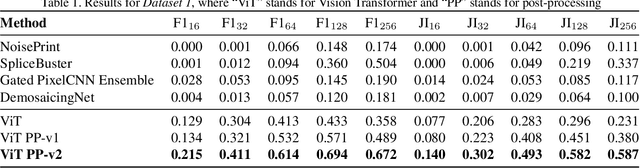

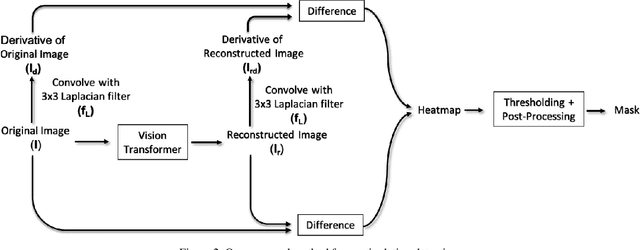

Manipulation Detection in Satellite Images Using Vision Transformer

May 13, 2021

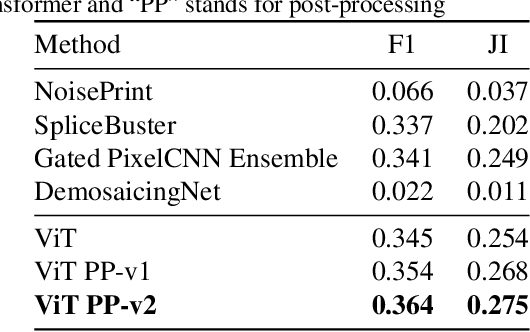

Abstract: A growing number of commercial satellite companies provide easily accessible satellite imagery. Overhead imagery is used by numerous industries including agriculture, forestry, natural disaster analysis, and meteorology. Satellite images, just as any other images, can be tampered with using image manipulation tools. Manipulation detection methods created for images captured by "consumer cameras" tend to fail when used on satellite images due to the differences in image sensors, image acquisition, and processing. In this paper, we propose an unsupervised technique that uses a Vision Transformer to detect spliced areas within satellite images. We introduce a new dataset which includes manipulated satellite images that contain spliced objects. We show that our proposed approach performs better than existing unsupervised splicing detection techniques.

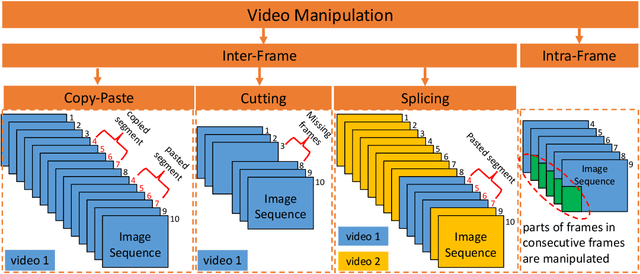

Forensic Analysis of Video Files Using Metadata

May 13, 2021

Abstract: The unprecedented ease and ability to manipulate video content has led to a rapid spread of manipulated media. The availability of video editing tools has greatly increased in recent years, allowing one to easily generate photo-realistic alterations. Such manipulations can leave traces in the metadata embedded in video files. This metadata information can be used to determine video manipulations, the brand of the video recording device, the type of video editing tool, and other important evidence. In this paper, we focus on the metadata contained in the popular MP4 video wrapper/container. We describe our metadata extraction method, which uses the MP4 tree structure. Our approach for analyzing the video metadata produces a more compact representation. We describe how we construct features from the metadata and then use dimensionality reduction and nearest neighbor classification for forensic analysis of a video file. Our approach allows one to visually inspect the distribution of metadata features and make decisions. The experimental results confirm that the performance of our approach surpasses other methods.
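The last stage described in this abstract, dimensionality reduction followed by nearest neighbor classification, can be sketched as follows. The abstract does not name the reduction technique or describe how features are built from the MP4 tree, so this sketch assumes PCA and assumes the metadata has already been turned into numeric feature vectors. All function names are hypothetical.

```python
import numpy as np

def fit_pca(features, n_components):
    """Return the feature mean and the top principal directions.

    Assumption: PCA stands in for the unspecified dimensionality
    reduction step mentioned in the abstract.
    """
    mean = features.mean(axis=0)
    # Rows of vt are principal directions, sorted by singular value.
    _, _, vt = np.linalg.svd(features - mean, full_matrices=False)
    return mean, vt[:n_components]

def project(features, mean, components):
    """Map feature vectors into the low-dimensional PCA space."""
    return (features - mean) @ components.T

def nearest_neighbor_predict(train_points, train_labels, query_points):
    """Label each query with the label of its closest training point."""
    diffs = query_points[:, None, :] - train_points[None, :, :]
    distances = np.linalg.norm(diffs, axis=2)
    return train_labels[np.argmin(distances, axis=1)]
```

Projecting to two components also yields points that can be drawn in a scatter plot, which matches the abstract's remark about visually inspecting the distribution of metadata features.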
