Melba Crawford

Investigation of Hierarchical Spectral Vision Transformer Architecture for Classification of Hyperspectral Imagery

Sep 14, 2024

Abstract: In the past three years, there has been significant interest in hyperspectral imagery (HSI) classification using vision Transformers for analysis of remotely sensed data. Previous research predominantly focused on the empirical integration of convolutional neural networks (CNNs) to augment the network's capability to extract local feature information. Yet the theoretical justification for vision Transformers outperforming CNN architectures in HSI classification remains an open question. To address this issue, a unified hierarchical spectral vision Transformer architecture, specifically tailored for HSI classification, is investigated. In this streamlined yet effective vision Transformer architecture, multiple mixer modules are each integrated separately. These include the CNN-mixer, which executes convolution operations; the spatial self-attention (SSA)-mixer and channel self-attention (CSA)-mixer, both of which are adaptations of classical self-attention blocks; and hybrid models such as the SSA+CNN-mixer and CSA+CNN-mixer, which merge convolution with self-attention operations. This integration facilitates the development of a broad spectrum of vision Transformer-based models tailored for HSI classification. For the training process, a comprehensive analysis is performed that contrasts classical CNN models with their vision Transformer-based counterparts, with particular attention to disturbance robustness and the distribution of the largest eigenvalue of the Hessian. From the evaluations conducted on various mixer models rooted in the unified architecture, it is concluded that the unique strength of vision Transformers can be attributed to their overarching architecture, rather than being exclusively reliant on individual multi-head self-attention (MSA) components.

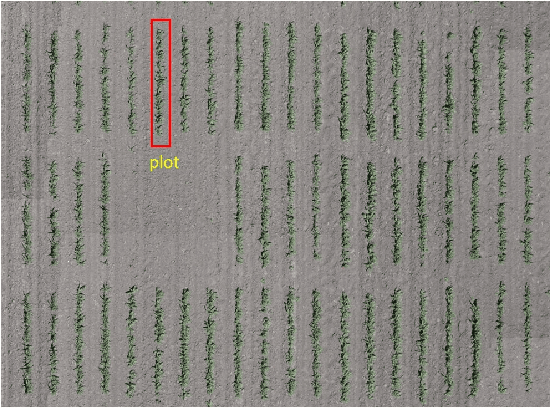

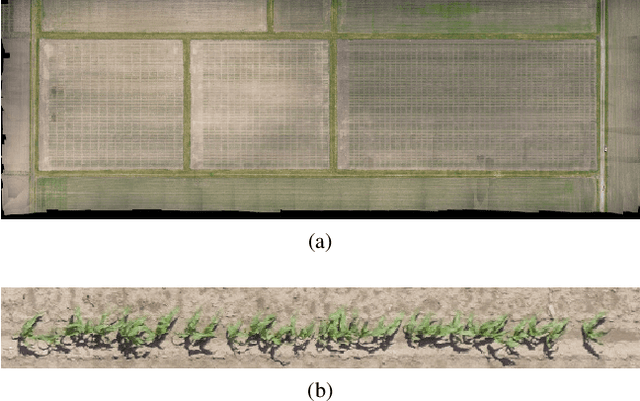

Field-Based Plot Extraction Using UAV RGB Images

Sep 01, 2021

Abstract: Unmanned Aerial Vehicles (UAVs) have become popular for plant phenotyping of field-based crops, such as maize and sorghum, due to their ability to acquire high-resolution data over field trials. Field experiments, which may comprise thousands of plants, are planted according to experimental designs to evaluate varieties or management practices. For many types of phenotyping analysis, we examine smaller groups of plants known as "plots." In this paper, we propose a new plot extraction method that segments a UAV image into plots. We demonstrate that our method achieves higher plot extraction accuracy than existing approaches.

Panicle Counting in UAV Images For Estimating Flowering Time in Sorghum

Jul 15, 2021

Abstract: Flowering time (time to flower after planting) is important for estimating plant development and grain yield for many crops including sorghum. Flowering time of sorghum can be approximated by counting the number of panicles (clusters of grains on a branch) across multiple dates. Traditional manual methods for panicle counting are time-consuming and tedious. In this paper, we propose a method for estimating flowering time and rapidly counting panicles using RGB images acquired by an Unmanned Aerial Vehicle (UAV). We evaluate three different deep neural network structures for panicle counting and location. Experimental results demonstrate that our method is able to accurately detect panicles and estimate sorghum flowering time.

Deep Transfer Learning For Plant Center Localization

Apr 29, 2020

Abstract: Plant phenotyping focuses on the measurement of plant characteristics throughout the growing season, typically with the goal of evaluating genotypes for plant breeding. Estimating plant location is important for identifying genotypes that have low emergence, which is also related to the environment and management practices such as fertilizer applications. The goal of this paper is to investigate methods that estimate plant locations for a field-based crop using RGB aerial images captured using Unmanned Aerial Vehicles (UAVs). Deep learning approaches provide promising capability for locating plants observed in RGB images, but they require large quantities of labeled data (ground truth) for training. Applying a deep learning architecture fine-tuned on a single field or a single type of crop to fields in other geographic areas or to other crops may not produce good results. Generating ground truth for each new field is labor-intensive and tedious. In this paper, we propose a method for estimating plant centers by transferring an existing model to a new scenario using limited ground truth data. We describe the use of transfer learning using a model fine-tuned for a single field or a single type of plant on a varied set of similar crops and fields. We show that transfer learning provides promising results for detecting plant locations.
