Saurabh Prasad

mViSE: A Visual Search Engine for Analyzing Multiplex IHC Brain Tissue Images

Dec 12, 2025

Abstract:Whole-slide multiplex imaging of brain tissue generates massive information-dense images that are challenging to analyze and require custom software. We present an alternative query-driven programming-free strategy using a multiplex visual search engine (mViSE) that learns the multifaceted brain tissue chemoarchitecture, cytoarchitecture, and myeloarchitecture. Our divide-and-conquer strategy organizes the data into panels of related molecular markers and uses self-supervised learning to train a multiplex encoder for each panel with explicit visual confirmation of successful learning. Multiple panels can be combined to process visual queries for retrieving similar communities of individual cells or multicellular niches using information-theoretic methods. The retrievals can be used for diverse purposes, including tissue exploration, delineating brain regions and cortical cell layers, and profiling and comparing brain regions, all without computer programming. We validated mViSE's ability to retrieve single cells, proximal cell pairs, and tissue patches, and to delineate cortical layers, brain regions, and sub-regions. mViSE is provided as an open-source QuPath plug-in.

Weak-to-Strong Generalization Enables Fully Automated De Novo Training of Multi-head Mask-RCNN Model for Segmenting Densely Overlapping Cell Nuclei in Multiplex Whole-slice Brain Images

Dec 12, 2025

Abstract:We present a weak-to-strong generalization methodology for fully automated training of a multi-head extension of the Mask-RCNN method with efficient channel attention for reliable segmentation of overlapping cell nuclei in multiplex cyclic immunofluorescent (IF) whole-slide images (WSI), and present evidence for pseudo-label correction and coverage expansion, the key phenomena underlying weak-to-strong generalization. This method can learn to segment de novo a new class of images from a new instrument and/or a new imaging protocol without the need for human annotations. We also present metrics for automated self-diagnosis of segmentation quality in production environments, where human visual proofreading of massive WSI images is unaffordable. Our method was benchmarked against five current widely used methods and showed a significant improvement. The code, sample WSI images, and high-resolution segmentation results are provided in open form for community adoption and adaptation.

A Sensor Agnostic Domain Generalization Framework for Leveraging Geospatial Foundation Models: Enhancing Semantic Segmentation via Synergistic Pseudo-Labeling and Generative Learning

May 02, 2025

Abstract:Remote sensing enables a wide range of critical applications such as land cover and land use mapping, crop yield prediction, and environmental monitoring. Advances in satellite technology have expanded remote sensing datasets, yet high-performance segmentation models remain dependent on extensive labeled data, challenged by annotation scarcity and variability across sensors, illumination, and geography. Domain adaptation offers a promising solution to improve model generalization. This paper introduces a domain generalization approach to leveraging emerging geospatial foundation models by combining soft-alignment pseudo-labeling with source-to-target generative pre-training. We further provide new mathematical insights into MAE-based generative learning for domain-invariant feature learning. Experiments with hyperspectral and multispectral remote sensing datasets confirm our method's effectiveness in enhancing adaptability and segmentation.

Foundation Models and Adaptive Feature Selection: A Synergistic Approach to Video Question Answering

Dec 12, 2024

Abstract:This paper tackles the intricate challenge of video question-answering (VideoQA). Despite notable progress, current methods fall short of effectively integrating questions with video frames and semantic object-level abstractions to create question-aware video representations. We introduce Local-Global Question Aware Video Embedding (LGQAVE), which incorporates three major innovations to integrate multi-modal knowledge better and emphasize semantic visual concepts relevant to specific questions. LGQAVE moves beyond traditional ad-hoc frame sampling by utilizing a cross-attention mechanism that precisely identifies the most relevant frames concerning the questions. It captures the dynamics of objects within these frames using distinct graphs, grounding them in question semantics with the miniGPT model. These graphs are processed by a question-aware dynamic graph transformer (Q-DGT), which refines the outputs to develop nuanced global and local video representations. An additional cross-attention module integrates these local and global embeddings to generate the final video embeddings, which a language model uses to generate answers. Extensive evaluations across multiple benchmarks demonstrate that LGQAVE significantly outperforms existing models in delivering accurate multi-choice and open-ended answers.
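The question-guided frame selection described in this abstract can be illustrated with a minimal sketch. It assumes a single attention head with no learned projections, which is a simplification and not the LGQAVE architecture itself; the function name `select_frames` and the embedding shapes are hypothetical.

```python
import numpy as np

def select_frames(question, frames, k):
    """Rank frames by scaled dot-product attention against a question embedding.

    question: (d,) embedding of the question (hypothetical input).
    frames:   (n, d) one embedding per video frame (hypothetical input).
    Returns the indices of the k highest-weighted frames in temporal order,
    together with the full attention weight vector.
    """
    d = question.shape[-1]
    logits = frames @ question / np.sqrt(d)   # one attention score per frame
    logits = logits - logits.max()            # stabilise the softmax
    weights = np.exp(logits) / np.exp(logits).sum()
    top = np.sort(np.argsort(weights)[-k:])   # keep temporal order of the winners
    return top, weights

# Toy usage: frames 1 and 2 align best with the question direction.
question = np.array([1.0, 0.0])
frames = np.array([[0.0, 1.0], [3.0, 0.0], [1.0, 0.0], [-1.0, 0.0]])
top, weights = select_frames(question, frames, k=2)
```

In contrast to uniform or ad-hoc sampling, the selected indices here depend on the question, which is the property the abstract emphasises.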

Investigation of Hierarchical Spectral Vision Transformer Architecture for Classification of Hyperspectral Imagery

Sep 14, 2024

Abstract:In the past three years, there has been significant interest in hyperspectral imagery (HSI) classification using vision Transformers for analysis of remotely sensed data. Previous research predominantly focused on the empirical integration of convolutional neural networks (CNNs) to augment the network's capability to extract local feature information. Yet, the theoretical justification for vision Transformers out-performing CNN architectures in HSI classification remains a question. To address this issue, a unified hierarchical spectral vision Transformer architecture, specifically tailored for HSI classification, is investigated. In this streamlined yet effective vision Transformer architecture, multiple mixer modules are strategically integrated separately. These include the CNN-mixer, which executes convolution operations; the spatial self-attention (SSA)-mixer and channel self-attention (CSA)-mixer, both of which are adaptations of classical self-attention blocks; and hybrid models such as the SSA+CNN-mixer and CSA+CNN-mixer, which merge convolution with self-attention operations. This integration facilitates the development of a broad spectrum of vision Transformer-based models tailored for HSI classification. In terms of the training process, a comprehensive analysis is performed, contrasting classical CNN models and vision Transformer-based counterparts, with particular attention to disturbance robustness and the distribution of the largest eigenvalue of the Hessian. From the evaluations conducted on various mixer models rooted in the unified architecture, it is concluded that the unique strength of vision Transformers can be attributed to their overarching architecture, rather than being exclusively reliant on individual multi-head self-attention (MSA) components.
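The abstract above refers to the largest eigenvalue of the Hessian of the training loss. The abstract does not say how the authors estimate it; one common approach, sketched here as an assumption, is power iteration on Hessian-vector products approximated by central finite differences of the gradient. The names `hvp` and `top_hessian_eigenvalue` are hypothetical, and the toy quadratic loss stands in for a real network loss.

```python
import numpy as np

def hvp(grad_fn, w, v, eps=1e-4):
    """Approximate the Hessian-vector product H(w) @ v by central differences."""
    return (grad_fn(w + eps * v) - grad_fn(w - eps * v)) / (2.0 * eps)

def top_hessian_eigenvalue(grad_fn, w, v0, iters=200):
    """Power iteration: converges to the eigenvalue of largest magnitude,
    provided v0 has a nonzero component along the dominant eigenvector."""
    v = v0 / np.linalg.norm(v0)
    lam = 0.0
    for _ in range(iters):
        hv = hvp(grad_fn, w, v)
        lam = float(v @ hv)            # Rayleigh quotient for the current v
        norm = np.linalg.norm(hv)
        if norm == 0.0:                # v lies in the null space; stop
            break
        v = hv / norm
    return lam

# Toy usage: loss 0.5 * w^T A w has gradient A @ w and Hessian A.
A = np.diag([1.0, 2.0, 5.0])
lam = top_hessian_eigenvalue(lambda w: A @ w, np.ones(3), np.ones(3))
```

For a real model, `grad_fn` would be replaced by the gradient of the loss with respect to the flattened parameters, and an automatic-differentiation Hessian-vector product would normally replace the finite-difference approximation.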

Anomaly Detection in Satellite Videos using Diffusion Models

May 25, 2023

Abstract:Anomaly detection is the identification of unexpected events. Real-time detection of extreme events such as wildfires, cyclones, or floods using satellite data has become crucial for disaster management. Although several earth-observing satellites provide information about disasters, satellites in the geostationary orbit provide data at intervals as frequent as every minute, effectively creating a video from space. Many techniques have been proposed to identify anomalies in surveillance videos; however, the available datasets do not have dynamic behavior, so we discuss an anomaly framework that can work on very high-frequency datasets to find very fast-moving anomalies. In this work, we present a diffusion model which does not need any motion component to capture the fast-moving anomalies and outperforms the other baseline methods.

Attacks as Defenses: Designing Robust Audio CAPTCHAs Using Attacks on Automatic Speech Recognition Systems

Mar 10, 2022

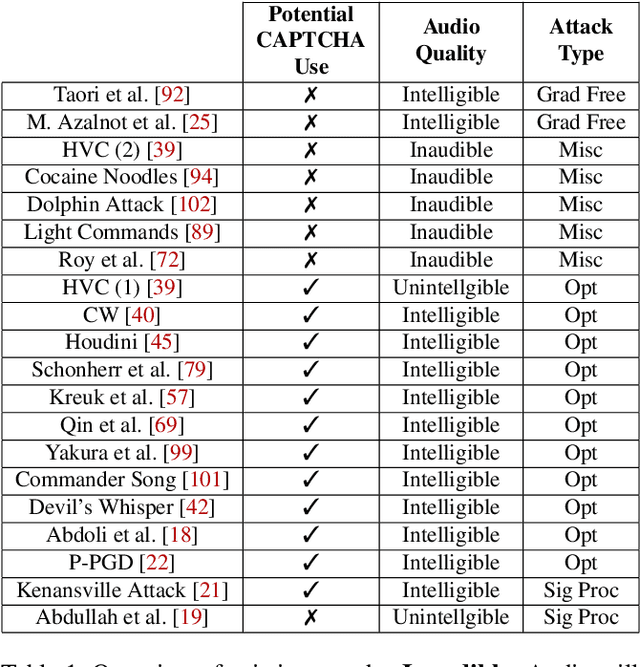

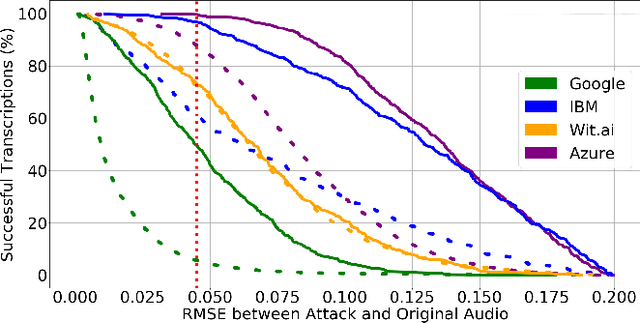

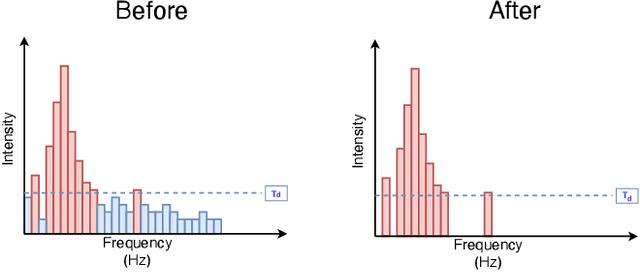

Abstract:Audio CAPTCHAs are supposed to provide a strong defense for online resources; however, advances in speech-to-text mechanisms have rendered these defenses ineffective. Audio CAPTCHAs cannot simply be abandoned, as they are specifically named by the W3C as important enablers of accessibility. Accordingly, demonstrably more robust audio CAPTCHAs are important to the future of a secure and accessible Web. We look to recent literature on attacks on speech-to-text systems for inspiration for the construction of robust, principle-driven audio defenses. We begin by comparing 20 recent attack papers, classifying and measuring their suitability to serve as the basis of new "robust to transcription" but "easy for humans to understand" CAPTCHAs. After showing that none of these attacks alone are sufficient, we propose a new mechanism that is both comparatively intelligible (evaluated through a user study) and hard to automatically transcribe (i.e., $P({\rm transcription}) = 4 \times 10^{-5}$). Finally, we demonstrate that our audio samples have a high probability of being detected as CAPTCHAs when given to speech-to-text systems ($P({\rm evasion}) = 1.77 \times 10^{-4}$). In so doing, we not only demonstrate a CAPTCHA that is approximately four orders of magnitude more difficult to crack, but that such systems can be designed based on the insights gained from attack papers using the differences between the ways that humans and computers process audio.

Advances in Deep Learning for Hyperspectral Image Analysis--Addressing Challenges Arising in Practical Imaging Scenarios

Jul 16, 2020

Abstract:Deep neural networks have proven to be very effective for computer vision tasks, such as image classification, object detection, and semantic segmentation -- these are primarily applied to color imagery and video. In recent years, there has been an emergence of deep learning algorithms being applied to hyperspectral and multispectral imagery for remote sensing and biomedicine tasks. These multi-channel images come with their own unique set of challenges that must be addressed for effective image analysis. Challenges include limited ground truth (annotation is expensive and extensive labeling is often not feasible) and the high-dimensional nature of the data (each pixel is represented by hundreds of spectral bands), even though large amounts of unlabeled data are available and multiple sensors/sources that observe the same scene can potentially be leveraged. In this chapter, we will review recent advances in the community that leverage deep learning for robust hyperspectral image analysis despite these unique challenges -- specifically, we will review unsupervised, semi-supervised and active learning approaches to image analysis, as well as transfer learning approaches for multi-source (e.g. multi-sensor, or multi-temporal) image analysis.

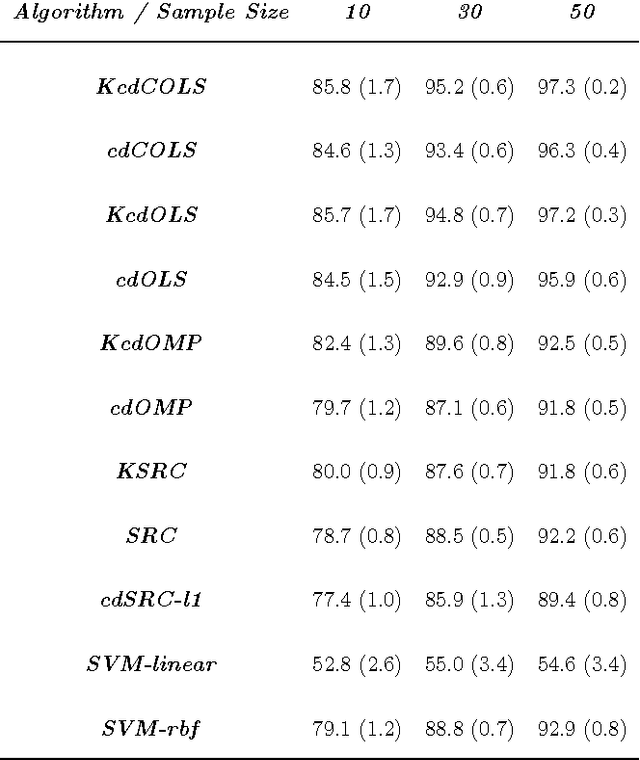

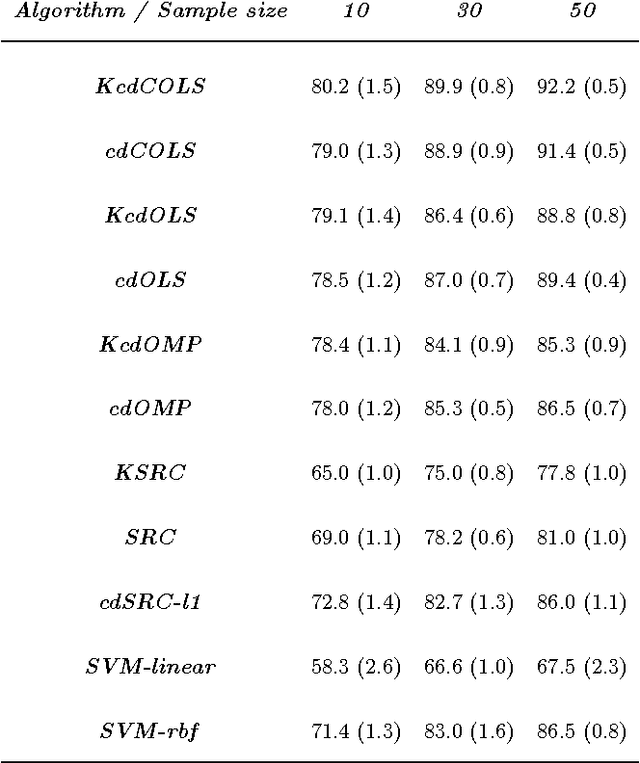

Sparse Representation-Based Classification: Orthogonal Least Squares or Orthogonal Matching Pursuit?

Jul 18, 2016

Abstract:Sparse representation of signals has received significant attention in recent years. Based on these developments, a sparse representation-based classification (SRC) has been proposed for a variety of classification and related tasks, including face recognition. Recently, a class dependent variant of SRC was proposed to overcome the limitations of SRC for remote sensing image classification. Traditionally, greedy pursuit based methods such as orthogonal matching pursuit (OMP) are used for sparse coefficient recovery due to their simplicity as well as low time-complexity. However, orthogonal least squares (OLS) has not yet been widely used in classifiers that exploit the sparse representation properties of data. Since OLS produces lower signal reconstruction error than OMP under similar conditions, we hypothesize that more accurate signal estimation will further improve the classification performance of classifiers that exploit the sparsity of data. In this paper, we present a classification method based on OLS, which implements OLS in a classwise manner to perform the classification. We also develop and present its kernelized variant to handle nonlinearly separable data. Based on two real-world benchmarking hyperspectral datasets, we demonstrate that class dependent OLS based methods outperform several baseline methods including traditional SRC and the support vector machine classifier.
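The difference between the two greedy pursuits, and the class-wise residual rule, can be sketched as follows. This is a minimal illustration under stated assumptions, not the paper's implementation: the function names are hypothetical, dictionary columns are assumed to be nonzero, the sparsity level `k` must not exceed the number of atoms per class, and the OLS step uses a brute-force search over candidate atoms instead of an efficient recursive update.

```python
import numpy as np

def _residual(D, idx, y):
    """Residual of y after least-squares projection onto the atoms D[:, idx]."""
    coef, *_ = np.linalg.lstsq(D[:, idx], y, rcond=None)
    return y - D[:, idx] @ coef

def omp_select(D, y, k):
    """OMP: pick the atom most correlated with the current residual."""
    idx, r = [], y.copy()
    for _ in range(k):
        scores = np.abs(D.T @ r) / np.linalg.norm(D, axis=0)
        if idx:
            scores[idx] = -np.inf          # never reselect an atom
        idx.append(int(np.argmax(scores)))
        r = _residual(D, idx, y)
    return idx, r

def ols_select(D, y, k):
    """OLS: pick the atom whose inclusion gives the smallest new residual."""
    idx, r = [], y.copy()
    for _ in range(k):
        best_j, best_r = None, None
        for j in range(D.shape[1]):
            if j in idx:
                continue
            cand = _residual(D, idx + [j], y)
            if best_r is None or np.linalg.norm(cand) < np.linalg.norm(best_r):
                best_j, best_r = j, cand
        idx.append(best_j)
        r = best_r
    return idx, r

def classify(class_dicts, y, k, select):
    """Class-wise rule: run the pursuit per class, return the smallest-residual class."""
    residuals = {c: np.linalg.norm(select(D, y, k)[1]) for c, D in class_dicts.items()}
    return min(residuals, key=residuals.get)

# Toy usage: each class dictionary holds that class's training samples as columns.
class_dicts = {"a": np.eye(6)[:, :3], "b": np.eye(6)[:, 3:]}
y = np.array([2.0, 1.0, 0.0, 0.0, 0.0, 0.0])
label = classify(class_dicts, y, k=2, select=ols_select)
```

Passing `omp_select` instead of `ols_select` gives the OMP-based counterpart, so the two selection rules can be compared on the same class dictionaries.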

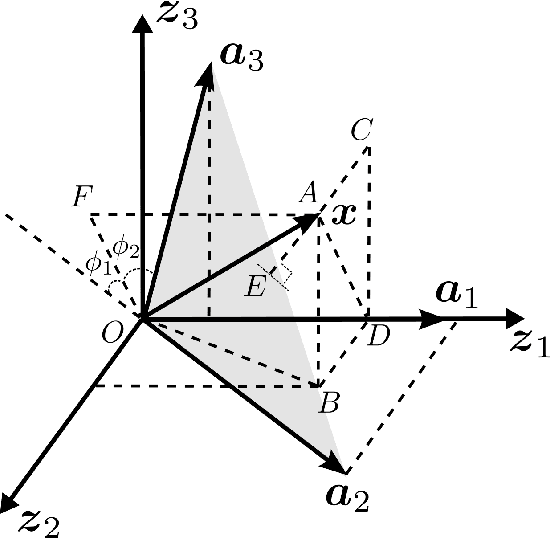

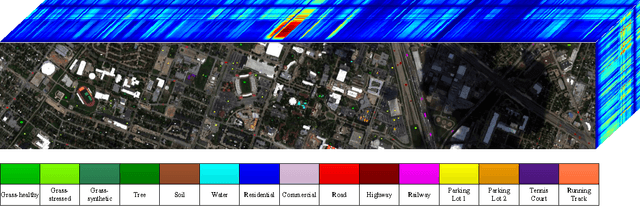

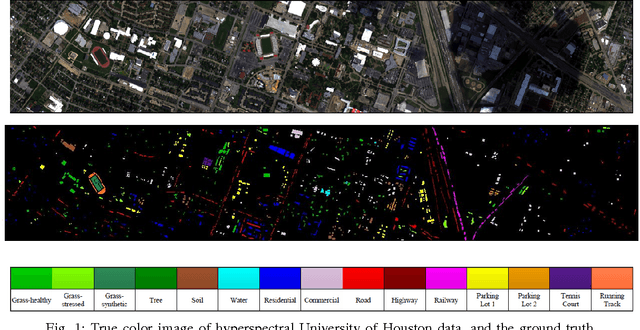

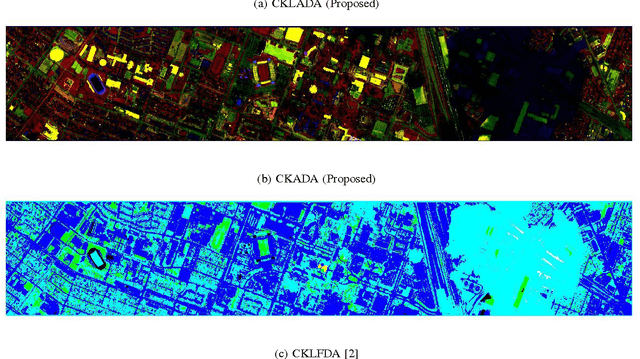

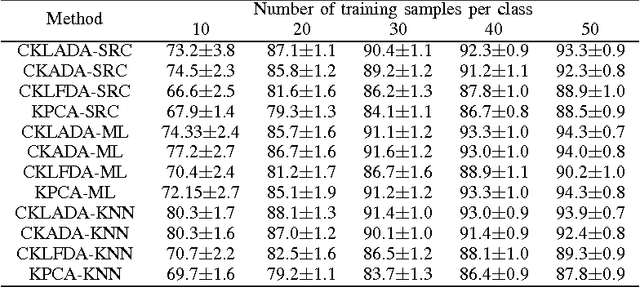

Composite Kernel Local Angular Discriminant Analysis for Multi-Sensor Geospatial Image Analysis

Jul 18, 2016

Abstract:With the emergence of passive and active optical sensors available for geospatial imaging, information fusion across sensors is becoming ever more important. An important aspect of single (or multiple) sensor geospatial image analysis is feature extraction - the process of finding "optimal" lower dimensional subspaces that adequately characterize class-specific information for subsequent analysis tasks, such as classification, change and anomaly detection etc. In recent work, we proposed and developed an angle-based discriminant analysis approach that projected data onto subspaces with maximal "angular" separability in the input (raw) feature space and Reproducing Kernel Hilbert Space (RKHS). We also developed an angular locality preserving variant of this algorithm. In this letter, we advance this work and make it suitable for information fusion - we propose and validate a composite kernel local angular discriminant analysis projection, that can operate on an ensemble of feature sources (e.g. from different sources), and project the data onto a unified space through composite kernels where the data are maximally separated in an angular sense. We validate this method with the multi-sensor University of Houston hyperspectral and LiDAR dataset, and demonstrate that the proposed method significantly outperforms other composite kernel approaches to sensor (information) fusion.
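As a rough illustration of what a composite kernel over several feature sources looks like, the sketch below forms a weighted sum of per-source RBF kernels. The abstract does not specify the composite form, base kernels, or weights used in the paper, so the weighted-summation form, the RBF choice, and every name and parameter value here are assumptions; the angular discriminant projection itself is not shown.

```python
import numpy as np

def rbf_kernel(X, Y, gamma):
    """RBF kernel matrix between the rows of X and the rows of Y."""
    sq = (X ** 2).sum(1)[:, None] + (Y ** 2).sum(1)[None, :] - 2.0 * X @ Y.T
    return np.exp(-gamma * np.maximum(sq, 0.0))   # clip tiny negative distances

def composite_kernel(feats_x, feats_y, gammas, weights):
    """Weighted sum of one RBF kernel per feature source.

    feats_x, feats_y: lists of (n, d_s) and (m, d_s) arrays, one per source.
    With nonnegative weights the result is itself a valid kernel.
    """
    return sum(w * rbf_kernel(a, b, g)
               for a, b, g, w in zip(feats_x, feats_y, gammas, weights))

# Toy usage with two hypothetical sources (e.g. spectral and elevation features).
rng = np.random.default_rng(0)
hsi = rng.normal(size=(5, 10))
lidar = rng.normal(size=(5, 2))
K = composite_kernel([hsi, lidar], [hsi, lidar], gammas=[0.1, 1.0], weights=[0.7, 0.3])
```

The resulting matrix `K` could then be passed to any kernel method in place of a single-source kernel; the weights control how much each source contributes to the unified space.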
