Minshan Cui

Sparse Representation-Based Classification: Orthogonal Least Squares or Orthogonal Matching Pursuit?

Jul 18, 2016

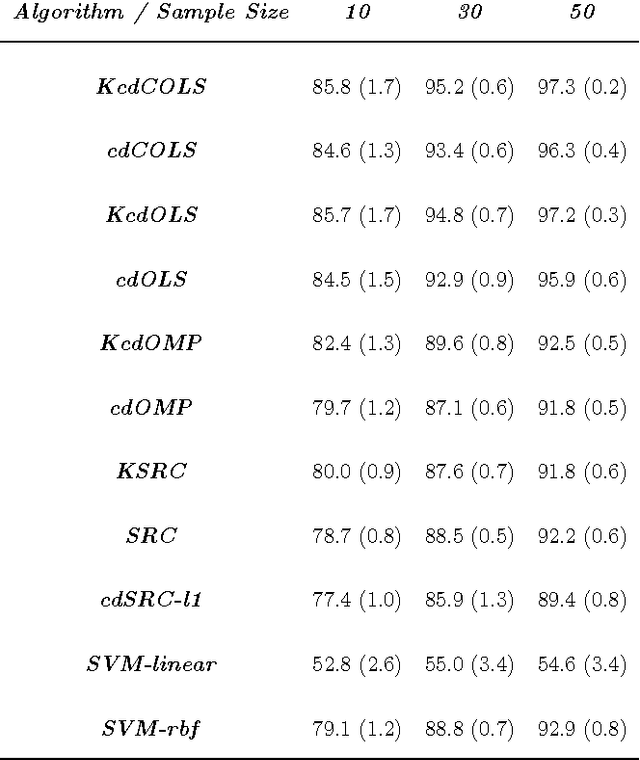

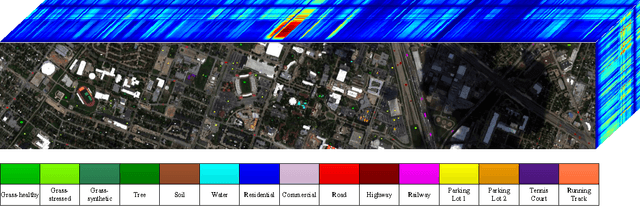

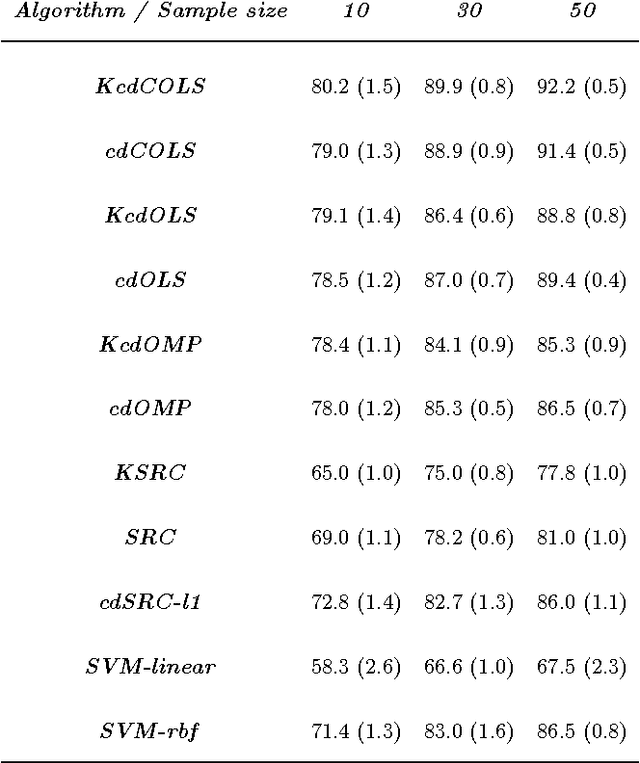

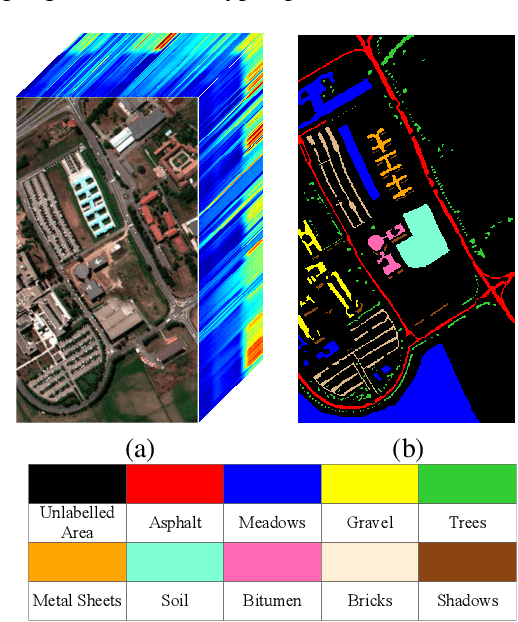

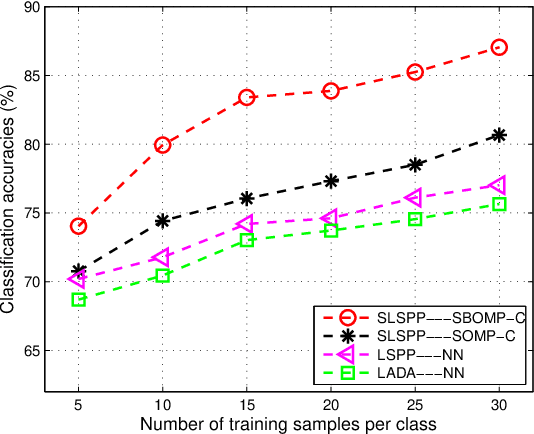

Abstract: Sparse representation of signals has received significant attention in recent years. Based on these developments, sparse representation-based classification (SRC) has been proposed for a variety of classification and related tasks, including face recognition. Recently, a class dependent variant of SRC was proposed to overcome the limitations of SRC for remote sensing image classification. Traditionally, greedy pursuit based methods such as orthogonal matching pursuit (OMP) are used for sparse coefficient recovery due to their simplicity and low time complexity. However, orthogonal least squares (OLS) has not yet been widely used in classifiers that exploit the sparse representation properties of data. Since OLS produces lower signal reconstruction error than OMP under similar conditions, we hypothesize that more accurate signal estimation will further improve the performance of classifiers that exploit the sparsity of data. In this paper, we present a classification method that applies OLS in a classwise manner to perform the classification. We also develop and present its kernelized variant to handle nonlinearly separable data. Using two real-world benchmark hyperspectral datasets, we demonstrate that class dependent OLS based methods outperform several baseline methods, including traditional SRC and the support vector machine classifier.
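The classwise idea described in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the function names `ols_residual` and `classify_classwise_ols`, the sparsity level `k`, and the naive refit-per-candidate selection loop are all assumptions made for illustration. At each step, the greedy OLS routine adds the atom whose inclusion yields the smallest least-squares residual (OMP would instead pick the atom most correlated with the current residual), and the test sample is assigned to the class whose sub-dictionary reconstructs it best.

```python
import numpy as np

def ols_residual(D, y, k):
    """Greedy OLS over the columns of D (hypothetical helper).

    At each step, add the atom whose inclusion gives the smallest
    least-squares residual, and return the final residual norm.
    """
    selected = []
    best_res = float(np.linalg.norm(y))
    for _ in range(min(k, D.shape[1])):
        best_j, best_res = None, np.inf
        for j in range(D.shape[1]):
            if j in selected:
                continue
            A = D[:, selected + [j]]
            coef, *_ = np.linalg.lstsq(A, y, rcond=None)
            res = float(np.linalg.norm(y - A @ coef))
            if res < best_res:
                best_j, best_res = j, res
        selected.append(best_j)
    return best_res

def classify_classwise_ols(X_train, labels, y, k=2):
    """Assign y to the class whose training samples reconstruct it best."""
    residuals = {}
    for c in np.unique(labels):
        D = X_train[labels == c].T  # columns are the class-c training samples
        residuals[c] = ols_residual(D, y, k)
    return min(residuals, key=residuals.get)

# Toy data (assumed): class 0 lives in the first two dimensions, class 1 in the last two.
X_train = np.array([[1, 0, 0, 0], [0, 1, 0, 0], [1, 1, 0, 0],
                    [0, 0, 1, 0], [0, 0, 0, 1], [0, 0, 1, 1]], dtype=float)
labels = np.array([0, 0, 0, 1, 1, 1])
y = np.array([0.0, 0.0, 1.0, 2.0])
predicted = classify_classwise_ols(X_train, labels, y)
```

Refitting the least-squares problem for every candidate atom keeps the sketch short but is costly; practical OLS implementations typically update an orthogonalized dictionary incrementally instead.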

Composite Kernel Local Angular Discriminant Analysis for Multi-Sensor Geospatial Image Analysis

Jul 18, 2016

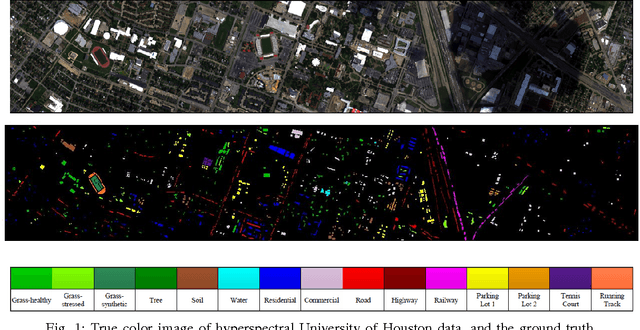

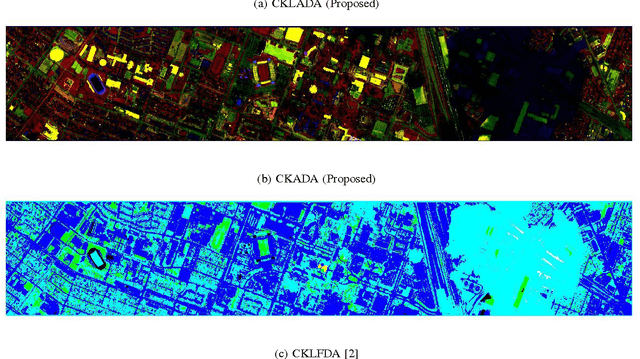

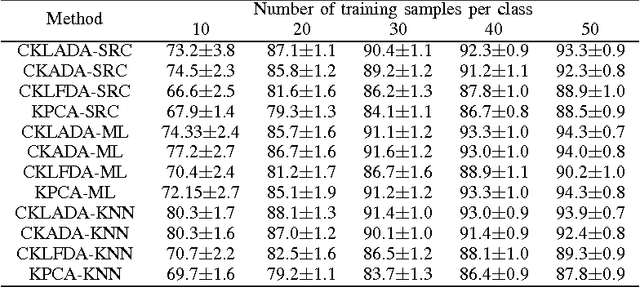

Abstract: With the emergence of passive and active optical sensors available for geospatial imaging, information fusion across sensors is becoming ever more important. An important aspect of single (or multiple) sensor geospatial image analysis is feature extraction - the process of finding "optimal" lower dimensional subspaces that adequately characterize class-specific information for subsequent analysis tasks, such as classification, change detection, and anomaly detection. In recent work, we proposed and developed an angle-based discriminant analysis approach that projected data onto subspaces with maximal "angular" separability in the input (raw) feature space and Reproducing Kernel Hilbert Space (RKHS). We also developed an angular locality preserving variant of this algorithm. In this letter, we advance this work and make it suitable for information fusion - we propose and validate a composite kernel local angular discriminant analysis projection that can operate on an ensemble of feature sources (e.g. from different sources) and project the data onto a unified space through composite kernels where the data are maximally separated in an angular sense. We validate this method with the multi-sensor University of Houston hyperspectral and LiDAR dataset, and demonstrate that the proposed method significantly outperforms other composite kernel approaches to sensor (information) fusion.
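One common way to build a composite kernel over several feature sources is a weighted sum of per-source kernels. The sketch below assumes that construction; the abstract does not state which form the authors use, and the function names, the RBF base kernel, the `gammas`, and the `weights` are all hypothetical choices for illustration.

```python
import numpy as np

def rbf_kernel(A, B, gamma):
    """RBF kernel matrix between the rows of A and the rows of B."""
    sq = (np.sum(A ** 2, axis=1)[:, None]
          + np.sum(B ** 2, axis=1)[None, :]
          - 2.0 * A @ B.T)
    # Clamp tiny negative values caused by floating-point round-off.
    return np.exp(-gamma * np.maximum(sq, 0.0))

def composite_kernel(sources_a, sources_b, gammas, weights):
    """Weighted sum of per-source RBF kernels (assumed composite form)."""
    return sum(w * rbf_kernel(Xa, Xb, g)
               for Xa, Xb, g, w in zip(sources_a, sources_b, gammas, weights))

# Toy example (assumed shapes): 5 pixels with 10 spectral bands and 2 LiDAR features.
rng = np.random.default_rng(0)
hsi = rng.normal(size=(5, 10))
lidar = rng.normal(size=(5, 2))
K = composite_kernel([hsi, lidar], [hsi, lidar],
                     gammas=[0.1, 1.0], weights=[0.6, 0.4])
```

Because a nonnegative weighted sum of valid kernels is itself a valid kernel, the resulting matrix `K` can be passed to any kernel method in place of a single-source kernel.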

Person Re-identification with Hyperspectral Multi-Camera Systems --- A Pilot Study

Jul 15, 2016

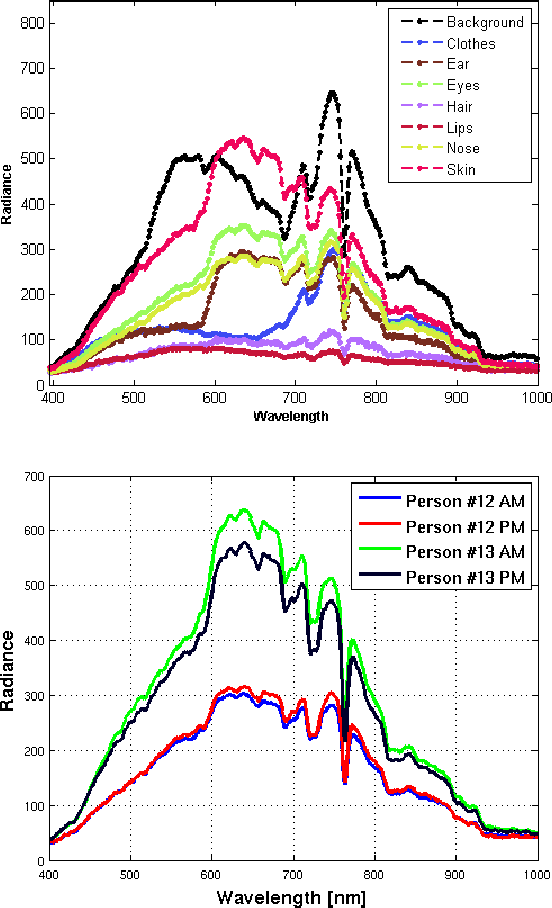

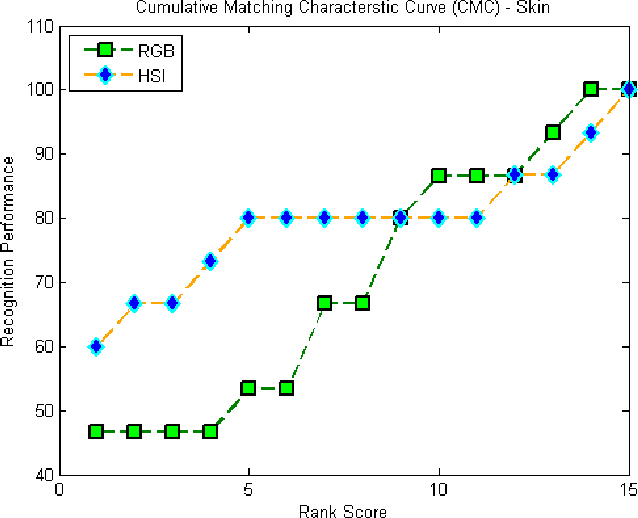

Abstract: Person re-identification in a multi-camera environment is an important part of modern surveillance systems. Person re-identification from color images has been the focus of much active research, due to the numerous challenges posed by such analysis tasks, such as variations in illumination, pose and viewpoint. In this paper, we suggest that hyperspectral imagery has the potential to provide unique information that is expected to be beneficial for the re-identification task. Specifically, we assert that by accurately characterizing the unique spectral signature of each person's skin, hyperspectral imagery can provide very useful descriptors (e.g. spectral signatures from skin pixels) for re-identification. Towards this end, we acquired proof-of-concept hyperspectral re-identification data under challenging (practical) conditions from 15 people. Our results indicate that hyperspectral data yield substantially better re-identification performance than color (RGB) images when using spectral signatures over skin as the feature descriptor.

Spatial Context based Angular Information Preserving Projection for Hyperspectral Image Classification

Jul 15, 2016

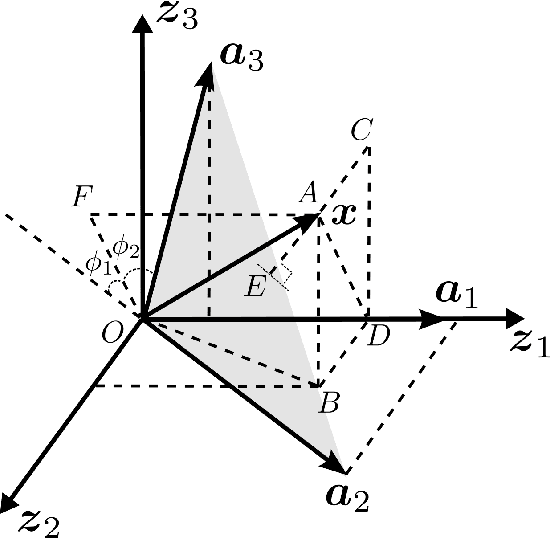

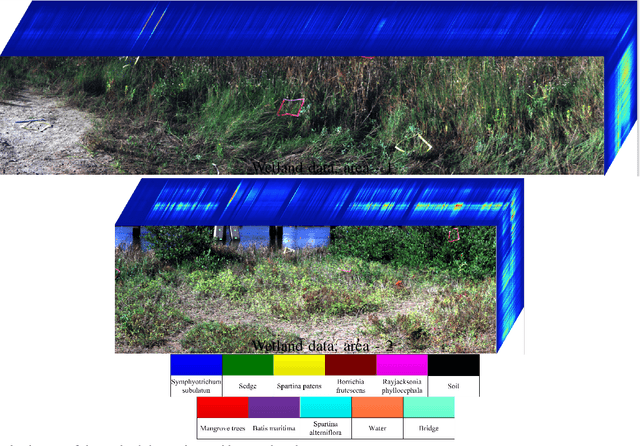

Abstract: Dimensionality reduction is a crucial preprocessing step for hyperspectral data analysis - finding an appropriate subspace is often required for subsequent image classification. In recent work, we proposed supervised angular information based dimensionality reduction methods to find effective subspaces. Since unlabeled data are often more readily available than labeled data, we propose an unsupervised projection that finds a lower dimensional subspace where local angular information is preserved. To exploit spatial information from the hyperspectral images, we further extend our unsupervised projection to incorporate spatial contextual information around each pixel in the image. We also propose a sparse representation based classifier that is optimized to exploit spatial information during classification - we hence assert that our proposed projection is particularly suitable for classifiers where local similarity and spatial context are both important. Experimental results with two real-world hyperspectral datasets demonstrate that our proposed methods provide robust classification performance.
