Akash Awasthi

DualProtoSeg: Simple and Efficient Design with Text- and Image-Guided Prototype Learning for Weakly Supervised Histopathology Image Segmentation

Dec 11, 2025

Abstract: Weakly supervised semantic segmentation (WSSS) in histopathology seeks to reduce annotation cost by learning from image-level labels, yet it remains limited by inter-class homogeneity, intra-class heterogeneity, and the region-shrinkage effect of CAM-based supervision. We propose a simple and effective prototype-driven framework that leverages vision-language alignment to improve region discovery under weak supervision. Our method integrates CoOp-style learnable prompt tuning to generate text-based prototypes and combines them with learnable image prototypes, forming a dual-modal prototype bank that captures both semantic and appearance cues. To address oversmoothing in ViT representations, we incorporate a multi-scale pyramid module that enhances spatial precision and improves localization quality. Experiments on the BCSS-WSSS benchmark show that our approach surpasses existing state-of-the-art methods, and detailed analyses demonstrate the benefits of text description diversity, context length, and the complementary behavior of text and image prototypes. These results highlight the effectiveness of jointly leveraging textual semantics and visual prototype learning for WSSS in digital pathology.
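The idea of a dual-modal prototype bank can be illustrated with a minimal sketch. The abstract does not specify how prototypes are scored against image features, so everything below is an assumption made for illustration: the function names, the tensor shapes, the use of cosine similarity, and taking the maximum over each class's prototypes are hypothetical choices, not the paper's method.

```python
import numpy as np

def l2_normalize(x, axis=-1, eps=1e-8):
    """Scale vectors to (approximately) unit length along `axis`."""
    return x / (np.linalg.norm(x, axis=axis, keepdims=True) + eps)

def class_score_maps(patch_feats, text_protos, image_protos):
    """Score each patch against a combined text + image prototype bank.

    patch_feats:  (H, W, D) patch embeddings (assumed shape)
    text_protos:  (C, Kt, D) text-derived prototypes per class (assumed)
    image_protos: (C, Ki, D) learnable image prototypes per class (assumed)
    Returns (H, W, C): best cosine similarity per class for each patch.
    """
    bank = np.concatenate([text_protos, image_protos], axis=1)  # (C, Kt+Ki, D)
    f = l2_normalize(patch_feats)
    p = l2_normalize(bank)
    sim = np.einsum("hwd,ckd->hwck", f, p)  # cosine similarity to every prototype
    return sim.max(axis=-1)                 # keep the closest prototype per class

# Toy usage with random features: 3 classes, 2 text and 2 image prototypes each.
rng = np.random.default_rng(0)
text_protos = rng.normal(size=(3, 2, 8))
image_protos = rng.normal(size=(3, 2, 8))
patch_feats = rng.normal(size=(4, 4, 8))
patch_feats[0, 0] = text_protos[1, 0]       # make one patch match class 1 exactly
scores = class_score_maps(patch_feats, text_protos, image_protos)
pseudo_mask = scores.argmax(axis=-1)        # (H, W) class index per patch
```

In this toy setup, the patch that was copied from a class-1 text prototype is assigned to class 1, which is the behavior one would expect from any similarity-based prototype scoring.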

ConStruct: Structural Distillation of Foundation Models for Prototype-Based Weakly Supervised Histopathology Segmentation

Dec 11, 2025

Abstract: Weakly supervised semantic segmentation (WSSS) in histopathology relies heavily on classification backbones, yet these models often localize only the most discriminative regions and struggle to capture the full spatial extent of tissue structures. Vision-language models such as CONCH offer rich semantic alignment and morphology-aware representations, while modern segmentation backbones like SegFormer preserve fine-grained spatial cues. However, combining these complementary strengths remains challenging, especially under weak supervision and without dense annotations. We propose a prototype learning framework for WSSS in histopathological images that integrates morphology-aware representations from CONCH, multi-scale structural cues from SegFormer, and text-guided semantic alignment to produce prototypes that are simultaneously semantically discriminative and spatially coherent. To effectively leverage these heterogeneous sources, we introduce text-guided prototype initialization that incorporates pathology descriptions to generate more complete and semantically accurate pseudo-masks. A structural distillation mechanism transfers spatial knowledge from SegFormer to preserve fine-grained morphological patterns and local tissue boundaries during prototype learning. Our approach produces high-quality pseudo masks without pixel-level annotations, improves localization completeness, and enhances semantic consistency across tissue types. Experiments on BCSS-WSSS datasets demonstrate that our prototype learning framework outperforms existing WSSS methods while remaining computationally efficient through frozen foundation model backbones and lightweight trainable adapters.

Contrastive Integrated Gradients: A Feature Attribution-Based Method for Explaining Whole Slide Image Classification

Nov 14, 2025

Abstract: Interpretability is essential in Whole Slide Image (WSI) analysis for computational pathology, where understanding model predictions helps build trust in AI-assisted diagnostics. While Integrated Gradients (IG) and related attribution methods have shown promise, applying them directly to WSIs introduces challenges due to their high-resolution nature. These methods capture model decision patterns but may overlook class-discriminative signals that are crucial for distinguishing between tumor subtypes. In this work, we introduce Contrastive Integrated Gradients (CIG), a novel attribution method that enhances interpretability by computing contrastive gradients in logit space. First, CIG highlights class-discriminative regions by comparing feature importance relative to a reference class, offering sharper differentiation between tumor and non-tumor areas. Second, CIG satisfies the axioms of integrated attribution, ensuring consistency and theoretical soundness. Third, we propose two attribution quality metrics, MIL-AIC and MIL-SIC, which measure how predictive information and model confidence evolve with access to salient regions, particularly under weak supervision. We validate CIG across three datasets spanning distinct cancer types: CAMELYON16 (breast cancer metastasis in lymph nodes), TCGA-RCC (renal cell carcinoma), and TCGA-Lung (lung cancer). Experimental results demonstrate that CIG yields more informative attributions both quantitatively, using MIL-AIC and MIL-SIC, and qualitatively, through visualizations that align closely with ground truth tumor regions, underscoring its potential for interpretable and trustworthy WSI-based diagnostics.
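The abstract does not state the CIG formula. One plausible reading, assumed here purely for illustration, is standard Integrated Gradients applied to the difference between a target-class logit and a reference-class logit. The sketch below uses a tiny hypothetical two-layer tanh network with an analytic gradient; the model, the zero baseline, and all function names are assumptions and not the paper's implementation.

```python
import numpy as np

def logits(x, W1, W2):
    """Toy two-layer network: logits = W2 @ tanh(W1 @ x)."""
    return W2 @ np.tanh(W1 @ x)

def grad_logit_diff(x, W1, W2, target, ref):
    """Gradient of (logit[target] - logit[ref]) with respect to x."""
    h = np.tanh(W1 @ x)
    d = W2[target] - W2[ref]            # derivative of the difference w.r.t. h
    return W1.T @ (d * (1.0 - h ** 2))  # chain rule through tanh

def contrastive_ig(x, baseline, W1, W2, target, ref, steps=200):
    """Integrated Gradients on the logit difference (midpoint Riemann sum)."""
    alphas = (np.arange(steps) + 0.5) / steps
    total = np.zeros_like(x)
    for a in alphas:
        point = baseline + a * (x - baseline)
        total += grad_logit_diff(point, W1, W2, target, ref)
    return (x - baseline) * total / steps

# Toy usage with random weights and an all-zero baseline (both assumed).
rng = np.random.default_rng(0)
W1 = rng.normal(size=(4, 3))
W2 = rng.normal(size=(2, 4))
x = rng.normal(size=3)
baseline = np.zeros(3)
attr = contrastive_ig(x, baseline, W1, W2, target=1, ref=0)
```

Under this reading, the completeness axiom of Integrated Gradients carries over: the attributions sum (up to numerical integration error) to the change in the logit difference between the baseline and the input.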

Beyond the First Read: AI-Assisted Perceptual Error Detection in Chest Radiography Accounting for Interobserver Variability

Jun 16, 2025

Abstract: Chest radiography is widely used in diagnostic imaging. However, perceptual errors -- especially overlooked but visible abnormalities -- remain common and clinically significant. Current workflows and AI systems provide limited support for detecting such errors after interpretation and often lack meaningful human--AI collaboration. We introduce RADAR (Radiologist--AI Diagnostic Assistance and Review), a post-interpretation companion system. RADAR ingests finalized radiologist annotations and CXR images, then performs region-level analysis to detect and refer potentially missed abnormal regions. The system supports a "second-look" workflow and offers suggested regions of interest (ROIs) rather than fixed labels to accommodate inter-observer variation. We evaluated RADAR on a simulated perceptual-error dataset derived from de-identified CXR cases, using F1 score and Intersection over Union (IoU) as primary metrics. RADAR achieved a recall of 0.78, precision of 0.44, and an F1 score of 0.56 in detecting missed abnormalities in this dataset. Although precision is moderate, this reduces over-reliance on AI by encouraging radiologist oversight in human--AI collaboration. The median IoU was 0.78, with more than 90% of referrals exceeding 0.5 IoU, indicating accurate regional localization. RADAR effectively complements radiologist judgment, providing valuable post-read support for perceptual-error detection in CXR interpretation. Its flexible ROI suggestions and non-intrusive integration position it as a promising tool in real-world radiology workflows. To facilitate reproducibility and further evaluation, we release a fully open-source web implementation alongside a simulated error dataset. All code, data, demonstration videos, and the application are publicly available at https://github.com/avutukuri01/RADAR.
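The reported F1 score follows from the stated precision and recall, since F1 is their harmonic mean. The short sketch below checks that arithmetic and shows how IoU is computed for two axis-aligned boxes. Representing an ROI as an `(x1, y1, x2, y2)` box is an assumption for illustration, and the function names are hypothetical; the abstract does not describe the ROI format.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

def box_iou(a, b) -> float:
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

# Precision 0.44 and recall 0.78 from the abstract give F1 of about 0.56.
print(round(f1_score(0.44, 0.78), 2))  # 0.56

# Two 2x2 boxes overlapping in a 1x1 square: IoU = 1 / (4 + 4 - 1) = 1/7.
iou_example = box_iou((0, 0, 2, 2), (1, 1, 3, 3))
```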

GazeSearch: Radiology Findings Search Benchmark

Nov 08, 2024

Abstract: Medical eye-tracking data is an important information source for understanding how radiologists visually interpret medical images. This information improves not only the accuracy of deep learning models for X-ray analysis but also their interpretability, enhancing transparency in decision-making. However, current eye-tracking data is dispersed, unprocessed, and ambiguous, making it difficult to derive meaningful insights. Therefore, there is a need for a new dataset with more focused and purposeful eye-tracking data, improving its utility for diagnostic applications. In this work, we propose a refinement method inspired by the target-present visual search challenge: there is a specific finding, and fixations are guided to locate it. After refining the existing eye-tracking datasets, we transform them into a curated visual search dataset, called GazeSearch, specifically for radiology findings, where each fixation sequence is purposefully aligned to the task of locating a particular finding. Subsequently, we introduce a scan path prediction baseline, called ChestSearch, specifically tailored to GazeSearch. Finally, we employ the newly introduced GazeSearch as a benchmark to evaluate the performance of current state-of-the-art methods, offering a comprehensive assessment for visual search in the medical imaging domain.

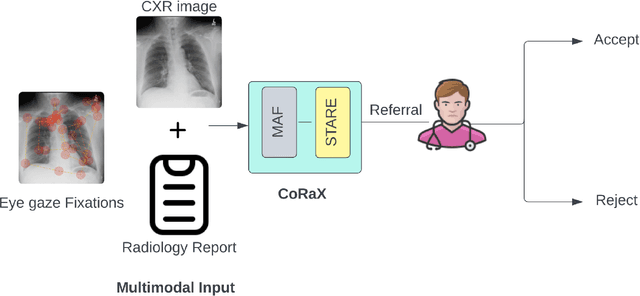

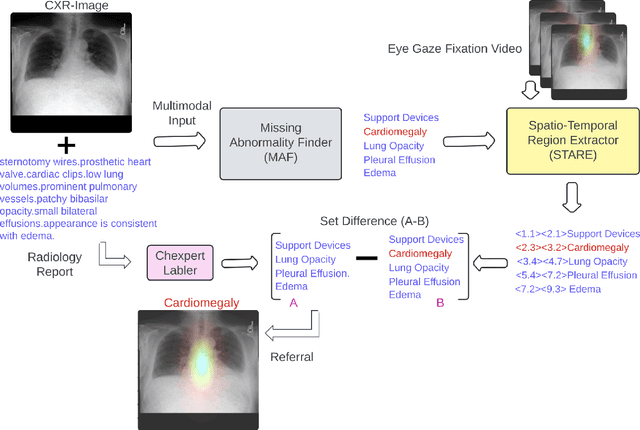

Enhancing Radiological Diagnosis: A Collaborative Approach Integrating AI and Human Expertise for Visual Miss Correction

Jun 28, 2024

Abstract: Human-AI collaboration to identify and correct perceptual errors in chest radiographs has not been previously explored. This study aimed to develop a collaborative AI system, CoRaX, which integrates eye gaze data and radiology reports to enhance diagnostic accuracy in chest radiology by pinpointing perceptual errors and refining the decision-making process. Using public datasets REFLACX and EGD-CXR, the study retrospectively developed CoRaX, employing a large multimodal model to analyze image embeddings, eye gaze data, and radiology reports. The system's effectiveness was evaluated based on its referral-making process, the quality of referrals, and performance in collaborative diagnostic settings. CoRaX was tested on a simulated error dataset of 271 samples with 28% (93 of 332) missed abnormalities. The system corrected 21% (71 of 332) of these errors, leaving 7% (22 of 332) unresolved. The Referral-Usefulness score, indicating the accuracy of predicted regions for all true referrals, was 0.63 (95% CI 0.59, 0.68). The Total-Usefulness score, reflecting the diagnostic accuracy of CoRaX's interactions with radiologists, showed that 84% (237 of 280) of these interactions had a score above 0.40. In conclusion, CoRaX efficiently collaborates with radiologists to address perceptual errors across various abnormalities, with potential applications in the education and training of novice radiologists.

Multimodal Learning and Cognitive Processes in Radiology: MedGaze for Chest X-ray Scanpath Prediction

Jun 28, 2024

Abstract: Predicting human gaze behavior is integral to developing interactive systems that can anticipate user attention and to addressing fundamental questions in cognitive science, and it has implications for fields such as human-computer interaction (HCI) and augmented/virtual reality (AR/VR) systems. Although methods have been introduced for modeling human eye gaze behavior, applying these models to medical imaging for scanpath prediction remains unexplored. Our proposed system aims to predict eye gaze sequences from radiology reports and CXR images, potentially streamlining data collection and enhancing AI systems using larger datasets. However, predicting human scanpaths on medical images presents unique challenges due to the diverse nature of abnormal regions. Our model predicts fixation coordinates and durations critical for medical scanpath prediction, outperforming existing models in the computer vision community. Utilizing a two-stage training process and large publicly available datasets, our approach generates static heatmaps and eye gaze videos aligned with radiology reports, facilitating comprehensive analysis. We validate our approach by comparing its performance with state-of-the-art methods and assessing its generalizability among different radiologists, introducing novel strategies to model radiologists' search patterns during CXR image diagnosis. Based on the radiologist's evaluation, MedGaze can generate human-like gaze sequences with a high focus on relevant regions over the CXR images. It sometimes also outperforms humans in terms of redundancy and randomness in the scanpaths.

Decoding Radiologists' Intentions: A Novel System for Accurate Region Identification in Chest X-ray Image Analysis

Apr 29, 2024

Abstract: In the realm of chest X-ray (CXR) image analysis, radiologists meticulously examine various regions, documenting their observations in reports. The prevalence of errors in CXR diagnoses, particularly among inexperienced radiologists and hospital residents, underscores the importance of understanding radiologists' intentions and the corresponding regions of interest. This understanding is crucial for correcting mistakes by guiding radiologists to the accurate regions of interest, especially in the diagnosis of chest radiograph abnormalities. In response to this imperative, we propose a novel system designed to identify the primary intentions articulated by radiologists in their reports and the corresponding regions of interest in CXR images. This system seeks to elucidate the visual context underlying radiologists' textual findings, with the potential to rectify errors made by less experienced practitioners and direct them to precise regions of interest. Importantly, the proposed system can be instrumental in providing constructive feedback to inexperienced radiologists or junior residents in the hospital, bridging the gap in face-to-face communication. The system represents a valuable tool for enhancing diagnostic accuracy and fostering continuous learning within the medical community.

Apoptosis classification using attention based spatio temporal graph convolution neural network

Nov 16, 2023

Abstract: Accurate classification of apoptosis plays an important role in cell biology research. Many state-of-the-art approaches use deep CNNs to perform apoptosis classification, but these approaches do not account for cell interaction. Our paper proposes an attention-based spatio-temporal graph convolutional network to classify cell death based on the target cells in a video. This method considers the interaction of multiple target cells at each timestamp. We model the whole video sequence as a set of graphs and classify the target cell in the video as dead or alive. Our method accounts for both spatial and temporal relationships.

Anomaly Detection in Satellite Videos using Diffusion Models

May 25, 2023

Abstract: Anomaly detection is the identification of unexpected events. Real-time detection of extreme events such as wildfires, cyclones, or floods using satellite data has become crucial for disaster management. Although several earth-observing satellites provide information about disasters, satellites in geostationary orbit provide data at intervals as frequent as every minute, effectively creating a video from space. Many techniques have been proposed to identify anomalies in surveillance videos; however, the available datasets do not exhibit dynamic behavior, so we discuss an anomaly detection framework that can work on very high-frequency datasets to find very fast-moving anomalies. In this work, we present a diffusion model that does not need any motion component to capture fast-moving anomalies and that outperforms the other baseline methods.
