Stefano Tubaro

Enhanced Water Leak Detection with Convolutional Neural Networks and One-Class Support Vector Machine

Nov 10, 2025

Abstract: Water is a critical resource that must be managed efficiently. However, a substantial amount of water is lost each year due to leaks in Water Distribution Networks (WDNs). This underscores the need for reliable and effective leak detection and localization systems. In recent years, various solutions have been proposed, with data-driven approaches gaining increasing attention due to their superior performance. In this paper, we propose a new method for leak detection. The method is based on water pressure measurements acquired at a series of nodes of a WDN. Our technique is a fully data-driven solution that uses only knowledge of the WDN topology and a series of pressure data acquisitions obtained in the absence of leaks. The proposed solution is based on a feature extractor and a one-class Support Vector Machine (SVM) trained on no-leak data, so that leaks are detected as anomalies. The results achieved on a simulated dataset using the Modena WDN demonstrate that the proposed solution outperforms recent methods for leak detection.
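The idea of training only on no-leak data and flagging leaks as anomalies can be illustrated with a minimal sketch. This is not the paper's method: the feature extractor and the one-class SVM are replaced here by a much simpler stand-in, a per-node z-scored distance from the no-leak mean. The pressure values, the function names, and the choice of threshold (the largest score seen in training) are all assumptions made for illustration.

```python
import math
import statistics

def fit_no_leak_model(samples):
    """Fit a one-class model on no-leak pressure vectors (one value per node).

    Stand-in for the feature extractor + one-class SVM: the anomaly score
    is the z-scored Euclidean distance from the no-leak mean.
    """
    columns = list(zip(*samples))
    means = [statistics.fmean(c) for c in columns]
    # Guard against zero spread at a node (assumption: fall back to 1.0).
    stds = [statistics.pstdev(c) or 1.0 for c in columns]

    def score(x):
        return math.sqrt(sum(((v - m) / s) ** 2 for v, m, s in zip(x, means, stds)))

    # Assumed threshold: the largest score observed on no-leak training data.
    threshold = max(score(s) for s in samples)
    return score, threshold

def is_leak(sample, score, threshold):
    """Flag a pressure vector as anomalous (possible leak)."""
    return score(sample) > threshold

# Hypothetical pressure readings at three nodes, acquired without leaks.
no_leak = [
    [50.0, 48.0, 47.0],
    [50.2, 48.1, 46.9],
    [49.9, 47.9, 47.1],
    [50.1, 48.0, 47.0],
]
score, threshold = fit_no_leak_model(no_leak)

normal_flag = is_leak([50.0, 48.05, 47.0], score, threshold)  # close to training data
leak_flag = is_leak([49.0, 45.5, 44.0], score, threshold)     # marked pressure drop
```

A one-class SVM learns a more flexible boundary around the no-leak data than this distance rule, but the training setup is the same: no leak examples are needed to fit the model.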

Source Verification for Speech Deepfakes

May 20, 2025

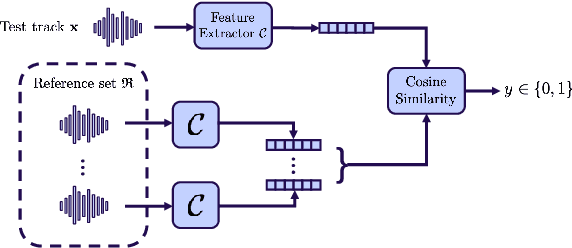

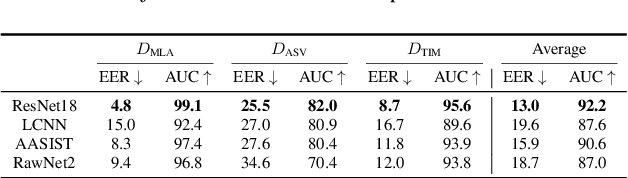

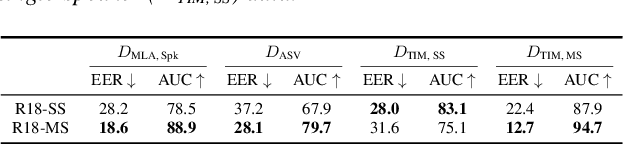

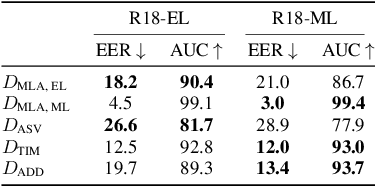

Abstract: With the proliferation of speech deepfake generators, it becomes crucial not only to assess the authenticity of synthetic audio but also to trace its origin. While source attribution models attempt to address this challenge, they often struggle in open-set conditions against unseen generators. In this paper, we introduce the source verification task, which, inspired by speaker verification, determines whether a test track was produced using the same model as a set of reference signals. Our approach leverages embeddings from a classifier trained for source attribution, computing distance scores between tracks to assess whether they originate from the same source. We evaluate multiple models across diverse scenarios, analyzing the impact of speaker diversity, language mismatch, and post-processing operations. This work provides the first exploration of source verification, highlighting its potential and vulnerabilities, and offers insights for real-world forensic applications.
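The verification step described above, comparing a test track's embedding against a set of reference embeddings, might be sketched as follows. The abstract does not specify the distance measure, the aggregation over references, or the decision threshold, so cosine similarity, mean aggregation, and the value 0.7 are all assumptions here. The three-dimensional embeddings are hypothetical placeholders for the output of a source attribution classifier.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def verify_source(test_emb, reference_embs, threshold=0.7):
    """Return (score, decision) for 'same generator as the references?'.

    The score is the mean similarity to the reference embeddings; the
    threshold is an assumed value that would normally be tuned on held-out data.
    """
    scores = [cosine_similarity(test_emb, ref) for ref in reference_embs]
    score = sum(scores) / len(scores)
    return score, score >= threshold

# Hypothetical embeddings: two references from one generator,
# one test track from the same generator and one from another.
references = [[0.9, 0.1, 0.0], [0.8, 0.2, 0.1]]
same_source = [0.85, 0.15, 0.05]
other_source = [0.0, 0.2, 0.9]

score_same, accept_same = verify_source(same_source, references)
score_other, accept_other = verify_source(other_source, references)
```

Because the decision depends only on distances between embeddings, the same procedure can be applied to generators that were never seen when the classifier was trained, which is the open-set setting the abstract targets.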

Leveraging Land Cover Priors for Isoprene Emission Super-Resolution

Mar 24, 2025

Abstract: Remote sensing plays a crucial role in monitoring Earth's ecosystems, yet satellite-derived data often suffer from limited spatial resolution, restricting their applicability in atmospheric modeling and climate research. In this work, we propose a deep learning-based Super-Resolution (SR) framework that leverages land cover information to enhance the spatial accuracy of Biogenic Volatile Organic Compounds (BVOCs) emissions, with a particular focus on isoprene. Our approach integrates land cover priors as emission drivers, capturing spatial patterns more effectively than traditional methods. We evaluate the model's performance across various climate conditions and analyze statistical correlations between isoprene emissions and key environmental information such as cropland and tree cover data. Additionally, we assess the generalization capabilities of our SR model by applying it to unseen climate zones and geographical regions. Experimental results demonstrate that incorporating land cover data significantly improves emission SR accuracy, particularly in heterogeneous landscapes. This study contributes to atmospheric chemistry and climate modeling by providing a cost-effective, data-driven approach to refining BVOC emission maps. The proposed method enhances the usability of satellite-based emissions data, supporting applications in air quality forecasting, climate impact assessments, and environmental studies.

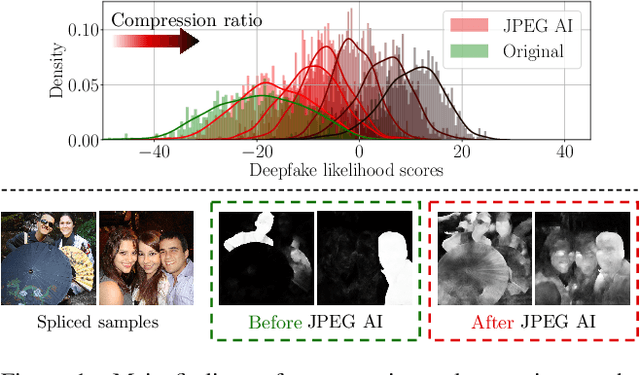

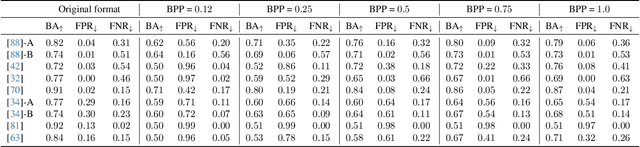

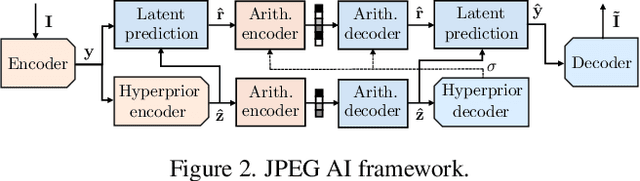

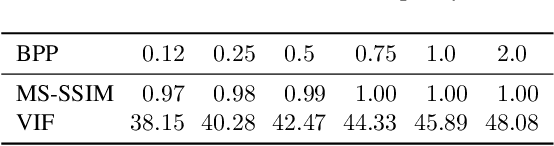

Is JPEG AI going to change image forensics?

Dec 04, 2024

Abstract: In this paper, we investigate the counter-forensic effects of the forthcoming JPEG AI standard based on neural image compression, focusing on two critical areas: deepfake image detection and image splicing localization. Neural image compression leverages advanced neural network algorithms to achieve higher compression rates while maintaining image quality. However, it introduces artifacts that closely resemble those generated by image synthesis techniques and image splicing pipelines, complicating the work of researchers when discriminating pristine from manipulated content. We comprehensively analyze JPEG AI's counter-forensic effects through extensive experiments on several state-of-the-art detectors and datasets. Our results demonstrate that an increase in false alarms impairs the performance of leading forensic detectors when analyzing genuine content processed through JPEG AI. By exposing the vulnerabilities of the available forensic tools, we aim to highlight the urgent need for multimedia forensics researchers to include JPEG AI images in their experimental setups and develop robust forensic techniques to distinguish between neural compression artifacts and actual manipulations.

POLIPHONE: A Dataset for Smartphone Model Identification from Audio Recordings

Oct 08, 2024

Abstract: When dealing with multimedia data, source attribution is a key challenge from a forensic perspective. This task aims to determine how a given content was captured, providing valuable insights for various applications, including legal proceedings and integrity investigations. The source attribution problem has been addressed in different domains, from identifying the camera model used to capture specific photographs to detecting the synthetic speech generator or microphone model used to create or record given audio tracks. Recent advancements in this area rely heavily on machine learning and data-driven techniques, which often outperform traditional signal processing-based methods. However, a drawback of these systems is their need for large volumes of training data, which must reflect the latest technological trends to produce accurate and reliable predictions. This presents a significant challenge, as the rapid pace of technological progress makes it difficult to maintain datasets that are up-to-date with real-world conditions. For instance, in the task of smartphone model identification from audio recordings, the available datasets are often outdated or acquired inconsistently, making it difficult to develop solutions that are valid beyond a research environment. In this paper we present POLIPHONE, a dataset for smartphone model identification from audio recordings. It includes data from 20 recent smartphones recorded in a controlled environment to ensure reproducibility and scalability for future research. The released tracks contain audio data from various domains (i.e., speech, music, environmental sounds), making the corpus versatile and applicable to a wide range of use cases. We also present numerous experiments to benchmark the proposed dataset using a state-of-the-art classifier for smartphone model identification from audio recordings.

Freeze and Learn: Continual Learning with Selective Freezing for Speech Deepfake Detection

Sep 26, 2024

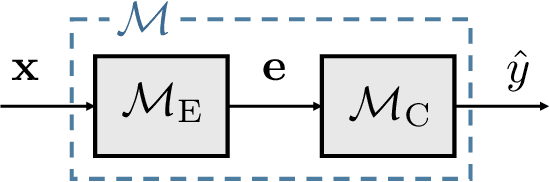

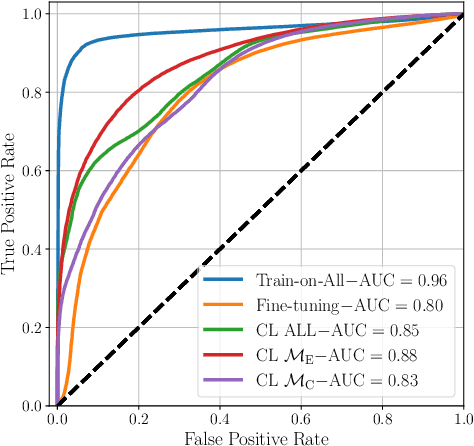

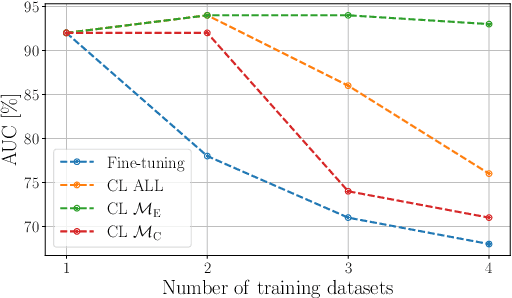

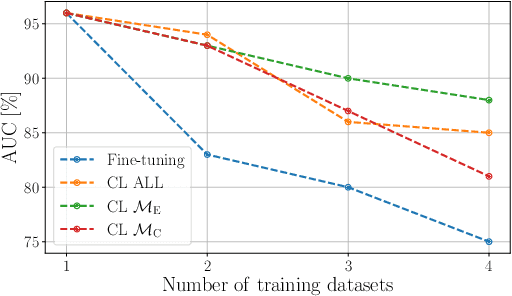

Abstract: In speech deepfake detection, one of the critical aspects is developing detectors able to generalize to unseen data and distinguish fake signals across different datasets. Common approaches to this challenge involve incorporating diverse data into the training process or fine-tuning models on unseen datasets. However, these solutions can be computationally demanding and may lead to the loss of knowledge acquired from previously learned data. Continual learning techniques offer a potential solution to this problem, allowing the models to learn from unseen data without losing what they have already learned. Still, the optimal way to apply these algorithms to speech deepfake detectors remains unclear. In this paper, we address this aspect and investigate whether, when retraining a speech deepfake detector, it is more effective to apply continual learning across the entire model or to update only some of its layers while freezing others. Our findings, validated across multiple models, indicate that the most effective approach among the analyzed ones is to update only the weights of the initial layers, which are responsible for processing the input features of the detector.

Leveraging Mixture of Experts for Improved Speech Deepfake Detection

Sep 24, 2024

Abstract: Speech deepfakes pose a significant threat to personal security and content authenticity. Several detectors have been proposed in the literature, and one of the primary challenges these systems have to face is the generalization over unseen data to identify fake signals across a wide range of datasets. In this paper, we introduce a novel approach for enhancing speech deepfake detection performance using a Mixture of Experts architecture. The Mixture of Experts framework is well-suited for the speech deepfake detection task due to its ability to specialize in different input types and handle data variability efficiently. This approach offers superior generalization and adaptability to unseen data compared to traditional single models or ensemble methods. Additionally, its modular structure supports scalable updates, making it more flexible in managing the evolving complexity of deepfake techniques while maintaining high detection accuracy. We propose an efficient, lightweight gating mechanism to dynamically assign expert weights for each input, optimizing detection performance. Experimental results across multiple datasets demonstrate the effectiveness and potential of our proposed approach.
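A gating mechanism that assigns per-input weights to experts can be sketched in a few lines. The abstract does not describe the gate's architecture, so the single linear layer followed by a softmax used here is an assumption, and the two constant "experts" and the gate weights are hypothetical placeholders for trained detectors and learned parameters.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def gate_logits(features, gate_weights):
    """Assumed gate: one linear layer with one weight row per expert."""
    return [sum(w * x for w, x in zip(row, features)) for row in gate_weights]

def moe_score(features, experts, gate_weights):
    """Fuse expert scores with input-dependent gate weights."""
    weights = softmax(gate_logits(features, gate_weights))
    expert_scores = [expert(features) for expert in experts]
    fused = sum(w * s for w, s in zip(weights, expert_scores))
    return fused, weights

# Hypothetical experts that return a fixed "fake" probability.
experts = [lambda x: 0.9, lambda x: 0.2]
gate_weights = [[1.0, 0.0], [0.0, 1.0]]

# This input activates the first gate row, so the first expert dominates.
fused, weights = moe_score([2.0, 0.0], experts, gate_weights)
```

Since the gate weights are recomputed for every input, different experts can dominate on different kinds of data, which is the specialization property the abstract refers to.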

FakeMusicCaps: a Dataset for Detection and Attribution of Synthetic Music Generated via Text-to-Music Models

Sep 16, 2024

Abstract: Text-To-Music (TTM) models have recently revolutionized the automatic music generation research field, both by outperforming all previous state-of-the-art models and by lowering the technical proficiency needed to use them. For these reasons, they have quickly been adopted for commercial uses and music production practices. This widespread diffusion of TTMs raises several concerns regarding copyright violation and rightful attribution, and calls for serious consideration by the audio forensics community. In this paper, we tackle the problem of detection and attribution of TTM-generated data. We propose a dataset, FakeMusicCaps, that contains several versions of the music-caption pairs dataset MusicCaps re-generated via several state-of-the-art TTM techniques. We evaluate the proposed dataset by performing initial experiments regarding the detection and attribution of TTM-generated audio.

Analyzing the Impact of Splicing Artifacts in Partially Fake Speech Signals

Aug 25, 2024

Abstract: Speech deepfake detection has recently gained significant attention within the multimedia forensics community. Related issues have also been explored, such as the identification of partially fake signals, i.e., tracks that include both real and fake speech segments. However, generating high-quality spliced audio is not as straightforward as it may appear. Spliced signals are typically created through basic signal concatenation. This process could introduce noticeable artifacts that can make the generated data easier to detect. We analyze spliced audio tracks resulting from signal concatenation, investigate their artifacts and assess whether such artifacts introduce any bias in existing datasets. Our findings reveal that by analyzing splicing artifacts, we can achieve a detection EER of 6.16% and 7.36% on the PartialSpoof and HAD datasets, respectively, without needing to train any detector. These results underscore the complexities of generating reliable spliced audio data and lead to discussions that can help improve future research in this area.
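For readers unfamiliar with the metric, the Equal Error Rate (EER) is the operating point at which the false acceptance rate equals the false rejection rate. The sketch below computes an approximate EER by scanning the observed scores as candidate thresholds. The score convention (higher means more likely real) and the example scores are assumptions for illustration and are unrelated to the results reported above.

```python
def equal_error_rate(real_scores, fake_scores):
    """Approximate EER by scanning every observed score as a threshold.

    Assumed convention: a track is accepted as real when score >= threshold.
    """
    thresholds = sorted(set(real_scores) | set(fake_scores))
    best_gap, eer = None, None
    for t in thresholds:
        # False rejection: a real track scored below the threshold.
        frr = sum(s < t for s in real_scores) / len(real_scores)
        # False acceptance: a fake track scored at or above the threshold.
        far = sum(s >= t for s in fake_scores) / len(fake_scores)
        gap = abs(far - frr)
        if best_gap is None or gap < best_gap:
            best_gap, eer = gap, (far + frr) / 2
    return eer

# Hypothetical scores: one real and one fake track are on the wrong side.
real = [0.9, 0.8, 0.7, 0.4]
fake = [0.1, 0.2, 0.3, 0.6]
eer = equal_error_rate(real, fake)

# Perfectly separated scores give an EER of zero.
eer_separated = equal_error_rate([0.9, 0.8], [0.1, 0.2])
```

With finite data the two error rates rarely cross exactly, so this sketch reports the average of the two rates at the threshold where they are closest.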

Deepfake Media Forensics: State of the Art and Challenges Ahead

Aug 01, 2024

Abstract: AI-generated synthetic media, also called Deepfakes, have significantly influenced many domains, from entertainment to cybersecurity. Generative Adversarial Networks (GANs) and Diffusion Models (DMs) are the main frameworks used to create Deepfakes, producing highly realistic yet fabricated content. While these technologies open up new creative possibilities, they also bring substantial ethical and security risks due to their potential misuse. The rise of such advanced media has led to the development of a cognitive bias known as Impostor Bias, where individuals doubt the authenticity of multimedia due to the awareness of AI's capabilities. As a result, Deepfake detection has become a vital area of research, focusing on identifying subtle inconsistencies and artifacts with machine learning techniques, especially Convolutional Neural Networks (CNNs). Research in forensic Deepfake technology encompasses five main areas: detection, attribution and recognition, passive authentication, detection in realistic scenarios, and active authentication. Each area tackles specific challenges, from tracing the origins of synthetic media to examining its inherent characteristics for authenticity. This paper reviews the primary algorithms that address these challenges, examining their advantages, limitations, and future prospects.
