Shuguang Dou

Visual Bridge: Universal Visual Perception Representations Generating

Nov 11, 2025

Abstract: Recent advances in diffusion models have achieved remarkable success in isolated computer vision tasks such as text-to-image generation, depth estimation, and optical flow. However, these models are often restricted by a "single-task-single-model" paradigm, severely limiting their generalizability and scalability in multi-task scenarios. Motivated by the cross-domain generalization ability of large language models, we propose a universal visual perception framework based on flow matching that can generate diverse visual representations across multiple tasks. Our approach formulates the process as a universal flow-matching problem from image patch tokens to task-specific representations rather than an independent generation or regression problem. By leveraging a strong self-supervised foundation model as the anchor and introducing a multi-scale, circular task embedding mechanism, our method learns a universal velocity field to bridge the gap between heterogeneous tasks, supporting efficient and flexible representation transfer. Extensive experiments on classification, detection, segmentation, depth estimation, and image-text retrieval demonstrate that our model achieves competitive performance in both zero-shot and fine-tuned settings, outperforming prior generalist and several specialist models. Ablation studies further validate the robustness, scalability, and generalization of our framework. Our work marks a significant step towards general-purpose visual perception, providing a solid foundation for future research in universal vision modeling.
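To make the flow-matching formulation in this abstract concrete, the following is a minimal sketch of a generic flow-matching objective. It assumes a straight-line path between a source vector `x0` and a target vector `x1`; the abstract does not state which path the paper uses, and the function names here are hypothetical. The paper's anchor model, task embedding, and network are not reproduced.

```python
# Generic flow-matching sketch (NOT the paper's implementation).
# Assumption: a straight-line path x_t = (1 - t) * x0 + t * x1,
# whose target velocity is the constant x1 - x0.

def interpolate(x0, x1, t):
    """Point on the straight path from x0 (t=0) to x1 (t=1)."""
    return [(1.0 - t) * a + t * b for a, b in zip(x0, x1)]

def target_velocity(x0, x1):
    """Velocity of the straight path; it does not depend on t."""
    return [b - a for a, b in zip(x0, x1)]

def flow_matching_loss(predict_velocity, x0, x1, t):
    """Mean squared error between predicted and target velocity at x_t."""
    x_t = interpolate(x0, x1, t)
    v_pred = predict_velocity(x_t, t)
    v_tgt = target_velocity(x0, x1)
    return sum((p - q) ** 2 for p, q in zip(v_pred, v_tgt)) / len(v_tgt)

def euler_integrate(velocity, x0, steps=10):
    """Transport x0 along a velocity field with fixed-step Euler updates."""
    x = list(x0)
    dt = 1.0 / steps
    for k in range(steps):
        v = velocity(x, k * dt)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x
```

In this sketch, `predict_velocity` stands in for a learned model. With a perfect velocity field the loss is zero and Euler integration carries `x0` to `x1`; in practice the field would be a network trained over many sampled pairs and time steps.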

DROP: Decouple Re-Identification and Human Parsing with Task-specific Features for Occluded Person Re-identification

Jan 31, 2024

Abstract: The paper introduces the Decouple Re-identificatiOn and human Parsing (DROP) method for occluded person re-identification (ReID). Unlike mainstream approaches using global features for simultaneous multi-task learning of ReID and human parsing, or relying on semantic information for attention guidance, DROP argues that the inferior performance of the former is due to distinct granularity requirements for ReID and human parsing features. ReID focuses on instance part-level differences between pedestrian parts, while human parsing centers on semantic spatial context, reflecting the internal structure of the human body. To address this, DROP decouples features for ReID and human parsing, proposing detail-preserving upsampling to combine varying resolution feature maps. Parsing-specific features for human parsing are decoupled, and human position information is exclusively added to the human parsing branch. In the ReID branch, a part-aware compactness loss is introduced to enhance instance-level part differences. Experimental results highlight the efficacy of DROP, especially achieving a Rank-1 accuracy of 76.8% on Occluded-Duke, surpassing two mainstream methods. The codebase is accessible at https://github.com/shuguang-52/DROP.

Invisible Backdoor Attack with Dynamic Triggers against Person Re-identification

Nov 20, 2022

Abstract: In recent years, person Re-identification (ReID) has rapidly progressed with wide real-world applications, but also poses significant risks of adversarial attacks. In this paper, we focus on the backdoor attack on deep ReID models. Existing backdoor attack methods follow an all-to-one/all attack scenario, where all the target classes in the test set have already been seen in the training set. However, ReID is a much more complex fine-grained open-set recognition problem, where the identities in the test set are not contained in the training set. Thus, previous backdoor attack methods for classification are not applicable for ReID. To ameliorate this issue, we propose a novel backdoor attack on deep ReID under a new all-to-unknown scenario, called Dynamic Triggers Invisible Backdoor Attack (DT-IBA). Instead of learning fixed triggers for the target classes from the training set, DT-IBA can dynamically generate new triggers for any unknown identities. Specifically, an identity hashing network is proposed to first extract target identity information from a reference image, which is then injected into the benign images by image steganography. We extensively validate the effectiveness and stealthiness of the proposed attack on benchmark datasets, and evaluate the effectiveness of several defense methods against our attack.

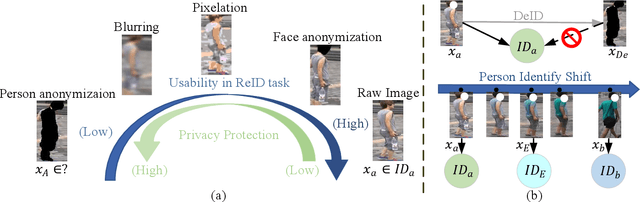

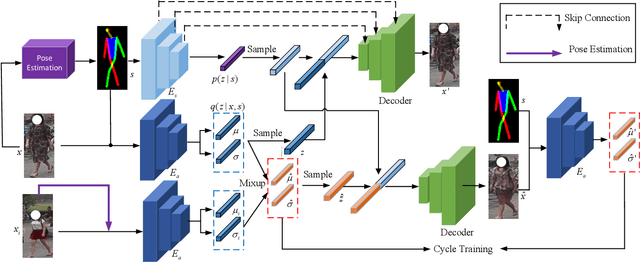

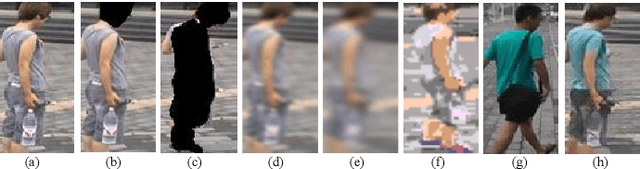

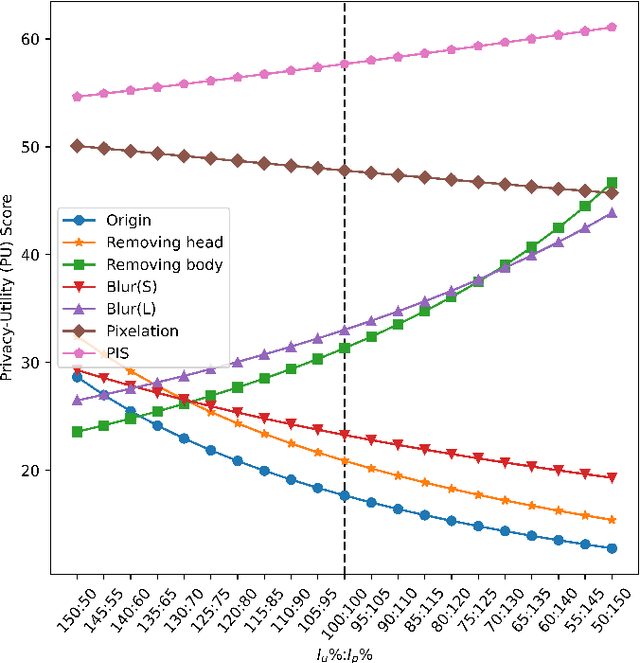

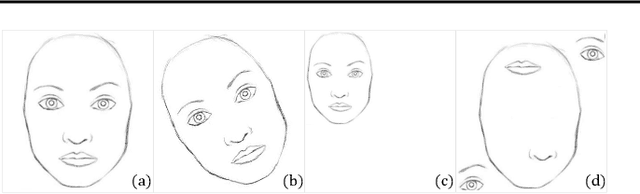

Towards Privacy-Preserving Person Re-identification via Person Identify Shift

Jul 15, 2022

Abstract: Privacy concerns about person re-identification (ReID) have recently drawn growing attention, and preserving the privacy of the pedestrian images used by ReID methods has become essential. De-identification (DeID) methods alleviate privacy issues by removing identity-related information from ReID data. However, most existing DeID methods tend to remove all identity-related information, which compromises the usability of de-identified data for the ReID task. In this paper, we aim to develop a technique that achieves a good trade-off between privacy protection and data usability for person ReID. To this end, we propose a novel de-identification method designed explicitly for person ReID, named Person Identify Shift (PIS). PIS removes the absolute identity in a pedestrian image while preserving the identity relationship between image pairs. By exploiting the interpolation property of the variational auto-encoder, PIS shifts each pedestrian image from its current identity to a new one, so that the resulting images still preserve their relative identities. Experimental results show that our method achieves a better trade-off between privacy preservation and model performance than existing de-identification methods and can defend against human and model attacks on data privacy.

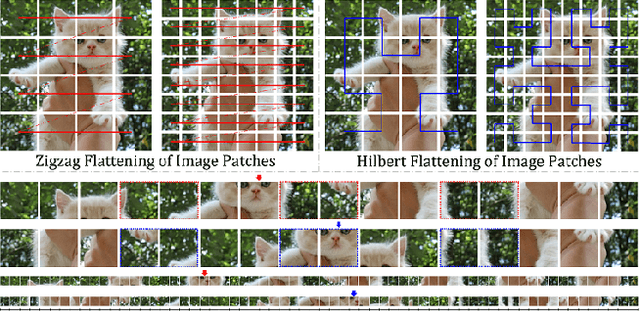

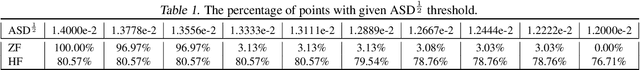

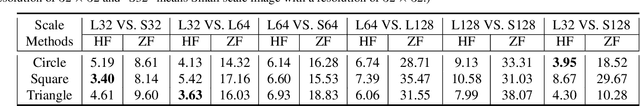

Rethinking the Zigzag Flattening for Image Reading

Mar 15, 2022

Abstract: The sequence ordering of word vectors matters a lot to text reading, as has been shown in natural language processing (NLP). However, the role of different sequence orderings in computer vision (CV) has not been well explored, e.g., why "zigzag" flattening (ZF) is commonly used as the default option for ordering image patches in vision transformers (ViTs). Notably, when decomposing multi-scale images, ZF cannot maintain the invariance of feature point positions. To this end, we investigate Hilbert fractal flattening (HF) as another method for sequence ordering in CV and contrast it with ZF. HF has been shown to be superior to other curves in maintaining spatial locality when performing multi-scale transformations of dimensional space, and it can be easily plugged into most deep neural networks (DNNs). Extensive experiments demonstrate that it yields consistent and significant performance boosts for a variety of architectures. Finally, we hope that our studies spark further research on flattening strategies for image reading.
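The locality contrast described in this abstract can be illustrated with a short sketch. It assumes that "zigzag" flattening refers to the usual row-major raster scan of a patch grid, and it uses the standard index-to-coordinate Hilbert curve construction; the function names are hypothetical and this is not the authors' code. The grid side `n` must be a power of two.

```python
# Sketch: Hilbert-curve vs. row-major ordering of an n x n patch grid.
# Assumptions: n is a power of two; "zigzag" is taken to mean raster scan.

def hilbert_d2xy(n, d):
    """Map position d along the Hilbert curve to (x, y) on an n x n grid."""
    x = y = 0
    t = d
    s = 1
    while s < n:
        rx = 1 & (t // 2)
        ry = 1 & (t ^ rx)
        if ry == 0:
            if rx == 1:
                # Flip the current sub-square before swapping axes.
                x = s - 1 - x
                y = s - 1 - y
            x, y = y, x
        x += s * rx
        y += s * ry
        t //= 4
        s *= 2
    return x, y

def hilbert_order(n):
    """Row-major patch indices listed in the order the Hilbert curve visits them."""
    order = []
    for d in range(n * n):
        x, y = hilbert_d2xy(n, d)
        order.append(y * n + x)
    return order

def raster_order(n):
    """Row-major (raster) patch order."""
    return list(range(n * n))

def max_jump(order, n):
    """Largest Manhattan distance between consecutive patches in an ordering."""
    worst = 0
    for a, b in zip(order, order[1:]):
        dist = abs(a % n - b % n) + abs(a // n - b // n)
        worst = max(worst, dist)
    return worst
```

Applying `hilbert_order(n)` as a permutation to a raster-ordered patch sequence keeps consecutive tokens spatially adjacent, whereas the raster order jumps across the full grid width at every row boundary. `max_jump` makes that difference measurable for any grid size.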

GSC Loss: A Gaussian Score Calibrating Loss for Deep Learning

Mar 02, 2022

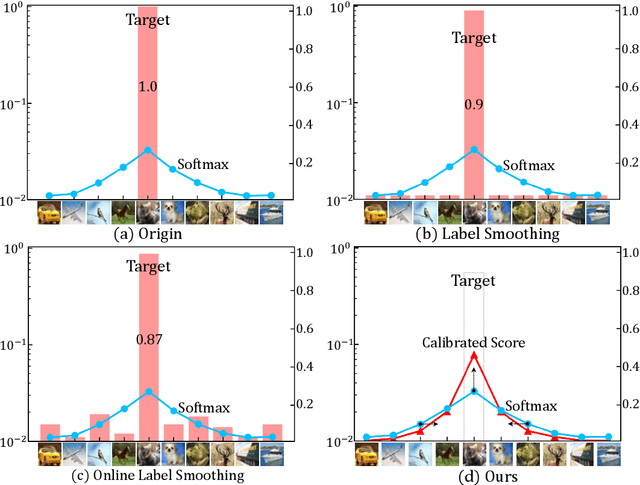

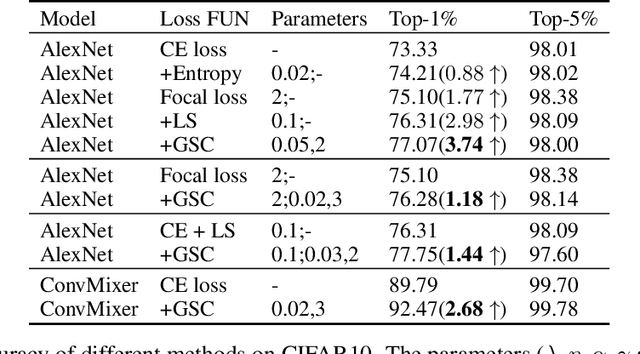

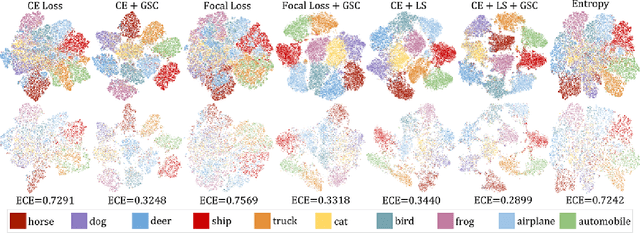

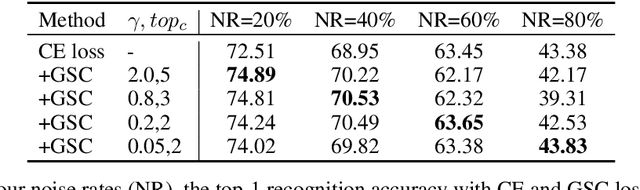

Abstract: Cross entropy (CE) loss integrated with softmax is an orthodox component in most classification-based frameworks, but it fails to obtain an accurate probability distribution of predicted scores, which is critical for further decision-making on poorly classified samples. Prediction score calibration provides a solution: learning the distribution of predicted scores, which can explicitly make the model obtain a discriminative representation. The entropy function can be used to measure the uncertainty of predicted scores, but its gradient variation is not in line with the expectations of model optimization. To this end, we propose a general Gaussian Score Calibrating (GSC) loss to calibrate the predicted scores produced by deep neural networks (DNNs). Extensive experiments on over 10 benchmark datasets demonstrate that the proposed GSC loss yields consistent and significant performance boosts in a variety of visual tasks. Notably, our label-independent GSC loss can be easily embedded into common improved methods based on the CE loss.
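The abstract does not give the form of the GSC loss, so the sketch below covers only the quantity it mentions as a baseline: the entropy of softmax-predicted scores as a measure of uncertainty. The function names are hypothetical and nothing here represents the proposed loss.

```python
import math

# Sketch: entropy of softmax scores as an uncertainty measure.
# This is NOT the GSC loss, whose definition is not given in the abstract.

def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def entropy(probs):
    """Shannon entropy in nats; zero-probability terms contribute nothing."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)
```

A uniform prediction over K classes gives the maximum entropy, log K, and the value falls as the prediction becomes more confident. Note that entropy does not use the label, which matches the abstract's description of a label-independent term.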
