GSC Loss: A Gaussian Score Calibrating Loss for Deep Learning

Paper and Code

Mar 02, 2022

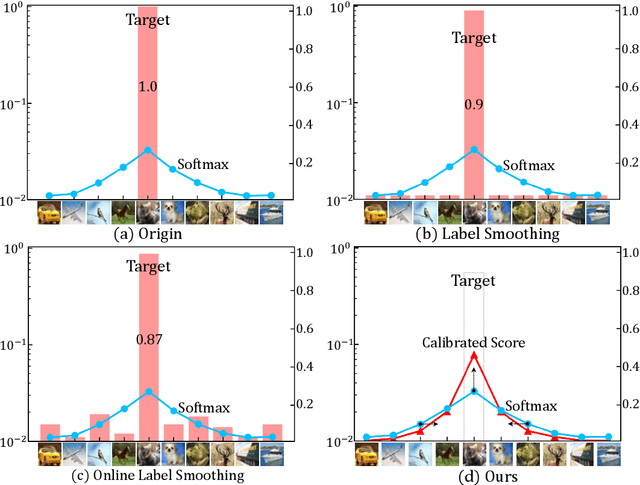

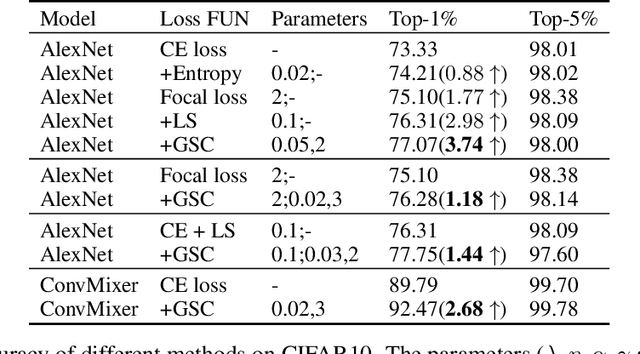

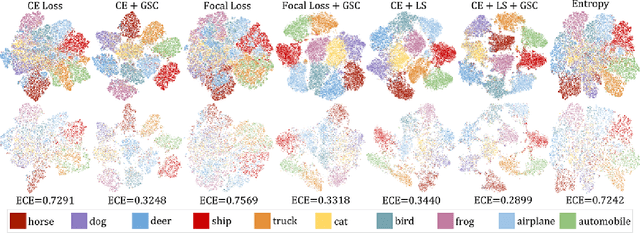

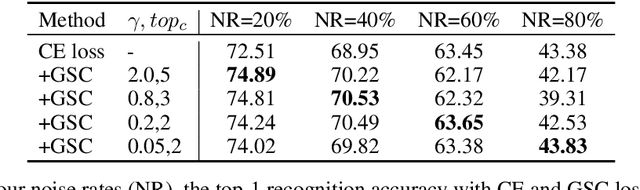

Cross entropy (CE) loss integrated with softmax is a standard component of most classification-based frameworks, but it fails to yield an accurate probability distribution over predicted scores, which is critical for further decision-making on poorly classified samples. Prediction score calibration offers a solution: learning the distribution of predicted scores explicitly pushes the model toward a discriminative representation. The entropy function can be used to measure the uncertainty of predicted scores, but its gradient variation is not in line with the expectations of model optimization. To this end, we propose a general Gaussian Score Calibrating (GSC) loss to calibrate the predicted scores produced by deep neural networks (DNNs). Extensive experiments on over 10 benchmark datasets demonstrate that the proposed GSC loss yields consistent and significant performance boosts in a variety of visual tasks. Notably, our label-independent GSC loss can be easily embedded into common improved methods based on the CE loss.
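To make the quantities named in the abstract concrete, the following minimal sketch computes softmax scores, the CE loss, and the Shannon entropy of the predicted scores for two hypothetical logit vectors. It does not implement the GSC loss, whose exact form is not given in this abstract; the function names and example logits are illustrative assumptions only.

```python
import math

def softmax(logits):
    """Convert raw logits to predicted scores (shifted by the max for numerical stability)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(probs, label):
    """CE loss for one sample: negative log of the score assigned to the true class."""
    return -math.log(probs[label])

def entropy(probs):
    """Shannon entropy of the predicted scores; needs no label, higher means more uncertain."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)

# Hypothetical logits for a 3-class problem (illustrative values, not from the paper).
confident = softmax([8.0, 0.5, 0.2])   # one class clearly dominates
uncertain = softmax([1.1, 1.0, 0.9])   # scores are nearly uniform

for name, probs in (("confident", confident), ("uncertain", uncertain)):
    print(name, "CE:", cross_entropy(probs, 0), "entropy:", entropy(probs))
```

The entropy term depends only on the predicted scores, which is the sense in which a score-based regularizer can be label-independent; the near-uniform prediction has the higher entropy, bounded above by the log of the number of classes.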
