Shintaro Yamamoto

Community-Driven Comprehensive Scientific Paper Summarization: Insight from cvpaper.challenge

Mar 17, 2022

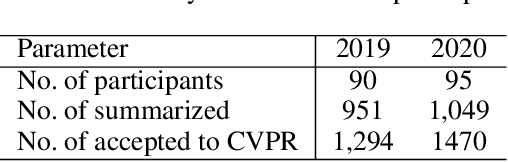

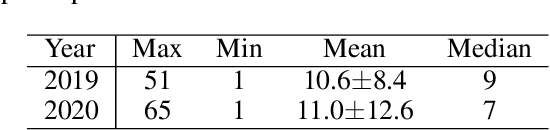

Abstract: The present paper introduces a group activity involving writing summaries of conference proceedings by volunteer participants. The rapid increase in scientific papers is a heavy burden for researchers, especially non-native speakers, who need to survey scientific literature. To alleviate this problem, we organized a group of non-native English speakers to write summaries of papers presented at a computer vision conference to share the knowledge of the papers read by the group. We summarized a total of 2,000 papers presented at the Conference on Computer Vision and Pattern Recognition, a top-tier conference on computer vision, in 2019 and 2020. We quantitatively analyzed participants' selection regarding which papers they read among the many available papers. The experimental results suggest that we can summarize a wide range of papers without asking participants to read papers unrelated to their interests.

Describing and Localizing Multiple Changes with Transformers

Mar 25, 2021

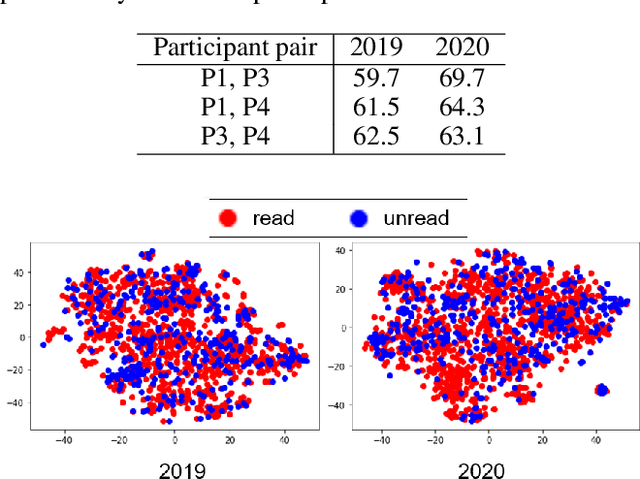

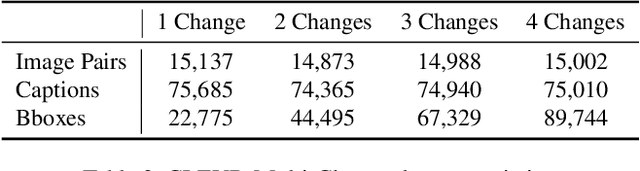

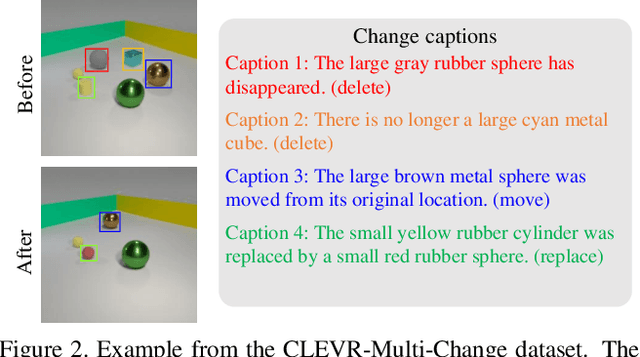

Abstract: Change captioning tasks aim to detect changes in image pairs observed before and after a scene change and generate a natural language description of the changes. Existing change captioning studies have mainly focused on scenes with a single change. However, detecting and describing multiple changed parts in image pairs is essential for enhancing adaptability to complex scenarios. We address these issues from three aspects: (i) we propose a CG-based multi-change captioning dataset; (ii) we benchmark existing state-of-the-art methods of single change captioning on multi-change captioning; (iii) we further propose Multi-Change Captioning transformers (MCCFormers) that identify change regions by densely correlating different regions in image pairs and dynamically determine the related change regions with words in sentences. The proposed method obtained the highest scores on four conventional change captioning evaluation metrics for multi-change captioning. In addition, existing methods generate a single attention map for multiple changes and lack the ability to distinguish change regions. In contrast, our proposed method can separate attention maps for each change and performs well with respect to change localization. Moreover, the proposed framework outperformed the previous state-of-the-art methods on an existing change captioning benchmark, CLEVR-Change, by a large margin (+6.1 on BLEU-4 and +9.7 on CIDEr scores), indicating its general ability in change captioning tasks.

Self-Supervised Learning for Visual Summary Identification in Scientific Publications

Jan 14, 2021

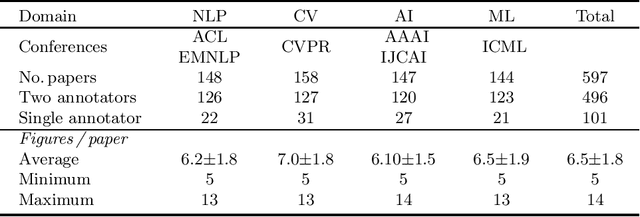

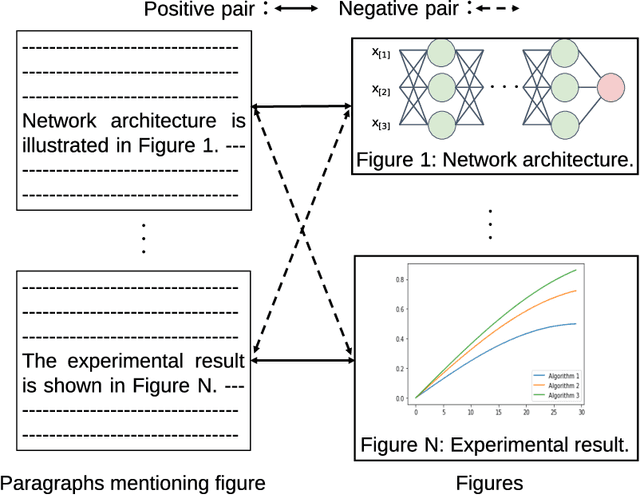

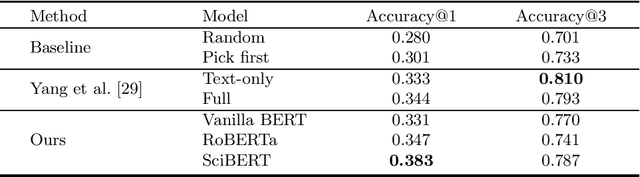

Abstract: Providing visual summaries of scientific publications can increase information access for readers and thereby help deal with the exponential growth in the number of scientific publications. Nonetheless, efforts in providing visual publication summaries have been few and far between, primarily focusing on the biomedical domain. This is primarily because of the limited availability of annotated gold standards, which hampers the application of robust and high-performing supervised learning techniques. To address these problems, we create a new benchmark dataset for selecting figures to serve as visual summaries of publications based on their abstracts, covering several domains in computer science. Moreover, we develop a self-supervised learning approach, based on heuristic matching of inline references to figures with figure captions. Experiments in both biomedical and computer science domains show that our model is able to outperform the state of the art despite being self-supervised and therefore not relying on any annotated training data.

Automatic Paper Summary Generation from Visual and Textual Information

Nov 16, 2018

Abstract: Due to the recent boom in artificial intelligence (AI) research, including computer vision (CV), it has become impossible for researchers in these fields to keep up with the exponentially increasing number of manuscripts. In response to this situation, this paper proposes the paper summary generation (PSG) task using a simple but effective method to automatically generate an academic paper summary from raw PDF data. We realized PSG by combining a vision-based supervised component detector with a language-based unsupervised important-sentence extractor, which is applicable to manuscripts in a format seen during training. We present a quantitative evaluation of the simple vision-based component extraction and a qualitative evaluation showing that our system can extract both visual items and sentences that are helpful for understanding. The 979 manuscripts accepted by the Conference on Computer Vision and Pattern Recognition (CVPR) 2018, processed via our PSG, are available. It is believed that the proposed method will provide a better way for researchers to stay caught up with important academic papers.

Understanding Fake Faces

Sep 22, 2018

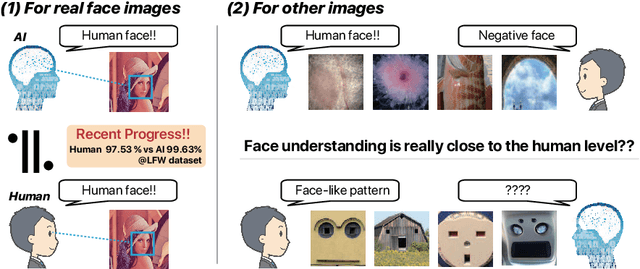

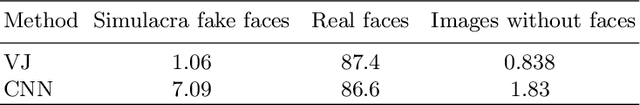

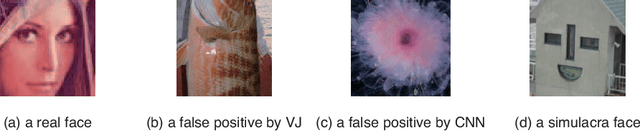

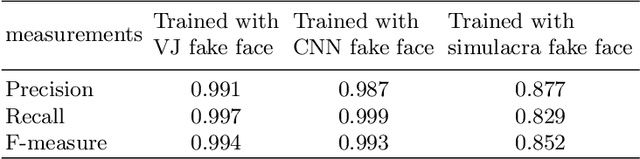

Abstract: Face recognition research is one of the most active topics in computer vision (CV), and deep neural networks (DNN) are now filling the gap between human-level and computer-driven performance levels in face verification algorithms. However, although the performance gap appears to be narrowing in terms of accuracy-based expectations, a curious question has arisen: "Is the face understanding of AI really close to that of humans?" In the present study, in an effort to confirm the brain-driven concept, we conduct image-based detection, classification, and generation using an in-house created fake face database. This database has two configurations: (i) false positive face detections produced using both the Viola Jones (VJ) method and convolutional neural networks (CNN), and (ii) simulacra that have fundamental characteristics that resemble faces but are completely artificial. The results show a level of suggestive knowledge that indicates the continuing existence of a gap between the capabilities of recent vision-based face recognition algorithms and human-level performance. On a positive note, however, we have obtained knowledge that will advance the progress of face-understanding models.
