Hirokatsu Kataoka

3D sans 3D Scans: Scalable Pre-training from Video-Generated Point Clouds

Dec 28, 2025

Abstract:Despite recent progress in 3D self-supervised learning, collecting large-scale 3D scene scans remains expensive and labor-intensive. In this work, we investigate whether 3D representations can be learned from unlabeled videos recorded without any real 3D sensors. We present Laplacian-Aware Multi-level 3D Clustering with Sinkhorn-Knopp (LAM3C), a self-supervised framework that learns from point clouds generated from unlabeled videos. We first introduce RoomTours, a video-generated point cloud dataset constructed by collecting room-walkthrough videos from the web (e.g., real-estate tours) and generating 49,219 scenes using an off-the-shelf feed-forward reconstruction model. We also propose a noise-regularized loss that stabilizes representation learning by enforcing local geometric smoothness and ensuring feature stability under noisy point clouds. Remarkably, without using any real 3D scans, LAM3C achieves higher performance than previous self-supervised methods on indoor semantic and instance segmentation. These results suggest that unlabeled videos represent an abundant source of data for 3D self-supervised learning.
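The abstract names Sinkhorn-Knopp but does not say how LAM3C applies it. For orientation only, here is a minimal sketch of the generic Sinkhorn-Knopp iteration as it is commonly used in clustering-based self-supervised learning, where it turns point-to-cluster scores into soft assignments that use every cluster roughly equally. The function name `sinkhorn_knopp`, the temperature `epsilon`, and the iteration count are illustrative assumptions, not details taken from the paper.

```python
import math


def sinkhorn_knopp(scores, epsilon=0.5, n_iters=100):
    """Balance an N x K score matrix into soft cluster assignments.

    Hypothetical helper: `epsilon` (temperature) and `n_iters` are
    illustrative values, not settings reported for LAM3C.
    """
    n, k = len(scores), len(scores[0])
    # Subtract the global maximum before exponentiating for numerical stability.
    top = max(max(row) for row in scores)
    q = [[math.exp((s - top) / epsilon) for s in row] for row in scores]
    for _ in range(n_iters):
        # Column step: every cluster should receive total mass n / k.
        for j in range(k):
            col_sum = sum(q[i][j] for i in range(n))
            for i in range(n):
                q[i][j] *= (n / k) / col_sum
        # Row step: every point's assignment should sum to 1.
        for i in range(n):
            row_sum = sum(q[i])
            q[i] = [v / row_sum for v in q[i]]
    return q


# Four points that all prefer cluster 0 are still split evenly across clusters.
scores = [[0.9, 0.1], [0.8, 0.2], [0.7, 0.3], [0.6, 0.4]]
assignments = sinkhorn_knopp(scores)
```

The balancing step is what prevents the degenerate solution in which every point collapses into a single cluster.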

S3OD: Towards Generalizable Salient Object Detection with Synthetic Data

Oct 24, 2025

Abstract:Salient object detection exemplifies data-bounded tasks where expensive pixel-precise annotations force separate model training for related subtasks like DIS and HR-SOD. We present a method that dramatically improves generalization through large-scale synthetic data generation and ambiguity-aware architecture. We introduce S3OD, a dataset of over 139,000 high-resolution images created through our multi-modal diffusion pipeline that extracts labels from diffusion and DINO-v3 features. The iterative generation framework prioritizes challenging categories based on model performance. We propose a streamlined multi-mask decoder that naturally handles the inherent ambiguity in salient object detection by predicting multiple valid interpretations. Models trained solely on synthetic data achieve 20-50% error reduction in cross-dataset generalization, while fine-tuned versions reach state-of-the-art performance across DIS and HR-SOD benchmarks.

AgroBench: Vision-Language Model Benchmark in Agriculture

Jul 28, 2025

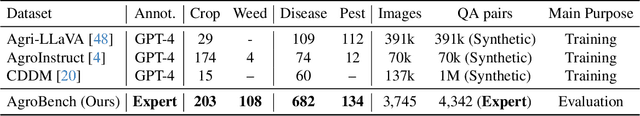

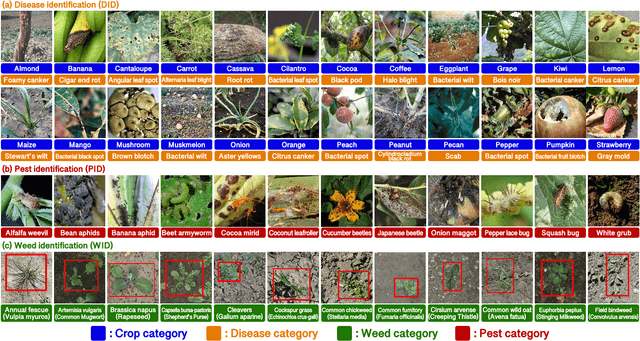

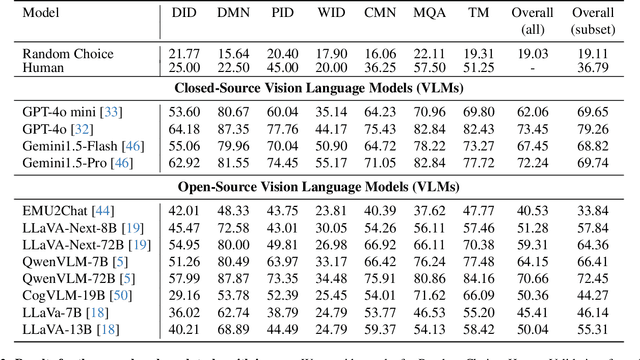

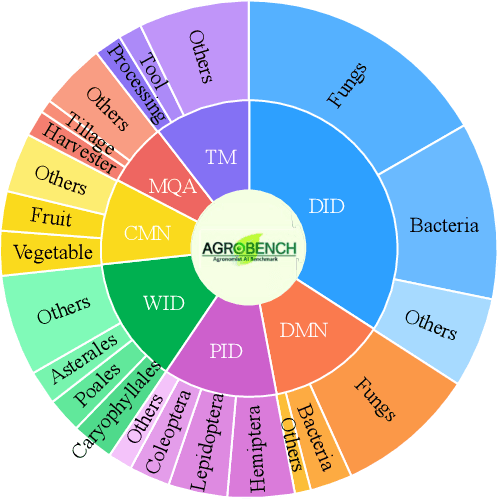

Abstract:Precise automated understanding of agricultural tasks such as disease identification is essential for sustainable crop production. Recent advances in vision-language models (VLMs) are expected to further expand the range of agricultural tasks by facilitating human-model interaction through easy, text-based communication. Here, we introduce AgroBench (Agronomist AI Benchmark), a benchmark for evaluating VLMs across seven agricultural topics, covering key areas in agricultural engineering that are relevant to real-world farming. Unlike recent agricultural VLM benchmarks, AgroBench is annotated by expert agronomists. AgroBench covers a state-of-the-art range of categories, including 203 crop categories and 682 disease categories, to thoroughly evaluate VLM capabilities. In our evaluation on AgroBench, we reveal that VLMs have room for improvement in fine-grained identification tasks. Notably, in weed identification, most open-source VLMs perform close to random. With our wide range of topics and expert-annotated categories, we analyze the types of errors made by VLMs and suggest potential pathways for future VLM development. Our dataset and code are available at https://dahlian00.github.io/AgroBenchPage/ .

AnimalClue: Recognizing Animals by their Traces

Jul 27, 2025

Abstract:Wildlife observation plays an important role in biodiversity conservation, necessitating robust methodologies for monitoring wildlife populations and interspecies interactions. Recent advances in computer vision have significantly contributed to automating fundamental wildlife observation tasks, such as animal detection and species identification. However, accurately identifying species from indirect evidence like footprints and feces remains relatively underexplored, despite its importance in contributing to wildlife monitoring. To bridge this gap, we introduce AnimalClue, the first large-scale dataset for species identification from images of indirect evidence. Our dataset consists of 159,605 bounding boxes encompassing five categories of indirect clues: footprints, feces, eggs, bones, and feathers. It covers 968 species, 200 families, and 65 orders. Each image is annotated with species-level labels, bounding boxes or segmentation masks, and fine-grained trait information, including activity patterns and habitat preferences. Unlike existing datasets primarily focused on direct visual features (e.g., animal appearances), AnimalClue presents unique challenges for classification, detection, and instance segmentation tasks due to the need for recognizing more detailed and subtle visual features. In our experiments, we extensively evaluate representative vision models and identify key challenges in animal identification from their traces. Our dataset and code are available at https://dahlian00.github.io/AnimalCluePage/

Industrial Synthetic Segment Pre-training

May 20, 2025

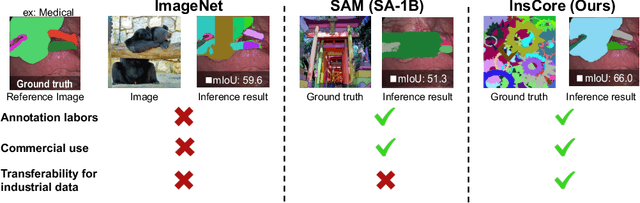

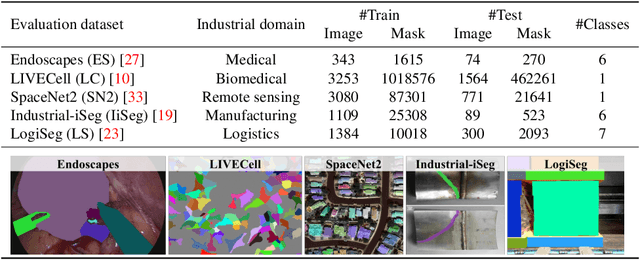

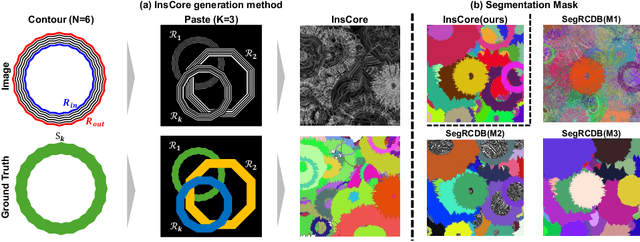

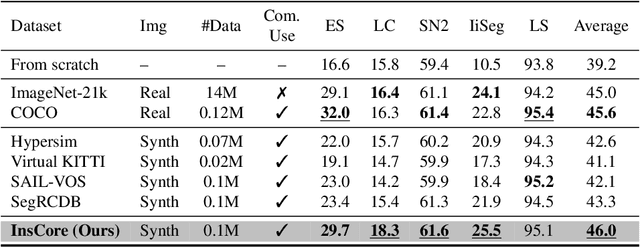

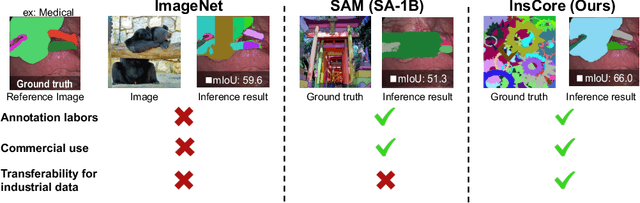

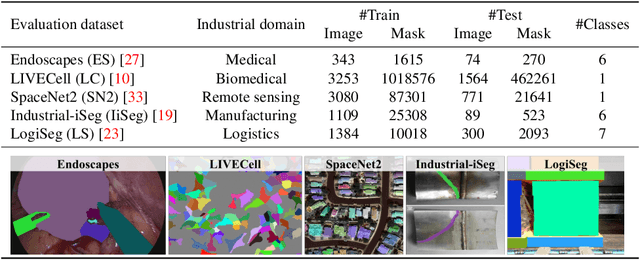

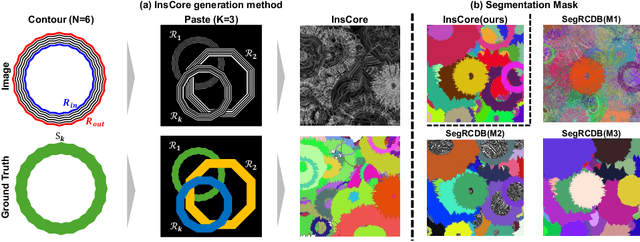

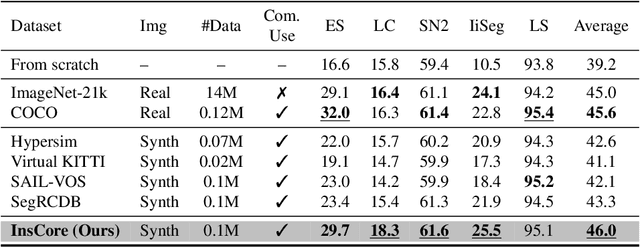

Abstract:Pre-training on real-image datasets has been widely proven effective for improving instance segmentation. However, industrial applications face two key challenges: (1) legal and ethical restrictions, such as ImageNet's prohibition of commercial use, and (2) limited transferability due to the domain gap between web images and industrial imagery. Even recent vision foundation models, including the segment anything model (SAM), show notable performance degradation in industrial settings. These challenges raise critical questions: Can we build a vision foundation model for industrial applications without relying on real images or manual annotations? And can such models outperform even fine-tuned SAM on industrial datasets? To address these questions, we propose the Instance Core Segmentation Dataset (InsCore), a synthetic pre-training dataset based on formula-driven supervised learning (FDSL). InsCore generates fully annotated instance segmentation images that reflect key characteristics of industrial data, including complex occlusions, dense hierarchical masks, and diverse non-rigid shapes, distinct from typical web imagery. Unlike previous methods, InsCore requires neither real images nor human annotations. Experiments on five industrial datasets show that models pre-trained with InsCore outperform those trained on COCO and ImageNet-21k, as well as fine-tuned SAM, achieving an average improvement of 6.2 points in instance segmentation performance. This result is achieved using only 100k synthetic images, more than 100 times fewer than the 11 million images in SAM's SA-1B dataset, demonstrating the data efficiency of our approach. These findings position InsCore as a practical and license-free vision foundation model for industrial applications.

Industry-focused Synthetic Segmentation Pre-training

May 19, 2025

Abstract:Pre-training on real-image datasets has been widely proven effective for improving instance segmentation. However, industrial applications face two key challenges: (1) legal and ethical restrictions, such as ImageNet's prohibition of commercial use, and (2) limited transferability due to the domain gap between web images and industrial imagery. Even recent vision foundation models, including the segment anything model (SAM), show notable performance degradation in industrial settings. These challenges raise critical questions: Can we build a vision foundation model for industrial applications without relying on real images or manual annotations? And can such models outperform even fine-tuned SAM on industrial datasets? To address these questions, we propose the Instance Core Segmentation Dataset (InsCore), a synthetic pre-training dataset based on formula-driven supervised learning (FDSL). InsCore generates fully annotated instance segmentation images that reflect key characteristics of industrial data, including complex occlusions, dense hierarchical masks, and diverse non-rigid shapes, distinct from typical web imagery. Unlike previous methods, InsCore requires neither real images nor human annotations. Experiments on five industrial datasets show that models pre-trained with InsCore outperform those trained on COCO and ImageNet-21k, as well as fine-tuned SAM, achieving an average improvement of 6.2 points in instance segmentation performance. This result is achieved using only 100k synthetic images, more than 100 times fewer than the 11 million images in SAM's SA-1B dataset, demonstrating the data efficiency of our approach. These findings position InsCore as a practical and license-free vision foundation model for industrial applications.

Simple Visual Artifact Detection in Sora-Generated Videos

Apr 30, 2025

Abstract:The December 2024 release of OpenAI's Sora, a powerful video generation model driven by natural language prompts, highlights a growing convergence between large language models (LLMs) and video synthesis. As these multimodal systems evolve into video-enabled LLMs (VidLLMs), capable of interpreting, generating, and interacting with visual content, understanding their limitations and ensuring their safe deployment becomes essential. This study investigates visual artifacts frequently found and reported in Sora-generated videos, which can compromise quality, mislead viewers, or propagate disinformation. We propose a multi-label classification framework targeting four common artifact label types: label 1: boundary / edge defects, label 2: texture / noise issues, label 3: movement / joint anomalies, and label 4: object mismatches / disappearances. Using a dataset of 300 manually annotated frames extracted from 15 Sora-generated videos, we trained multiple 2D CNN architectures (ResNet-50, EfficientNet-B3 / B4, ViT-Base). The best-performing model, ResNet-50, achieved an average multi-label classification accuracy of 94.14%. This work supports the broader development of VidLLMs by contributing to (1) the creation of datasets for video quality evaluation, (2) interpretable artifact-based analysis beyond language metrics, and (3) the identification of visual risks relevant to factuality and safety.
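The abstract reports an "average multi-label classification accuracy" without defining it. One plausible reading, assumed here purely for illustration, is per-label binary accuracy averaged over the four artifact labels. The function names and the toy frames below are hypothetical and are not taken from the paper's data.

```python
# The four artifact labels named in the abstract, in order.
LABELS = [
    "boundary / edge defects",
    "texture / noise issues",
    "movement / joint anomalies",
    "object mismatches / disappearances",
]


def per_label_accuracy(y_true, y_pred):
    """Fraction of frames on which each label is predicted correctly."""
    n_frames = len(y_true)
    return [
        sum(t[j] == p[j] for t, p in zip(y_true, y_pred)) / n_frames
        for j in range(len(LABELS))
    ]


def average_accuracy(y_true, y_pred):
    """Mean of the per-label accuracies (assumed definition, see lead-in)."""
    accs = per_label_accuracy(y_true, y_pred)
    return sum(accs) / len(accs)


# Toy example: 4 frames, each with a 0/1 flag per artifact label.
y_true = [[1, 0, 0, 1], [0, 1, 0, 0], [1, 1, 0, 0], [0, 0, 1, 0]]
y_pred = [[1, 0, 0, 1], [0, 1, 1, 0], [1, 0, 0, 0], [0, 0, 1, 0]]
print(per_label_accuracy(y_true, y_pred))  # [1.0, 0.75, 0.75, 1.0]
print(average_accuracy(y_true, y_pred))    # 0.875
```

Other definitions, such as exact-match accuracy over all four labels at once, would give different numbers for the same predictions.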

Formula-Supervised Sound Event Detection: Pre-Training Without Real Data

Apr 06, 2025

Abstract:In this paper, we propose a novel formula-driven supervised learning (FDSL) framework for pre-training an environmental sound analysis model by leveraging acoustic signals parametrically synthesized through formula-driven methods. Specifically, we outline detailed procedures and evaluate their effectiveness for sound event detection (SED). The SED task, which involves estimating the types and timings of sound events, is particularly challenged by the difficulty of acquiring a sufficient quantity of accurately labeled training data. Moreover, it is well known that manually annotated labels often contain noise and are significantly influenced by the subjective judgment of annotators. To address these challenges, we propose a novel pre-training method that utilizes a synthetic dataset, Formula-SED, where acoustic data are generated solely based on mathematical formulas. The proposed method enables large-scale pre-training by using the synthesis parameters applied at each time step as ground truth labels, thereby eliminating label noise and bias. We demonstrate that large-scale pre-training with Formula-SED significantly enhances model accuracy and accelerates training, as evidenced by our results on the DESED dataset used for DCASE2023 Challenge Task 4. The project page is at https://yutoshibata07.github.io/Formula-SED/
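To make the idea of "synthesis parameters as ground truth labels" concrete, the sketch below generates a clip from sine tones and derives frame-level labels directly from the onset and offset used during synthesis. It is a toy illustration under stated assumptions: the sine-tone "classes", sample rate, frame length, and the name `synthesize_clip` are all hypothetical and do not reproduce the formulas used in Formula-SED.

```python
import math
import random

SAMPLE_RATE = 8000   # samples per second (illustrative)
FRAME_LEN = 800      # samples per label frame, i.e. 0.1 s (illustrative)


def synthesize_clip(n_frames=20, n_classes=3, seed=0):
    """Return (audio, labels): labels[f][c] is 1 if class c sounds in frame f.

    Each hypothetical class is a sine tone at a fixed frequency; its onset and
    offset are sampled parameters that double as exact frame-level labels.
    """
    rng = random.Random(seed)
    audio = [0.0] * (n_frames * FRAME_LEN)
    labels = [[0] * n_classes for _ in range(n_frames)]
    for cls in range(n_classes):
        onset = rng.randrange(0, n_frames - 1)            # first active frame
        offset = rng.randrange(onset + 1, n_frames + 1)   # one past last active frame
        freq = 220.0 * (cls + 1)
        amp = rng.uniform(0.1, 0.3)
        # Write the tone into the waveform for the active span.
        for i in range(onset * FRAME_LEN, offset * FRAME_LEN):
            audio[i] += amp * math.sin(2 * math.pi * freq * i / SAMPLE_RATE)
        # The same parameters give the labels, so no manual annotation is needed.
        for f in range(onset, offset):
            labels[f][cls] = 1
    return audio, labels


audio, labels = synthesize_clip()
```

Because the labels come from the generator rather than from annotators, they match the audio exactly by construction, which is the property the abstract relies on.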

Pre-training with 3D Synthetic Data: Learning 3D Point Cloud Instance Segmentation from 3D Synthetic Scenes

Mar 31, 2025

Abstract:In recent years, the research community has witnessed growing use of 3D point cloud data owing to its high applicability in various real-world applications. Point clouds make it possible to account for actual size and to support spatial understanding. Application fields include the mechanical control of robots, vehicles, and other real-world systems. Along this line, we aim to improve 3D point cloud instance segmentation, which has emerged as a particularly promising approach for these applications. However, creating 3D point cloud datasets entails enormous costs compared to 2D image datasets. To train a 3D point cloud instance segmentation model, it is necessary not only to assign categories but also to provide detailed annotations for each point in a large-scale 3D space. Meanwhile, the recent increase in generative models for the 3D domain has spurred proposals for using a generative model to create 3D point cloud data. In this work, we propose pre-training with 3D synthetic data to train a 3D point cloud instance segmentation model, based on a generative model for 3D scenes represented by point cloud data. We directly generate 3D point cloud data with Point-E and insert the generated data into a 3D scene. Although more accurate 3D generation models are available as of 2025, even Point-E, an early 3D generative model, can effectively support pre-training with 3D synthetic data. In the experimental section, a comparison of our pre-training method with baseline methods shows improved performance, demonstrating the efficacy of 3D generative models for 3D point cloud instance segmentation.

Leveraging LLMs with Iterative Loop Structure for Enhanced Social Intelligence in Video Question Answering

Mar 27, 2025

Abstract:Social intelligence, the ability to interpret emotions, intentions, and behaviors, is essential for effective communication and adaptive responses. As robots and AI systems become more prevalent in caregiving, healthcare, and education, the demand for AI that can interact naturally with humans grows. However, creating AI that seamlessly integrates multiple modalities, such as vision and speech, remains a challenge. Current video-based methods for social intelligence rely on general video recognition or emotion recognition techniques and often overlook the unique elements inherent in human interactions. To address this, we propose the Looped Video Debating (LVD) framework, which integrates Large Language Models (LLMs) with visual information, such as facial expressions and body movements, to enhance the transparency and reliability of question-answering tasks involving human interaction videos. Our results on the Social-IQ 2.0 benchmark show that LVD achieves state-of-the-art performance without fine-tuning. Furthermore, supplementary human annotations on existing datasets provide insights into the model's accuracy, guiding future improvements in AI-driven social intelligence.
