Shengli Zhang

College of Electronics and Information Engineering, Shenzhen University, Shenzhen, China

Agentic Graph Neural Networks for Wireless Communications and Networking Towards Edge General Intelligence: A Survey

Aug 12, 2025

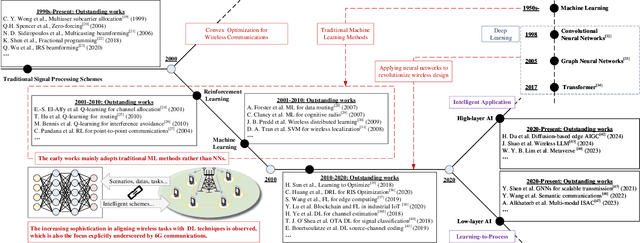

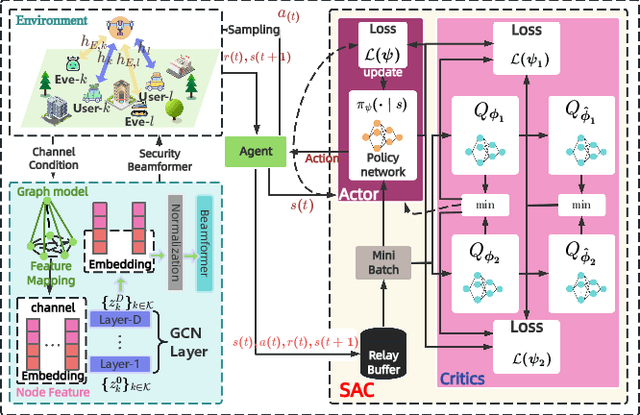

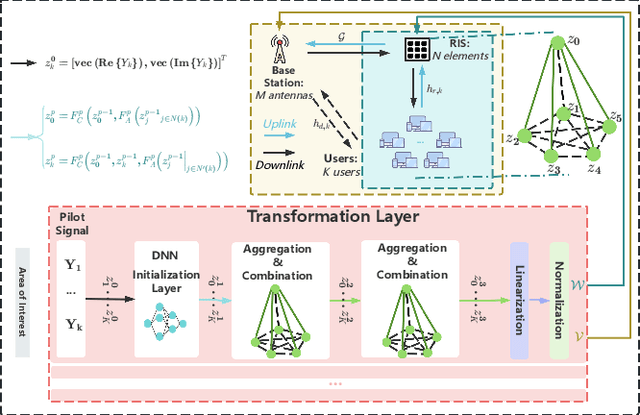

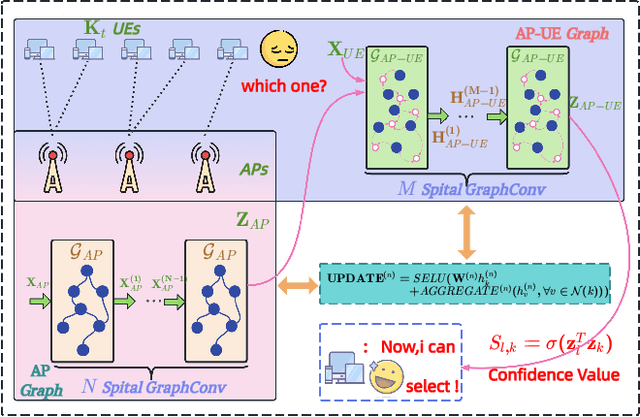

Abstract: The rapid advancement of communication technologies has driven the evolution of communication networks towards both high-dimensional resource utilization and multifunctional integration. This evolving complexity poses significant challenges in designing communication networks to satisfy the growing quality-of-service and time-sensitivity requirements of mobile applications in dynamic environments. Graph neural networks (GNNs) have emerged as fundamental deep learning (DL) models for complex communication networks. GNNs not only augment the extraction of features over network topologies but also enhance scalability and facilitate distributed computation. However, most existing GNNs follow a traditional passive learning framework, which may fail to meet the needs of increasingly diverse wireless systems. This survey proposes the employment of agentic artificial intelligence (AI) to organize and integrate GNNs, enabling scenario- and task-aware implementation towards edge general intelligence. To comprehend the full capability of GNNs, we holistically review recent applications of GNNs in wireless communications and networking. Specifically, we focus on the alignment between graph representations and network topologies, and between neural architectures and wireless tasks. We first provide an overview of GNNs based on prominent neural architectures, followed by the concept of agentic GNNs. Then, we summarize and compare GNN applications for conventional systems and emerging technologies, including physical, MAC, and network layer designs, integrated sensing and communication (ISAC), reconfigurable intelligent surfaces (RIS), and cell-free network architectures. We further propose a large language model (LLM) framework as an intelligent question-answering agent, leveraging this survey as a local knowledge base to enable GNN-related responses tailored to wireless communication research.

Zero-Knowledge Federated Learning: A New Trustworthy and Privacy-Preserving Distributed Learning Paradigm

Mar 18, 2025

Abstract: Federated Learning (FL) has emerged as a promising paradigm in distributed machine learning, enabling collaborative model training while preserving data privacy. However, despite its many advantages, FL still contends with significant challenges, most notably regarding security and trust. Zero-Knowledge Proofs (ZKPs) offer a potential solution by establishing trust and enhancing system integrity throughout the FL process. Although several studies have explored ZKP-based FL (ZK-FL), a systematic framework and comprehensive analysis are still lacking. This article makes two key contributions. First, we propose a structured ZK-FL framework that categorizes and analyzes the technical roles of ZKPs across various FL stages and tasks. Second, we introduce a novel algorithm, Verifiable Client Selection FL (Veri-CS-FL), which employs ZKPs to refine the client selection process. In Veri-CS-FL, participating clients generate verifiable proofs for the performance metrics of their local models and submit these concise proofs to the server for efficient verification. The server then selects clients with high-quality local models for uploading, subsequently aggregating the contributions from these selected clients. By integrating ZKPs, Veri-CS-FL not only ensures the accuracy of performance metrics but also fortifies trust among participants while enhancing the overall efficiency and security of FL systems.

A Survey of Zero-Knowledge Proof Based Verifiable Machine Learning

Feb 25, 2025

Abstract: As machine learning technologies advance rapidly across various domains, concerns over data privacy and model security have grown significantly. These challenges are particularly pronounced when models are trained and deployed on cloud platforms or third-party servers due to the computational resource limitations of users' end devices. In response, zero-knowledge proof (ZKP) technology has emerged as a promising solution, enabling effective validation of model performance and authenticity in both training and inference processes without disclosing sensitive data. Thus, ZKP ensures the verifiability and security of machine learning models, making it a valuable tool for privacy-preserving AI. Although some research has explored verifiable machine learning solutions that exploit ZKP, a comprehensive survey and summary of these efforts remain absent. This survey paper aims to bridge this gap by reviewing and analyzing all the existing Zero-Knowledge Machine Learning (ZKML) research from June 2017 to December 2024. We begin by introducing the concept of ZKML and outlining its ZKP algorithmic setups under three key categories: verifiable training, verifiable inference, and verifiable testing. Next, we provide a comprehensive categorization of existing ZKML research within these categories and analyze the works in detail. Furthermore, we explore the implementation challenges faced in this field and discuss the work that addresses these obstacles. Additionally, we highlight several commercial applications of ZKML technology. Finally, we propose promising directions for future advancements in this domain.

Unseen Attack Detection in Software-Defined Networking Using a BERT-Based Large Language Model

Dec 09, 2024

Abstract: Software-defined networking (SDN) represents a transformative shift in network architecture by decoupling the control plane from the data plane, enabling centralized and flexible management of network resources. However, this architectural shift introduces significant security challenges, as SDN's centralized control becomes an attractive target for various types of attacks. While current research has yielded valuable insights into attack detection in SDN, critical gaps remain. Addressing challenges in feature selection, broadening the scope beyond DDoS attacks, strengthening attack decisions based on multi-flow analysis, and building models capable of detecting unseen attacks that they have not been explicitly trained on are essential steps toward advancing security in SDN. In this paper, we introduce a novel approach that leverages Natural Language Processing (NLP) and the pre-trained BERT base model to enhance attack detection in SDN. Our approach transforms network flow data into a format interpretable by language models, allowing BERT to capture intricate patterns and relationships within network traffic. By using Random Forest for feature selection, we optimize model performance and reduce computational overhead, ensuring accurate detection. Attack decisions are made based on several flows, providing stronger and more reliable detection of malicious traffic. Furthermore, our approach is specifically designed to detect previously unseen attacks, offering a solution for identifying threats that the model was not explicitly trained on. To rigorously evaluate our approach, we conducted experiments in two scenarios: one focused on detecting known attacks, achieving 99.96% accuracy, and another on detecting unseen attacks, where our model achieved 99.96% accuracy, demonstrating the robustness of our approach in detecting evolving threats to improve the security of SDN networks.

A deep-learning-based MAC for integrating channel access, rate adaptation and channel switch

Jun 04, 2024

Abstract: With increasing density and heterogeneity in unlicensed wireless networks, traditional MAC protocols, such as carrier-sense multiple access with collision avoidance (CSMA/CA) in Wi-Fi networks, are experiencing performance degradation. This is manifested in increased collisions and extended backoff times, leading to diminished spectrum efficiency and protocol coordination. Addressing these issues, this paper proposes a deep-learning-based MAC paradigm, dubbed DL-MAC, which leverages spectrum sensing data readily available from energy detection modules in wireless devices to achieve the MAC functionalities of channel access, rate adaptation and channel switch. First, we utilize DL-MAC to realize a joint design of channel access and rate adaptation. Subsequently, we integrate the capability of channel switch into DL-MAC, enhancing its functionality from single-channel to multi-channel operation. Specifically, the DL-MAC protocol incorporates a deep neural network (DNN) for channel selection and a recurrent neural network (RNN) for the joint design of channel access and rate adaptation. We conducted real-world data collection within the 2.4 GHz frequency band to validate the effectiveness of DL-MAC, and our experiments reveal that DL-MAC exhibits superior performance over traditional algorithms in both single and multi-channel environments and also outperforms single-function approaches in terms of overall performance. Additionally, the performance of DL-MAC remains robust, unaffected by channel switch overhead within the evaluated range.

DedustNet: A Frequency-dominated Swin Transformer-based Wavelet Network for Agricultural Dust Removal

Jan 09, 2024

Abstract: While dust significantly affects the environmental perception of automated agricultural machines, existing deep learning-based dust removal methods require further research and improvement to raise the performance and reliability of these machines. We propose an end-to-end trainable learning network (DedustNet) to solve the real-world agricultural dust removal task. To our knowledge, DedustNet is the first wavelet network to use Swin Transformer-based units for agricultural image dedusting. Specifically, we present frequency-dominated blocks (the DWTFormer block and the IDWTFormer block), built by adding a spatial features aggregation scheme (SFAS) to the Swin Transformer and combining it with the wavelet transform, which alleviates the limitation of the Swin Transformer's global receptive field when dealing with complex dusty backgrounds. Furthermore, we propose a cross-level information fusion module to fuse different levels of features and effectively capture global and long-range feature relationships. In addition, we present a dilated convolution module to capture contextual information guided by wavelet transform at multiple scales, which combines the advantages of wavelet transform and dilated convolution. Our algorithm leverages deep learning techniques to effectively remove dust from images while preserving the original structural and textural features. Compared to existing state-of-the-art methods, DedustNet achieves superior performance and more reliable results in agricultural image dedusting, providing strong support for the application of agricultural machinery in dusty environments. Additionally, the impressive performance on real-world hazy datasets and application tests highlights DedustNet's superior generalization ability and computer vision-related application performance.

WaveletFormerNet: A Transformer-based Wavelet Network for Real-world Non-homogeneous and Dense Fog Removal

Jan 09, 2024

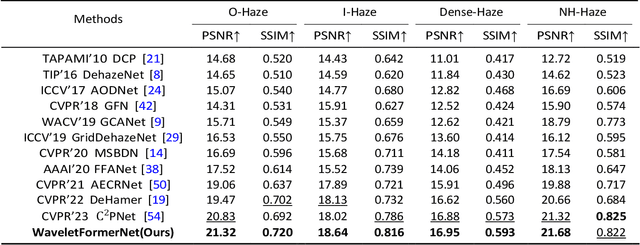

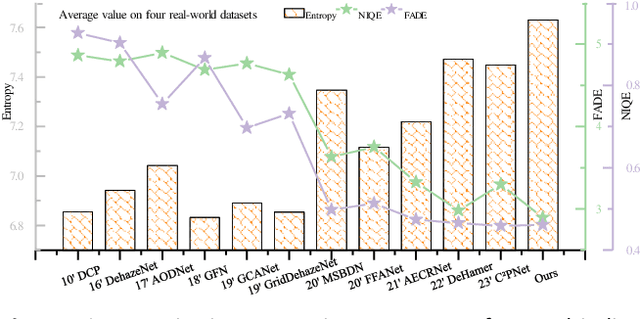

Abstract: Although deep convolutional neural networks have achieved remarkable success in removing synthetic fog, it is essential to be able to process images taken in complex foggy conditions, such as dense or non-homogeneous fog, in the real world. However, the haze distribution in the real world is complex, and downsampling can lead to color distortion or loss of detail in the output results as the resolution of a feature map or image decreases. In addition to the challenges of obtaining sufficient training data, overfitting can also arise in deep learning techniques for foggy image processing, which can limit the generalization abilities of the model, posing challenges for its practical applications in real-world scenarios. Considering these issues, this paper proposes a Transformer-based wavelet network (WaveletFormerNet) for real-world foggy image recovery. We embed the discrete wavelet transform into the Vision Transformer by proposing the WaveletFormer and IWaveletFormer blocks, aiming to alleviate texture detail loss and color distortion in the image due to downsampling. We introduce parallel convolution in the Transformer block, which allows for the capture of multi-frequency information in a lightweight mechanism. Additionally, we have implemented a feature aggregation module (FAM) to maintain image resolution and enhance the feature extraction capacity of our model, further contributing to its impressive performance in real-world foggy image recovery tasks. Extensive experiments demonstrate that our WaveletFormerNet performs better than state-of-the-art methods, as shown through quantitative and qualitative evaluations, with only minor model complexity. Additionally, our satisfactory results on real-world dust removal and application tests showcase the superior generalization ability and improved performance of WaveletFormerNet in computer vision-related applications.

Adaptive Task Offloading for Space Missions: A State-Graph-Based Approach

Nov 16, 2022

Abstract: Advances in space exploration have led to an explosion of tasks. Conventionally, these tasks are offloaded to ground servers for enhanced computing capability, or to adjacent low-earth-orbit satellites for reduced transmission delay. However, the overall delay is determined by both computation and transmission costs. The existing offloading schemes, while highly optimized for either cost, can be abysmal in overall performance. The computation-transmission cost dilemma is yet to be solved. In this paper, we propose an adaptive offloading scheme to reduce the overall delay. The core idea is to jointly model and optimize the transmission-computation process over the entire network. Specifically, to represent the computation state migrations, we generalize graph nodes with multiple states. In this way, the joint optimization problem is transformed into a shortest path problem over the state graph. We further provide an extended Dijkstra's algorithm for efficient path finding. Simulation results show that the proposed scheme outperforms the ground and one-hop offloading schemes by up to 37.56% and 39.35% respectively on SpaceCube v2.0.
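
The state-graph idea in the abstract above can be illustrated with a minimal sketch. It is not the paper's extended Dijkstra's algorithm: it runs ordinary Dijkstra over explicitly expanded `(node, state)` pairs, and everything in it is an assumption made for illustration, including the function name `min_total_delay`, the two-state model (0 = raw task, 1 = computed result), the per-unit link delays, and the example topology.

```python
import heapq


def min_total_delay(links, compute_delay, data_size, source, dest):
    """Plain Dijkstra over (node, state) pairs (illustrative assumption).

    links:         {node: [(neighbor, seconds_per_unit_of_data), ...]}
    compute_delay: {node: seconds needed to process the task on that node}
    data_size:     (size_of_raw_task, size_of_computed_result)
    State 0 means the task is still raw; state 1 means it has been computed.
    """
    start, goal = (source, 0), (dest, 1)
    best = {start: 0.0}
    heap = [(0.0, start)]
    while heap:
        delay, (node, state) = heapq.heappop(heap)
        if (node, state) == goal:
            return delay
        if delay > best.get((node, state), float("inf")):
            continue  # stale heap entry
        moves = []
        # Transmission edge: move to a neighbor, state unchanged.
        for neighbor, per_unit in links.get(node, []):
            moves.append(((neighbor, state), per_unit * data_size[state]))
        # Computation edge: stay on the node, state 0 -> 1.
        if state == 0 and node in compute_delay:
            moves.append(((node, 1), compute_delay[node]))
        for nxt, cost in moves:
            candidate = delay + cost
            if candidate < best.get(nxt, float("inf")):
                best[nxt] = candidate
                heapq.heappush(heap, (candidate, nxt))
    return float("inf")  # destination unreachable in the computed state


# Hypothetical three-node network: a satellite, a LEO relay, a ground server.
example_links = {
    "sat": [("leo", 1.0), ("ground", 3.0)],
    "leo": [("ground", 2.0)],
    "ground": [],
}
example_compute = {"sat": 10.0, "leo": 2.0, "ground": 1.0}
print(min_total_delay(example_links, example_compute, (2.0, 0.5), "sat", "ground"))  # → 5.0
```

In this made-up example, computing on the relay (2.0 to send the raw task, 2.0 to compute, 1.0 to send the smaller result) beats both computing locally (11.5) and shipping the raw task to the ground (7.0), which is the kind of trade-off between computation and transmission cost the abstract describes.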

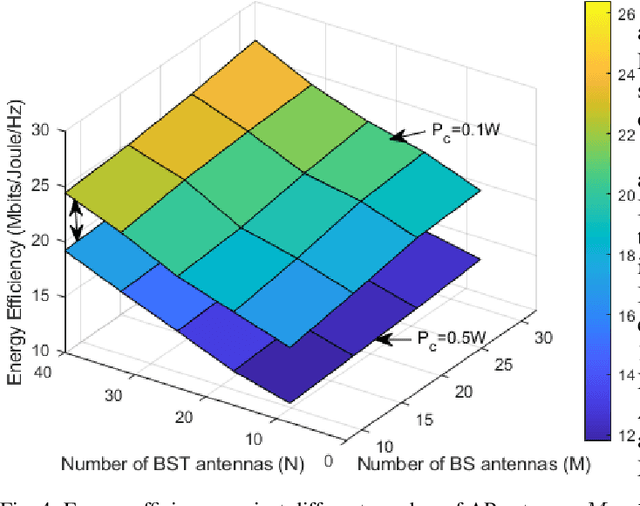

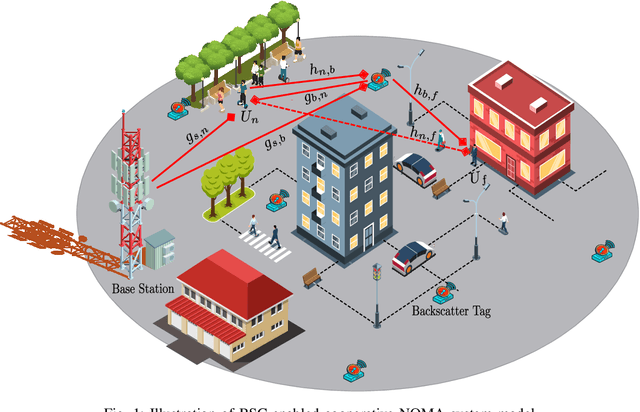

Energy-Efficient Beamforming and Resource Optimization for AmBSC-Assisted Cooperative NOMA IoT Networks

Sep 11, 2022

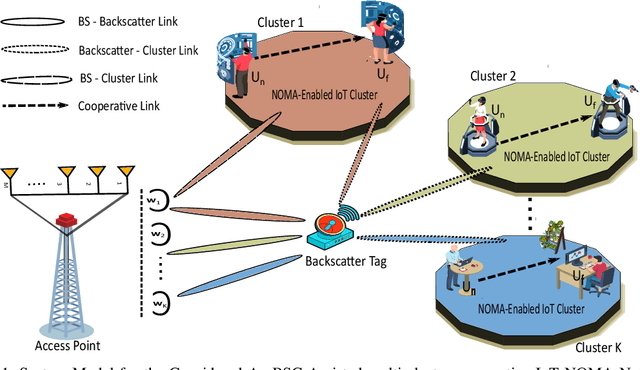

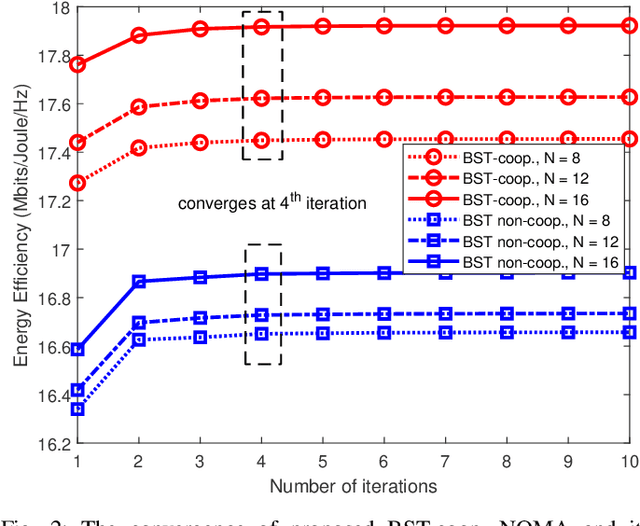

Abstract: In this manuscript, we present an energy-efficient alternating optimization framework based on the multi-antenna ambient backscatter communication (AmBSC) assisted cooperative non-orthogonal multiple access (NOMA) for next-generation (NG) internet-of-things (IoT) enabled communication networks. Specifically, the energy-efficiency maximization is achieved for the considered AmBSC-enabled multi-cluster cooperative IoT NOMA system by optimizing the active-beamforming vector and power-allocation coefficients (PAC) of IoT NOMA users at the transmitter, as well as the passive-beamforming vector at the multi-antenna assisted backscatter node. Usually, increasing the number of IoT NOMA users in each cluster results in inter-cluster interference (ICI) (among different clusters) and intra-cluster interference (among IoT NOMA users). To combat the impact of ICI, we exploit zero-forcing (ZF) based active beamforming, as well as an efficient clustering technique at the source node. Further, the effect of intra-cluster interference is mitigated by exploiting an efficient power-allocation policy that determines the PAC of IoT NOMA users under the quality-of-service (QoS), cooperation, SIC decoding, and power-budget constraints. Moreover, the considered non-convex passive-beamforming problem is transformed into a standard semi-definite programming (SDP) problem by exploiting the successive-convex approximation (SCA), as well as the difference of convex (DC) programming, where the Rank-1 solution of passive-beamforming is obtained based on the penalty-based method. Furthermore, the numerical analysis of simulation results demonstrates that the proposed energy-efficiency maximization algorithm exhibits an efficient performance by achieving convergence within only a few iterations.

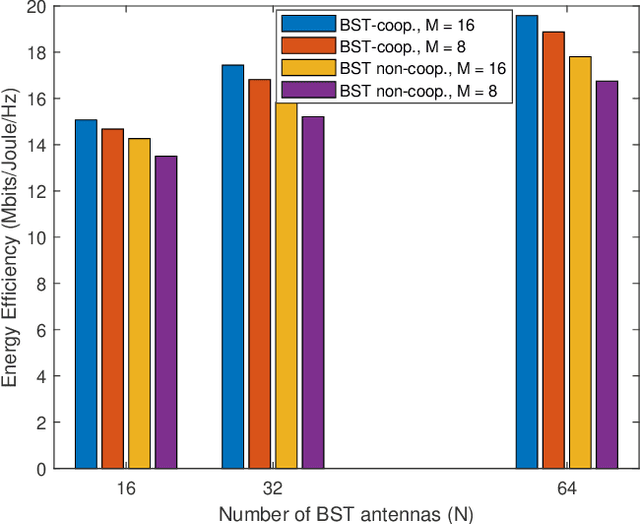

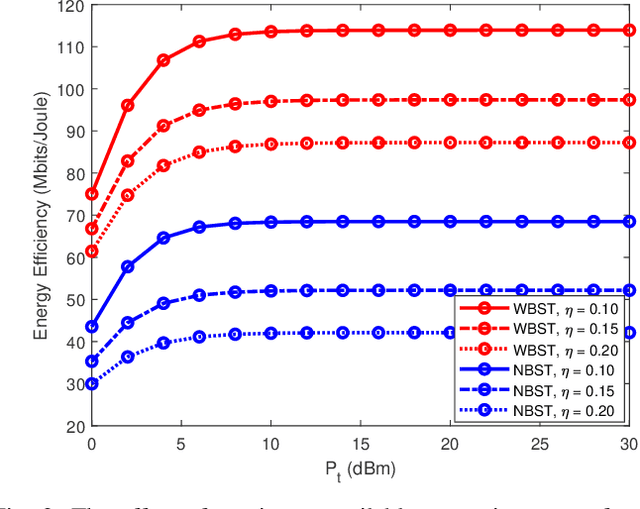

Energy Efficiency Maximization for Backscatter-Enabled Coded-Cooperative NOMA Under Imperfect SIC

Aug 01, 2022

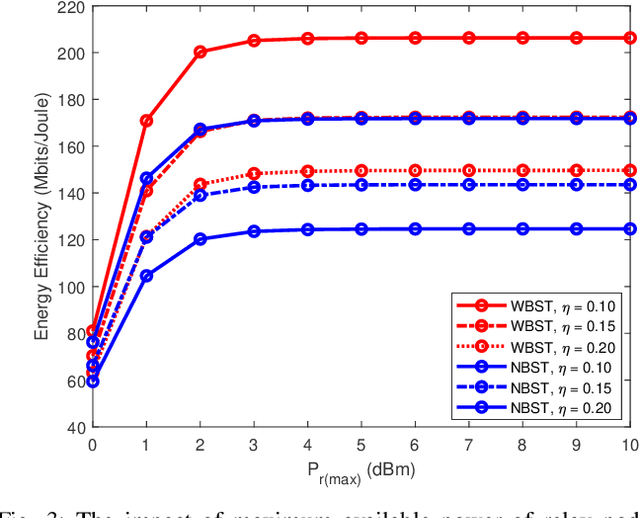

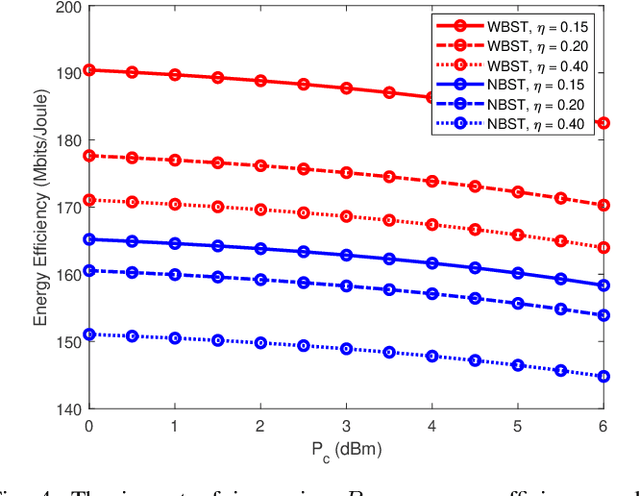

Abstract: In this manuscript, we propose an optimization framework to maximize the energy efficiency of the BSC-enabled cooperative NOMA system under imperfect successive interference cancellation (SIC) decoding at the receiver. Specifically, the energy efficiency of the system is maximized by optimizing the transmit power of the source, power allocation coefficients (PAC) of NOMA users, and power of the relay node. A low-complexity energy-efficient alternating optimization framework is introduced which simultaneously optimizes the transmit power of the source, PAC, and power of the relay node by considering the quality of service (QoS), power budget, and cooperation constraints under the imperfect SIC decoding. Subsequently, a joint channel coding framework is provided to enhance the performance of the far user, which has no direct communication link with the base station (BS) and has bad channel conditions. In the destination node, the far user data is jointly decoded using a Sum-product algorithm (SPA) based joint iterative decoder realized by jointly-designed Quasi-cyclic Low-density parity-check (QC-LDPC) codes obtained from cyclic balanced sampling plans excluding contiguous units (CBSEC). Simulation results evince that the proposed BSC-enabled cooperative NOMA system outperforms its counterpart by providing an efficient performance in terms of energy efficiency. Also, the proposed jointly-designed QC-LDPC codes provide an excellent bit-error-rate (BER) performance by jointly decoding the far user data for the considered BSC cooperative NOMA system with only a few decoding iterations under Rayleigh-fading transmission.
