Naijin Liu

Adaptive Task Offloading for Space Missions: A State-Graph-Based Approach

Nov 16, 2022

Abstract:Advances in space exploration have led to an explosion of tasks. Conventionally, these tasks are offloaded to ground servers for enhanced computing capability, or to adjacent low-earth-orbit satellites for reduced transmission delay. However, the overall delay is determined by both computation and transmission costs. The existing offloading schemes, while highly optimized for either cost, can be abysmal for the overall performance. The computation-transmission cost dilemma is yet to be solved. In this paper, we propose an adaptive offloading scheme to reduce the overall delay. The core idea is to jointly model and optimize the transmission-computation process over the entire network. Specifically, to represent the computation state migrations, we generalize graph nodes with multiple states. In this way, the joint optimization problem is transformed into a shortest path problem over the state graph. We further provide an extended Dijkstra's algorithm for efficient path finding. Simulation results show that the proposed scheme outperforms the ground and one-hop offloading schemes by up to 37.56% and 39.35% respectively on SpaceCube v2.0.
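The shortest-path formulation in this abstract can be illustrated with a minimal sketch. It is not the paper's algorithm: it assumes only two states per node (task still raw, task already computed), a per-unit-data link delay, and a fixed per-node compute delay. The function name, the parameters, and the toy network below are all hypothetical.

```python
import heapq

def state_graph_shortest_delay(links, compute_delay, source, dest,
                               raw_size, result_size):
    """Dijkstra over (node, state) pairs.

    links:         node -> list of (neighbor, seconds per unit of data)
    compute_delay: node -> seconds to process the task there
                   (nodes missing from the dict cannot compute)
    state 0 = task data is still raw, state 1 = task has been computed.
    All of these modelling choices are assumptions made for illustration.
    """
    best = {(source, 0): 0.0}
    heap = [(0.0, source, 0)]
    while heap:
        delay, node, state = heapq.heappop(heap)
        if delay > best.get((node, state), float("inf")):
            continue  # stale heap entry
        if node == dest and state == 1:
            return delay
        # State migration: compute the task at the current node.
        if state == 0 and node in compute_delay:
            cand = delay + compute_delay[node]
            if cand < best.get((node, 1), float("inf")):
                best[(node, 1)] = cand
                heapq.heappush(heap, (cand, node, 1))
        # Transmission: raw data before computing, the result afterwards.
        size = raw_size if state == 0 else result_size
        for neighbor, per_unit in links.get(node, []):
            cand = delay + per_unit * size
            if cand < best.get((neighbor, state), float("inf")):
                best[(neighbor, state)] = cand
                heapq.heappush(heap, (cand, neighbor, state))
    return float("inf")

# Hypothetical three-node network: a mission satellite, a LEO relay, a ground server.
links = {
    "sat": [("leo", 0.1), ("ground", 0.5)],
    "leo": [("sat", 0.1), ("ground", 0.2)],
    "ground": [],
}
compute_delay = {"sat": 10.0, "leo": 4.0, "ground": 1.0}
total = state_graph_shortest_delay(links, compute_delay, "sat", "ground",
                                   raw_size=10.0, result_size=1.0)
```

In this toy setup the search is free to compute on board, at the relay, or on the ground, so the computation-transmission trade-off is resolved by the path search itself rather than fixed in advance.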

Adversarial Jamming for a More Effective Constellation Attack

Jan 20, 2022

Abstract:The common jamming mode in wireless communication is band barrage jamming, which is controllable and difficult to resist. Although this method is simple to implement, it is clearly not the best jamming waveform. Therefore, based on the idea of adversarial examples, we propose the adversarial jamming waveform, which can independently optimize and find the best jamming waveform. We attack QAM with adversarial jamming and find that the optimal jamming waveform is equivalent to the amplitude and phase between the nearest constellation points. Furthermore, by verifying the jamming performance on a hardware platform, it is shown that our method significantly increases the bit error rate compared to other methods.
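The geometric claim about nearest constellation points can be illustrated with a small sketch. It does not reproduce the paper's optimization: it assumes an unnormalized 16-QAM grid, a noise-free channel, and a minimum-distance detector, and all function names are hypothetical.

```python
def qam16():
    """Unnormalized 16-QAM constellation on the grid {-3, -1, 1, 3}^2."""
    levels = (-3, -1, 1, 3)
    return [complex(i, q) for i in levels for q in levels]

def nearest_neighbor_offset(symbol, constellation):
    """Vector (amplitude and phase) from `symbol` to its closest other point."""
    others = [p for p in constellation if p != symbol]
    nearest = min(others, key=lambda p: abs(p - symbol))
    return nearest - symbol

def decide(received, constellation):
    """Minimum-distance detection, assumed here as the receiver model."""
    return min(constellation, key=lambda p: abs(received - p))

points = qam16()
symbol = complex(1, 1)
offset = nearest_neighbor_offset(symbol, points)

# A perturbation just past the halfway point along `offset` flips the
# decision; a smaller one in the same direction does not.
flipped = decide(symbol + 0.6 * offset, points)
kept = decide(symbol + 0.4 * offset, points)
```

Under these assumptions, a perturbation aligned with the nearest-neighbor direction crosses the decision boundary with the least energy, which is consistent with the finding reported in the abstract.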

Low-Interception Waveform: To Prevent the Recognition of Spectrum Waveform Modulation via Adversarial Examples

Jan 20, 2022

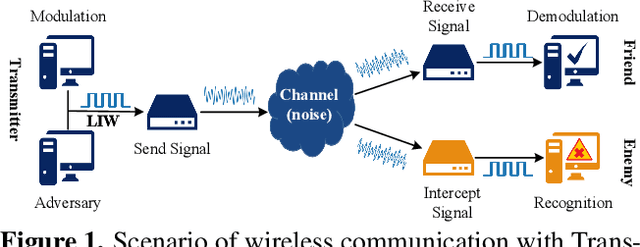

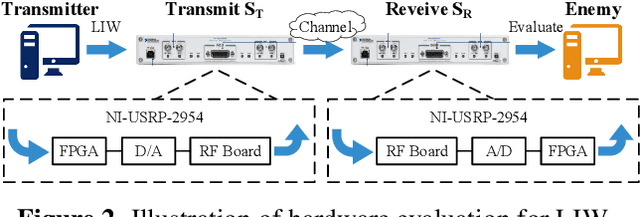

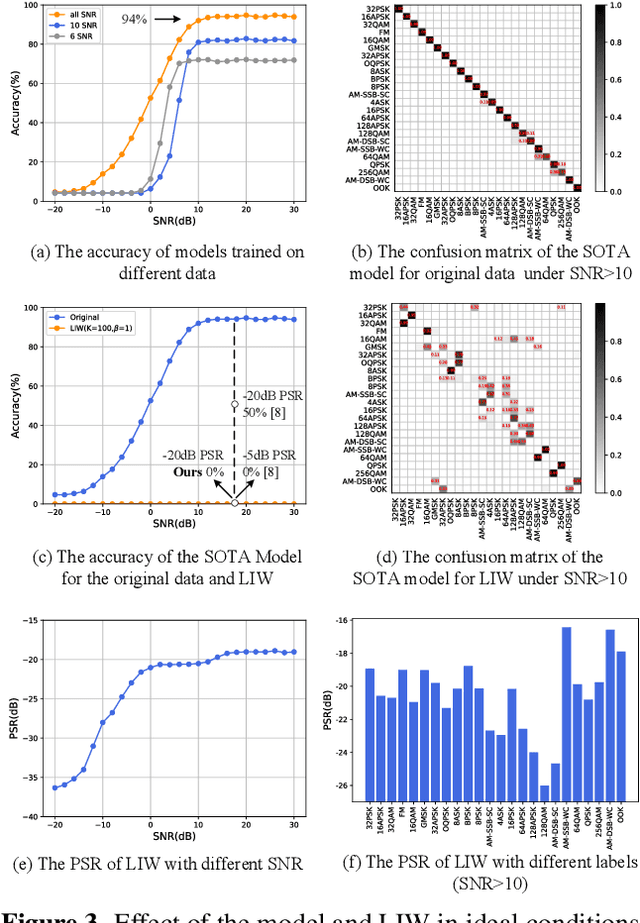

Abstract:Deep learning is applied to many complex tasks in the field of wireless communication, such as modulation recognition of spectrum waveforms, because of its convenience and efficiency. This leads to the problem of a malicious third party using a deep learning model to easily recognize the modulation format of the transmitted waveform. Some existing works address this problem directly using the concept of adversarial examples in the image domain without fully considering the characteristics of the waveform transmission in the physical world. Therefore, we propose a low-intercept waveform (LIW) generation method that can reduce the probability of the modulation being recognized by a third party without affecting the reliable communication of the friendly party. Our LIW exhibits significant low-interception performance even in the physical hardware experiment, decreasing the accuracy of the state-of-the-art model to approximately 15% with small perturbations.

* 4 pages, 4 figures, published in 2021 34th General Assembly and Scientific Symposium of the International Union of Radio Science, URSI GASS 2021

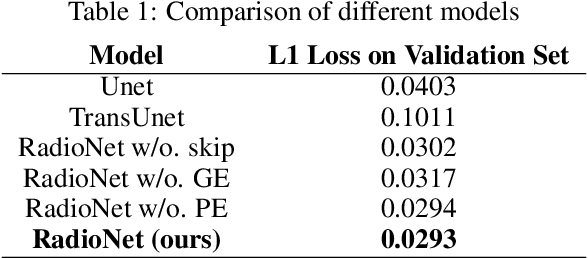

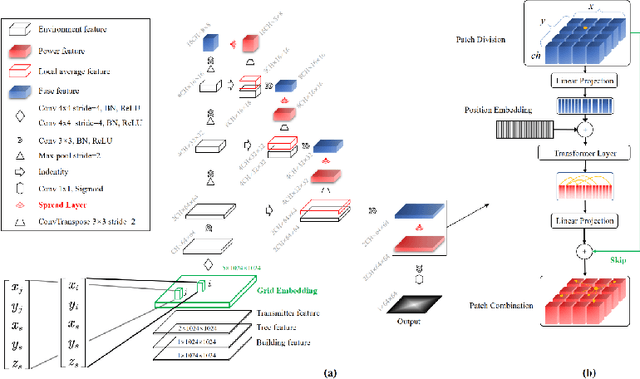

RadioNet: Transformer based Radio Map Prediction Model For Dense Urban Environments

May 15, 2021

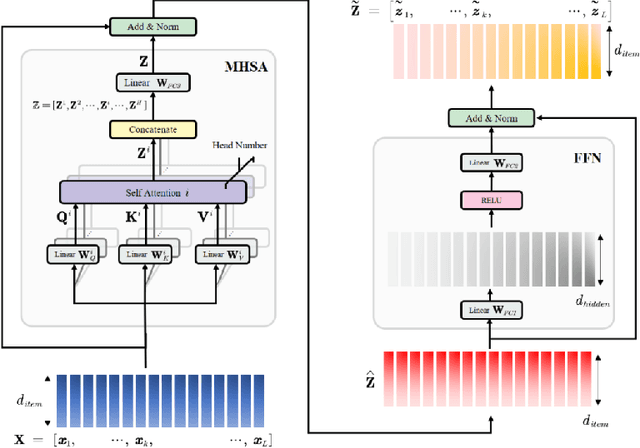

Abstract:Radio Map Prediction (RMP), which aims to estimate the coverage of radio waves, has been widely recognized as an enabling technology for improving radio spectrum efficiency. However, fast and reliable radio map prediction can be very challenging due to the complicated interaction between radio waves and the environment. In this paper, a novel Transformer-based deep learning model termed RadioNet is proposed for radio map prediction in urban scenarios. In addition, a novel Grid Embedding technique is proposed to substitute the original Position Embedding in the Transformer to better anchor the relative position of the radiation source, destination and environment. The effectiveness of the proposed method is verified on an urban radio wave propagation dataset. Compared with the SOTA model on the RMP task, RadioNet reduces the validation loss by 27.3% and improves the prediction reliability from 90.9% to 98.9%. The prediction speed is increased by 4 orders of magnitude compared with the ray-tracing based method. We believe that the proposed method will be beneficial to high-efficiency wireless communication, real-time radio visualization, and even high-speed image rendering.

Mean Field MARL Based Bandwidth Negotiation Method for Massive Devices Spectrum Sharing

Apr 30, 2021

Abstract:In this paper, a novel bandwidth negotiation mechanism is proposed for wireless spectrum sharing among massive numbers of devices, in which each device locally negotiates bandwidth usage with neighboring devices and globally optimal spectrum utilization is achieved through distributed decision-making. Since only sparse feedback is needed, the proposed mechanism can greatly reduce the signaling overhead. To solve the distributed optimization problem when massive numbers of devices coexist, a mean field multi-agent reinforcement learning (MF-MARL) based bandwidth decision algorithm is proposed, which allows each device to make globally optimal decisions using only neighborhood observations. In simulation, distributed bandwidth negotiation between 1000 devices is demonstrated and the spectrum utilization rate is above 95%. The proposed method helps reduce spectrum conflicts and increase spectrum utilization in spectrum sharing among massive numbers of devices.

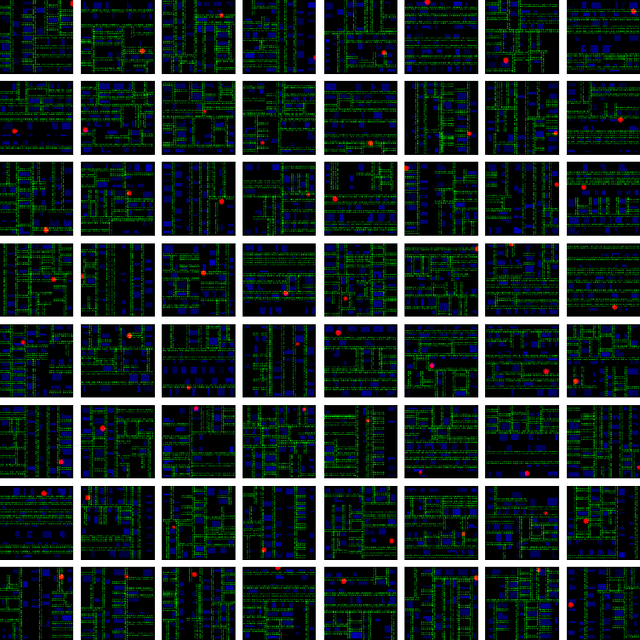

Noise Attention based Spectrum Anomaly Detection Method for Unauthorized Bands

Apr 17, 2021

Abstract:Spectrum anomaly detection is of great importance in wireless communication to ensure safety and improve spectrum efficiency. However, spectrum anomaly detection faces many difficulties, especially in unauthorized frequency bands. For example, the composition of unauthorized frequency bands is very complex and the abnormal usage patterns are not known a priori. In this paper, a noise attention method is proposed for unsupervised spectrum anomaly detection in unauthorized bands. First, we theoretically prove that anomalies in unauthorized bands will raise the noise floor of the spectrogram after VAE reconstruction. Then, we introduce a novel anomaly metric, named the noise attention score, to capture spectrum anomalies more effectively. The effectiveness of the proposed method is experimentally verified in the 2.4 GHz ISM band. Leveraging the noise attention score, the AUC metric of anomaly detection is increased by 0.193. The proposed method helps to reliably detect abnormal spectrum usage while keeping a low false alarm rate.

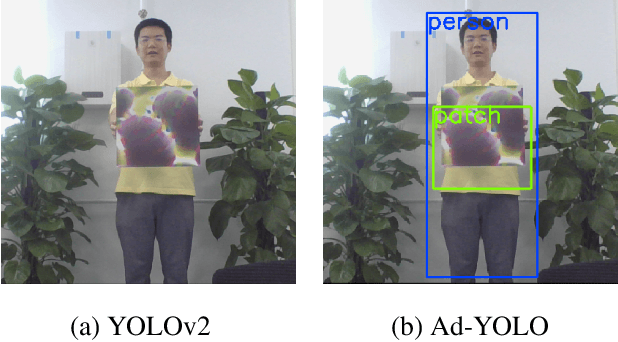

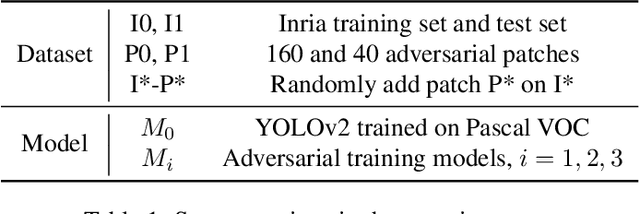

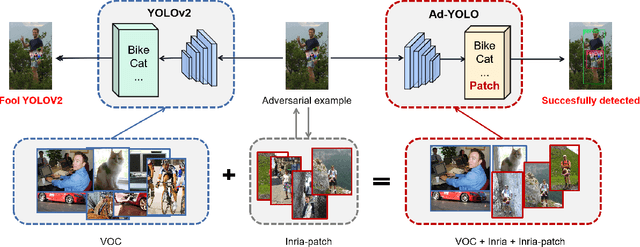

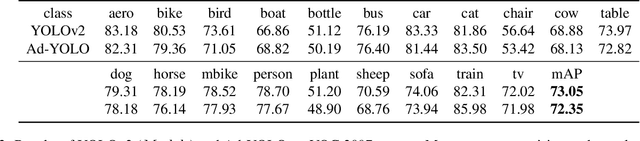

Adversarial YOLO: Defense Human Detection Patch Attacks via Detecting Adversarial Patches

Mar 16, 2021

Abstract:The security of object detection systems has attracted increasing attention, especially when facing adversarial patch attacks. Since patch attacks change the pixels in a restricted area on objects, they are easy to implement in the physical world, especially for attacking human detection systems. The existing defenses against patch attacks are mostly applied to image classification problems and have difficulty resisting human detection attacks. To address this critical issue, we propose an efficient and effective plug-in defense component on the YOLO detection system, which we name Ad-YOLO. The main idea is to add a patch class to the YOLO architecture, which incurs a negligible increase in inference cost. Thus, Ad-YOLO is expected to directly detect both the objects of interest and adversarial patches. To the best of our knowledge, our approach is the first defense strategy against human detection attacks. We investigate Ad-YOLO's performance on the YOLOv2 baseline. To improve the ability of Ad-YOLO to detect a variety of patches, we first use an adversarial training process to develop a patch dataset based on the Inria dataset, which we name Inria-Patch. Then, we train Ad-YOLO on a combination of the Pascal VOC, Inria, and Inria-Patch datasets. With a slight drop of 0.70% mAP on the VOC 2007 test set, Ad-YOLO achieves 80.31% AP for persons, which greatly outperforms the 33.93% AP of YOLOv2 when facing white-box patch attacks. Furthermore, results under a physical-world attack, compared with YOLOv2, are also included to demonstrate Ad-YOLO's excellent generalization ability.

Blind Adversarial Pruning: Balance Accuracy, Efficiency and Robustness

Apr 10, 2020

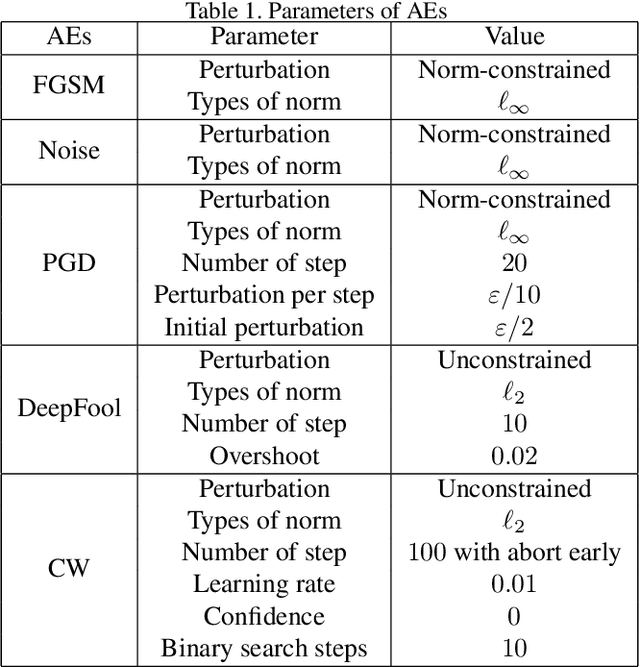

Abstract:With the growth of interest in the attack and defense of deep neural networks, researchers are focusing more on the robustness of applying them to devices with limited memory. Thus, unlike adversarial training, which only considers the balance between accuracy and robustness, we come to a more meaningful and critical issue, i.e., the balance among accuracy, efficiency and robustness (AER). Recently, some related works focused on this issue, but with different observations, and the relations among AER remain unclear. This paper first investigates the robustness of pruned models with different compression ratios under the gradual pruning process and concludes that the robustness of the pruned model drastically varies with different pruning processes, especially in response to attacks with large strength. Second, we test the performance of mixing the clean data and adversarial examples (generated with a prescribed uniform budget) into the gradual pruning process, called adversarial pruning, and find the following: the pruned model's robustness exhibits high sensitivity to the budget. Furthermore, to better balance the AER, we propose an approach called blind adversarial pruning (BAP), which introduces the idea of blind adversarial training into the gradual pruning process. The main idea is to use a cutoff-scale strategy to adaptively estimate a nonuniform budget to modify the AEs used during pruning, thus ensuring that the strengths of AEs are dynamically located within a reasonable range at each pruning step and ultimately improving the overall AER of the pruned model. The experimental results obtained using BAP for pruning classification models based on several benchmarks demonstrate the competitive performance of this method: the robustness of the model pruned by BAP is more stable among varying pruning processes, and BAP exhibits better overall AER than adversarial pruning.

Blind Adversarial Training: Balance Accuracy and Robustness

Apr 10, 2020

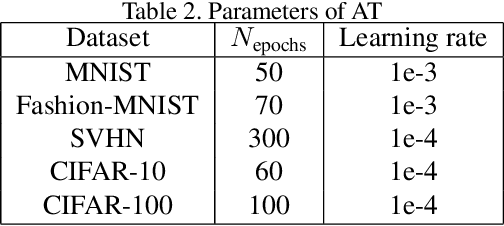

Abstract:Adversarial training (AT) aims to improve the robustness of deep learning models by mixing clean data and adversarial examples (AEs). Most existing AT approaches can be grouped into restricted and unrestricted approaches. Restricted AT requires a prescribed uniform budget to constrain the magnitude of the AE perturbations during training, with the obtained results showing high sensitivity to the budget. On the other hand, unrestricted AT uses unconstrained AEs, resulting in the use of AEs located beyond the decision boundary; these overestimated AEs significantly lower the accuracy on clean data. These limitations mean that the existing AT approaches have difficulty in obtaining a comprehensively robust model with high accuracy and robustness when confronting attacks with varying strengths. Considering this problem, this paper proposes a novel AT approach named blind adversarial training (BAT) to better balance the accuracy and robustness. The main idea of this approach is to use a cutoff-scale strategy to adaptively estimate a nonuniform budget to modify the AEs used in the training, ensuring that the strengths of the AEs are dynamically located in a reasonable range and ultimately improving the overall robustness of the AT model. The experimental results obtained using BAT for training classification models on several benchmarks demonstrate the competitive performance of this method.
