Shaohui Foong

Analyzing Swimming Performance Using Drone Captured Aerial Videos

Mar 17, 2025

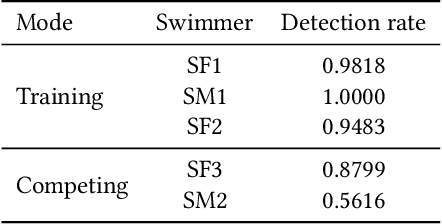

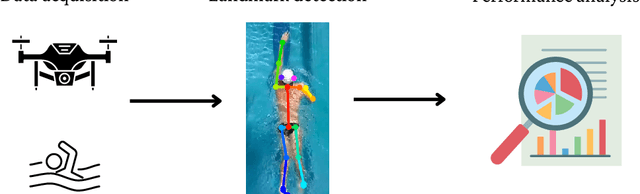

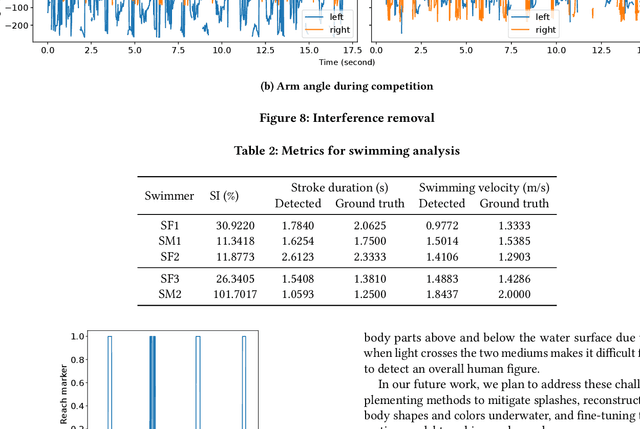

Abstract: Monitoring swimmer performance is crucial for improving training and enhancing athletic techniques. Traditional methods for tracking swimmers, such as above-water and underwater cameras, face limitations due to the need for multiple cameras and obstructions from water splashes. This paper presents a novel approach for tracking swimmers using a moving UAV. The proposed system employs a UAV equipped with a high-resolution camera to capture aerial footage of the swimmers. The footage is then processed using computer vision algorithms to extract the swimmers' positions and movements. This approach offers several advantages, including single-camera use and comprehensive coverage. The system's accuracy is evaluated with both training and in-competition videos. The results demonstrate the system's ability to accurately track swimmers' movements, limb angles, stroke duration and velocity, with maximum errors of 0.3 seconds for stroke duration and 0.35 m/s for velocity.

Distance Measurement for UAVs in Deep Hazardous Tunnels

Sep 11, 2024

Abstract: The localization of unmanned aerial vehicles (UAVs) in deep tunnels is extremely challenging due to their inaccessibility and hazardous environment. Conventional outdoor localization techniques (such as GPS) and indoor localization techniques (such as those based on WiFi, Infrared (IR) or Ultra-Wideband) do not work in deep tunnels. We are developing a UAV-based system for the inspection of defects in the Deep Tunnel Sewerage System (DTSS) in Singapore. To enable UAV localization in the DTSS, we have developed a distance measurement module based on the optical flow technique. However, the standard optical flow technique does not work well in tunnels with poor lighting and a lack of features. Thus, we have developed an enhanced optical flow algorithm with prediction to improve distance measurement for UAVs in deep hazardous tunnels.
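
The abstract does not give the details of the enhanced algorithm, so the following is only a minimal sketch of the general idea: accumulate travelled distance from per-frame optical flow and fall back to a prediction when the flow is unreliable. The pinhole conversion, the `quality` score, the `min_quality` threshold and the "repeat the last trusted step" predictor are all assumptions made for illustration, not the authors' method.

```python
from dataclasses import dataclass


@dataclass
class DistanceEstimator:
    """Hypothetical distance accumulator driven by optical flow."""

    focal_px: float            # focal length in pixels (assumed known from calibration)
    range_m: float             # camera-to-surface distance in metres (assumed known)
    min_quality: float = 0.5   # assumed threshold on a flow quality score in [0, 1]
    distance_m: float = 0.0    # accumulated travelled distance
    last_step_m: float = 0.0   # last trusted per-frame displacement

    def update(self, flow_px: float, quality: float) -> float:
        """Add one frame's displacement and return the running distance."""
        if quality >= self.min_quality:
            # Pinhole model: metric shift = pixel shift * range / focal length.
            step = flow_px * self.range_m / self.focal_px
            self.last_step_m = step
        else:
            # Poor lighting or too few features: predict by repeating
            # the last trusted step (constant-velocity assumption).
            step = self.last_step_m
        self.distance_m += step
        return self.distance_m


est = DistanceEstimator(focal_px=500.0, range_m=2.0)
est.update(flow_px=25.0, quality=0.9)   # trusted measurement
est.update(flow_px=0.0, quality=0.1)    # unreliable frame, predicted instead
```

A real system would likely replace the constant-velocity hold with a proper filter, but the structure (measure when possible, predict otherwise) is the same.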

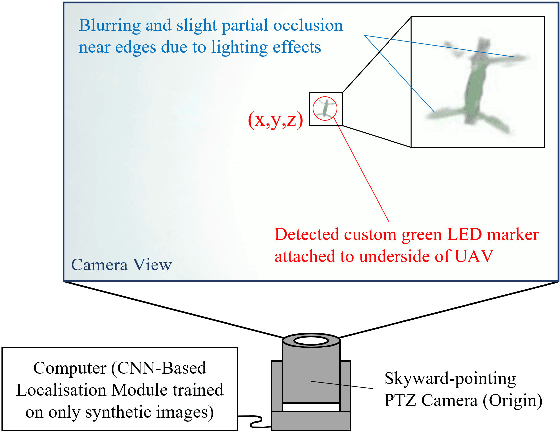

Marker-Based Localisation System Using an Active PTZ Camera and CNN-Based Ellipse Detection

Nov 06, 2023

Abstract: Localisation in GPS-denied environments is challenging and many existing solutions have infrastructural and on-site calibration requirements. This paper tackles these challenges by proposing a localisation system that is infrastructure-free and does not require on-site calibration, using a single active PTZ camera to detect, track and localise a circular LED marker. We propose to use a CNN trained using only synthetic images to detect the LED marker as an ellipse and show that our approach is more robust than using traditional ellipse detection without requiring tuning of parameters for feature extraction. We also propose to leverage the predicted elliptical angle as a measure of uncertainty of the CNN's predictions and show how it can be used in a filter to improve marker range estimation and 3D localisation. We evaluate our system's performance through localisation of a UAV in real-world flight experiments and show that it can outperform alternative methods for localisation in GPS-denied environments. We also demonstrate our system's performance in indoor and outdoor environments.
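
To illustrate why detecting the circular marker as an ellipse gives a range estimate, here is a minimal sketch under a plain pinhole-camera assumption. The function name and parameters are hypothetical, and the paper's filter and uncertainty handling are not reproduced. The sketch relies on the fact that the diameter of a circle perpendicular to the viewing direction is not foreshortened, so the ellipse's major axis approximately scales as focal length times diameter over range.

```python
def marker_range_m(focal_px: float, marker_diameter_m: float,
                   major_axis_px: float) -> float:
    """Approximate camera-to-marker range from the detected ellipse.

    focal_px          focal length in pixels at the current zoom (assumed known)
    marker_diameter_m physical diameter of the circular marker
    major_axis_px     full length of the detected ellipse's major axis
    """
    if major_axis_px <= 0:
        raise ValueError("major axis must be positive")
    # Pinhole approximation: major_axis_px ~= focal_px * diameter / range.
    return focal_px * marker_diameter_m / major_axis_px


# A 0.2 m marker that appears 20 px wide with a 1000 px focal length
# is roughly 10 m away.
print(marker_range_m(1000.0, 0.2, 20.0))
```

Because the estimate is inversely proportional to the measured axis length, small pixel errors matter more at long range, which is one reason an uncertainty-aware filter is useful.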

Monocular UAV Localisation with Deep Learning and Uncertainty Propagation

Nov 06, 2023

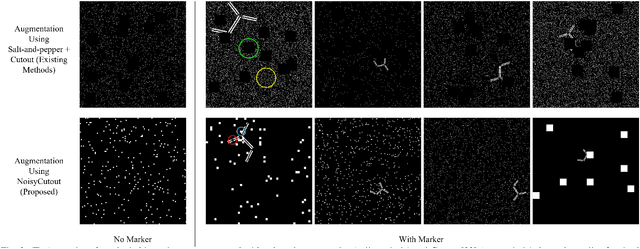

Abstract: In this paper, we propose a ground-based monocular UAV localisation system that detects and localises an LED marker attached to the underside of a UAV. Our system removes the need for extensive infrastructure and calibration, unlike existing technologies such as UWB, radio frequency and multi-camera systems often used for localisation in GPS-denied environments. To improve deployability for real-world applications without the need to collect an extensive real dataset, we train a CNN on synthetic binary images, as opposed to the real images used in existing monocular UAV localisation methods, and factor in the camera's zoom to allow tracking of UAVs flying at greater distances. We propose the NoisyCutout algorithm for augmenting synthetic binary images to simulate binary images processed from real images and show that it improves localisation accuracy compared to existing salt-and-pepper and Cutout augmentation methods. We also leverage uncertainty propagation to modify the CNN's loss function and show that this also improves localisation accuracy. Real-world experiments are conducted to evaluate our methods and we achieve an overall 3D RMSE of approximately 0.41 m.

Initialisation of Autonomous Aircraft Visual Inspection Systems via CNN-Based Camera Pose Estimation

Nov 06, 2023

Abstract: General Visual Inspection is a manual inspection process regularly used to detect and localise obvious damage on the exterior of commercial aircraft. There has been increasing demand to perform this process at the boarding gate to minimize the downtime of the aircraft, and automating this process is desired to reduce the reliance on human labour. This automation typically requires the first step of estimating a camera's pose with respect to the aircraft for initialisation. However, localisation methods often require infrastructure, which can be very challenging to set up in uncontrolled outdoor environments and within the limited turnover time (approximately 2 hours) on an airport tarmac. In addition, access to commercial aircraft can be very restricted, making development and testing of solutions a challenge. Hence, this paper proposes an on-site infrastructure-less initialisation method that uses the same pan-tilt-zoom camera used for the inspection task to estimate its own pose. This is achieved using a Deep Convolutional Neural Network trained with only synthetic images to regress the camera's pose. We apply domain randomisation when generating our training dataset and improve prediction accuracy by introducing a new component to an existing loss function that leverages known aircraft geometry to relate position and orientation. Experiments are conducted and we have successfully regressed camera poses with a median error of 0.22 m and 0.73 degrees.

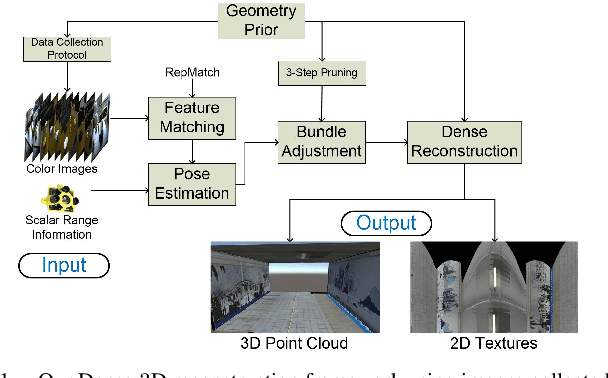

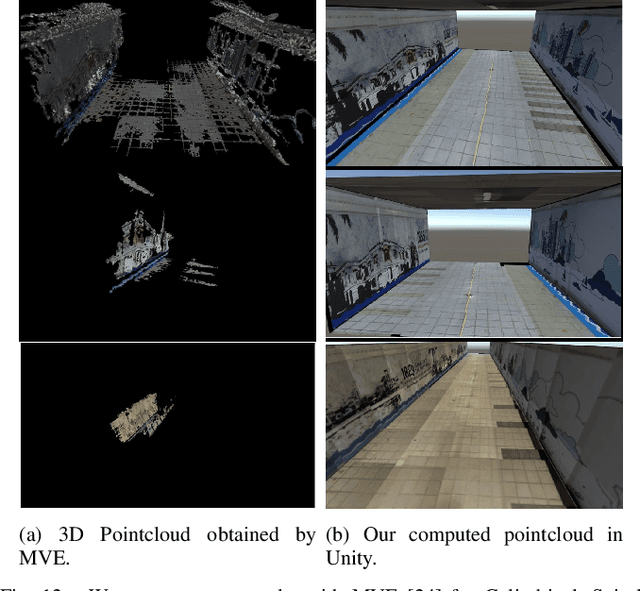

Dense 3D Reconstruction for Visual Tunnel Inspection using Unmanned Aerial Vehicle

Nov 09, 2019

Abstract: Advances in Unmanned Aerial Vehicles (UAVs) open avenues for applications such as tunnel inspection. Owing to their ability to fly inside tunnels, UAVs can quickly identify defects and potential safety problems. However, long tunnels, especially those with repetitive or uniform structures, pose a significant problem for UAV navigation. Furthermore, post-processing of visual data from the camera mounted on the UAV is required to generate useful information for the inspection task. In this work, we design a UAV with a single rotating camera to accomplish the task. Compared to other platforms, our solution meets the stringent requirements for tunnel inspection in terms of battery life, size and weight. While current state-of-the-art methods can estimate camera pose and 3D geometry from a sequence of images, they assume large overlap, small rotational motion, and many distinct matching points between images. These assumptions severely limit their effectiveness in tunnel-like scenarios where the camera has erratic or large rotational motion, such as one mounted on a UAV. This paper presents a novel solution which exploits Structure-from-Motion, Bundle Adjustment, and available geometry priors to robustly estimate camera pose and automatically reconstruct a fully dense 3D scene using the least possible number of images in various challenging tunnel-like environments. We validate our system with both a Virtual Reality application and experiments on a real dataset. The results demonstrate that the proposed reconstruction, along with texture mapping, allows for remote navigation and inspection of tunnel-like environments, even those which are inaccessible to humans.

* 8 pages, 12 figures

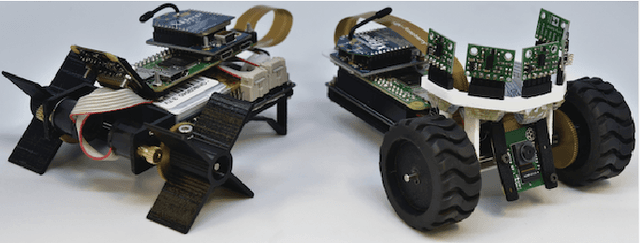

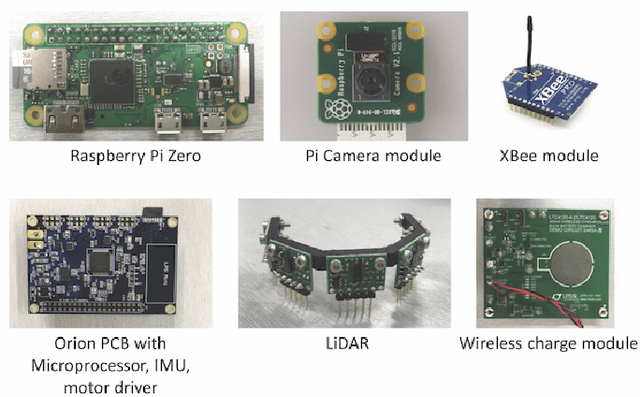

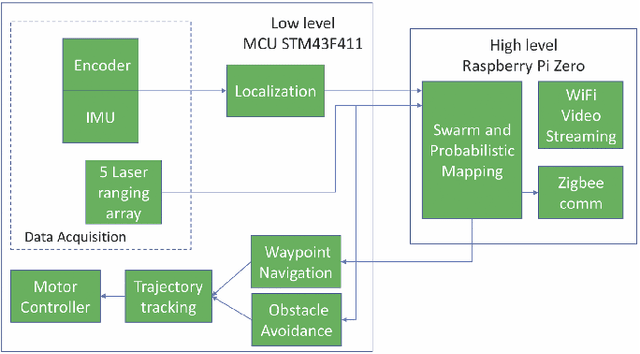

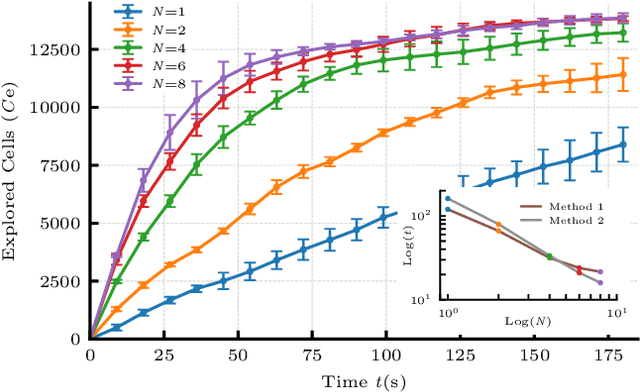

Decentralized Multi-Floor Exploration by a Swarm of Miniature Robots Teaming with Wall-Climbing Units

Aug 16, 2019

Abstract: In this paper, we consider the problem of collectively exploring unknown and dynamic environments with a decentralized heterogeneous multi-robot system consisting of multiple units of two variants of a miniature robot. The first variant, a wheeled ground unit, is at the core of a swarm of floor-mapping robots exhibiting scalability, robustness and flexibility. These properties are systematically tested and quantitatively evaluated in unstructured and dynamic environments, in the absence of any supporting infrastructure. The results of repeated sets of experiments show consistent performance for all three features, as well as the possibility of injecting units into the system while it is operating. Several units of the second variant, a wheg-based wall-climbing unit, are used to support the swarm of mapping robots when simultaneously exploring multiple floors by expanding the distributed communication channel necessary for coordinated behavior among platforms. Although the occupancy-grid maps obtained can be large, they are fully distributed. No single robotic unit possesses the overall map, which is not required by our cooperative path-planning strategy.

Feature-less Stitching of Cylindrical Tunnel

Jun 27, 2018

Abstract: Traditional image stitching algorithms use transforms such as homography to combine different views of a scene. They usually work well when the scene is planar or when the camera is only rotated, keeping its position static. This severely limits their use in real-world scenarios where an unmanned aerial vehicle (UAV) hovers and flies around an enclosed area while rotating to capture a video sequence. We utilize known scene geometry along with the recorded camera trajectory to create cylindrical images captured in a given environment, such as a tunnel, where the camera rotates around its center. The captured images of the inner surface of the scene are combined to create a composite panoramic image that is textured onto a 3D geometrical object in the Unity engine to create an immersive environment for end users.
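
As a rough illustration of how known cylindrical geometry can replace feature matching, the sketch below maps a point on the tunnel wall to a pixel in an unrolled panorama. It assumes the tunnel axis lies along `z` and passes through the origin; the function name, `pano_width_px` and `px_per_m` are hypothetical and this is not the authors' pipeline.

```python
import math


def wall_point_to_panorama(x: float, y: float, z: float,
                           pano_width_px: int, px_per_m: float):
    """Map a 3D point on a cylindrical wall to unrolled panorama coordinates.

    The horizontal coordinate u encodes the angle around the tunnel axis,
    the vertical coordinate v encodes the position along the axis.
    """
    theta = math.atan2(y, x)  # angle around the axis, in (-pi, pi]
    u = (theta + math.pi) / (2.0 * math.pi) * pano_width_px
    v = z * px_per_m
    return u, v


# A point at angle 0, 2 m along the tunnel, lands in the middle column
# of a 3600 px wide panorama at row 200 when 1 m maps to 100 px.
print(wall_point_to_panorama(1.5, 0.0, 2.0, 3600, 100.0))
```

Since the mapping depends only on geometry and camera pose, each image pixel can be placed in the panorama without detecting or matching features, which is what makes the approach usable on uniform tunnel walls.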
