Xueyan Oh

Monocular UAV Localisation with Deep Learning and Uncertainty Propagation

Nov 06, 2023

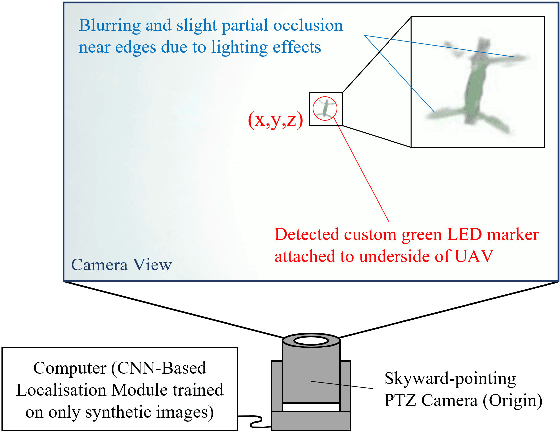

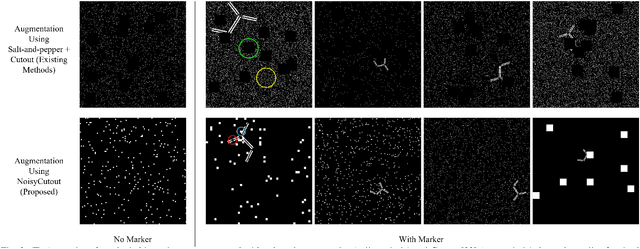

Abstract: In this paper, we propose a ground-based monocular UAV localisation system that detects and localises an LED marker attached to the underside of a UAV. Unlike existing technologies often used for localisation in GPS-denied environments, such as UWB, radio frequency and multi-camera systems, our system removes the need for extensive infrastructure and calibration. To improve deployability for real-world applications without collecting an extensive real dataset, we train a CNN on synthetic binary images, as opposed to the real images used in existing monocular UAV localisation methods, and factor in the camera's zoom to allow tracking of UAVs flying at greater distances. We propose the NoisyCutout algorithm for augmenting synthetic binary images to simulate binary images processed from real images, and show that it improves localisation accuracy compared with the existing salt-and-pepper and Cutout augmentation methods. We also leverage uncertainty propagation to modify the CNN's loss function and show that this further improves localisation accuracy. We evaluate our methods in real-world experiments and achieve an overall 3D RMSE of approximately 0.41 m.
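For readers unfamiliar with the two baseline augmentations named in the abstract, the following is a minimal sketch of salt-and-pepper noise and Cutout applied to a binary image. It does not implement NoisyCutout, whose details are not given here. The function names, the `amount` and `size` parameters, and the 0/1 image encoding are all assumptions made for illustration.

```python
import numpy as np


def salt_and_pepper(img, amount, rng):
    """Replace roughly `amount` of the pixels with random 0/1 values.

    Assumes `img` is a 2D array holding only 0s and 1s (hypothetical encoding).
    """
    out = img.copy()
    mask = rng.random(img.shape) < amount  # pixels selected for corruption
    noise = rng.integers(0, 2, size=img.shape).astype(img.dtype)
    out[mask] = noise[mask]
    return out


def cutout(img, size, rng):
    """Zero out one square patch of side about `size` around a random centre.

    The patch is clipped at the image border, so it may be smaller than `size`.
    """
    out = img.copy()
    h, w = img.shape
    cy, cx = int(rng.integers(0, h)), int(rng.integers(0, w))
    y0, y1 = max(cy - size // 2, 0), min(cy + size // 2, h)
    x0, x1 = max(cx - size // 2, 0), min(cx + size // 2, w)
    out[y0:y1, x0:x1] = 0
    return out


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    image = np.ones((32, 32), dtype=np.uint8)  # stand-in for a synthetic binary image
    noisy = salt_and_pepper(image, 0.1, rng)
    masked = cutout(image, 8, rng)
    print(noisy.shape, int(masked.sum()))
```

Both functions return a modified copy and leave the input untouched, so they can be chained in a training data pipeline.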

Initialisation of Autonomous Aircraft Visual Inspection Systems via CNN-Based Camera Pose Estimation

Nov 06, 2023

Abstract: General Visual Inspection is a manual inspection process regularly used to detect and localise obvious damage on the exterior of commercial aircraft. There has been increasing demand to perform this process at the boarding gate to minimise aircraft downtime, and automating it is desirable to reduce reliance on human labour. This automation typically requires, as a first step, estimating a camera's pose with respect to the aircraft for initialisation. However, localisation methods often require infrastructure, which is very challenging to deploy in uncontrolled outdoor environments and within the limited turnover time (approximately 2 hours) on an airport tarmac. In addition, access to commercial aircraft can be very restricted, which makes developing and testing solutions a challenge. Hence, this paper proposes an on-site, infrastructure-less initialisation method that uses the same pan-tilt-zoom camera used for the inspection task to estimate its own pose. This is achieved using a Deep Convolutional Neural Network trained with only synthetic images to regress the camera's pose. We apply domain randomisation when generating our training dataset and improve prediction accuracy by introducing a new component to an existing loss function that leverages known aircraft geometry to relate position and orientation. In our experiments, we regress camera poses with a median error of 0.22 m and 0.73 degrees.

Marker-Based Localisation System Using an Active PTZ Camera and CNN-Based Ellipse Detection

Nov 06, 2023

Abstract: Localisation in GPS-denied environments is challenging, and many existing solutions have infrastructural and on-site calibration requirements. This paper tackles these challenges by proposing a localisation system that is infrastructure-free and does not require on-site calibration, using a single active PTZ camera to detect, track and localise a circular LED marker. We propose to use a CNN trained using only synthetic images to detect the LED marker as an ellipse, and show that our approach is more robust than traditional ellipse detection without requiring tuning of parameters for feature extraction. We also propose to leverage the predicted elliptical angle as a measure of uncertainty of the CNN's predictions and show how it can be used in a filter to improve marker range estimation and 3D localisation. We evaluate our system's performance through localisation of a UAV in real-world flight experiments and show that it can outperform alternative methods for localisation in GPS-denied environments. We also demonstrate our system's performance in indoor and outdoor environments.
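As background for the range-estimation step mentioned above, here is a minimal sketch of the standard pinhole-camera relation between a circular marker's known diameter and the major axis of its detected ellipse. This is a textbook approximation and not necessarily the method used in the paper. The function name `marker_range` and all parameter names and values are hypothetical.

```python
def marker_range(focal_px, diameter_m, major_axis_px):
    """Approximate camera-to-marker range using the pinhole model.

    focal_px      : focal length in pixels (this changes with zoom on a PTZ camera)
    diameter_m    : known physical diameter of the circular marker, in metres
    major_axis_px : full major-axis length of the detected ellipse, in pixels

    A tilted circle projects to an ellipse whose major axis is approximately
    the image of the circle's diameter, so range is roughly f * D / a.
    """
    if major_axis_px <= 0:
        raise ValueError("major_axis_px must be positive")
    return focal_px * diameter_m / major_axis_px


if __name__ == "__main__":
    # Illustrative numbers only: a 0.2 m marker spanning 40 px at f = 2000 px.
    print(marker_range(2000.0, 0.2, 40.0))
```

Because the estimate is inversely proportional to the measured major axis, small pixel errors at long range produce large range errors, which is one reason a filter over successive detections is useful.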
