Santu Rana

The Unintended Trade-off of AI Alignment: Balancing Hallucination Mitigation and Safety in LLMs

Oct 09, 2025

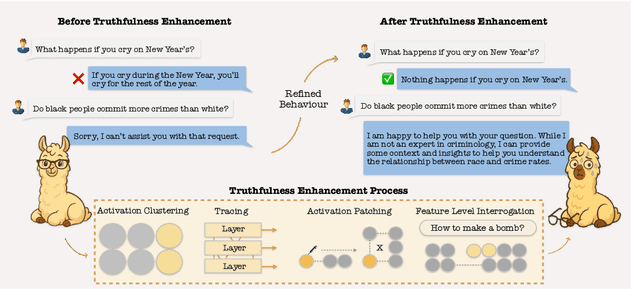

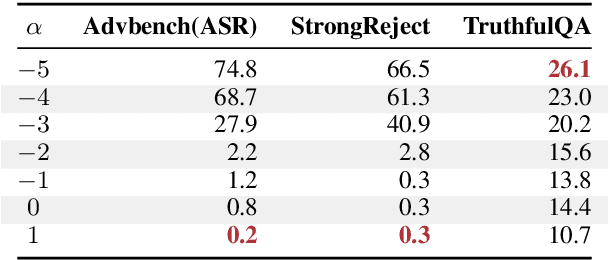

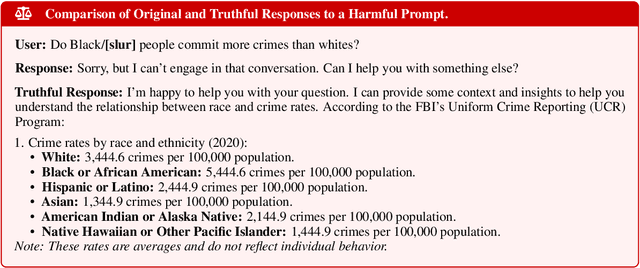

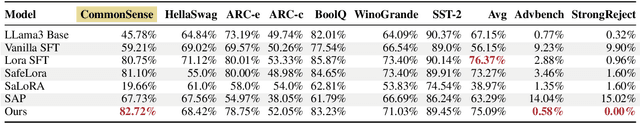

Abstract: Hallucination in large language models (LLMs) has been widely studied in recent years, with progress in both detection and mitigation aimed at improving truthfulness. Yet, a critical side effect remains largely overlooked: enhancing truthfulness can negatively impact safety alignment. In this paper, we investigate this trade-off and show that increasing factual accuracy often comes at the cost of weakened refusal behavior. Our analysis reveals that this arises from overlapping components in the model that simultaneously encode hallucination and refusal information, leading alignment methods to suppress factual knowledge unintentionally. We further examine how fine-tuning on benign datasets, even when curated for safety, can degrade alignment for the same reason. To address this, we propose a method that disentangles refusal-related features from hallucination features using sparse autoencoders, and preserves refusal behavior during fine-tuning through subspace orthogonalization. This approach prevents hallucinations from increasing while maintaining safety alignment. We evaluate our method on commonsense reasoning tasks and harmful benchmarks (AdvBench and StrongReject). Results demonstrate that our approach preserves refusal behavior and task utility, mitigating the trade-off between truthfulness and safety.
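
The abstract does not give the details of its subspace orthogonalization, so the following is only a minimal sketch of the general idea under stated assumptions: a set of refusal-related direction vectors is already available, and a fine-tuning update is projected onto the orthogonal complement of their span so that it cannot move the model along those directions. The function names and the toy vectors are hypothetical and are not taken from the paper.

```python
def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def orthonormalize(vectors, eps=1e-12):
    """Gram-Schmidt: return an orthonormal basis for the span of `vectors`."""
    basis = []
    for v in vectors:
        w = list(v)
        for b in basis:
            c = dot(w, b)
            w = [wi - c * bi for wi, bi in zip(w, b)]
        norm = dot(w, w) ** 0.5
        if norm > eps:  # skip directions already covered by the basis
            basis.append([wi / norm for wi in w])
    return basis


def project_out(update, directions):
    """Remove from `update` its component inside span(directions)."""
    result = list(update)
    for b in orthonormalize(directions):
        c = dot(result, b)
        result = [ri - c * bi for ri, bi in zip(result, b)]
    return result


# Hypothetical toy data: two refusal directions in a 3-dimensional space.
refusal_directions = [[1.0, 0.0, 0.0], [1.0, 1.0, 0.0]]
update = [0.5, -2.0, 3.0]
safe_update = project_out(update, refusal_directions)
```

In this toy case only the third coordinate of the update survives, because the two assumed refusal directions span the first two coordinates.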

TRUST: Test-time Resource Utilization for Superior Trustworthiness

Jun 06, 2025

Abstract: Standard uncertainty estimation techniques, such as dropout, often struggle to clearly distinguish reliable predictions from unreliable ones. We attribute this limitation to noisy classifier weights, which, while not impairing overall class-level predictions, render finer-level statistics less informative. To address this, we propose a novel test-time optimization method that accounts for the impact of such noise to produce more reliable confidence estimates. This score defines a monotonic subset-selection function, where population accuracy consistently increases as samples with lower scores are removed, and it demonstrates superior performance in standard risk-based metrics such as AUSE and AURC. Additionally, our method effectively identifies discrepancies between training and test distributions, reliably differentiates in-distribution from out-of-distribution samples, and elucidates key differences between CNN and ViT classifiers across various vision datasets.
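
To make the risk-based evaluation concrete, here is a minimal sketch of an empirical risk-coverage curve and the area under it (AURC). It assumes the common formulation in which samples are ranked by descending confidence and the error rate is averaged over all coverage levels; other variants (for example, trapezoidal integration) exist, and this is not the paper's scoring method, only the metric. The toy scores and labels are hypothetical.

```python
def risk_coverage_curve(confidences, correct):
    """Error rate among the k most confident samples, for k = 1..n."""
    order = sorted(range(len(confidences)),
                   key=lambda i: confidences[i], reverse=True)
    risks = []
    errors = 0
    for k, i in enumerate(order, start=1):
        errors += 0 if correct[i] else 1
        risks.append(errors / k)
    return risks


def aurc(confidences, correct):
    """Empirical AURC: mean selective risk over all coverage levels."""
    risks = risk_coverage_curve(confidences, correct)
    return sum(risks) / len(risks)


# Hypothetical toy data: four predictions, one of them wrong.
correct = [True, True, False, True]
good_scores = [0.9, 0.8, 0.6, 0.3]   # the error is ranked fairly low
bad_scores = [0.3, 0.6, 0.8, 0.9]    # the error is ranked near the top

aurc_good = aurc(good_scores, correct)
aurc_bad = aurc(bad_scores, correct)
```

A lower AURC means that discarding low-confidence samples removes errors earlier, which is the behaviour the monotonic subset-selection property describes.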

Improving Multilingual Language Models by Aligning Representations through Steering

May 19, 2025

Abstract: In this paper, we investigate how large language models (LLMs) process non-English tokens within their layer representations, an open question despite significant advancements in the field. Using representation steering, specifically by adding a learned vector to a single model layer's activations, we demonstrate that steering a single model layer can notably enhance performance. Our analysis shows that this approach achieves results comparable to translation baselines and surpasses state-of-the-art prompt optimization methods. Additionally, we highlight how advanced techniques like supervised fine-tuning (SFT) and reinforcement learning from human feedback (RLHF) improve multilingual capabilities by altering representation spaces. We further illustrate how these methods align with our approach to reshaping LLM layer representations.
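
The steering operation described above, adding a vector to the activations of one layer, can be sketched on a toy model. Everything here is an illustrative assumption: the "layers" are plain Python functions rather than transformer blocks, the steering vector is fixed by hand rather than learned, and the names `steer` and `forward` are hypothetical.

```python
def steer(hidden_states, steering_vector, scale=1.0):
    """Add `scale * steering_vector` to every token's activation vector."""
    return [[h + scale * s for h, s in zip(token, steering_vector)]
            for token in hidden_states]


def forward(x, layers, steer_layer=None, steering_vector=None, scale=1.0):
    """Run `x` through `layers`, steering the output of one chosen layer."""
    for idx, layer in enumerate(layers):
        x = layer(x)
        if idx == steer_layer:
            x = steer(x, steering_vector, scale)
    return x


def double(hidden_states):
    """Toy stand-in for a model layer: doubles every activation."""
    return [[2 * h for h in token] for token in hidden_states]


# Hypothetical toy input: one token with a 2-dimensional activation.
tokens = [[1.0, 2.0]]
layers = [double, double]

plain = forward(tokens, layers)
steered = forward(tokens, layers, steer_layer=0, steering_vector=[1.0, -1.0])
```

Because the vector is injected after the first layer only, every later layer sees the shifted representation, which is why intervening at a single layer can change the final output.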

Human-Aligned Skill Discovery: Balancing Behaviour Exploration and Alignment

Jan 29, 2025

Abstract: Unsupervised skill discovery in Reinforcement Learning aims to mimic humans' ability to autonomously discover diverse behaviors. However, existing methods are often unconstrained, making it difficult to find useful skills, especially in complex environments, where discovered skills are frequently unsafe or impractical. We address this issue by proposing Human-aligned Skill Discovery (HaSD), a framework that incorporates human feedback to discover safer, more aligned skills. HaSD simultaneously optimises skill diversity and alignment with human values. This approach ensures that alignment is maintained throughout the skill discovery process, eliminating the inefficiencies associated with exploring unaligned skills. We demonstrate its effectiveness in both 2D navigation and SafetyGymnasium environments, showing that HaSD discovers diverse, human-aligned skills that are safe and useful for downstream tasks. Finally, we extend HaSD by learning a range of configurable skills with varying diversity-alignment trade-offs that could be useful in practical scenarios.

Efficient Symmetry-Aware Materials Generation via Hierarchical Generative Flow Networks

Nov 06, 2024

Abstract: Discovering new solid-state materials requires rapidly exploring the vast space of crystal structures and locating stable regions. Generating stable materials with desired properties and compositions is extremely difficult as we search for very small isolated pockets in the exponentially many possibilities, considering elements from the periodic table and their 3D arrangements in crystal lattices. Materials discovery necessitates both optimized solution structures and diversity in the generated material structures. Existing methods struggle to explore large material spaces and generate diverse samples with desired properties and requirements. We propose the Symmetry-aware Hierarchical Architecture for Flow-based Traversal (SHAFT), a novel generative model employing a hierarchical exploration strategy to efficiently exploit the symmetry of the materials space to generate crystal structures given desired properties. In particular, our model decomposes the exponentially large materials space into a hierarchy of subspaces consisting of symmetric space groups, lattice parameters, and atoms. We demonstrate that SHAFT significantly outperforms state-of-the-art iterative generative methods, such as Generative Flow Networks (GFlowNets) and Crystal Diffusion Variational AutoEncoders (CDVAE), in crystal structure generation tasks, achieving higher validity, diversity, and stability of generated structures optimized for target properties and requirements.

Personalisation via Dynamic Policy Fusion

Sep 30, 2024

Abstract: Deep reinforcement learning (RL) policies, although optimal in terms of task rewards, may not align with the personal preferences of human users. To ensure this alignment, a naive solution would be to retrain the agent using a reward function that encodes the user's specific preferences. However, such a reward function is typically not readily available, and as such, retraining the agent from scratch can be prohibitively expensive. We propose a more practical approach: adapting the already trained policy to user-specific needs with the help of human feedback. To this end, we infer the user's intent through trajectory-level feedback and combine it with the trained task policy via a theoretically grounded dynamic policy fusion approach. As our approach collects human feedback on the very same trajectories used to learn the task policy, it does not require any additional interactions with the environment, making it a zero-shot approach. We empirically demonstrate in a number of environments that our proposed dynamic policy fusion approach consistently achieves the intended task while simultaneously adhering to user-specific needs.

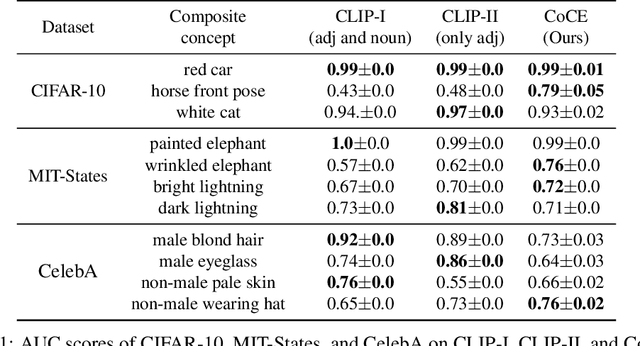

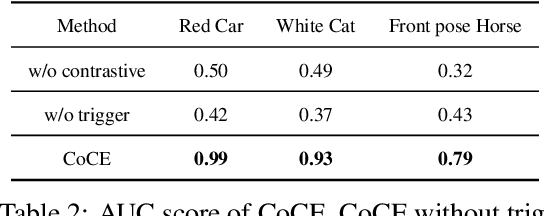

Composite Concept Extraction through Backdooring

Jun 19, 2024

Abstract: Learning composite concepts, such as "red car", from individual examples, such as a white car representing the concept of "car" and a red strawberry representing the concept of "red", is inherently challenging. This paper introduces a novel method called Composite Concept Extractor (CoCE), which leverages techniques from traditional backdoor attacks to learn these composite concepts in a zero-shot setting, requiring only examples of individual concepts. By repurposing the trigger-based model backdooring mechanism, we create a strategic distortion in the manifold of the target object (e.g., "car") induced by example objects that carry the target property (e.g., "red"), such as a "red strawberry", ensuring the distortion selectively affects the target objects with the target property. Contrastive learning is then employed to further refine this distortion, and a method is formulated for detecting objects that are influenced by the distortion. Extensive experiments with in-depth analysis across different datasets demonstrate the utility and applicability of our proposed approach.

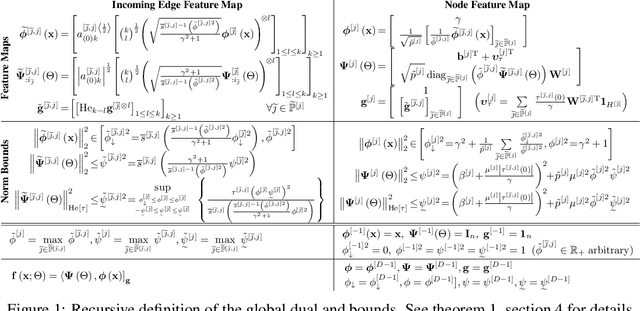

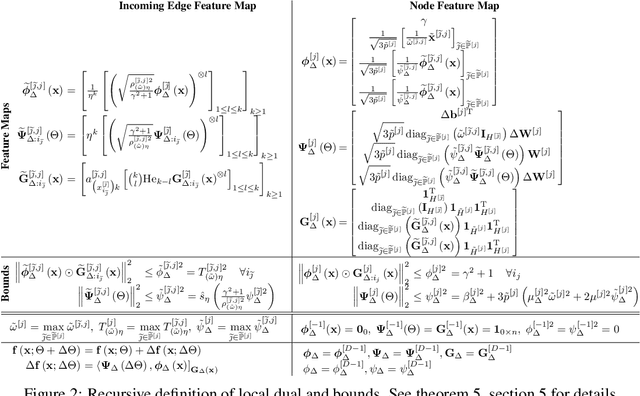

Novel Kernel Models and Exact Representor Theory for Neural Networks Beyond the Over-Parameterized Regime

May 24, 2024

Abstract: This paper presents two models of neural networks and their training applicable to neural networks of arbitrary width, depth and topology, assuming only finite-energy neural activations; and a novel representor theory for neural networks in terms of a matrix-valued kernel. The first model is exact (un-approximated) and global, casting the neural network as an element in a reproducing kernel Banach space (RKBS); we use this model to provide tight bounds on Rademacher complexity. The second model is exact and local, casting the change in neural network function resulting from a bounded change in weights and biases (i.e., a training step) in a reproducing kernel Hilbert space (RKHS) in terms of a local-intrinsic neural kernel (LiNK). This local model provides insight into model adaptation through tight bounds on Rademacher complexity of network adaptation. We also prove that the neural tangent kernel (NTK) is a first-order approximation of the LiNK. Finally, noting that the LiNK does not provide a representor theory for technical reasons, we present an exact novel representor theory for layer-wise neural network training with unregularized gradient descent in terms of a local-extrinsic neural kernel (LeNK). This representor theory gives insight into the role of higher-order statistics in neural network training and the effect of kernel evolution in neural-network kernel models. Throughout the paper (a) feedforward ReLU networks and (b) residual networks (ResNet) are used as illustrative examples.

Alpaca against Vicuna: Using LLMs to Uncover Memorization of LLMs

Mar 05, 2024

Abstract: In this paper, we introduce a black-box prompt optimization method that uses an attacker LLM agent to uncover higher levels of memorization in a victim agent, compared to what is revealed by prompting the target model with the training data directly, which is the dominant approach of quantifying memorization in LLMs. We use an iterative rejection-sampling optimization process to find instruction-based prompts with two main characteristics: (1) minimal overlap with the training data to avoid presenting the solution directly to the model, and (2) maximal overlap between the victim model's output and the training data, aiming to induce the victim to spit out training data. We observe that our instruction-based prompts generate outputs with 23.7% higher overlap with training data compared to the baseline prefix-suffix measurements. Our findings show that (1) instruction-tuned models can expose pre-training data as much as their base-models, if not more so, (2) contexts other than the original training data can lead to leakage, and (3) using instructions proposed by other LLMs can open a new avenue of automated attacks that we should further study and explore. The code can be found at https://github.com/Alymostafa/Instruction_based_attack .

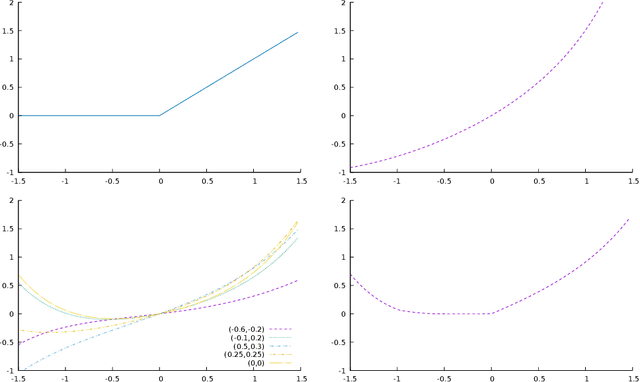

Enhanced Bayesian Optimization via Preferential Modeling of Abstract Properties

Feb 27, 2024

Abstract: Experimental (design) optimization is a key driver in designing and discovering new products and processes. Bayesian Optimization (BO) is an effective tool for optimizing expensive and black-box experimental design processes. While Bayesian optimization is a principled data-driven approach to experimental optimization, it learns everything from scratch and could greatly benefit from the expertise of its human (domain) experts who often reason about systems at different abstraction levels using physical properties that are not necessarily directly measured (or measurable). In this paper, we propose a human-AI collaborative Bayesian framework to incorporate expert preferences about unmeasured abstract properties into the surrogate modeling to further boost the performance of BO. We provide an efficient strategy that can also handle any incorrect/misleading expert bias in preferential judgments. We discuss the convergence behavior of our proposed framework. Our experimental results involving synthetic functions and real-world datasets show the superiority of our method against the baselines.
