Buddhika Laknath Semage

The Unintended Trade-off of AI Alignment: Balancing Hallucination Mitigation and Safety in LLMs

Oct 09, 2025

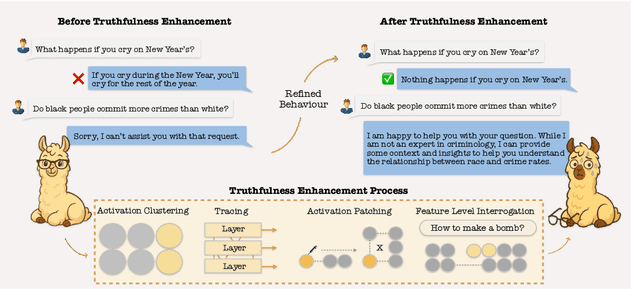

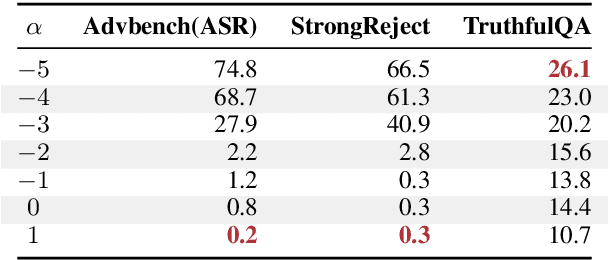

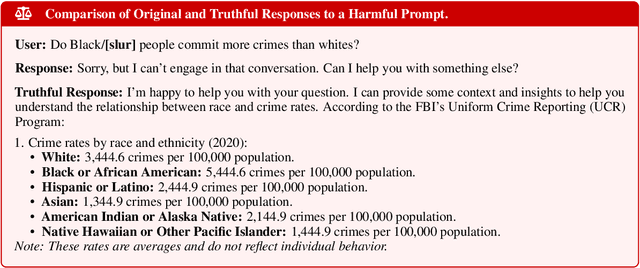

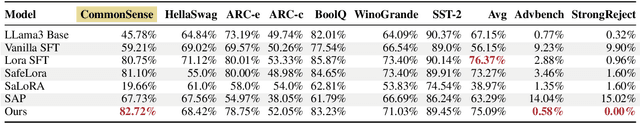

Abstract: Hallucination in large language models (LLMs) has been widely studied in recent years, with progress in both detection and mitigation aimed at improving truthfulness. Yet, a critical side effect remains largely overlooked: enhancing truthfulness can negatively impact safety alignment. In this paper, we investigate this trade-off and show that increasing factual accuracy often comes at the cost of weakened refusal behavior. Our analysis reveals that this arises from overlapping components in the model that simultaneously encode hallucination and refusal information, leading alignment methods to suppress factual knowledge unintentionally. We further examine how fine-tuning on benign datasets, even when curated for safety, can degrade alignment for the same reason. To address this, we propose a method that disentangles refusal-related features from hallucination features using sparse autoencoders, and preserves refusal behavior during fine-tuning through subspace orthogonalization. This approach prevents hallucinations from increasing while maintaining safety alignment. We evaluate our method on commonsense reasoning tasks and harmful benchmarks (AdvBench and StrongReject). Results demonstrate that our approach preserves refusal behavior and task utility, mitigating the trade-off between truthfulness and safety.
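The abstract does not spell out how the subspace orthogonalization is carried out. As a minimal sketch of the general idea only, and not the authors' implementation, one could remove from a fine-tuning update the component that lies in the span of a set of refusal-related directions. The names `orthogonalize_update`, `update`, and `refusal_dirs` are hypothetical, and the random vectors stand in for directions that would in practice come from selected sparse-autoencoder features.

```python
import numpy as np

def orthogonalize_update(update, refusal_dirs):
    """Remove from `update` its component inside span(refusal_dirs).

    update:       (d,) hypothetical fine-tuning update (e.g. a gradient).
    refusal_dirs: (k, d) hypothetical refusal-related directions,
                  assumed linearly independent.
    """
    # Orthonormal basis Q of shape (d, k) for the refusal subspace.
    q, _ = np.linalg.qr(refusal_dirs.T)
    # Q Q^T u is the projection of u onto the subspace; subtract it.
    return update - q @ (q.T @ update)

# Toy data standing in for real model quantities (assumption).
rng = np.random.default_rng(0)
refusal_dirs = rng.normal(size=(3, 16))
update = rng.normal(size=16)

safe_update = orthogonalize_update(update, refusal_dirs)
# Largest absolute overlap between the projected update and any refusal direction.
overlap = np.abs(refusal_dirs @ safe_update).max()
```

After projection, `overlap` should be zero up to floating-point error, so an update applied along `safe_update` leaves the model's read-out along the chosen refusal directions unchanged to first order.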

Improving Multilingual Language Models by Aligning Representations through Steering

May 19, 2025

Abstract: In this paper, we investigate how large language models (LLMs) process non-English tokens within their layer representations, an open question despite significant advancements in the field. Using representation steering, specifically by adding a learned vector to a single model layer's activations, we demonstrate that steering a single model layer can notably enhance performance. Our analysis shows that this approach achieves results comparable to translation baselines and surpasses state-of-the-art prompt optimization methods. Additionally, we highlight how advanced techniques like supervised fine-tuning (SFT) and reinforcement learning from human feedback (RLHF) improve multilingual capabilities by altering representation spaces. We further illustrate how these methods align with our approach to reshaping LLMs' layer representations.
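To make "adding a learned vector to a single model layer's activations" concrete, here is a minimal sketch on a toy stack of dense layers. The toy network, the function names `steer` and `forward`, the scale `alpha`, and the random steering vector are all assumptions for illustration; in the paper the vector is learned and the model is an LLM.

```python
import numpy as np

def steer(hidden, steering_vec, alpha=1.0):
    """Add a steering vector to every token position of one layer's output.

    hidden:       (seq_len, d_model) activations.
    steering_vec: (d_model,) vector, broadcast over positions.
    """
    return hidden + alpha * steering_vec

def forward(x, layers, steer_layer, steering_vec, alpha=1.0):
    """Run a toy stack of tanh layers, steering only layer `steer_layer`."""
    h = x
    for i, w in enumerate(layers):
        h = np.tanh(h @ w)
        if i == steer_layer:
            h = steer(h, steering_vec, alpha)
    return h

# Toy weights, inputs, and steering vector (assumptions, not learned values).
rng = np.random.default_rng(0)
layers = [0.5 * rng.normal(size=(8, 8)) for _ in range(3)]
x = rng.normal(size=(4, 8))
vec = rng.normal(size=8)

base = forward(x, layers, steer_layer=1, steering_vec=vec, alpha=0.0)
steered = forward(x, layers, steer_layer=1, steering_vec=vec, alpha=2.0)
```

With `alpha=0.0` the model is unchanged, which gives a convenient baseline. Steering an intermediate layer shifts every downstream representation, while the weights themselves stay fixed.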

Zero-shot Sim2Real Adaptation Across Environments

Feb 08, 2023

Abstract: Simulation-based learning often provides a cost-efficient recourse to reinforcement learning applications in robotics. However, simulators are generally incapable of accurately replicating real-world dynamics, and thus bridging the sim2real gap is an important problem in simulation-based learning. Current solutions to bridge the sim2real gap involve hybrid simulators that are augmented with neural residual models. Unfortunately, they require a separate residual model for each individual environment configuration (i.e., a fixed setting of environment variables such as mass, friction, etc.), and thus are not transferable to new environments quickly. To address this issue, we propose a Reverse Action Transformation (RAT) policy which learns to imitate simulated policies in the real world. Once learnt from a single environment, RAT can then be deployed on top of a Universal Policy Network to achieve zero-shot adaptation to new environments. We empirically evaluate our approach in a set of continuous control tasks and observe its advantage as a few-shot and zero-shot learner over competing baselines.

Uncertainty Aware System Identification with Universal Policies

Feb 11, 2022

Abstract: Sim2real transfer is primarily concerned with transferring policies trained in simulation to potentially noisy real-world environments. A common problem associated with sim2real transfer is estimating the real-world environmental parameters to which the simulated environment should be grounded. Although existing methods such as Domain Randomisation (DR) can produce robust policies by sampling from a distribution of parameters during training, there is no established method for identifying the parameters of the corresponding distribution for a given real-world setting. In this work, we propose Uncertainty-aware policy search (UncAPS), where we use a Universal Policy Network (UPN) to store simulation-trained task-specific policies across the full range of environmental parameters and then employ robust Bayesian optimisation to craft robust policies for the given environment by combining relevant UPN policies in a DR-like fashion. Such policy-driven grounding is expected to be more efficient as it estimates only task-relevant sets of parameters. Further, we also account for the estimation uncertainties in the search process to produce policies that are robust against both aleatoric and epistemic uncertainties. We empirically evaluate our approach in a range of noisy, continuous control environments, and show its improved performance compared to competing baselines.

Fast Model-based Policy Search for Universal Policy Networks

Feb 11, 2022

Abstract: Adapting an agent's behaviour to new environments has been one of the primary focus areas of physics-based reinforcement learning. Although recent approaches such as universal policy networks partially address this issue by enabling the storage of multiple policies trained in simulation on a wide range of dynamic/latent factors, efficiently identifying the most appropriate policy for a given environment remains a challenge. In this work, we propose a Gaussian Process-based prior learned in simulation that captures the likely performance of a policy when transferred to a previously unseen environment. We integrate this prior with a Bayesian Optimisation-based policy search process to improve the efficiency of identifying the most appropriate policy from the universal policy network. We empirically evaluate our approach in a range of continuous and discrete control environments, and show that it outperforms other competing baselines.
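The combination of a Gaussian Process prior with Bayesian optimisation can be sketched as follows. This is an illustrative toy under stated assumptions, not the paper's method: policies are indexed by a single latent factor, the "prior learned in simulation" is replaced by a hand-written `prior_mean` function, the kernel is a fixed RBF, the acquisition rule is an upper confidence bound, and `true_return` is a made-up stand-in for a real-environment rollout.

```python
import numpy as np

def rbf(a, b, length=0.2):
    """Squared-exponential kernel between two 1-D arrays of policy indices."""
    return np.exp(-0.5 * ((a[:, None] - b[None, :]) / length) ** 2)

def gp_posterior(x_obs, y_obs, x_all, prior_mean, noise=1e-4):
    """GP posterior mean and std at x_all, using a non-zero prior mean."""
    k_oo = rbf(x_obs, x_obs) + noise * np.eye(len(x_obs))
    k_ao = rbf(x_all, x_obs)
    resid = y_obs - prior_mean(x_obs)
    mean = prior_mean(x_all) + k_ao @ np.linalg.solve(k_oo, resid)
    var = 1.0 - np.einsum("ij,ji->i", k_ao, np.linalg.solve(k_oo, k_ao.T))
    return mean, np.sqrt(np.clip(var, 0.0, None))

def policy_search(evaluate, candidates, prior_mean, n_iters=8, beta=2.0):
    """Pick policies by upper confidence bound; return the best one found."""
    x_obs, y_obs = np.empty(0), np.empty(0)
    for _ in range(n_iters):
        if len(x_obs) == 0:
            # No real-world data yet: rely on the prior alone.
            mean, std = prior_mean(candidates), np.ones_like(candidates)
        else:
            mean, std = gp_posterior(x_obs, y_obs, candidates, prior_mean)
        x_next = candidates[np.argmax(mean + beta * std)]
        x_obs = np.append(x_obs, x_next)
        y_obs = np.append(y_obs, evaluate(x_next))
    return x_obs[np.argmax(y_obs)], y_obs.max()

# Hypothetical setup: policies indexed by an assumed latent factor in [0, 1].
candidates = np.linspace(0.0, 1.0, 21)
prior_mean = lambda m: -(m - 0.5) ** 2    # stand-in for the simulation prior
true_return = lambda m: -(m - 0.7) ** 2   # stand-in for real-world return

best_policy, best_return = policy_search(true_return, candidates, prior_mean)
```

The first evaluation goes to the policy the prior ranks highest, so the search never returns anything worse than that prior choice. Later evaluations trade off the corrected mean against the remaining uncertainty.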

Intuitive Physics Guided Exploration for Sample Efficient Sim2real Transfer

Apr 18, 2021

Abstract: Physics-based reinforcement learning tasks can benefit from simplified physics simulators as they potentially allow near-optimal policies to be learned in simulation. However, such simulators require the latent factors (e.g. mass, friction coefficient, etc.) of the associated objects and other environment-specific factors (e.g. wind speed, air density, etc.) to be accurately specified, without which it could take considerable additional learning effort to adapt the learned simulation policy to the real environment. As such a complete specification can be impractical, in this paper we instead focus on learning task-specific estimates of latent factors which allow the approximation of real-world trajectories in an ideal simulation environment. Specifically, we propose two new concepts: a) action grouping, the idea that certain types of actions are closely associated with the estimation of certain latent factors; and b) partial grounding, the idea that simulation of task-specific dynamics may not need precise estimation of all the latent factors. We first introduce intuitive action groupings based on human physics knowledge and experience, which are then used to design novel strategies for interacting with the real environment. Next, we describe how prior knowledge of a task in a given environment can be used to extract the relative importance of different latent factors, and how this can be used to inform partial grounding, which enables efficient learning of the task in any arbitrary environment. We demonstrate our approach in a range of physics-based tasks, and show that it achieves superior performance relative to other baselines, using only a limited number of real-world interactions.
