Sankaran Mahadevan

Power grid operational risk assessment using graph neural network surrogates

Nov 21, 2023

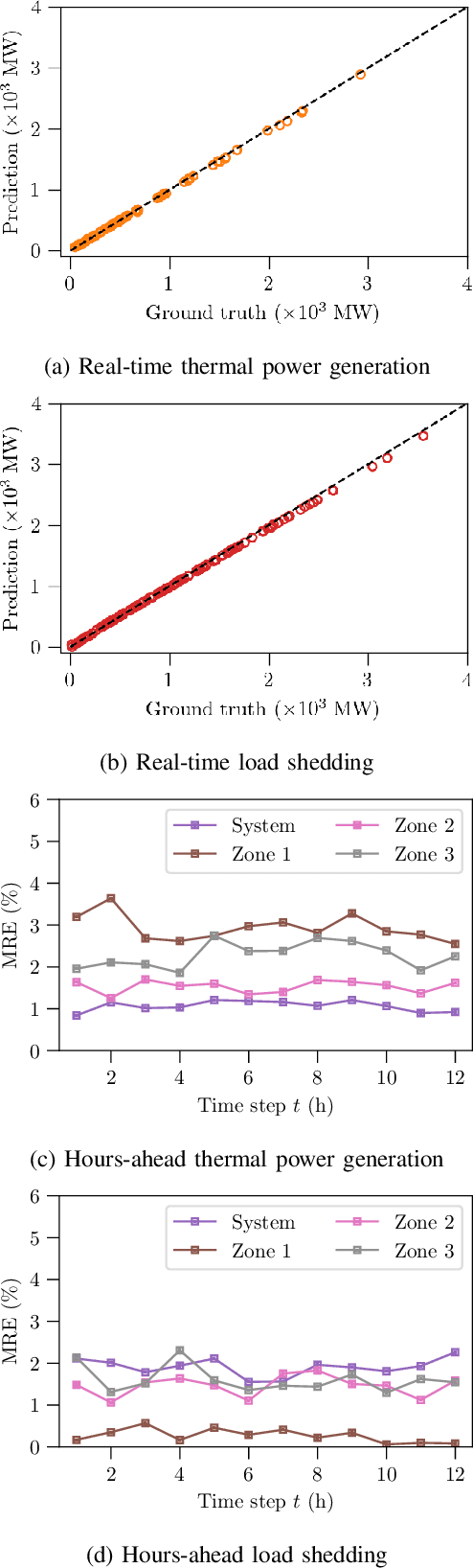

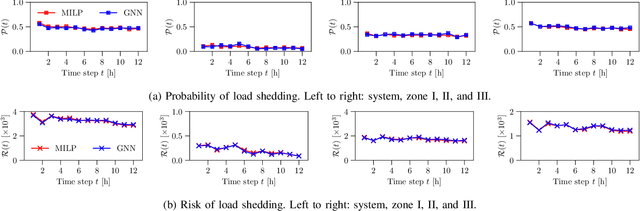

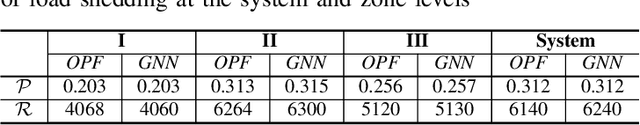

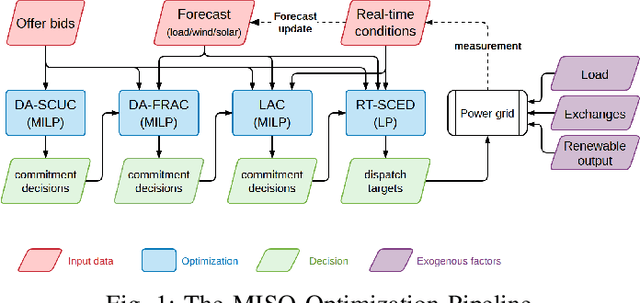

Abstract: We investigate the utility of graph neural networks (GNNs) as proxies for power grid operational decision-making algorithms (optimal power flow (OPF) and security-constrained unit commitment (SCUC)) to enable rigorous quantification of operational risk. To conduct principled risk analysis, numerous Monte Carlo (MC) samples are drawn from the (forecasted) probability distributions of spatio-temporally correlated stochastic grid variables. The corresponding OPF and SCUC solutions, which are needed to quantify the risk, are computed with traditional OPF and SCUC solvers and used as training data for the GNN model(s). The GNN model performance is evaluated in terms of the accuracy of predicting quantities of interest (QoIs) derived from the decision variables in OPF and SCUC. Specifically, we focus on thermal power generation and load shedding at the system level and the individual zone level. We also perform reliability and risk quantification based on GNN predictions and compare the results with those obtained from OPF/SCUC solutions. Our results demonstrate that GNNs are capable of providing fast and accurate prediction of QoIs and thus can be good surrogate models for OPF and SCUC. The excellent accuracy of GNN-based reliability and risk assessment further suggests that GNN surrogates have the potential to be applied in real-time and hours-ahead risk quantification.

Graph Neural Networks for Power Grid Operational Risk Assessment

Nov 07, 2023

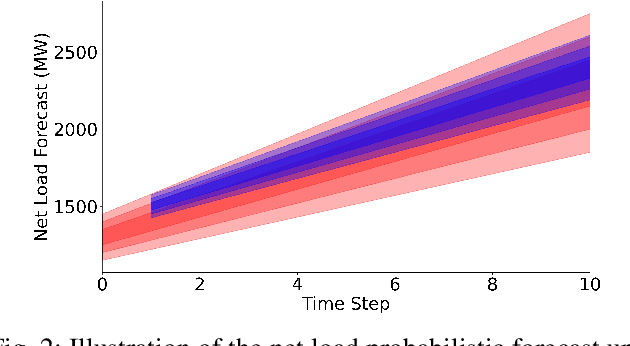

Abstract: In this article, the utility of graph neural network (GNN) surrogates for Monte Carlo (MC) sampling-based risk quantification in the daily operations of the power grid is investigated. The MC simulation process necessitates solving a large number of optimal power flow (OPF) problems corresponding to the sample values of stochastic grid variables (power demand and renewable generation), which is computationally prohibitive. Computationally inexpensive surrogates of the OPF problem provide an attractive alternative for expedited MC simulation. GNN surrogates are especially suitable due to their superior ability to handle graph-structured data. Therefore, GNN surrogates of the OPF problem are trained using supervised learning. They are then used to obtain MC samples of the quantities of interest (operating reserve, transmission line flow) given the (hours-ahead) probabilistic wind generation and load forecast. The utility of GNN surrogates is evaluated by comparing OPF-based and GNN-based grid reliability and risk for the IEEE Case118 synthetic grid. It is shown that the GNN surrogates are sufficiently accurate for predicting the (bus-level, branch-level and system-level) grid state and enable fast as well as accurate operational risk quantification for power grids. The article thus develops various tools for fast reliability and risk quantification for real-world power grids using GNNs.
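The sampling loop described in this abstract can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: `toy_surrogate` is a hypothetical closed-form stand-in for a trained GNN, the Gaussian load and wind forecasts and all numeric values are invented, and the risk measure shown (probability that the operating reserve falls below a requirement) is only one of the quantities such a study might report.

```python
import random
import statistics


def toy_surrogate(load_mw, wind_mw, capacity_mw=1000.0):
    """Hypothetical stand-in for a trained GNN surrogate.

    Returns the operating reserve (MW) left after serving net load.
    A real surrogate would map a full grid state to OPF outputs.
    """
    return capacity_mw - (load_mw - wind_mw)


def estimate_shortfall_risk(n_samples=10_000, reserve_req_mw=200.0, seed=0):
    """Monte Carlo estimate of P(reserve < requirement) and its standard error."""
    rng = random.Random(seed)
    indicators = []
    for _ in range(n_samples):
        # Assumed forecast distributions (illustrative values only).
        load = rng.gauss(800.0, 50.0)
        wind = max(0.0, rng.gauss(100.0, 40.0))
        reserve = toy_surrogate(load, wind)
        indicators.append(1.0 if reserve < reserve_req_mw else 0.0)
    p = statistics.fmean(indicators)
    std_err = (p * (1.0 - p) / n_samples) ** 0.5
    return p, std_err


if __name__ == "__main__":
    p, se = estimate_shortfall_risk()
    print(f"P(reserve shortfall) ~ {p:.4f} +/- {se:.4f}")
```

Because each surrogate call is cheap, the number of samples can be raised until the standard error is acceptably small, which is the practical benefit the abstract attributes to replacing the OPF solver.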

Just-In-Time Learning for Operational Risk Assessment in Power Grids

Sep 26, 2022

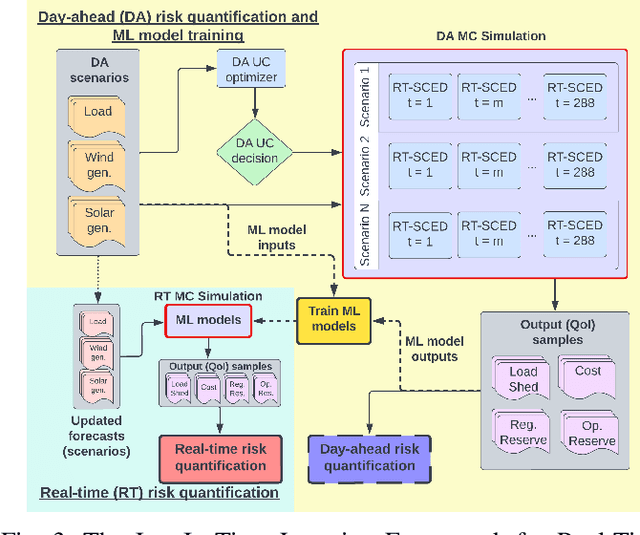

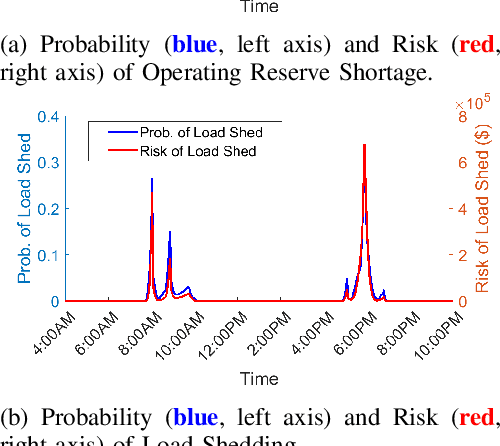

Abstract: In a grid with a significant share of renewable generation, operators will need additional tools to evaluate the operational risk due to the increased volatility in load and generation. The computational requirements of the forward uncertainty propagation problem, which must solve numerous security-constrained economic dispatch (SCED) optimizations, are a major barrier for such real-time risk assessment. This paper proposes a Just-In-Time Risk Assessment Learning Framework (JITRALF) as an alternative. JITRALF trains risk surrogates, one for each hour in the day, using Machine Learning (ML) to predict the quantities needed to estimate risk, without explicitly solving the SCED problem. This significantly reduces the computational burden of the forward uncertainty propagation and allows for fast, real-time risk estimation. The paper also proposes a novel, asymmetric loss function and shows that models trained using the asymmetric loss perform better than those using symmetric loss functions. JITRALF is evaluated on the French transmission system for assessing the risk of insufficient operating reserves, the risk of load shedding, and the expected operating cost.
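The abstract does not state the form of the asymmetric loss, so the sketch below does not reproduce it. As an illustration of what "asymmetric" means in this setting, it uses the well-known pinball (quantile) loss as a stand-in: with `tau` above 0.5, under-predicting a quantity is penalized more heavily than over-predicting it by the same amount. The function name and the choice `tau=0.9` are assumptions.

```python
def pinball_loss(y_true, y_pred, tau=0.9):
    """Mean pinball (quantile) loss; a stand-in, not the paper's loss.

    Under-prediction (y_true > y_pred) costs tau per unit of error,
    over-prediction costs (1 - tau) per unit of error.
    """
    total = 0.0
    for t, p in zip(y_true, y_pred):
        diff = t - p
        total += tau * diff if diff >= 0 else (tau - 1.0) * diff
    return total / len(y_true)


if __name__ == "__main__":
    # Same absolute error of 2, but different penalties.
    print(pinball_loss([10.0], [8.0]))   # under-prediction
    print(pinball_loss([10.0], [12.0]))  # over-prediction
```

A loss of this shape suits risk surrogates when missing a shortfall is costlier than raising a false alarm, whereas a symmetric loss such as mean squared error treats both errors alike.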

A Comprehensive Review of Digital Twin -- Part 2: Roles of Uncertainty Quantification and Optimization, a Battery Digital Twin, and Perspectives

Aug 27, 2022

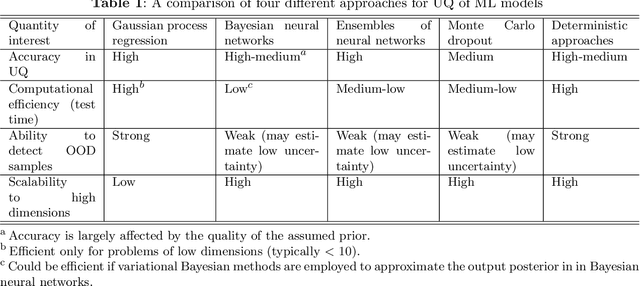

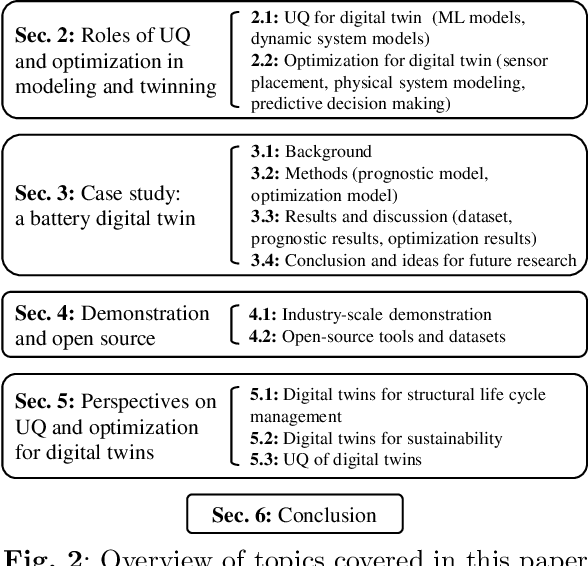

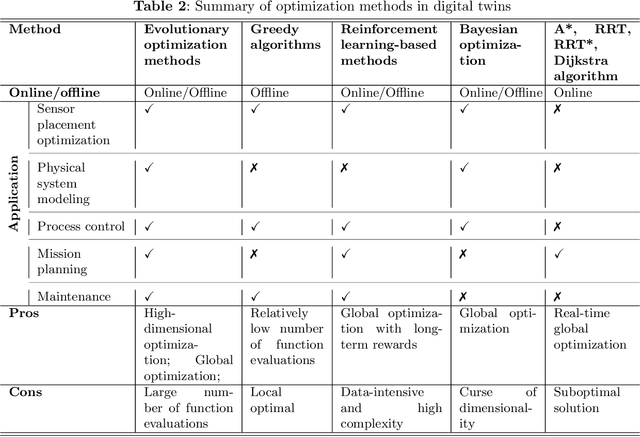

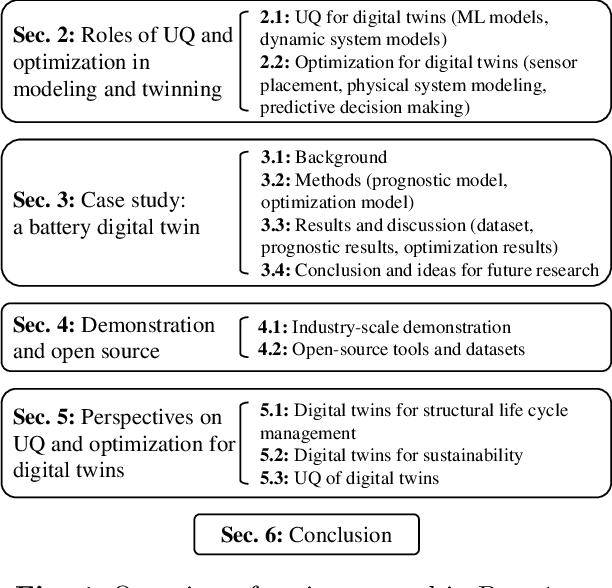

Abstract: As an emerging technology in the era of Industry 4.0, digital twin is gaining unprecedented attention because of its promise to further optimize process design, quality control, health monitoring, decision and policy making, and more, by comprehensively modeling the physical world as a group of interconnected digital models. In a two-part series of papers, we examine the fundamental role of different modeling techniques, twinning enabling technologies, and uncertainty quantification and optimization methods commonly used in digital twins. This second paper presents a literature review of key enabling technologies of digital twins, with an emphasis on uncertainty quantification, optimization methods, open source datasets and tools, major findings, challenges, and future directions. Discussions focus on current methods of uncertainty quantification and optimization and how they are applied in different dimensions of a digital twin. Additionally, this paper presents a case study in which a battery digital twin is constructed and tested to illustrate some of the modeling and twinning methods reviewed in this two-part review. Code and preprocessed data for generating all the results and figures presented in the case study are available on GitHub.

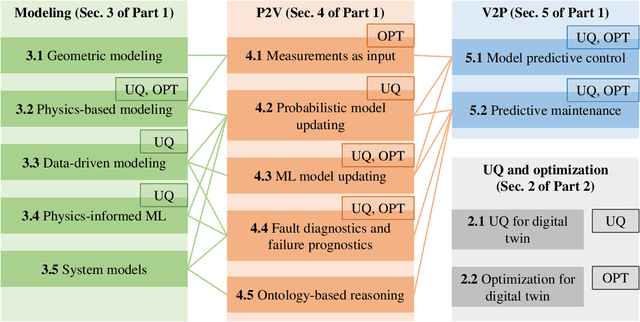

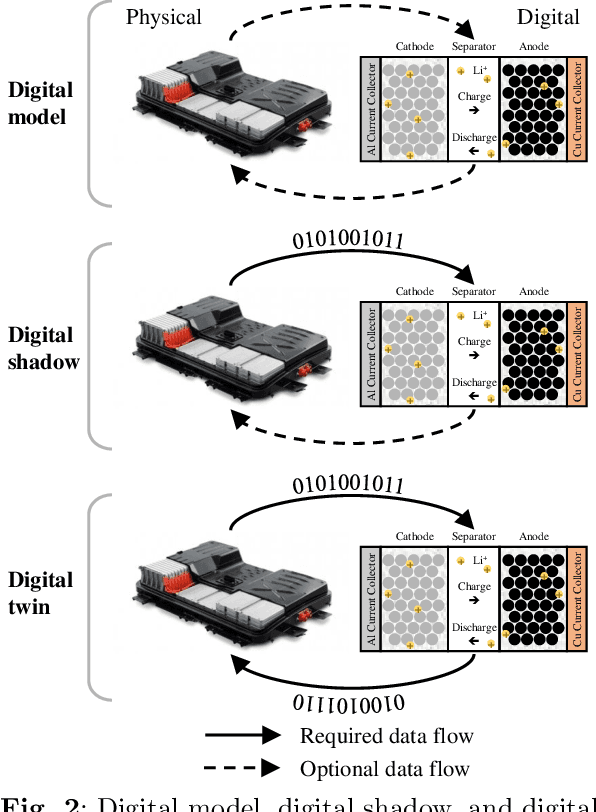

A Comprehensive Review of Digital Twin -- Part 1: Modeling and Twinning Enabling Technologies

Aug 26, 2022

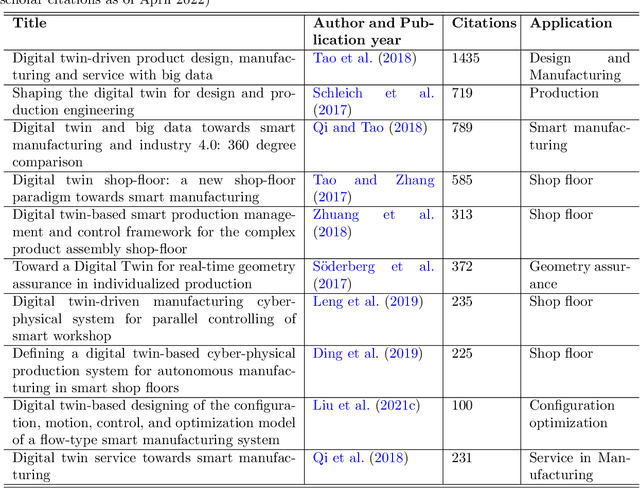

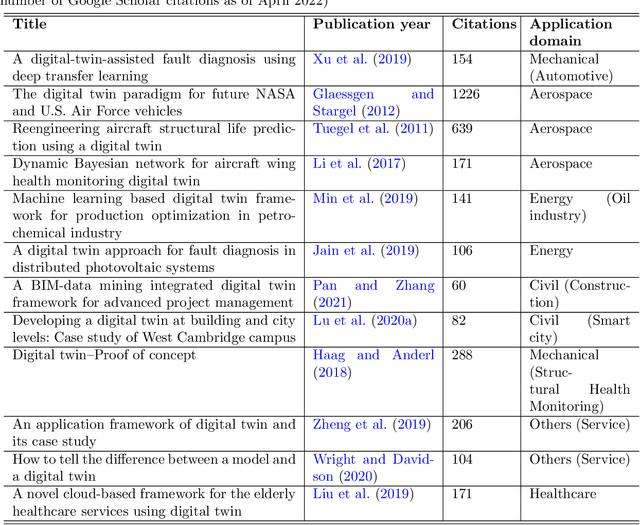

Abstract: As an emerging technology in the era of Industry 4.0, digital twin is gaining unprecedented attention because of its promise to further optimize process design, quality control, health monitoring, decision and policy making, and more, by comprehensively modeling the physical world as a group of interconnected digital models. In a two-part series of papers, we examine the fundamental role of different modeling techniques, twinning enabling technologies, and uncertainty quantification and optimization methods commonly used in digital twins. This first paper presents a thorough literature review of digital twin trends across many disciplines currently pursuing this area of research. Then, digital twin modeling and twinning enabling technologies are further analyzed by classifying them into two main categories, physical-to-virtual and virtual-to-physical, based on the direction in which data flows. Finally, this paper provides perspectives on the trajectory of digital twin technology over the next decade and introduces a few emerging areas of research that will likely be of great use in future digital twin research. In part two of this review, the roles of uncertainty quantification and optimization are discussed, a battery digital twin is demonstrated, and more perspectives on the future of digital twin are shared.

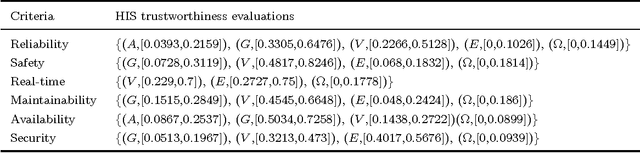

Modeling contaminant intrusion in water distribution networks based on D numbers

Apr 02, 2014

Abstract: Efficient modeling of uncertain information plays an important role in estimating the risk of contaminant intrusion in water distribution networks. Dempster-Shafer evidence theory is one of the most commonly used methods. However, Dempster-Shafer evidence theory relies on some hypotheses, including the mutual exclusivity of the elements in the frame of discernment, which may not be consistent with the real world. In this paper, based on a more effective representation of uncertainty, called D numbers, a new method that allows the elements in the frame of discernment to be non-exclusive is proposed. To demonstrate the efficiency of the proposed method, we apply it to water distribution networks to estimate the risk of contaminant intrusion.
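For context on the exclusivity hypothesis mentioned above, here is a minimal sketch of the classical Dempster rule of combination, which the abstract contrasts with D numbers. It is not the D-numbers method itself. The frame `{"low", "high"}` and the mass values are invented for illustration; focal elements are represented as `frozenset` objects.

```python
def dempster_combine(m1, m2):
    """Combine two mass functions with the classical Dempster rule.

    Each mass function maps a frozenset (focal element) to its mass.
    Products whose focal elements do not intersect count as conflict,
    which is then normalized away.
    """
    combined = {}
    conflict = 0.0
    for a, mass_a in m1.items():
        for b, mass_b in m2.items():
            intersection = a & b
            if intersection:
                combined[intersection] = combined.get(intersection, 0.0) + mass_a * mass_b
            else:
                conflict += mass_a * mass_b
    if conflict >= 1.0:
        raise ValueError("sources are in total conflict; the rule is undefined")
    return {focal: mass / (1.0 - conflict) for focal, mass in combined.items()}


if __name__ == "__main__":
    LOW, HIGH = frozenset({"low"}), frozenset({"high"})
    EITHER = LOW | HIGH  # ignorance: risk could be low or high
    sensor_a = {LOW: 0.6, EITHER: 0.4}   # illustrative masses
    sensor_b = {HIGH: 0.5, EITHER: 0.5}  # illustrative masses
    for focal, mass in dempster_combine(sensor_a, sensor_b).items():
        print(sorted(focal), round(mass, 4))
```

The set intersection is where exclusivity enters: "low" and "high" are treated as sharing nothing, so evidence for one is pure conflict with evidence for the other. Linguistic grades that overlap in practice violate that assumption, which is the gap the abstract says D numbers address.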

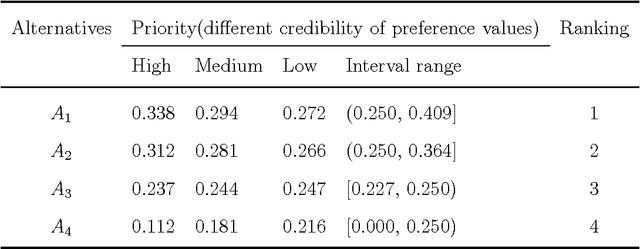

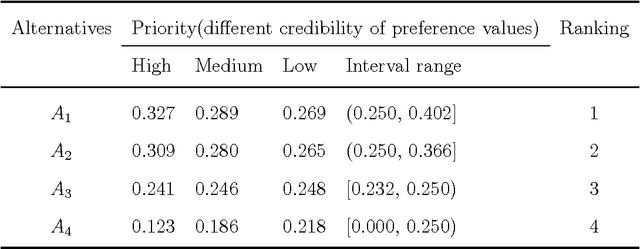

D-CFPR: D numbers extended consistent fuzzy preference relations

Mar 23, 2014

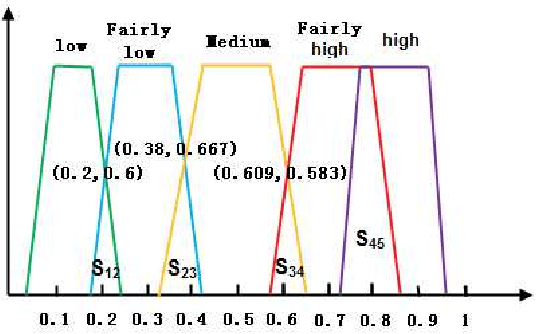

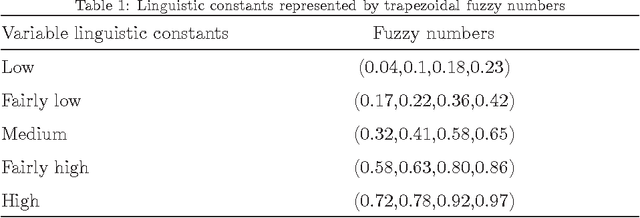

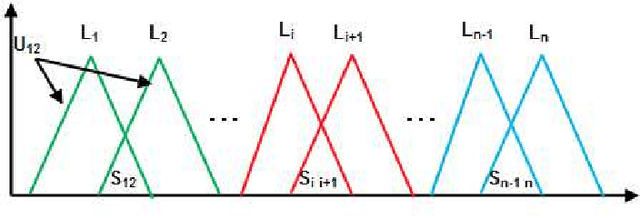

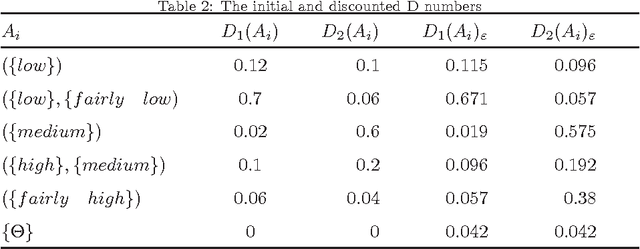

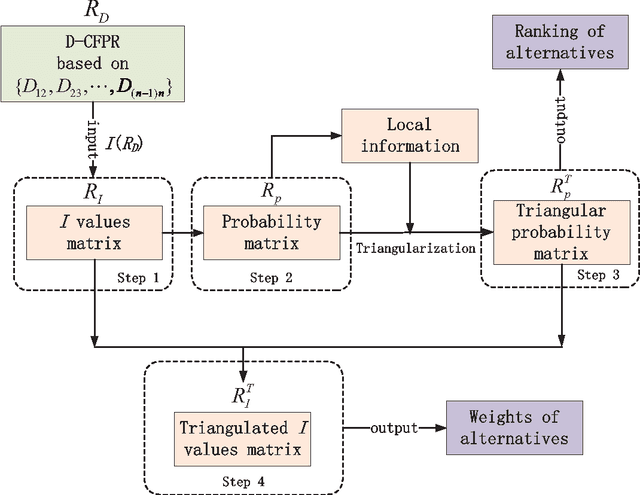

Abstract: How to express an expert's or a decision maker's preference for alternatives is an open issue. The consistent fuzzy preference relation (CFPR) has significant advantages in handling this problem because it can be constructed from a smaller number of pairwise comparisons and satisfies the additive transitivity property. However, the CFPR is incapable of dealing with cases involving uncertain and incomplete information. In this paper, a D numbers extended consistent fuzzy preference relation (D-CFPR) is proposed to overcome this weakness. The D-CFPR extends the classical CFPR by using a new model for expressing uncertain information, called D numbers. The D-CFPR inherits the merits of the classical CFPR and can be fully reduced to the classical CFPR. This study can be integrated into our previous study on the D-AHP (D numbers extended AHP) model to provide a systematic solution for multi-criteria decision making (MCDM).
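The "smaller number of pairwise comparisons" can be made concrete for the classical CFPR (not the D-CFPR extension). Under additive transitivity, p_ij + p_jk + p_ki = 3/2, a full n x n relation follows from the n - 1 comparisons between consecutive alternatives. The sketch below assumes that standard construction; the function name, the input values, and the final rescaling step for out-of-range entries are illustrative assumptions.

```python
def build_cfpr(adjacent):
    """Build a consistent fuzzy preference relation from n - 1 judgments.

    adjacent[i] is the preference degree of alternative i over
    alternative i + 1, each in [0, 1] (0.5 means indifference).
    """
    n = len(adjacent) + 1
    p = [[0.5] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            # Additive transitivity chained along i, i+1, ..., j.
            p[i][j] = sum(adjacent[i:j]) - (j - i - 1) / 2
            p[j][i] = 1.0 - p[i][j]  # additive reciprocity
    lowest = min(min(row) for row in p)
    if lowest < 0:
        # Entries fell outside [0, 1]; rescale with a map that keeps
        # reciprocity and additive transitivity intact.
        a = -lowest
        p = [[(x + a) / (1 + 2 * a) for x in row] for row in p]
    return p


if __name__ == "__main__":
    relation = build_cfpr([0.6, 0.7])  # 3 alternatives, 2 judgments
    for row in relation:
        print([round(x, 3) for x in row])
```

For n alternatives this needs n - 1 judgments instead of the n(n - 1)/2 required by a full pairwise comparison matrix, and consistency holds by construction rather than having to be checked afterwards.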

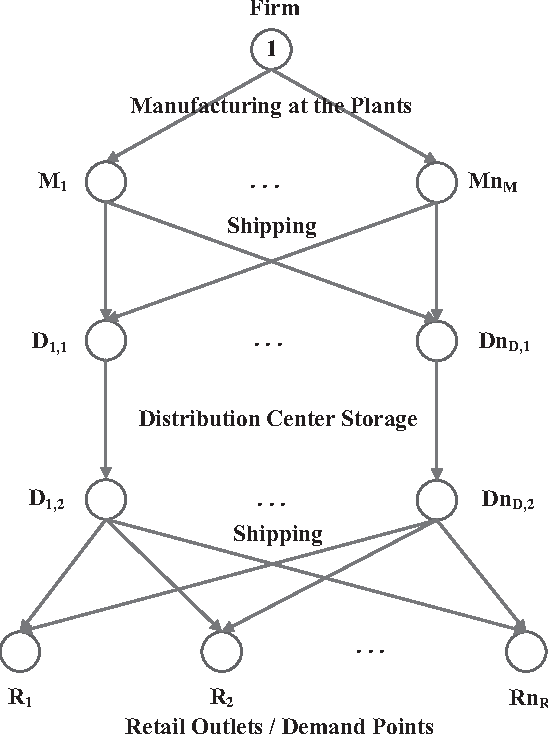

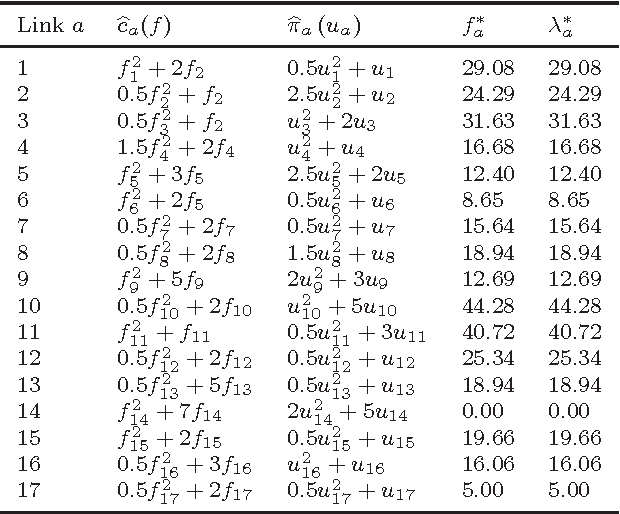

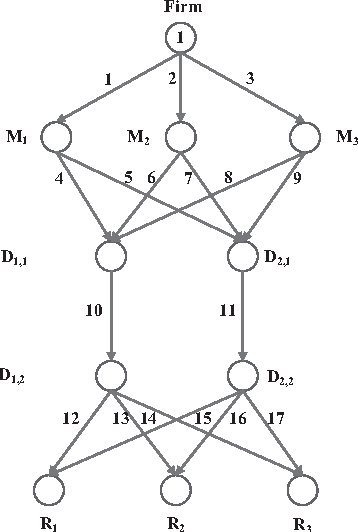

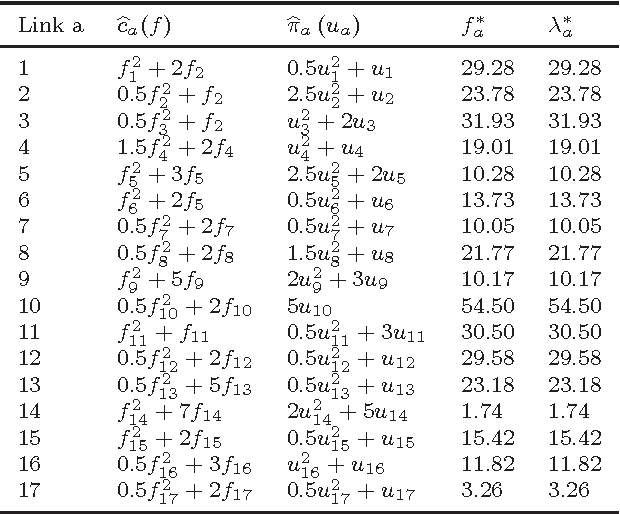

A Physarum-Inspired Approach to Optimal Supply Chain Network Design at Minimum Total Cost with Demand Satisfaction

Mar 21, 2014

Abstract: A supply chain is a system that moves products from a supplier to customers. Supply chains are ubiquitous and play a key role in all economic activities. Inspired by the biological principles of nutrient distribution in the protoplasmic networks of the slime mould Physarum polycephalum, we propose a novel algorithm for supply chain design. The algorithm handles supply networks in which capacity investments and product flows are variables. The networks are constrained by the need to satisfy product demands. Two features of the slime mould are adopted in our algorithm. The first is the continuity of the flux during the iterative process, which is used for real-time updates of the costs associated with the supply links. The second feature is adaptivity: the supply chain can converge to an equilibrium state when costs change. The practicality and flexibility of our algorithm are illustrated with numerical examples.

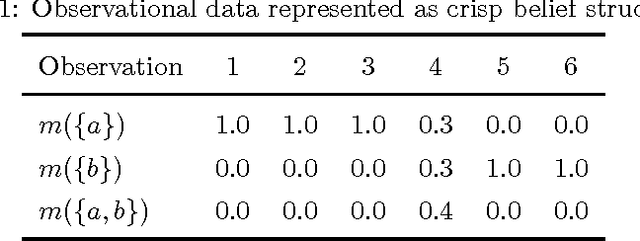

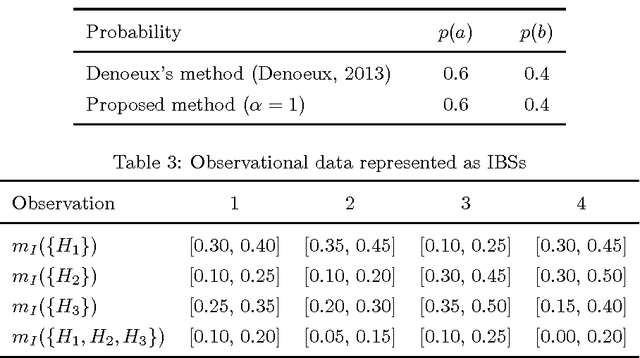

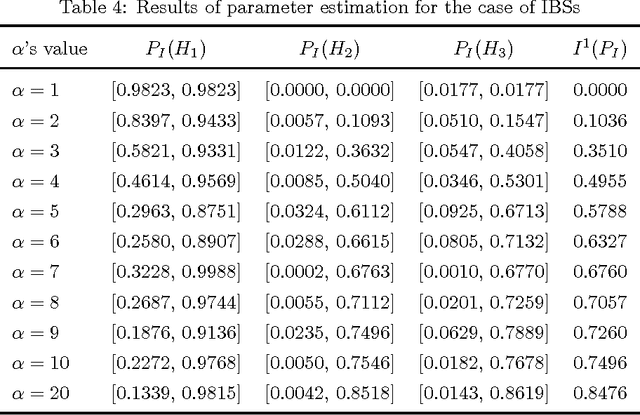

Parameter estimation based on interval-valued belief structures

Feb 15, 2014

Abstract: Parameter estimation based on uncertain data represented as belief structures is one of the latest problems in Dempster-Shafer theory. In this paper, a novel method is proposed for parameter estimation in the case where the belief structures are themselves uncertain and represented as interval-valued belief structures. The proposed method simultaneously considers maximization of the likelihood criterion and minimization of the estimated parameter's uncertainty. As an illustration, the proposed method is employed to estimate parameters for deterministic and uncertain belief structures, which demonstrates its effectiveness and versatility.

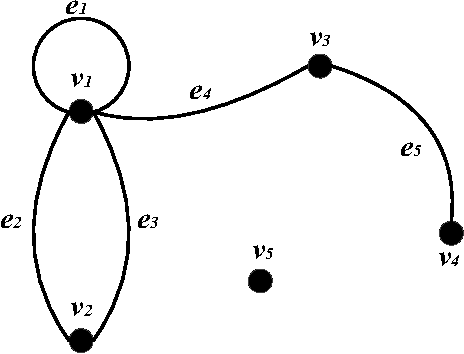

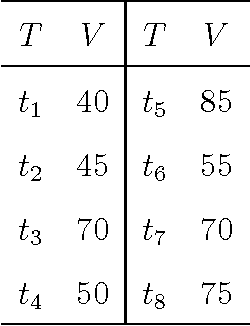

A Visibility Graph Averaging Aggregation Operator

Nov 17, 2013

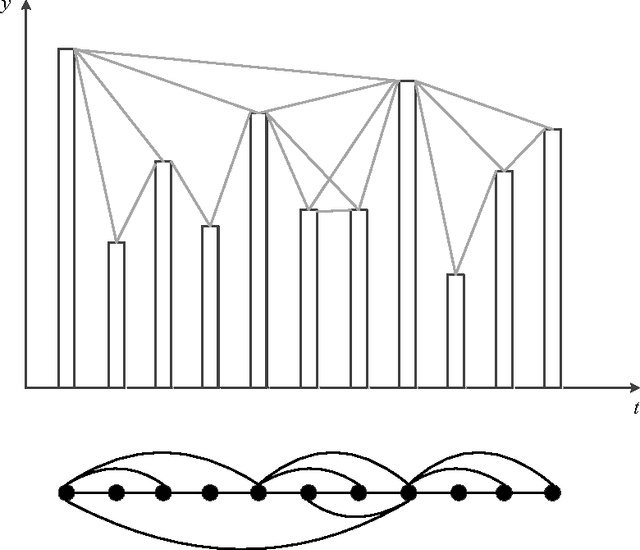

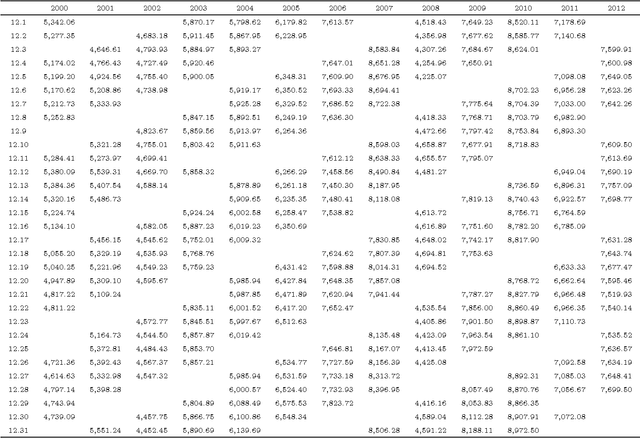

Abstract: The problem of aggregation is of considerable importance in many disciplines. In this paper, a new type of operator called the visibility graph averaging (VGA) aggregation operator is proposed. The operator is based on the visibility graph, which converts a time series into a graph. The weights are obtained according to the importance of the data in the visibility graph. Finally, the VGA operator is applied to the TAIEX database to illustrate its practicality. Compared with classic aggregation operators, it not only aggregates the data but also preserves the time information, and its weights are determined in a more reasonable way.
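The conversion of a time series into a graph can be sketched with the natural visibility criterion: two samples are linked when every sample between them lies strictly below the straight line joining them. The abstract does not say how the VGA weights are derived from the graph, so the degree-proportional weighting below is an assumption chosen for illustration, as are the function names and the sample series.

```python
from itertools import combinations


def visibility_graph(series):
    """Return the edge set of the natural visibility graph of a series."""
    edges = set()
    for a, b in combinations(range(len(series)), 2):
        ya, yb = series[a], series[b]
        # a and b see each other if each intermediate point lies
        # strictly below the line segment from (a, ya) to (b, yb).
        if all(
            series[c] < yb + (ya - yb) * (b - c) / (b - a)
            for c in range(a + 1, b)
        ):
            edges.add((a, b))
    return edges


def degree_weights(series):
    """Assumed weighting: each sample's weight is its share of total degree."""
    degree = [0] * len(series)
    for a, b in visibility_graph(series):
        degree[a] += 1
        degree[b] += 1
    total = sum(degree)
    if total == 0:  # fewer than two samples: fall back to uniform weights
        return [1.0 / len(series)] * len(series)
    return [d / total for d in degree]


def visibility_weighted_average(series):
    """Weighted average using the assumed degree-based weights."""
    return sum(w * y for w, y in zip(degree_weights(series), series))


if __name__ == "__main__":
    data = [1.0, 3.0, 2.0, 4.0]
    print(sorted(visibility_graph(data)))
    print(degree_weights(data))
    print(visibility_weighted_average(data))
```

Because edges depend on the order of the samples, permuting the series changes the weights, which is one way to read the abstract's claim that the operator preserves time information where an ordinary mean does not.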
