Xin-She Yang

Firefly Algorithm for Movable Antenna Arrays

Sep 06, 2024

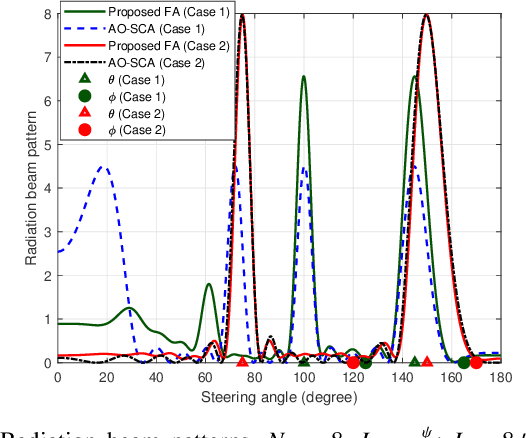

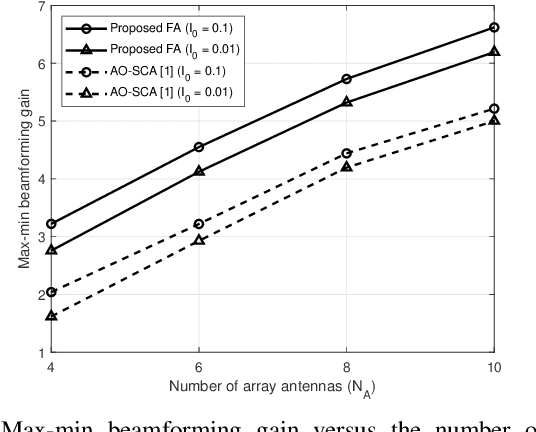

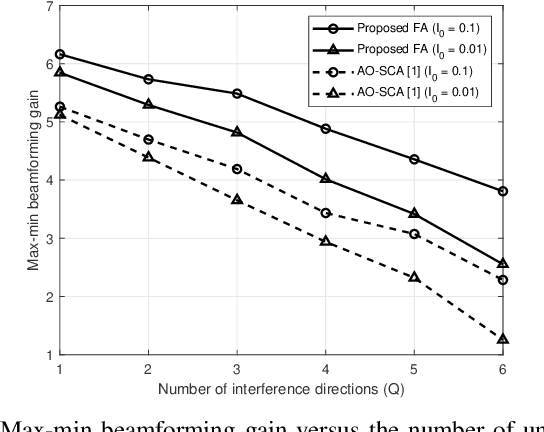

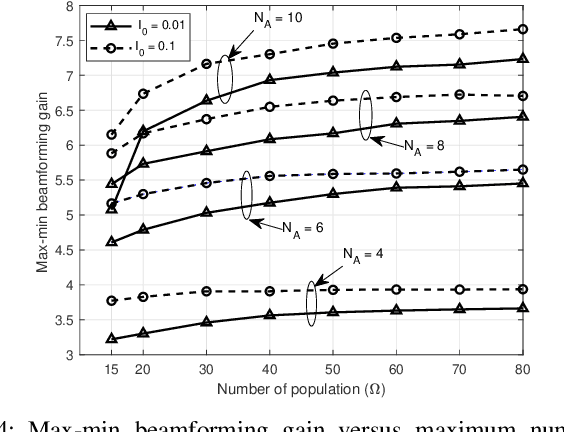

Abstract: This letter addresses a multivariate optimization problem for linear movable antenna arrays (MAAs). In particular, the position and beamforming vectors of the MAA under investigation are optimized simultaneously to maximize the minimum beamforming gain across several intended directions, while ensuring that interference levels at various unintended directions remain below specified thresholds. To this end, a swarm-intelligence-based firefly algorithm (FA) is introduced to obtain an effective solution to the optimization problem. Simulation results reveal the superior performance of the proposed FA approach compared to the state-of-the-art approach employing alternating optimization and successive convex approximation. This is attributed to the FA's effectiveness in handling non-convex multivariate and multimodal optimization problems without resorting to approximations.
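
For readers unfamiliar with the firefly algorithm, the following is a minimal sketch of the basic FA update on a toy problem. It is not the letter's antenna-array formulation: the `sphere` objective, the box bounds, the parameter defaults (`alpha`, `beta0`, `gamma`) and the step-size decay factor are all assumptions chosen for illustration.

```python
import math
import random


def firefly_minimize(f, dim, bounds, n_fireflies=15, n_iter=100,
                     alpha=0.2, beta0=1.0, gamma=1.0, seed=0):
    """Minimize f over a box with a basic firefly algorithm (illustrative)."""
    rng = random.Random(seed)
    lo, hi = bounds
    # Random initial positions inside the box.
    xs = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_fireflies)]
    costs = [f(x) for x in xs]
    best_idx = min(range(n_fireflies), key=costs.__getitem__)
    best_x, best_cost = list(xs[best_idx]), costs[best_idx]

    for _ in range(n_iter):
        for i in range(n_fireflies):
            for j in range(n_fireflies):
                # Firefly i moves toward any brighter (lower-cost) firefly j.
                if costs[j] < costs[i]:
                    r2 = sum((a - b) ** 2 for a, b in zip(xs[i], xs[j]))
                    # Attractiveness decays with squared distance.
                    beta = beta0 * math.exp(-gamma * r2)
                    xs[i] = [
                        min(hi, max(lo, a + beta * (b - a)
                                    + alpha * (hi - lo) * (rng.random() - 0.5)))
                        for a, b in zip(xs[i], xs[j])
                    ]
                    costs[i] = f(xs[i])
                    if costs[i] < best_cost:
                        best_x, best_cost = list(xs[i]), costs[i]
        alpha *= 0.97  # assumed decay: gradually reduce the random step size
    return best_x, best_cost


def sphere(x):
    """Toy objective (assumption): sum of squares, minimum at the origin."""
    return sum(v * v for v in x)


best_x, best_cost = firefly_minimize(sphere, dim=2, bounds=(-5.0, 5.0))
print(best_x, best_cost)
```

No gradients or convex approximations are involved: each move needs only objective evaluations, which is the property the abstract credits for the FA's handling of non-convex, multimodal problems.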

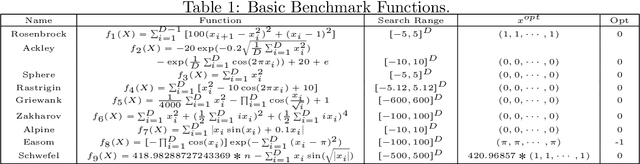

A Generalized Evolutionary Metaheuristic (GEM) Algorithm for Engineering Optimization

Jul 02, 2024

Abstract: Many optimization problems in engineering and industrial design applications can be formulated as optimization problems with highly nonlinear objectives, subject to multiple complex constraints. Solving such optimization problems requires sophisticated algorithms and optimization techniques. A major trend in recent years is the use of nature-inspired metaheuristic algorithms (NIMAs). Despite the popularity of nature-inspired metaheuristic algorithms, there are still some challenging issues and open problems to be resolved. Two main issues related to current NIMAs are that there are over 540 algorithms in the literature, and that there is no unified framework to understand the search mechanisms of different algorithms. Therefore, this paper attempts to analyse some similarities and differences among different algorithms and then presents a generalized evolutionary metaheuristic (GEM) in an attempt to unify some of the existing algorithms. After a brief discussion of some insights into nature-inspired algorithms and some open problems, we propose a generalized evolutionary metaheuristic algorithm to unify more than 20 different algorithms so as to understand their main steps and search mechanisms. We then test the unified GEM using 15 test benchmarks to validate its performance. Finally, further research topics are briefly discussed.

* 17 pages, 2 figures and 4 tables

Parameter Tuning of the Firefly Algorithm by Standard Monte Carlo and Quasi-Monte Carlo Methods

Jul 01, 2024

Abstract: Almost all optimization algorithms have algorithm-dependent parameters, and the setting of such parameter values can significantly influence the behavior of the algorithm under consideration. Thus, proper parameter tuning should be carried out to ensure that the algorithm used for optimization performs well and is sufficiently robust for solving different types of optimization problems. In this study, the Firefly Algorithm (FA) is used to evaluate the influence of its parameter values on its efficiency. Parameter values are randomly initialized using both the standard Monte Carlo method and the Quasi-Monte Carlo method. The values are then used for tuning the FA. Two benchmark functions and a spring design problem are used to test the robustness of the tuned FA. From the preliminary findings, it can be deduced that both the Monte Carlo method and the Quasi-Monte Carlo method produce similar results in terms of optimal fitness values. Numerical experiments using the two different methods on both benchmark functions and the spring design problem showed no major variations in the final fitness values, irrespective of the different sample values selected during the simulations. This insensitivity indicates the robustness of the FA.
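
The difference between the two sampling schemes can be sketched in a few lines. The example below draws candidate parameter settings with pseudo-random (Monte Carlo) sampling and with a Halton low-discrepancy sequence (one common Quasi-Monte Carlo choice). The abstract does not say which low-discrepancy sequence the study uses, and the parameter ranges for `alpha`, `beta0` and `gamma` are hypothetical.

```python
import random


def van_der_corput(index, base):
    """Return the index-th element (index >= 1) of the van der Corput sequence."""
    value, denom = 0.0, 1.0
    while index > 0:
        index, digit = divmod(index, base)
        denom *= base
        value += digit / denom
    return value


def halton_samples(n, ranges, bases=(2, 3, 5, 7)):
    """Quasi-Monte Carlo: Halton points scaled to each parameter range."""
    return [
        [lo + (hi - lo) * van_der_corput(k, bases[d])
         for d, (lo, hi) in enumerate(ranges)]
        for k in range(1, n + 1)
    ]


def monte_carlo_samples(n, ranges, seed=0):
    """Standard Monte Carlo: independent uniform draws in each range."""
    rng = random.Random(seed)
    return [[rng.uniform(lo, hi) for lo, hi in ranges] for _ in range(n)]


# Hypothetical tuning ranges for (alpha, beta0, gamma); not taken from the paper.
param_ranges = [(0.0, 1.0), (0.0, 1.0), (0.1, 10.0)]
qmc = halton_samples(8, param_ranges)
mc = monte_carlo_samples(8, param_ranges)
for q, m in zip(qmc, mc):
    print(q, m)
```

Each sampled row would then be passed to the optimizer as one parameter setting. The Halton points cover the ranges more evenly than the same number of pseudo-random points, which is the usual motivation for Quasi-Monte Carlo sampling.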

Generalized Firefly Algorithm for Optimal Transmit Beamforming

Oct 27, 2023

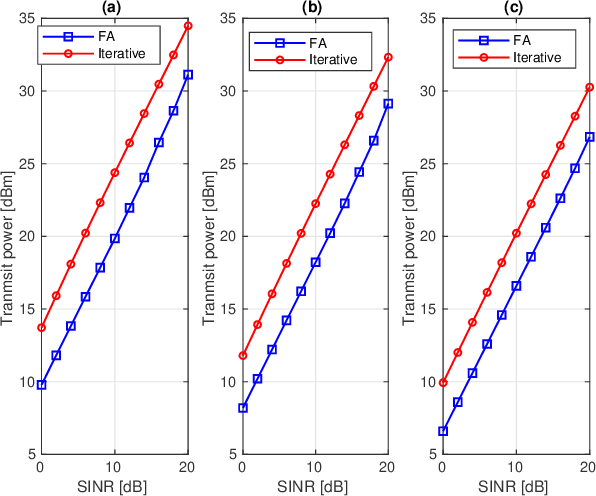

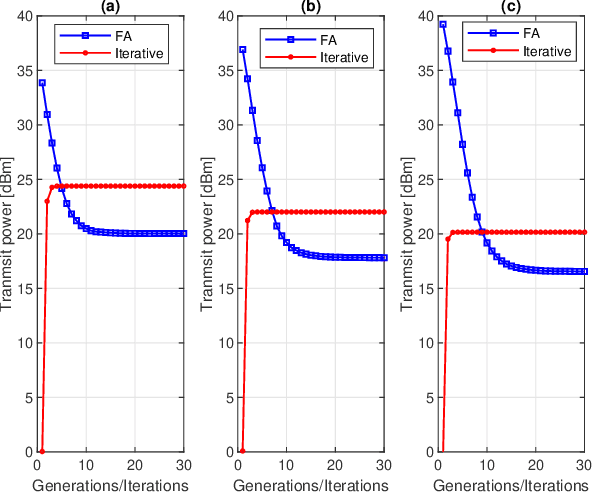

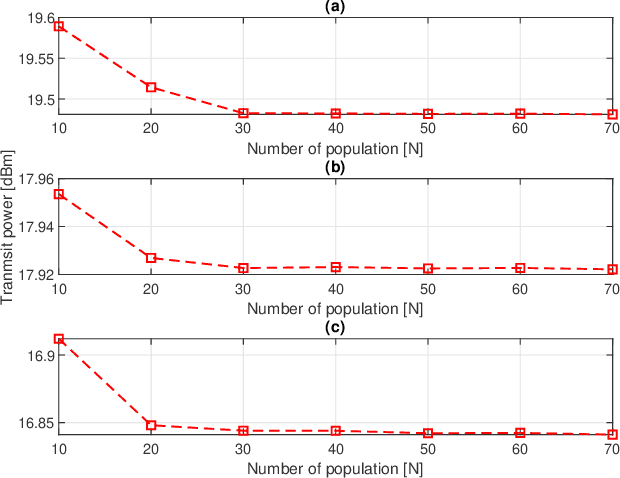

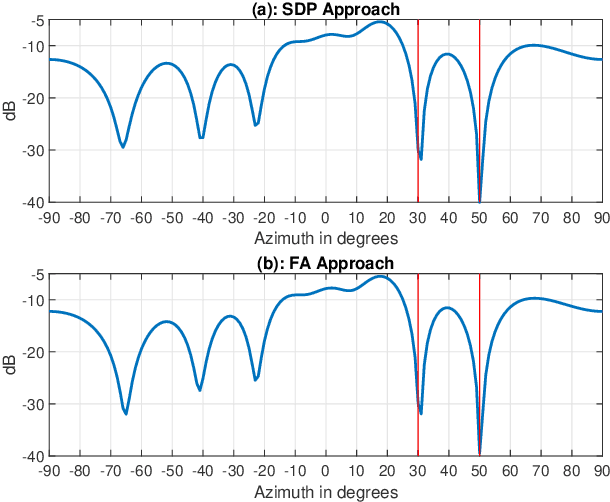

Abstract: This paper proposes a generalized Firefly Algorithm (FA) to solve an optimization framework whose objective function and constraints are multivariate functions of independent optimization variables. Four representative examples show how the proposed generalized FA can be adopted to solve downlink beamforming problems: classic transmit beamforming, cognitive beamforming, reconfigurable-intelligent-surfaces-aided (RIS-aided) transmit beamforming, and RIS-aided wireless power transfer (WPT). Complexity analyses indicate that, in large-antenna regimes, the proposed FA approaches require less computational complexity than their corresponding interior point methods (IPMs), yet demand higher complexity than the iterative and successive convex approximation (SCA) approaches. Simulation results reveal that the proposed FA attains the same global optimal solution as that of the IPM for an optimization problem in cognitive beamforming. On the other hand, the proposed FA approaches outperform the iterative, IPM and SCA approaches by obtaining better solutions for the optimization problems in classic transmit beamforming, RIS-aided transmit beamforming and RIS-aided WPT, respectively.

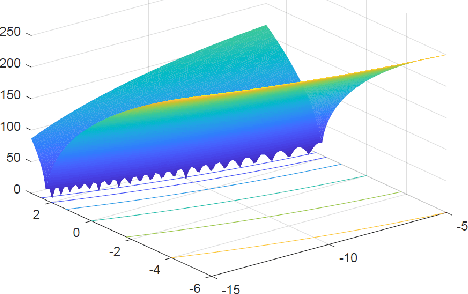

Ten New Benchmarks for Optimization

Aug 30, 2023

Abstract: Benchmarks are used for testing new optimization algorithms and their variants to evaluate their performance. Most existing benchmarks are smooth functions. This chapter introduces ten new benchmarks with different properties, including noise, discontinuity, parameter estimation and unknown paths.

Review of Parameter Tuning Methods for Nature-Inspired Algorithms

Aug 30, 2023

Abstract: Almost all optimization algorithms have algorithm-dependent parameters, and the setting of such parameter values can strongly influence the behaviour of the algorithm under consideration. Thus, proper parameter tuning should be carried out to ensure that the algorithm used for optimization performs well and is sufficiently robust for solving different types of optimization problems. This chapter reviews some of the main methods for parameter tuning and then highlights the important issues concerning the latest developments in parameter tuning. A few open problems are also discussed, with some recommendations for future research.

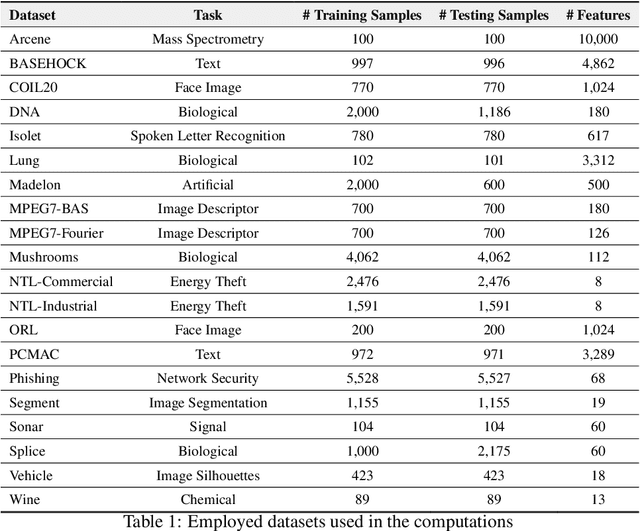

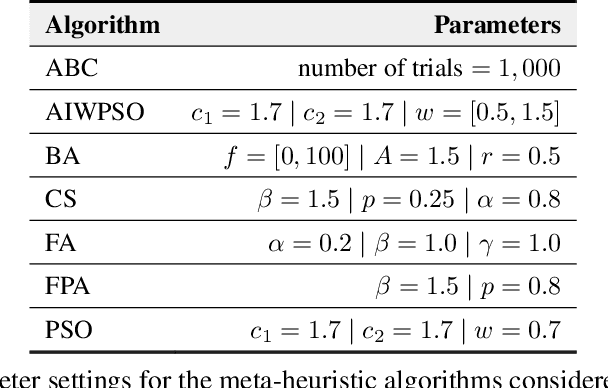

A Nature-Inspired Feature Selection Approach based on Hypercomplex Information

Jan 14, 2021

Abstract: Feature selection for a given model can be transformed into an optimization task. The essential idea behind it is to find the most suitable subset of features according to some criterion. Nature-inspired optimization can mitigate this problem by producing compelling yet straightforward solutions when dealing with complicated fitness functions. Additionally, new mathematical representations, such as quaternions and octonions, are being used to handle higher-dimensional spaces. In this context, we introduce a meta-heuristic optimization framework for hypercomplex-based feature selection, where hypercomplex numbers are mapped to real-valued solutions and then transferred onto a boolean hypercube by a sigmoid function. The proposed hypercomplex feature selection is tested with several meta-heuristic algorithms and hypercomplex representations, achieving results comparable to some state-of-the-art approaches. The good results achieved by the proposed approach make it a promising tool for feature selection research.

* 17 pages, 7 figures
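
The mapping described in the abstract (hypercomplex number, then real value, then boolean decision via a sigmoid) can be illustrated with a small sketch. The specifics here are assumptions rather than the paper's definitions: one quaternion per feature with components in [0, 1], the Euclidean norm as the hypercomplex-to-real map, a rescaling to a symmetric interval controlled by a hypothetical `scale` parameter, and a fixed 0.5 threshold on the sigmoid output.

```python
import math


def quaternion_norm(q):
    """Euclidean norm of a quaternion given as a 4-tuple of reals."""
    return math.sqrt(sum(c * c for c in q))


def to_feature_mask(quaternions, scale=6.0):
    """Map one quaternion per feature to a 0/1 selection flag.

    Assumes every component lies in [0, 1], so the norm lies in [0, 2].
    """
    mask = []
    for q in quaternions:
        unit = quaternion_norm(q) / 2.0          # real value in [0, 1]
        centred = scale * (2.0 * unit - 1.0)     # real value in [-scale, scale]
        prob = 1.0 / (1.0 + math.exp(-centred))  # sigmoid transfer function
        mask.append(1 if prob > 0.5 else 0)      # assumed fixed threshold
    return mask


# Three hypothetical features, each encoded by one quaternion.
candidate = [
    (0.9, 0.8, 0.7, 0.9),
    (0.1, 0.2, 0.1, 0.0),
    (0.5, 0.6, 0.9, 0.4),
]
print(to_feature_mask(candidate))  # prints [1, 0, 1]
```

Binary metaheuristics often compare the sigmoid output against a random number instead of a fixed threshold. The fixed threshold is used here only to keep the example deterministic.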

COEBA: A Coevolutionary Bat Algorithm for Discrete Evolutionary Multitasking

Mar 24, 2020

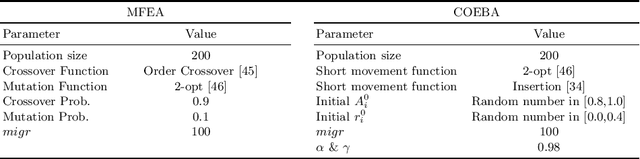

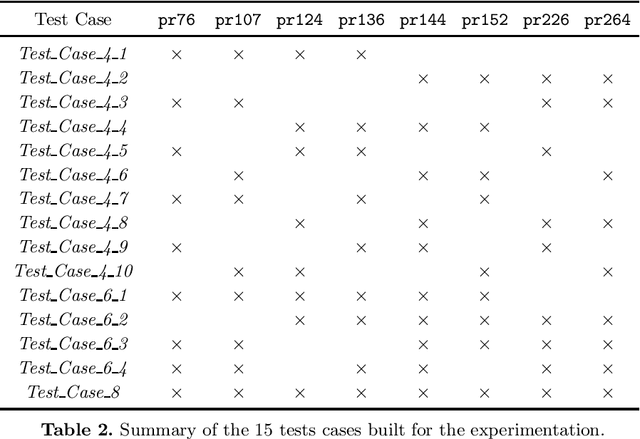

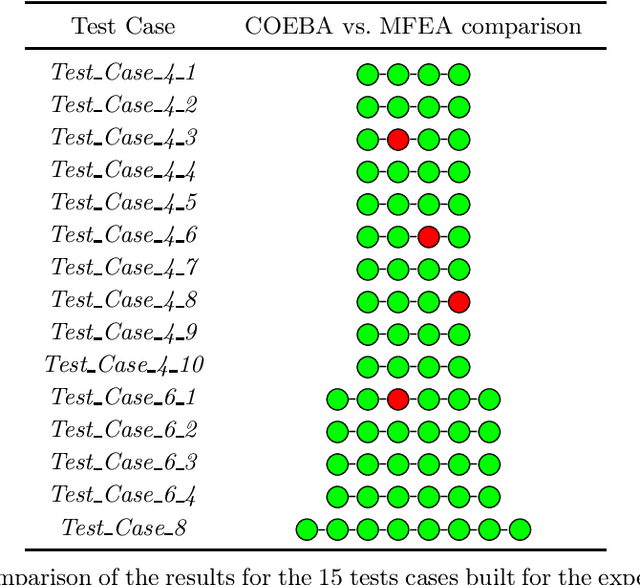

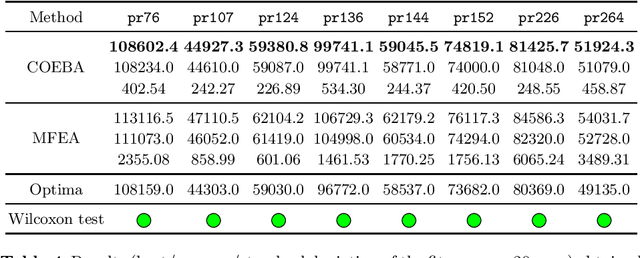

Abstract: Multitasking optimization is an emerging research field which has attracted a lot of attention in the scientific community. The main purpose of this paradigm is to solve multiple optimization problems or tasks simultaneously by conducting a single search process. The main catalyst for reaching this objective is to exploit possible synergies and complementarities among the tasks to be optimized, helping each other by virtue of the transfer of knowledge among them (thereby being referred to as Transfer Optimization). In this context, Evolutionary Multitasking addresses Transfer Optimization problems by resorting to concepts from Evolutionary Computation for simultaneously solving the tasks at hand. This work contributes to this trend by proposing a novel algorithmic scheme for dealing with multitasking environments. The proposed approach, coined as Coevolutionary Bat Algorithm, finds its inspiration in concepts from both co-evolutionary strategies and the metaheuristic Bat Algorithm. We compare the performance of our proposed method with that of its Multifactorial Evolutionary Algorithm counterpart over 15 different multitasking setups, composed of eight reference instances of the discrete Traveling Salesman Problem. The experimentation and results stemming therefrom support the main hypothesis of this study: the proposed Coevolutionary Bat Algorithm is a promising meta-heuristic for solving Evolutionary Multitasking scenarios.

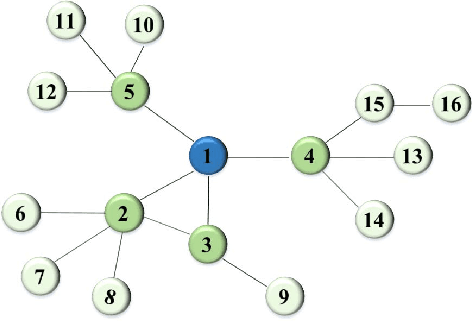

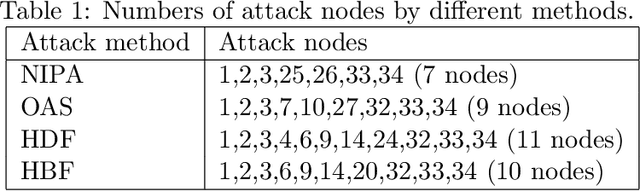

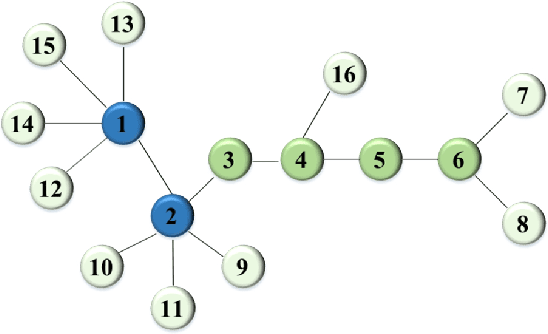

Neighborhood Information-based Probabilistic Algorithm for Network Disintegration

Mar 08, 2020

Abstract: Many real-world applications can be modelled as complex networks, and such networks include the Internet, epidemic disease networks, transport networks, power grids, protein-folding structures and others. Network integrity and robustness are important to ensure that crucial networks are protected and undesired harmful networks can be dismantled. Network structure and integrity can be controlled by a set of key nodes, and finding the optimal combination of nodes in a network to ensure network structure and integrity can be an NP-complete problem. Despite extensive studies, existing methods have many limitations and there are still many unresolved problems. This paper presents a probabilistic approach based on neighborhood information and node importance, namely, the neighborhood information-based probabilistic algorithm (NIPA). We also define a new centrality-based importance measure (IM), which combines the contribution ratios of the neighbor nodes of each target node and two-hop node information. Our proposed NIPA has been tested on different network benchmarks and compared with three other methods: optimal attack strategy (OAS), high betweenness first (HBF) and high degree first (HDF). Experiments suggest that the proposed NIPA is the most effective among all four methods. In general, NIPA can identify the most crucial node combination with higher effectiveness, and the set of optimal key nodes found by our proposed NIPA is much smaller than that found by heuristic centrality prediction. In addition, many previously neglected weakly connected nodes are identified, which become a crucial part of the newly identified optimal nodes. Thus, revised strategies for protection are recommended to safeguard network integrity. Further key issues and future research topics are also discussed.

* 25 pages, 13 figures, 2 tables
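
To make the disintegration setting concrete, here is a minimal sketch of the high degree first (HDF) baseline named in the abstract, not of NIPA itself. It assumes an adaptive variant that recomputes degrees after every removal and measures damage by the size of the largest connected component. The toy graph and the function names are illustrative.

```python
def largest_component_size(adj):
    """Size of the largest connected component of an undirected graph."""
    seen, best = set(), 0
    for start in adj:
        if start in seen:
            continue
        stack, size = [start], 0
        seen.add(start)
        while stack:  # depth-first traversal of one component
            node = stack.pop()
            size += 1
            for nb in adj[node]:
                if nb not in seen:
                    seen.add(nb)
                    stack.append(nb)
        best = max(best, size)
    return best


def high_degree_first(adj, n_remove):
    """Remove n_remove nodes, always taking the current highest-degree node."""
    adj = {u: set(vs) for u, vs in adj.items()}  # work on a copy
    removed = []
    for _ in range(min(n_remove, len(adj))):
        target = max(adj, key=lambda u: len(adj[u]))
        for nb in adj.pop(target):
            adj[nb].discard(target)  # drop edges to the removed node
        removed.append(target)
    return removed, largest_component_size(adj)


# Toy graph: hub 0 joined to nodes 1..4, plus a short chain 4-5-6.
graph = {0: {1, 2, 3, 4}, 1: {0}, 2: {0}, 3: {0}, 4: {0, 5}, 5: {4, 6}, 6: {5}}
removed, giant = high_degree_first(graph, n_remove=1)
print(removed, giant)  # prints [0] 3
```

Removing the hub splits the seven-node graph into three isolated nodes and one three-node chain. A degree-only rule like this ignores the weakly connected nodes that the abstract reports NIPA can identify.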

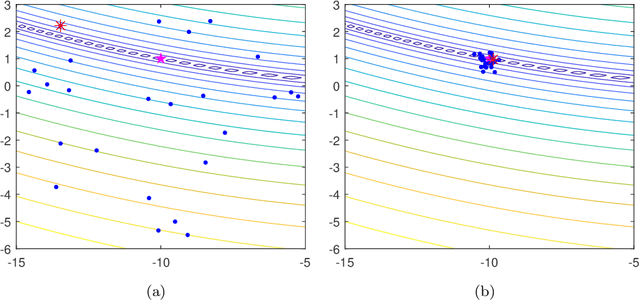

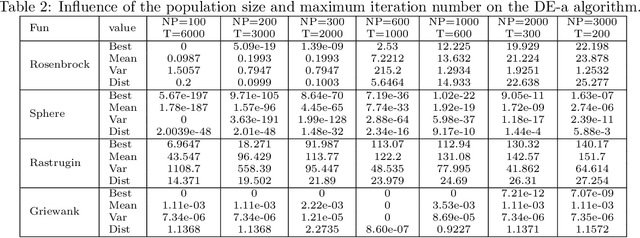

Influence of Initialization on the Performance of Metaheuristic Optimizers

Mar 08, 2020

Abstract: All metaheuristic optimization algorithms require some initialization, and the initialization for such optimizers is usually carried out randomly. However, initialization can have a significant influence on the performance of such algorithms. This paper presents a systematic comparison of 22 different initialization methods on the convergence and accuracy of five optimizers: differential evolution (DE), particle swarm optimization (PSO), cuckoo search (CS), artificial bee colony (ABC) algorithm and genetic algorithm (GA). We have used 19 different test functions with different properties and modalities to compare the possible effects of initialization, population sizes and the numbers of iterations. Rigorous statistical ranking tests indicate that 43.37% of the functions using the DE algorithm show significant differences for different initialization methods, while 73.68% of the functions using both PSO and CS algorithms are significantly affected by different initialization methods. The simulations show that DE is less sensitive to initialization, while both PSO and CS are more sensitive to initialization. In addition, under the condition of the same maximum number of function evaluations (FEs), the population size can also have a strong effect. Particle swarm optimization usually requires a larger population, while the cuckoo search needs only a small population size. Differential evolution depends more heavily on the number of iterations; a relatively small population with more iterations can lead to better results. Furthermore, ABC is more sensitive to initialization, while such initialization has little effect on GA. Some probability distributions such as the beta distribution, exponential distribution and Rayleigh distribution can usually lead to better performance. The implications of this study and further research topics are also discussed in detail.

* 39 pages, 10 figures, 20 tables
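
As a rough illustration of what initializing from different distributions can look like, the sketch below draws a starting population from a uniform, beta, exponential or Rayleigh distribution and maps it into the search box. The shape and scale values, and the clipping of samples to the unit interval before scaling, are assumptions. The paper's 22 initialization methods are not reproduced here.

```python
import math
import random


def init_population(n, dim, lo, hi, dist="uniform", seed=0):
    """Draw n points in [lo, hi]^dim from a chosen distribution (illustrative)."""
    rng = random.Random(seed)

    def unit_sample():
        # Each branch yields a value that is then clipped to [0, 1].
        if dist == "uniform":
            u = rng.random()
        elif dist == "beta":
            u = rng.betavariate(2.0, 2.0)   # assumed shape parameters
        elif dist == "exponential":
            u = rng.expovariate(4.0)        # assumed rate (mean 0.25)
        elif dist == "rayleigh":
            # Inverse-transform sampling with assumed scale sigma = 0.3.
            u = 0.3 * math.sqrt(-2.0 * math.log(1.0 - rng.random()))
        else:
            raise ValueError(f"unknown distribution: {dist}")
        return min(1.0, max(0.0, u))

    return [[lo + (hi - lo) * unit_sample() for _ in range(dim)]
            for _ in range(n)]


for name in ("uniform", "beta", "exponential", "rayleigh"):
    population = init_population(n=5, dim=2, lo=-5.0, hi=5.0, dist=name)
    print(name, population[0])
```

Any population-based optimizer can take the returned list as its starting positions, so the initialization scheme can be varied while the optimizer and its evaluation budget stay fixed.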
