Ruqayya Awan

INSIGHT: Spatially resolved survival modelling from routine histology crosslinked with molecular profiling reveals prognostic epithelial-immune axes in stage II/III colorectal cancer

Dec 24, 2025

Abstract: Routine histology contains rich prognostic information in stage II/III colorectal cancer, much of which is embedded in complex spatial tissue organisation. We present INSIGHT, a graph neural network that predicts survival directly from routine histology images. Trained and cross-validated on TCGA (n=342) and SURGEN (n=336), INSIGHT produces patient-level spatially resolved risk scores. Large independent validation showed superior prognostic performance compared with pTNM staging (C-index 0.68-0.69 vs 0.44-0.58). INSIGHT spatial risk maps recapitulated canonical prognostic histopathology and identified nuclear solidity and circularity as quantitative risk correlates. Integrating spatial risk with data-driven spatial transcriptomic signatures, spatial proteomics, bulk RNA-seq, and single-cell references revealed an epithelium-immune risk manifold capturing epithelial dedifferentiation and fetal programs, myeloid-driven stromal states including $\mathrm{SPP1}^{+}$ macrophages and $\mathrm{LAMP3}^{+}$ dendritic cells, and adaptive immune dysfunction. This analysis exposed patient-specific epithelial heterogeneity, stratification within MSI-High tumours, and high-risk routes of CDX2/HNF4A loss and CEACAM5/6-associated proliferative programs, highlighting coordinated therapeutic vulnerabilities.
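The C-index quoted in this abstract measures how often a model ranks pairs of patients in the right order of risk. As a rough illustration only, here is a minimal sketch of Harrell's concordance index in plain Python. The function name and the toy data are assumptions made for this example; they are not taken from the INSIGHT paper or its code.

```python
from itertools import combinations


def concordance_index(times, events, risks):
    """Harrell's C-index: share of comparable patient pairs ranked correctly.

    times  -- observed follow-up time per patient
    events -- 1 if the event was observed, 0 if the patient was censored
    risks  -- predicted risk score (higher means worse expected outcome)
    """
    concordant = 0.0
    comparable = 0
    for i, j in combinations(range(len(times)), 2):
        # Let `a` be the patient with the shorter observed time.
        a, b = (i, j) if times[i] < times[j] else (j, i)
        # A pair is comparable only if the times differ and the
        # earlier time corresponds to an observed event.
        if times[a] == times[b] or not events[a]:
            continue
        comparable += 1
        if risks[a] > risks[b]:
            concordant += 1.0   # earlier event has the higher risk: correct
        elif risks[a] == risks[b]:
            concordant += 0.5   # tied risk scores count as half
    if comparable == 0:
        raise ValueError("no comparable pairs")
    return concordant / comparable


# Toy data (hypothetical): four patients, the third one censored.
toy_times = [2, 4, 6, 8]
toy_events = [1, 1, 0, 1]
toy_risks = [0.9, 0.6, 0.7, 0.1]
toy_c_index = concordance_index(toy_times, toy_events, toy_risks)
```

A value of 0.5 corresponds to random ranking and 1.0 to perfect ranking, which is why a C-index below 0.5 indicates ranking worse than chance on that cohort.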

From Traditional to Deep Learning Approaches in Whole Slide Image Registration: A Methodological Review

Feb 26, 2025

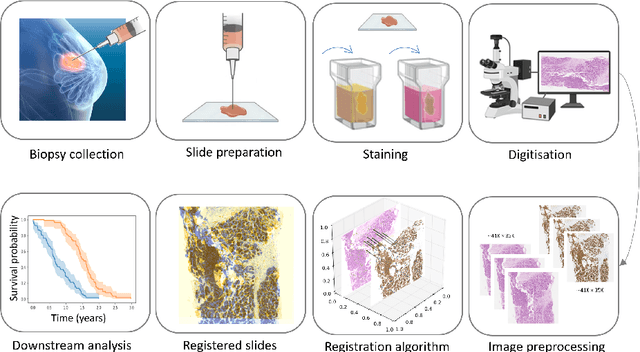

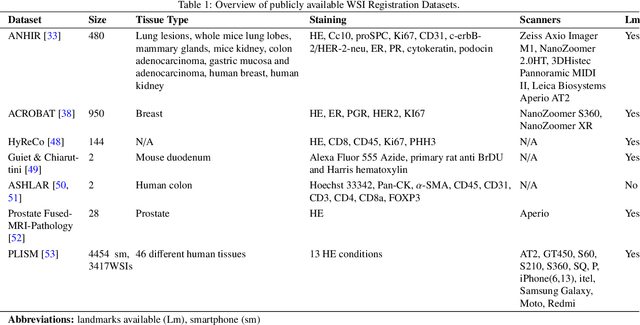

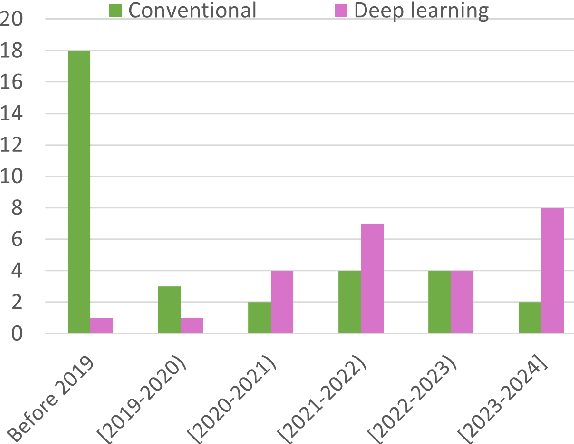

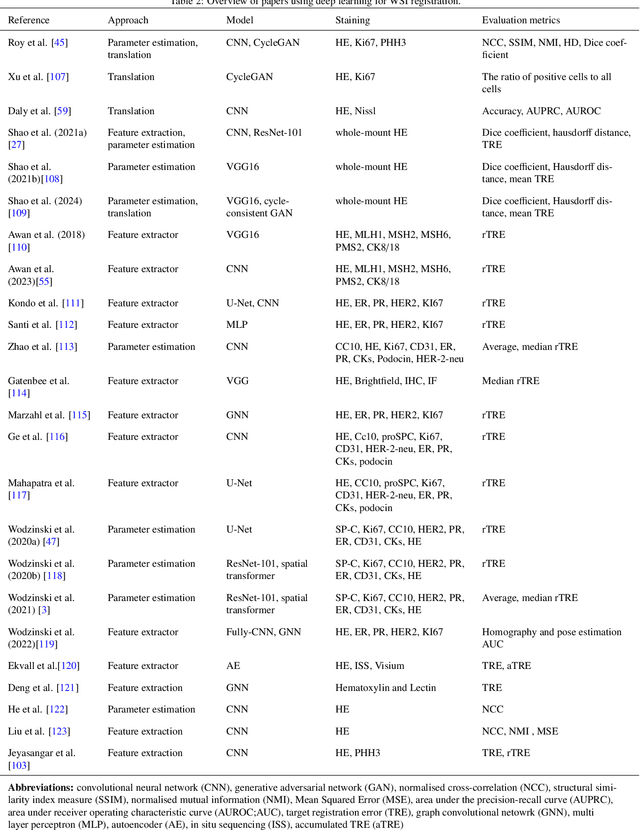

Abstract: Whole slide image (WSI) registration is an essential task for analysing the tumour microenvironment (TME) in histopathology. It involves the alignment of spatial information between WSIs of the same section or serial sections of a tissue sample. The tissue sections are usually stained with single or multiple biomarkers before imaging, and the goal is to identify neighbouring nuclei along the Z-axis for creating a 3D image or identifying subclasses of cells in the TME. This task is considerably more challenging than registration of radiology images, such as magnetic resonance imaging or computed tomography, for several reasons. These include the gigapixel size of the images, variations in appearance between differently stained tissues, changes in structure and morphology between non-consecutive sections, and the presence of artefacts, tears, and deformations. The literature currently lacks a review of existing approaches and their limitations, as well as the challenges and opportunities they present. We aim to provide a comprehensive understanding of the available approaches and their application for various purposes. Furthermore, we investigate current deep learning methods used for WSI registration, emphasising their diverse methodologies. We examine the available datasets and explore tools and software employed in the field. Finally, we identify open challenges and potential future trends in this area of research.

Mimicking a Pathologist: Dual Attention Model for Scoring of Gigapixel Histology Images

Feb 19, 2023

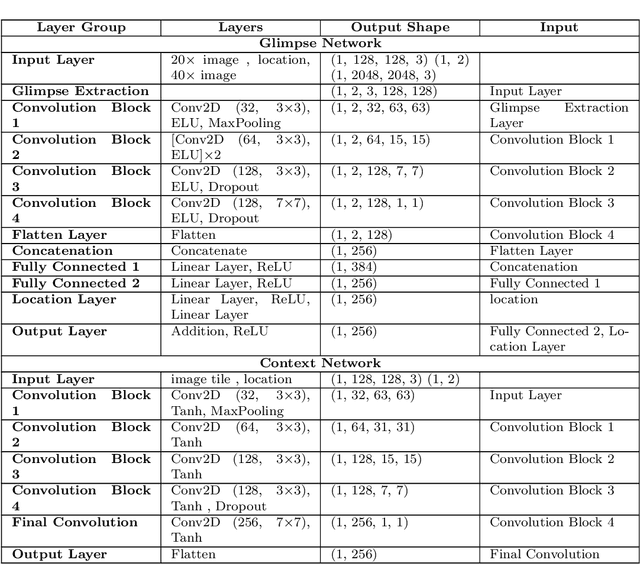

Abstract: Major challenges in the automated processing of whole slide images (WSIs) include their sheer size, multiple magnification levels, and high resolution. Utilizing these images directly in AI frameworks is computationally expensive due to memory constraints, while downsampling WSIs incurs information loss, and splitting WSIs into tiles and patches results in loss of important contextual information. We propose a novel dual attention approach, consisting of two main components, to mimic visual examination by a pathologist. The first component is a soft attention model which takes as input a high-level view of the WSI to determine various regions of interest. We employ a custom sampling method to extract diverse and spatially distinct image tiles from selected high attention areas. The second component is a hard attention classification model, which further extracts a sequence of multi-resolution glimpses from each tile for classification. Since hard attention is non-differentiable, we train this component using reinforcement learning and predict the location of glimpses without processing all patches of a given tile, thereby aligning with a pathologist's way of diagnosis. We train our components both separately and in an end-to-end fashion using a joint loss function to demonstrate the efficacy of our proposed model. We apply our proposed model to two different IHC use cases: HER2 prediction in breast cancer, and prediction of Intact/Loss status of two MMR biomarkers in colorectal cancer. We show that the proposed model achieves accuracy comparable to state-of-the-art methods while only processing a small fraction of the WSI at highest magnification.
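The abstract does not spell out the custom sampling method, so the following is only one plausible way to pick "diverse and spatially distinct" tiles from a soft attention map: take cells greedily in order of attention score and reject any cell that lies too close to one already chosen. The function name, the grid representation, and the Chebyshev distance rule are all assumptions made for this sketch, not the authors' procedure.

```python
def sample_distinct_tiles(attention, num_tiles, min_dist):
    """Greedily pick up to `num_tiles` high-attention grid cells.

    attention -- 2D list of attention scores over a coarse tile grid
    min_dist  -- minimum Chebyshev distance (in grid cells) between picks
    Returns a list of (row, col) positions in order of selection.
    """
    cells = [
        (score, r, c)
        for r, row in enumerate(attention)
        for c, score in enumerate(row)
    ]
    # Highest attention first; Python's sort is stable, so ties keep grid order.
    cells.sort(key=lambda cell: -cell[0])

    chosen = []
    for _score, r, c in cells:
        far_enough = all(
            max(abs(r - pr), abs(c - pc)) >= min_dist for pr, pc in chosen
        )
        if far_enough:
            chosen.append((r, c))
            if len(chosen) == num_tiles:
                break
    return chosen


# Hypothetical 3x3 attention map with a hot spot in the top-left corner.
toy_attention = [
    [0.9, 0.8, 0.1],
    [0.7, 0.2, 0.1],
    [0.1, 0.1, 0.6],
]
toy_tiles = sample_distinct_tiles(toy_attention, num_tiles=2, min_dist=2)
```

In the toy map, the second and third highest scores sit next to the top pick and are skipped, so the second tile comes from the opposite corner instead.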

Deep Feature based Cross-slide Registration

Feb 27, 2022

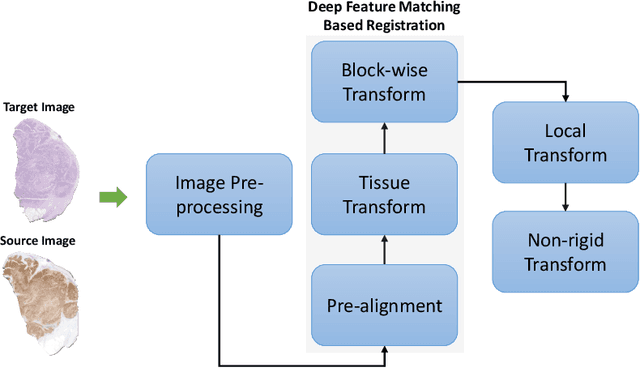

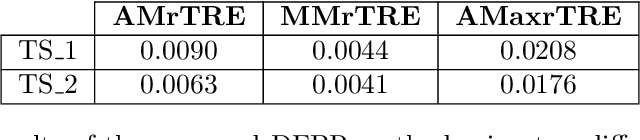

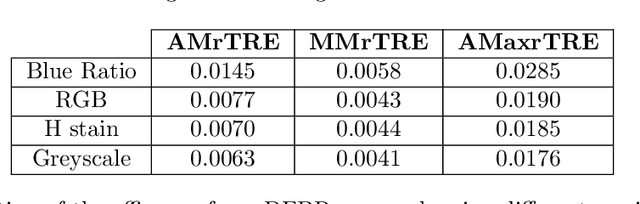

Abstract: Cross-slide image analysis provides additional information compared with single-slide analysis by examining the expression of different biomarkers. Slides stained with different biomarkers are analysed side by side, which may reveal unknown relations between the biomarkers. During slide preparation, a tissue section may be placed at an arbitrary orientation relative to other sections of the same tissue block. The problem is compounded by the fact that tissue contents are likely to change from one section to the next, and there may be unique artefacts on some of the slides. This makes registration of each section to a reference section of the same tissue block an important prerequisite for any cross-slide analysis. We propose a deep feature based registration (DFBR) method which utilises data-driven features to estimate the rigid transformation. We adopt a multi-stage strategy to improve the quality of registration. We also develop a visualisation tool to view registered pairs of WSIs at different magnifications. With the help of this tool, one can apply a transformation on the fly without the need to generate a transformed source WSI in pyramidal form. We compare the performance of data-driven features with that of hand-crafted features on the COMET dataset. Our approach can align the images with low registration errors. Generally, the success of non-rigid registration depends on the quality of rigid registration. To evaluate the efficacy of the DFBR method, the first two steps of the ANHIR winner's framework are replaced with our DFBR to register the challenge-provided image pairs. The modified framework produces results comparable to those of the challenge-winning team.
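To make "estimating the rigid transformation" concrete, here is a minimal sketch of the standard least-squares fit of a 2D rotation and translation to matched point pairs. It assumes the matching has already been done, and it does not reproduce how DFBR derives matches from deep features or its multi-stage strategy. The function names and toy points are hypothetical.

```python
import math


def estimate_rigid_2d(src, dst):
    """Least-squares rotation angle and translation mapping src onto dst.

    src, dst -- equal-length lists of matched (x, y) points
    Returns (theta, tx, ty) such that dst ~= R(theta) @ src + (tx, ty).
    """
    n = len(src)
    scx = sum(p[0] for p in src) / n
    scy = sum(p[1] for p in src) / n
    dcx = sum(p[0] for p in dst) / n
    dcy = sum(p[1] for p in dst) / n

    # Accumulate dot and cross products of the centred point pairs.
    dot = 0.0
    cross = 0.0
    for (sx, sy), (dx, dy) in zip(src, dst):
        ax, ay = sx - scx, sy - scy
        bx, by = dx - dcx, dy - dcy
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx

    theta = math.atan2(cross, dot)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    # Translation aligns the rotated source centroid with the target centroid.
    tx = dcx - (cos_t * scx - sin_t * scy)
    ty = dcy - (sin_t * scx + cos_t * scy)
    return theta, tx, ty


def apply_rigid_2d(point, theta, tx, ty):
    """Apply the estimated transform to a single (x, y) point."""
    x, y = point
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    return (cos_t * x - sin_t * y + tx, sin_t * x + cos_t * y + ty)


# Toy example: source points rotated by 90 degrees and shifted by (2, 3).
toy_src = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
toy_dst = [(2.0, 3.0), (2.0, 4.0), (1.0, 3.0)]
toy_theta, toy_tx, toy_ty = estimate_rigid_2d(toy_src, toy_dst)
```

Because a rigid fit has only three parameters, a handful of reliable matches is enough, which is one reason rigid alignment is commonly done before any non-rigid refinement.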

Deep Learning based Prediction of MSI in Colorectal Cancer via Prediction of the Status of MMR Markers

Feb 24, 2022

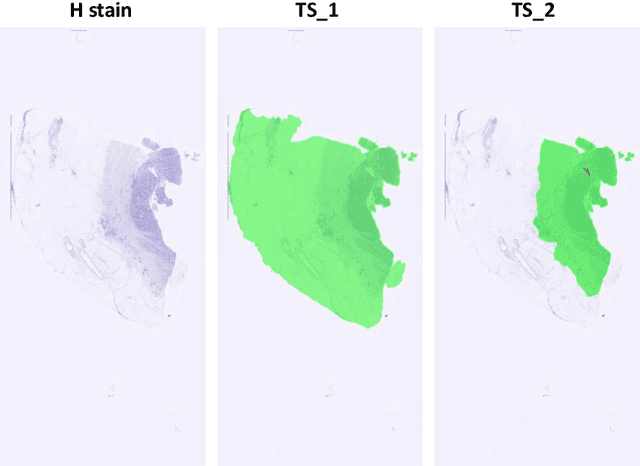

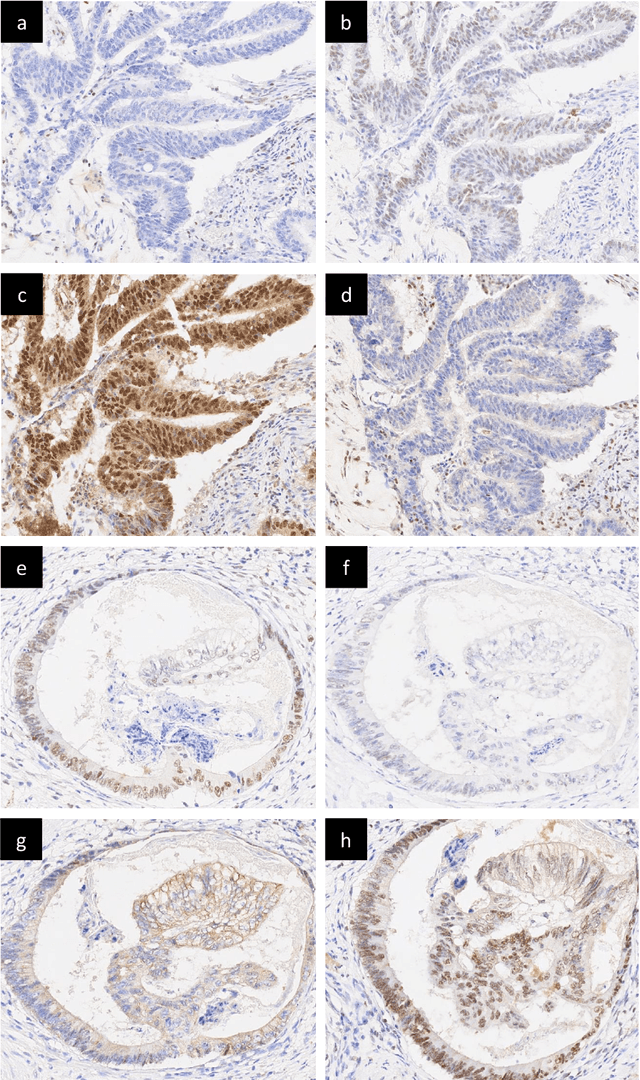

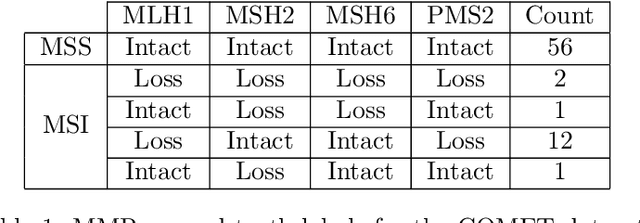

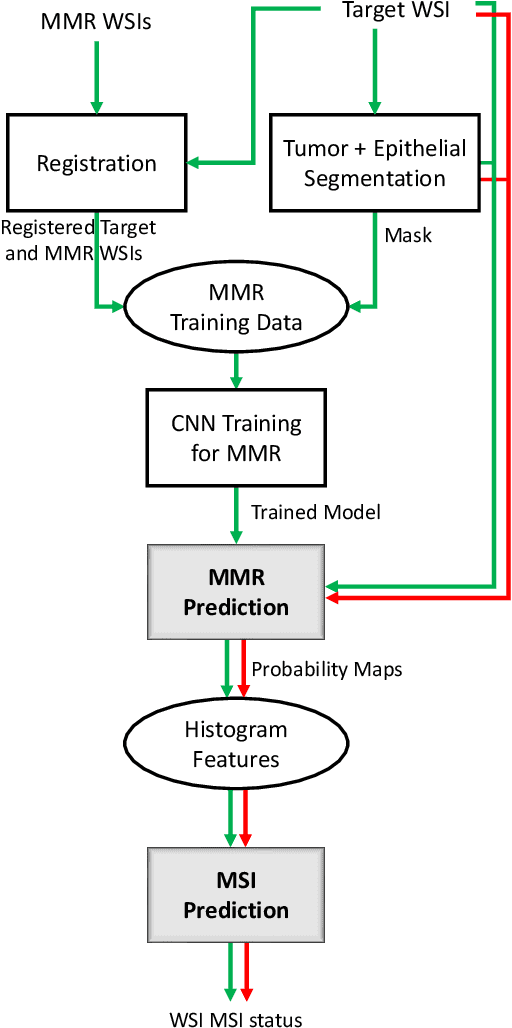

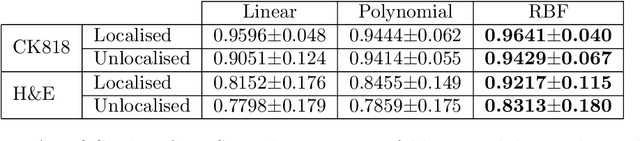

Abstract: Accurate diagnosis and profiling of tumours are critical to choosing the best treatment for cancer patients. In addition to the cancer type and its aggressiveness, molecular heterogeneity also plays a vital role in treatment selection. MSI or MMR deficiency is one of the well-studied aberrations in terms of molecular changes. Colorectal cancer patients with MMR deficiency respond well to immunotherapy, hence assessment of the relevant molecular markers can assist clinicians in making optimal treatment selections for patients. Immunohistochemistry is one way of identifying these molecular changes, but it requires additional sections of tumour tissue. Automated methods that can predict MSI or MMR status from a target image without the need for additional sections can substantially reduce the associated cost. In this work, we predict MSI status in a two-stage process using a single target slide stained with either CK818 or H&E. First, we train a multi-headed convolutional neural network model where each head is responsible for predicting one of the MMR protein expressions. To this end, we perform registration of MMR slides to the target slide as a pre-processing step. In the second stage, statistical features computed from the MMR prediction maps are used for the final MSI prediction. Our results demonstrate that MSI classification can be improved by incorporating fine-grained MMR labels, in comparison to previous approaches in which coarse labels (MSI/MSS) are utilised.
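The second stage described here turns per-marker prediction maps into a fixed-length feature vector for a slide-level classifier. The abstract does not list the statistics used, so the sketch below picks a few common ones (mean, standard deviation, maximum, and fraction of cells above a threshold) purely as an assumption. The marker names, function names, and threshold are placeholders, not the paper's.

```python
from statistics import mean, pstdev

FEATURE_NAMES = ("mean", "std", "max", "frac_above")


def map_features(pred_map, threshold=0.5):
    """Summary statistics of one 2D prediction map (list of rows)."""
    values = [v for row in pred_map for v in row]
    return {
        "mean": mean(values),
        "std": pstdev(values),  # population standard deviation
        "max": max(values),
        "frac_above": sum(v > threshold for v in values) / len(values),
    }


def slide_feature_vector(marker_maps, threshold=0.5):
    """Concatenate per-marker statistics in sorted marker-name order."""
    vector = []
    for marker in sorted(marker_maps):
        feats = map_features(marker_maps[marker], threshold)
        vector.extend(feats[name] for name in FEATURE_NAMES)
    return vector


# Hypothetical 2x2 prediction maps for two placeholder markers.
toy_maps = {
    "marker_a": [[0.2, 0.8], [0.6, 0.4]],
    "marker_b": [[1.0, 1.0], [1.0, 1.0]],
}
toy_vector = slide_feature_vector(toy_maps)
```

A vector like `toy_vector` could then be passed to any standard classifier for the final slide-level decision; which classifier the authors use is not stated in the abstract.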

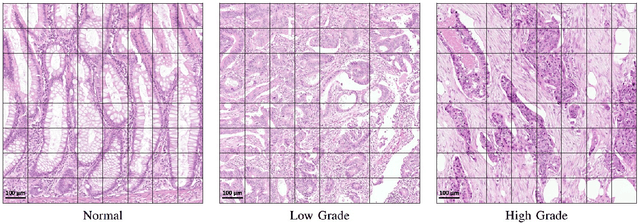

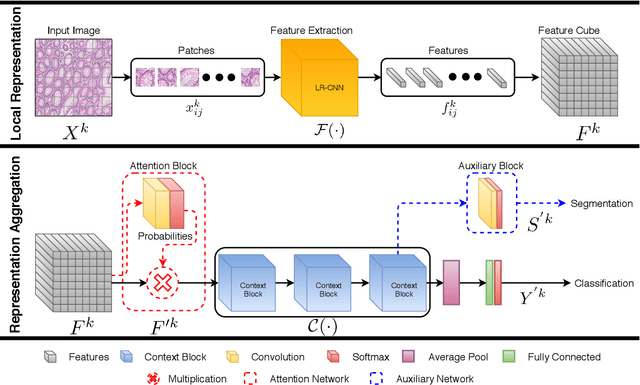

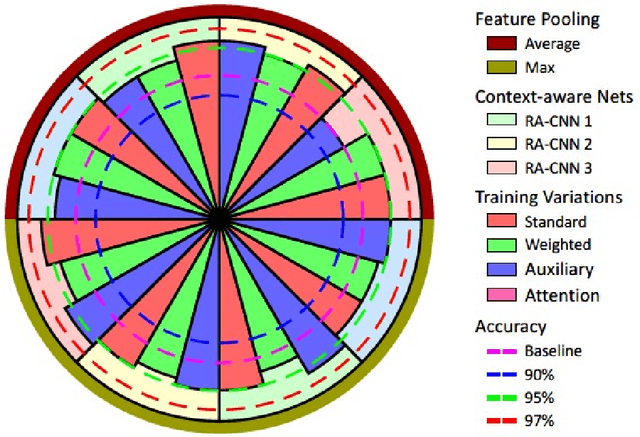

Context-Aware Convolutional Neural Network for Grading of Colorectal Cancer Histology Images

Jul 22, 2019

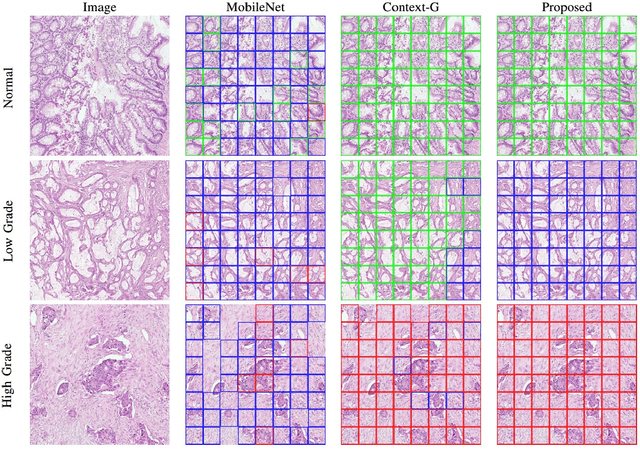

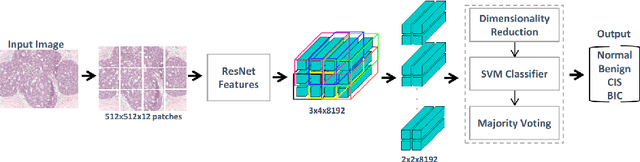

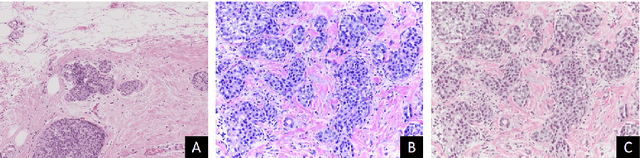

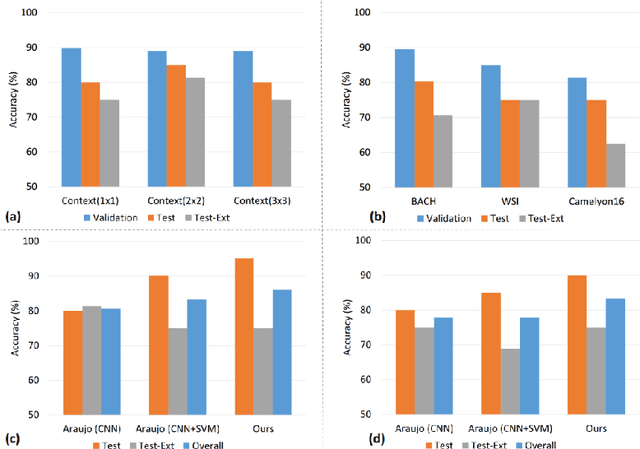

Abstract: Digital histology images are amenable to analysis with convolutional neural networks (CNNs) due to the sheer amount of pixel data they contain. CNNs are generally used for representation learning from small image patches (e.g. 224x224) extracted from digital histology images due to computational and memory constraints. However, this approach does not incorporate high-resolution contextual information in histology images. We propose a novel way to incorporate larger context by a context-aware neural network based on images with a dimension of 1,792x1,792 pixels. The proposed framework first encodes the local representation of a histology image into high-dimensional features, then aggregates the features by considering their spatial organization to make a final prediction. The proposed method is evaluated for colorectal cancer grading and breast cancer classification. A comprehensive analysis of some variants of the proposed method is presented. Our method outperformed the traditional patch-based approaches, problem-specific methods, and existing context-based methods quantitatively by a margin of 3.61%. Code and dataset-related information is available at this link: https://tia-lab.github.io/Context-Aware-CNN
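The two sizes mentioned in this abstract suggest a simple piece of arithmetic: a 1,792x1,792 image divides evenly into an 8x8 grid of 224x224 patches, since 1792 / 224 = 8. The sketch below lays out such a grid and keeps patch-level features in their spatial arrangement so that a later stage can use it. Pairing these two sizes, the function names, and the stand-in encoder are assumptions for illustration; the abstract gives 224x224 only as an example patch size.

```python
def patch_grid(image_size=1792, patch_size=224):
    """Top-left (y, x) origins of non-overlapping patches, kept as a 2D grid."""
    if image_size % patch_size != 0:
        raise ValueError("image_size must be a multiple of patch_size")
    n = image_size // patch_size
    return [
        [(r * patch_size, c * patch_size) for c in range(n)]
        for r in range(n)
    ]


def encode_grid(grid, encoder):
    """Apply a patch encoder at every origin, preserving the spatial layout.

    `encoder` is any callable taking (y, x) and returning a feature vector.
    In a real pipeline it would be a CNN applied to the patch pixels.
    """
    return [[encoder(y, x) for (y, x) in row] for row in grid]


def toy_encoder(y, x):
    """Stand-in encoder (hypothetical): returns the patch origin as a 'feature'."""
    return [float(y), float(x)]


toy_grid = patch_grid()
toy_features = encode_grid(toy_grid, toy_encoder)
```

Keeping the features in an 8x8 layout, rather than flattening them into an unordered set, is what allows an aggregation stage to take spatial organization into account.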

Context-Aware Learning using Transferable Features for Classification of Breast Cancer Histology Images

Mar 06, 2018

Abstract: Convolutional neural networks (CNNs) have recently been used for a variety of histology image analysis tasks. However, the availability of a large dataset is a major prerequisite for training a CNN, which limits its use by the computational pathology community. In previous studies, CNNs have demonstrated their potential in terms of feature generalizability and transferability, accompanied by better performance. Considering these traits of CNNs, we propose a simple yet effective method which leverages the strengths of CNNs combined with the advantages of including contextual information, designed particularly for small datasets. Our method consists of two main steps: first, it uses the activation features of a CNN trained for patch-based classification; then, it trains a separate classifier on features of overlapping patches to perform image-based classification using the contextual information. The proposed framework outperformed the state-of-the-art method for breast cancer classification.
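The second step relies on overlapping patches, which in practice means sliding a window with a stride smaller than the patch size. The sketch below only enumerates the patch origins for such a window. The function name and the sizes in the example are hypothetical; the abstract does not state the patch size or stride used.

```python
def overlapping_patch_origins(height, width, patch, stride):
    """Top-left (y, x) origins of a sliding window over an image.

    Patches overlap whenever `stride` is smaller than `patch`.
    Only windows that fit entirely inside the image are returned.
    """
    if patch > height or patch > width:
        return []
    ys = range(0, height - patch + 1, stride)
    xs = range(0, width - patch + 1, stride)
    return [(y, x) for y in ys for x in xs]


# Hypothetical 4x4 image with 2x2 patches.
toy_overlapping = overlapping_patch_origins(4, 4, patch=2, stride=1)
toy_disjoint = overlapping_patch_origins(4, 4, patch=2, stride=2)
```

Halving the stride roughly quadruples the number of patches, so the overlap gives the image-level classifier more context at the cost of more feature extraction.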
