Mimicking a Pathologist: Dual Attention Model for Scoring of Gigapixel Histology Images


Feb 19, 2023

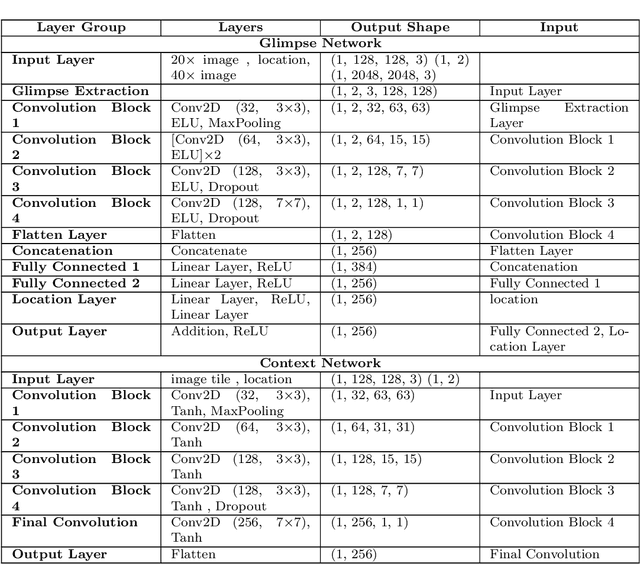

Major challenges in the automated processing of whole slide images (WSIs) include their sheer size, multiple magnification levels and high resolution. Using these images directly in AI frameworks is computationally expensive due to memory constraints, while downsampling WSIs incurs information loss, and splitting WSIs into tiles and patches loses important contextual information. We propose a novel dual attention approach, consisting of two main components, to mimic visual examination by a pathologist. The first component is a soft attention model that takes a high-level view of the WSI as input and determines various regions of interest. We employ a custom sampling method to extract diverse and spatially distinct image tiles from the selected high-attention areas. The second component is a hard attention classification model, which extracts a sequence of multi-resolution glimpses from each tile for classification. Since hard attention is non-differentiable, we train this component using reinforcement learning and predict the location of glimpses without processing all patches of a given tile, thereby aligning with the way a pathologist makes a diagnosis. We train our components both separately and in an end-to-end fashion using a joint loss function to demonstrate the efficacy of our proposed model. We apply the proposed model to two different IHC use cases: HER2 prediction in breast cancer and prediction of the Intact/Loss status of two MMR biomarkers in colorectal cancer. We show that the proposed model achieves accuracy comparable to state-of-the-art methods while processing only a small fraction of the WSI at the highest magnification.
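The abstract does not specify the custom sampling method, so the following is only a minimal sketch of one plausible way to pick spatially distinct tiles from a coarse attention map: greedily take the highest-attention cells while skipping any cell too close to one already chosen. The function name `sample_distinct_tiles`, the parameters `k` and `min_dist`, and the toy attention map are all hypothetical and are not taken from the paper.

```python
def sample_distinct_tiles(attention, k, min_dist):
    """Greedily pick up to k (row, col) cells with the highest attention.

    A cell is skipped if its Chebyshev distance to any already-picked cell
    is below min_dist. This is an illustrative stand-in, not the paper's
    sampling method.
    """
    # Flatten the 2D map into (score, row, col) triples.
    cells = [(attention[r][c], r, c)
             for r in range(len(attention))
             for c in range(len(attention[r]))]
    # Highest score first; ties broken by position for determinism.
    cells.sort(key=lambda t: (-t[0], t[1], t[2]))

    picked = []
    for _score, r, c in cells:
        far_enough = all(max(abs(r - pr), abs(c - pc)) >= min_dist
                         for pr, pc in picked)
        if far_enough:
            picked.append((r, c))
            if len(picked) == k:
                break
    return picked


# Toy 4x4 attention map standing in for the soft attention output
# on a low-resolution view of a slide (values are made up).
attention_map = [
    [0.1, 0.2, 0.1, 0.0],
    [0.2, 0.9, 0.8, 0.1],
    [0.1, 0.7, 0.3, 0.6],
    [0.0, 0.1, 0.5, 0.4],
]
tiles = sample_distinct_tiles(attention_map, k=3, min_dist=2)
print(tiles)
```

In a full pipeline, each selected cell would map back to a tile at higher magnification, which the hard attention model would then examine through a small number of glimpses. The minimum-distance constraint trades attention score for spatial diversity, so neighbouring high-attention cells do not all land in the sample.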
