Rujia Shen

Causal Discovery from Time-Series Data with Short-Term Invariance-Based Convolutional Neural Networks

Aug 15, 2024

Abstract:Causal discovery from time-series data aims to capture both intra-slice (contemporaneous) and inter-slice (time-lagged) causality between variables within the temporal chain, which is crucial for various scientific disciplines. Compared to causal discovery from non-time-series data, causal discovery from time-series data necessitates more serialized samples with a larger amount of observed time steps. To address the challenges, we propose a novel gradient-based causal discovery approach STIC, which focuses on Short-Term Invariance using Convolutional neural networks to uncover the causal relationships from time-series data. Specifically, STIC leverages both the short-term time and mechanism invariance of causality within each window observation, which possesses the property of independence, to enhance sample efficiency. Furthermore, we construct two causal convolution kernels, which correspond to the short-term time and mechanism invariance respectively, to estimate the window causal graph. To demonstrate the necessity of convolutional neural networks for causal discovery from time-series data, we theoretically derive the equivalence between convolution and the underlying generative principle of time-series data under the assumption that the additive noise model is identifiable. Experimental evaluations conducted on both synthetic and FMRI benchmark datasets demonstrate that our STIC outperforms baselines significantly and achieves the state-of-the-art performance, particularly when the datasets contain a limited number of observed time steps. Code is available at https://github.com/HITshenrj/STIC.
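The "window observation" idea in the abstract can be illustrated with a minimal sketch that slices a multivariate time series into overlapping windows. This is not the STIC implementation: the function name `sliding_windows`, its parameters, and the toy data are assumptions made for illustration only, and the causal convolution kernels themselves are not modelled here.

```python
def sliding_windows(series, window_size, stride=1):
    """Split a time series (a list of per-step observations) into windows.

    Hypothetical helper for illustration; not taken from the STIC code base.
    """
    if window_size <= 0 or stride <= 0:
        raise ValueError("window_size and stride must be positive")
    last_start = len(series) - window_size
    # range() is empty when the series is shorter than one window
    return [series[s:s + window_size] for s in range(0, last_start + 1, stride)]


# Toy series with two variables observed over six time steps (made-up data).
series = [[t, 2 * t] for t in range(6)]
windows = sliding_windows(series, window_size=3)
```

Each window then serves as one sample, which is one way to read the abstract's point that window observations improve sample efficiency when few time steps are observed.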

ProSpec RL: Plan Ahead, then Execute

Jul 31, 2024

Abstract:Imagining potential outcomes of actions before execution helps agents make more informed decisions, a prospective thinking ability fundamental to human cognition. However, mainstream model-free Reinforcement Learning (RL) methods lack the ability to proactively envision future scenarios, plan, and guide strategies. These methods typically rely on trial and error to adjust policy functions, aiming to maximize cumulative rewards or long-term value, even if such high-reward decisions place the environment in extremely dangerous states. To address this, we propose the Prospective (ProSpec) RL method, which makes higher-value, lower-risk optimal decisions by imagining future n-stream trajectories. Specifically, ProSpec employs a dynamic model to predict future states (termed "imagined states") based on the current state and a series of sampled actions. Furthermore, we integrate the concept of Model Predictive Control and introduce a cycle consistency constraint that allows the agent to evaluate and select the optimal actions from these trajectories. Moreover, ProSpec employs cycle consistency to mitigate two fundamental issues in RL: augmenting state reversibility to avoid irreversible events (low risk) and augmenting actions to generate numerous virtual trajectories, thereby improving data efficiency. We validated the effectiveness of our method on the DMControl benchmarks, where our approach achieved significant performance improvements. Code will be open-sourced upon acceptance.
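The planning step described above (roll candidate action sequences through a dynamics model, score the imagined trajectories, execute the first action of the best one) can be sketched in the style of Model Predictive Control. Everything below is a toy under stated assumptions: `dynamics`, `reward`, and `plan` are hypothetical names, the 1-D environment is invented, candidate sequences are enumerated rather than sampled so the result is deterministic, and the cycle consistency constraint is omitted.

```python
from itertools import product


def dynamics(state, action):
    """Hypothetical toy dynamics model: the state moves by the action."""
    return state + action


def reward(state, goal=10.0):
    """Hypothetical reward: negative distance to a goal position."""
    return -abs(state - goal)


def plan(state, horizon=5, actions=(-1.0, 0.0, 1.0)):
    """Return the first action of the best imagined trajectory."""
    best_return, best_first_action = float("-inf"), None
    # Enumerate every action sequence (a real agent would sample a subset).
    for sequence in product(actions, repeat=horizon):
        imagined, total = state, 0.0
        for action in sequence:
            imagined = dynamics(imagined, action)  # imagined state
            total += reward(imagined)
        if total > best_return:
            best_return, best_first_action = total, sequence[0]
    return best_first_action
```

For example, `plan(0.0)` moves toward the goal and `plan(20.0)` moves back toward it; only the first action is executed before planning again from the next real state.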

FreqTSF: Time Series Forecasting Via Simulating Frequency Kramer-Kronig Relations

Jul 31, 2024

Abstract:Time series forecasting (TSF) is immensely important in extensive applications, such as electricity transformation, financial trade, medical monitoring, and smart agriculture. Although Transformer-based methods can handle time series data, their ability to predict long-term time series is limited due to the "anti-order" nature of the self-attention mechanism. To address this problem, we focus on the frequency domain to weaken the impact of order in TSF and propose the FreqBlock, where we first obtain frequency representations through the Frequency Transform Module. Subsequently, a newly designed Frequency Cross Attention is used to obtain enhanced frequency representations between the real and imaginary parts, thus establishing a link between the attention mechanism and the inherent Kramer-Kronig relations (KKRs). Our backbone network, FreqTSF, adopts a residual structure by concatenating multiple FreqBlocks to simulate KKRs in the frequency domain and avoid degradation problems. On a theoretical level, we demonstrate that the proposed two modules can significantly reduce the time and memory complexity from $\mathcal{O}(L^2)$ to $\mathcal{O}(L)$ for each FreqBlock computation. Empirical studies on four benchmark datasets show that FreqTSF achieves an overall relative MSE reduction of 15% and an overall relative MAE reduction of 11% compared to the state-of-the-art methods. The code will be available soon.

Causal prompting model-based offline reinforcement learning

Jun 03, 2024

Abstract:Model-based offline Reinforcement Learning (RL) allows agents to fully utilise pre-collected datasets without requiring additional or unethical explorations. However, applying model-based offline RL to online systems presents challenges, primarily due to the highly suboptimal (noise-filled) and diverse nature of datasets generated by online systems. To tackle these issues, we introduce the Causal Prompting Reinforcement Learning (CPRL) framework, designed for highly suboptimal and resource-constrained online scenarios. The initial phase of CPRL involves the introduction of the Hidden-Parameter Block Causal Prompting Dynamic (Hip-BCPD) to model environmental dynamics. This approach utilises invariant causal prompts and aligns hidden parameters to generalise to new and diverse online users. In the subsequent phase, a single policy is trained to address multiple tasks through the amalgamation of reusable skills, circumventing the need for training from scratch. Experiments conducted across datasets with varying levels of noise, including simulation-based and real-world offline datasets from the Dnurse APP, demonstrate that our proposed method can make robust decisions in out-of-distribution and noisy environments, outperforming contemporary algorithms. Additionally, we separately verify the contributions of Hip-BCPDs and the skill-reuse strategy to the robustness of performance. We further analyse the visualised structure of Hip-BCPD and the interpretability of sub-skills. We released our source code and the first ever real-world medical dataset for precise medical decision-making tasks.

Blood Glucose Control Via Pre-trained Counterfactual Invertible Neural Networks

May 23, 2024

Abstract:Type 1 diabetes mellitus (T1D) is characterized by insulin deficiency and blood glucose (BG) control issues. The state-of-the-art solution for continuous BG control is reinforcement learning (RL), where an agent can dynamically adjust exogenous insulin doses in time to maintain BG levels within the target range. However, due to the lack of action guidance, the agent often needs to learn from randomized trials to understand misleading correlations between exogenous insulin doses and BG levels, which can lead to instability and unsafety. To address these challenges, we propose an introspective RL based on Counterfactual Invertible Neural Networks (CINN). We use the pre-trained CINN as a frozen introspective block of the RL agent, which integrates forward prediction and counterfactual inference to guide the policy updates, promoting more stable and safer BG control. Constructed based on interpretable causal order, CINN employs bidirectional encoders with affine coupling layers to ensure invertibility while using orthogonal weight normalization to enhance the trainability, thereby ensuring the bidirectional differentiability of network parameters. We experimentally validate the accuracy and generalization ability of the pre-trained CINN in BG prediction and counterfactual inference for action. Furthermore, our experimental results highlight the effectiveness of pre-trained CINN in guiding RL policy updates for more accurate and safer BG control.
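The abstract credits affine coupling layers with making the network invertible. A minimal sketch of that general technique follows; it is not the CINN architecture. The conditioner `scale_and_shift` is a hypothetical stand-in for a learned network, and the bidirectional encoders and orthogonal weight normalization are not modelled.

```python
import math


def scale_and_shift(x1):
    """Hypothetical conditioner; in practice this would be a learned network."""
    scale = [math.tanh(v) for v in x1]
    shift = [0.5 * v for v in x1]
    return scale, shift


def coupling_forward(x):
    """Keep the first half unchanged and affinely transform the second half."""
    if len(x) % 2 != 0:
        raise ValueError("input length must be even")
    half = len(x) // 2
    x1, x2 = x[:half], x[half:]
    scale, shift = scale_and_shift(x1)
    y2 = [b * math.exp(s) + t for b, s, t in zip(x2, scale, shift)]
    return x1 + y2


def coupling_inverse(y):
    """Exactly undo coupling_forward; the conditioner is never inverted."""
    if len(y) % 2 != 0:
        raise ValueError("input length must be even")
    half = len(y) // 2
    y1, y2 = y[:half], y[half:]
    scale, shift = scale_and_shift(y1)  # y1 equals x1, so same scale and shift
    x2 = [(b - t) * math.exp(-s) for b, s, t in zip(y2, scale, shift)]
    return y1 + x2
```

Because the first half passes through unchanged, the inverse can recompute the same scale and shift, which is why the layer is invertible regardless of how complex the conditioner is.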

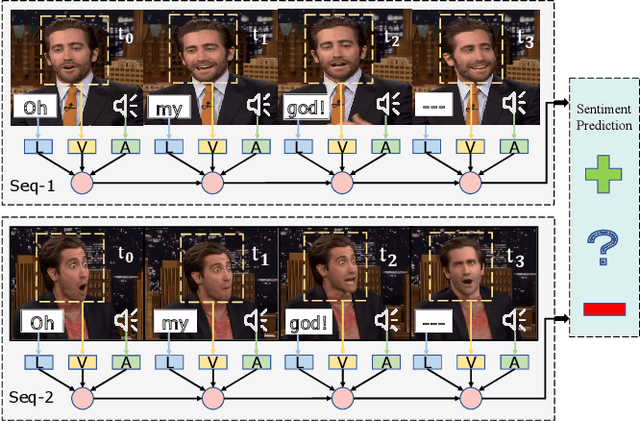

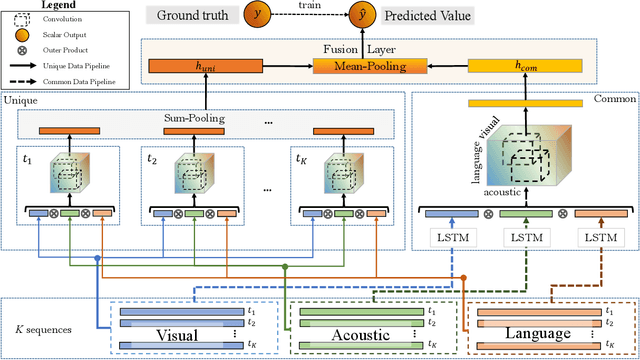

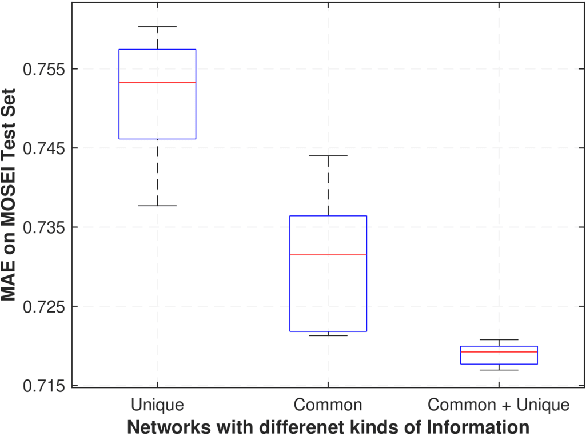

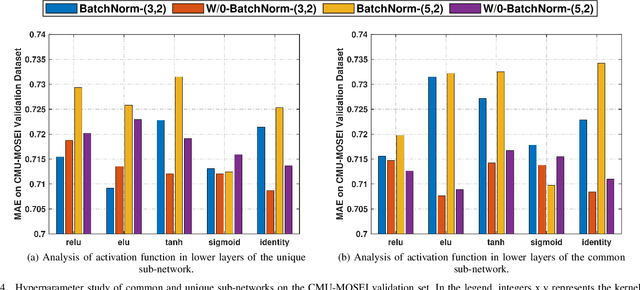

Deep-HOSeq: Deep Higher Order Sequence Fusion for Multimodal Sentiment Analysis

Oct 16, 2020

Abstract:Multimodal sentiment analysis utilizes multiple heterogeneous modalities for sentiment classification. Recent multimodal fusion schemes customize LSTMs to discover intra-modal dynamics and design sophisticated attention mechanisms to discover the inter-modal dynamics from multimodal sequences. Although powerful, these schemes rely completely on attention mechanisms, which is problematic due to two major drawbacks: 1) deceptive attention masks, and 2) training dynamics. Moreover, strenuous efforts are required to optimize the hyperparameters of these consolidated architectures, in particular their custom-designed LSTMs constrained by attention schemes. In this research, we first propose a common network to discover both intra-modal and inter-modal dynamics by utilizing basic LSTMs and tensor-based convolution networks. We then propose unique networks to encapsulate temporal granularity among the modalities, which is essential when extracting information within asynchronous sequences. We then integrate these two kinds of information via a fusion layer and call our novel multimodal fusion scheme Deep-HOSeq (Deep network with higher order Common and Unique Sequence information). The proposed Deep-HOSeq efficiently discovers all-important information from multimodal sequences, and the effectiveness of utilizing both types of information is empirically demonstrated on the CMU-MOSEI and CMU-MOSI benchmark datasets. The source code of our proposed Deep-HOSeq is available at https://github.com/sverma88/Deep-HOSeq--ICDM-2020.
