Jiwei Wang

Use neural networks to recognize students' handwritten letters and incorrect symbols

Sep 12, 2023

Abstract:Correcting students' multiple-choice answers is a repetitive, mechanical task that can be framed as a multi-class image classification problem. Assuming the possible options are 'abcd' and the correct option is one of the four, some students may still write incorrect symbols or options that do not exist. In this paper, five classes are set up: four for the possible correct options and one for any other incorrect writing. This approach accounts for non-standard written answers.
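The five-class setup can be illustrated with a minimal sketch. It is not the paper's model: it assumes some classifier has already produced one score per class, and the names `CLASSES`, `predict_label`, and `grade` are hypothetical helpers introduced only for this example.

```python
from typing import Sequence

# Assumed label set: the four valid options plus one catch-all class
# for incorrect symbols or options that do not exist.
CLASSES = ("a", "b", "c", "d", "other")


def predict_label(scores: Sequence[float]) -> str:
    """Return the class whose score is highest (plain argmax)."""
    if len(scores) != len(CLASSES):
        raise ValueError("expected one score per class")
    best = max(range(len(scores)), key=lambda i: scores[i])
    return CLASSES[best]


def grade(scores: Sequence[float], correct_option: str) -> bool:
    """Mark an answer correct only if the predicted class is the key.

    A prediction of "other" can never match, so non-standard writing
    is always graded as incorrect.
    """
    if correct_option not in CLASSES[:4]:
        raise ValueError("the answer key must be one of a, b, c, d")
    return predict_label(scores) == correct_option
```

Because the fifth class is never a valid answer key, an unreadable or invented option is rejected without a separate validity check.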

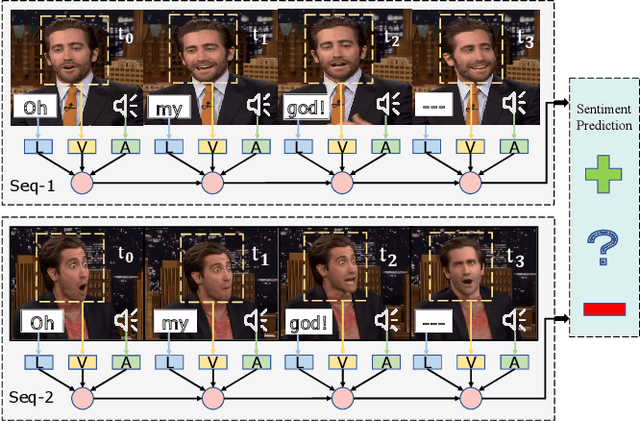

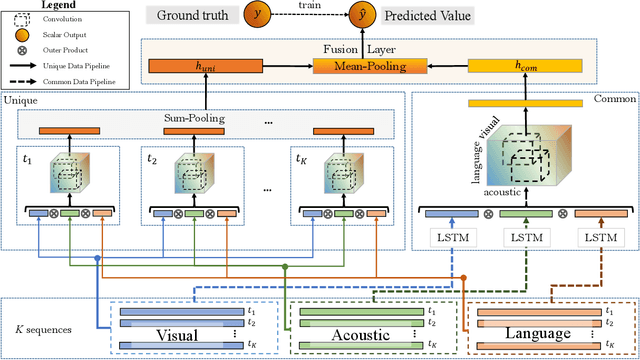

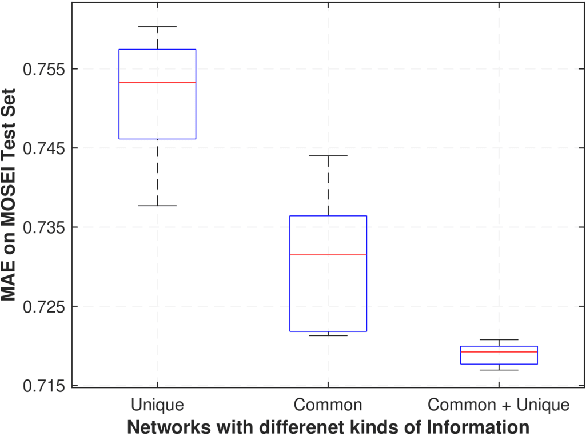

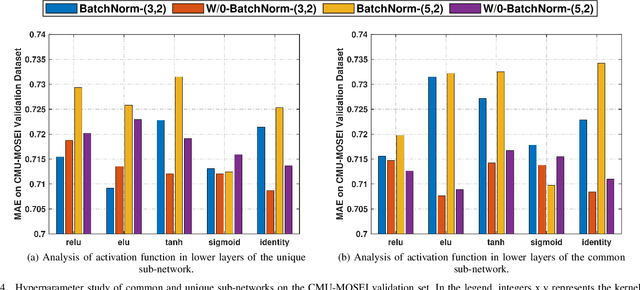

Deep-HOSeq: Deep Higher Order Sequence Fusion for Multimodal Sentiment Analysis

Oct 16, 2020

Abstract:Multimodal sentiment analysis utilizes multiple heterogeneous modalities for sentiment classification. Recent multimodal fusion schemes customize LSTMs to discover intra-modal dynamics and design sophisticated attention mechanisms to discover inter-modal dynamics from multimodal sequences. Although powerful, these schemes rely completely on attention mechanisms, which is problematic due to two major drawbacks: 1) deceptive attention masks, and 2) training dynamics. Moreover, strenuous efforts are required to optimize the hyperparameters of these consolidated architectures, in particular their custom-designed LSTMs constrained by attention schemes. In this research, we first propose a common network to discover both intra-modal and inter-modal dynamics by utilizing basic LSTMs and tensor-based convolution networks. We then propose unique networks to encapsulate temporal granularity among the modalities, which is essential when extracting information from asynchronous sequences. We then integrate these two kinds of information via a fusion layer and call our novel multimodal fusion scheme Deep-HOSeq (Deep network with higher order Common and Unique Sequence information). The proposed Deep-HOSeq efficiently discovers all-important information from multimodal sequences, and the effectiveness of utilizing both types of information is empirically demonstrated on the CMU-MOSEI and CMU-MOSI benchmark datasets. The source code of our proposed Deep-HOSeq is available at https://github.com/sverma88/Deep-HOSeq--ICDM-2020.

A Mobile Cloud Collaboration Fall Detection System Based on Ensemble Learning

Jul 05, 2019

Abstract:Falls are one of the leading causes of accidental or unintentional injury death worldwide. This paper therefore presents a reliable fall detection algorithm and a mobile cloud collaboration system for fall detection. The algorithm is an ensemble learning method based on decision trees, named Falldetection Ensemble Decision Tree (FEDT). The mobile cloud collaboration system can be divided into three stages: 1) mobile stage: use a lightweight threshold method to filter out activities of daily living (ADLs), 2) collaboration stage: transmit data to the cloud and extract features there, 3) cloud stage: deploy the model trained by FEDT to give the final detection result from the extracted features. Experiments show that the proposed FEDT outperforms the other methods by 1-3% on both sensitivity and specificity and, more importantly, that the system can provide reliable fall detection in practical scenarios.
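The three stages can be sketched roughly as follows. This is an illustration under stated assumptions, not the paper's implementation: the input is assumed to be a window of accelerometer samples in g, the 2.0 g threshold and the chosen features are placeholders, and `model` is a stand-in callable where the trained FEDT model would go.

```python
import math
from typing import Callable, Dict, Sequence, Tuple

Sample = Tuple[float, float, float]  # assumed accelerometer (x, y, z) in g


def magnitude(sample: Sample) -> float:
    """Euclidean norm of one accelerometer sample."""
    return math.sqrt(sum(v * v for v in sample))


def mobile_stage(window: Sequence[Sample], threshold_g: float = 2.0) -> bool:
    """Stage 1: cheap on-device filter.

    Returns True only if some sample exceeds the (assumed) threshold,
    so ordinary daily activities are dropped before any upload.
    """
    return any(magnitude(s) > threshold_g for s in window)


def extract_features(window: Sequence[Sample]) -> Dict[str, float]:
    """Stage 2: features computed in the cloud (illustrative choices)."""
    mags = [magnitude(s) for s in window]
    return {"max": max(mags), "min": min(mags), "mean": sum(mags) / len(mags)}


def detect_fall(
    window: Sequence[Sample],
    model: Callable[[Dict[str, float]], bool],
    threshold_g: float = 2.0,
) -> bool:
    """Run all three stages; `model` stands in for the trained classifier."""
    if not mobile_stage(window, threshold_g):
        return False  # filtered out on the device, nothing is transmitted
    return model(extract_features(window))  # stage 3: final decision
```

The point of the split is that the expensive model only sees windows that pass the on-device filter, which keeps both transmission and cloud computation low for normal activity.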
