Boran Wang

ChartAgent: A Chart Understanding Framework with Tool Integrated Reasoning

Dec 16, 2025

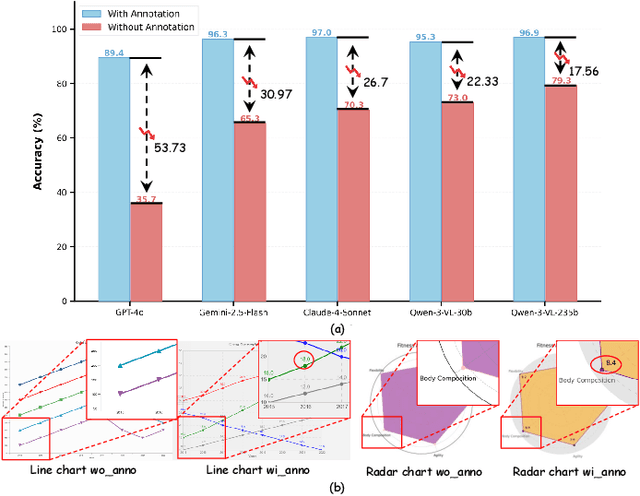

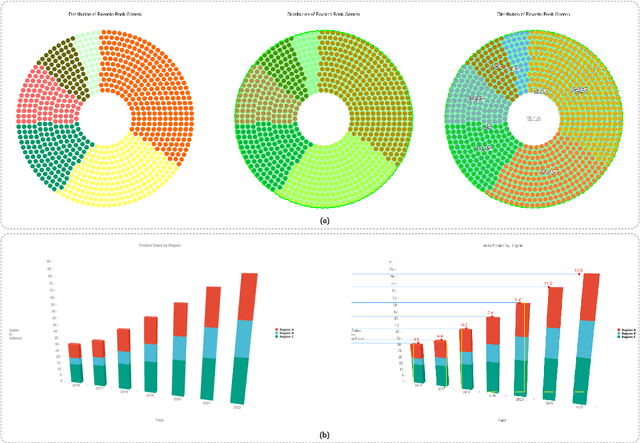

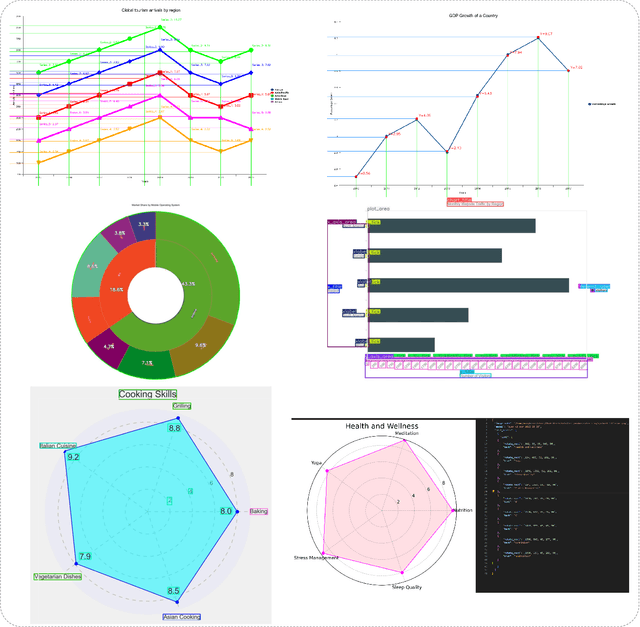

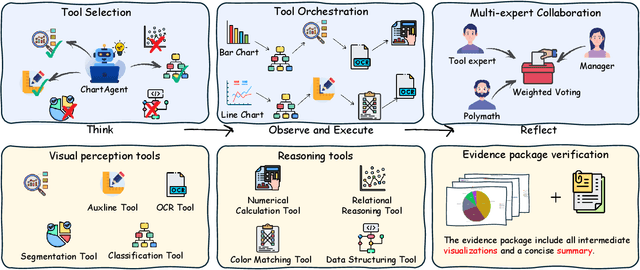

Abstract:With their high information density and intuitive readability, charts have become the de facto medium for data analysis and communication across disciplines. Recent multimodal large language models (MLLMs) have made notable progress in automated chart understanding, yet they remain heavily dependent on explicit textual annotations and the performance degrades markedly when key numerals are absent. To address this limitation, we introduce ChartAgent, a chart understanding framework grounded in Tool-Integrated Reasoning (TIR). Inspired by human cognition, ChartAgent decomposes complex chart analysis into a sequence of observable, replayable steps. Supporting this architecture is an extensible, modular tool library comprising more than a dozen core tools, such as keyelement detection, instance segmentation, and optical character recognition (OCR), which the agent dynamically orchestrates to achieve systematic visual parsing across diverse chart types. Leveraging TIRs transparency and verifiability, ChartAgent moves beyond the black box paradigm by standardizing and consolidating intermediate outputs into a structured Evidence Package, providing traceable and reproducible support for final conclusions. Experiments show that ChartAgent substantially improves robustness under sparse annotation settings, offering a practical path toward trustworthy and extensible systems for chart understanding.

DEYOLO: Dual-Feature-Enhancement YOLO for Cross-Modality Object Detection

Dec 06, 2024Abstract:Object detection in poor-illumination environments is a challenging task as objects are usually not clearly visible in RGB images. As infrared images provide additional clear edge information that complements RGB images, fusing RGB and infrared images has potential to enhance the detection ability in poor-illumination environments. However, existing works involving both visible and infrared images only focus on image fusion, instead of object detection. Moreover, they directly fuse the two kinds of image modalities, which ignores the mutual interference between them. To fuse the two modalities to maximize the advantages of cross-modality, we design a dual-enhancement-based cross-modality object detection network DEYOLO, in which semantic-spatial cross modality and novel bi-directional decoupled focus modules are designed to achieve the detection-centered mutual enhancement of RGB-infrared (RGB-IR). Specifically, a dual semantic enhancing channel weight assignment module (DECA) and a dual spatial enhancing pixel weight assignment module (DEPA) are firstly proposed to aggregate cross-modality information in the feature space to improve the feature representation ability, such that feature fusion can aim at the object detection task. Meanwhile, a dual-enhancement mechanism, including enhancements for two-modality fusion and single modality, is designed in both DECAand DEPAto reduce interference between the two kinds of image modalities. Then, a novel bi-directional decoupled focus is developed to enlarge the receptive field of the backbone network in different directions, which improves the representation quality of DEYOLO. Extensive experiments on M3FD and LLVIP show that our approach outperforms SOTA object detection algorithms by a clear margin. Our code is available at https://github.com/chips96/DEYOLO.

DROJ: A Prompt-Driven Attack against Large Language Models

Nov 14, 2024

Abstract:Large Language Models (LLMs) have demonstrated exceptional capabilities across various natural language processing tasks. Due to their training on internet-sourced datasets, LLMs can sometimes generate objectionable content, necessitating extensive alignment with human feedback to avoid such outputs. Despite massive alignment efforts, LLMs remain susceptible to adversarial jailbreak attacks, which usually are manipulated prompts designed to circumvent safety mechanisms and elicit harmful responses. Here, we introduce a novel approach, Directed Rrepresentation Optimization Jailbreak (DROJ), which optimizes jailbreak prompts at the embedding level to shift the hidden representations of harmful queries towards directions that are more likely to elicit affirmative responses from the model. Our evaluations on LLaMA-2-7b-chat model show that DROJ achieves a 100\% keyword-based Attack Success Rate (ASR), effectively preventing direct refusals. However, the model occasionally produces repetitive and non-informative responses. To mitigate this, we introduce a helpfulness system prompt that enhances the utility of the model's responses. Our code is available at https://github.com/Leon-Leyang/LLM-Safeguard.

Causal Discovery from Time-Series Data with Short-Term Invariance-Based Convolutional Neural Networks

Aug 15, 2024Abstract:Causal discovery from time-series data aims to capture both intra-slice (contemporaneous) and inter-slice (time-lagged) causality between variables within the temporal chain, which is crucial for various scientific disciplines. Compared to causal discovery from non-time-series data, causal discovery from time-series data necessitates more serialized samples with a larger amount of observed time steps. To address the challenges, we propose a novel gradient-based causal discovery approach STIC, which focuses on \textbf{S}hort-\textbf{T}erm \textbf{I}nvariance using \textbf{C}onvolutional neural networks to uncover the causal relationships from time-series data. Specifically, STIC leverages both the short-term time and mechanism invariance of causality within each window observation, which possesses the property of independence, to enhance sample efficiency. Furthermore, we construct two causal convolution kernels, which correspond to the short-term time and mechanism invariance respectively, to estimate the window causal graph. To demonstrate the necessity of convolutional neural networks for causal discovery from time-series data, we theoretically derive the equivalence between convolution and the underlying generative principle of time-series data under the assumption that the additive noise model is identifiable. Experimental evaluations conducted on both synthetic and FMRI benchmark datasets demonstrate that our STIC outperforms baselines significantly and achieves the state-of-the-art performance, particularly when the datasets contain a limited number of observed time steps. Code is available at \url{https://github.com/HITshenrj/STIC}.

FreqTSF: Time Series Forecasting Via Simulating Frequency Kramer-Kronig Relations

Jul 31, 2024Abstract:Time series forecasting (TSF) is immensely important in extensive applications, such as electricity transformation, financial trade, medical monitoring, and smart agriculture. Although Transformer-based methods can handle time series data, their ability to predict long-term time series is limited due to the ``anti-order" nature of the self-attention mechanism. To address this problem, we focus on frequency domain to weaken the impact of order in TSF and propose the FreqBlock, where we first obtain frequency representations through the Frequency Transform Module. Subsequently, a newly designed Frequency Cross Attention is used to obtian enhanced frequency representations between the real and imaginary parts, thus establishing a link between the attention mechanism and the inherent Kramer-Kronig relations (KKRs). Our backbone network, FreqTSF, adopts a residual structure by concatenating multiple FreqBlocks to simulate KKRs in the frequency domain and avoid degradation problems. On a theoretical level, we demonstrate that the proposed two modules can significantly reduce the time and memory complexity from $\mathcal{O}(L^2)$ to $\mathcal{O}(L)$ for each FreqBlock computation. Empirical studies on four benchmark datasets show that FreqTSF achieves an overall relative MSE reduction of 15\% and an overall relative MAE reduction of 11\% compared to the state-of-the-art methods. The code will be available soon.

Blood Glucose Control Via Pre-trained Counterfactual Invertible Neural Networks

May 23, 2024

Abstract:Type 1 diabetes mellitus (T1D) is characterized by insulin deficiency and blood glucose (BG) control issues. The state-of-the-art solution for continuous BG control is reinforcement learning (RL), where an agent can dynamically adjust exogenous insulin doses in time to maintain BG levels within the target range. However, due to the lack of action guidance, the agent often needs to learn from randomized trials to understand misleading correlations between exogenous insulin doses and BG levels, which can lead to instability and unsafety. To address these challenges, we propose an introspective RL based on Counterfactual Invertible Neural Networks (CINN). We use the pre-trained CINN as a frozen introspective block of the RL agent, which integrates forward prediction and counterfactual inference to guide the policy updates, promoting more stable and safer BG control. Constructed based on interpretable causal order, CINN employs bidirectional encoders with affine coupling layers to ensure invertibility while using orthogonal weight normalization to enhance the trainability, thereby ensuring the bidirectional differentiability of network parameters. We experimentally validate the accuracy and generalization ability of the pre-trained CINN in BG prediction and counterfactual inference for action. Furthermore, our experimental results highlight the effectiveness of pre-trained CINN in guiding RL policy updates for more accurate and safer BG control.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge