Ratnesh Kumar

NVIDIA Nemotron Nano V2 VL

Nov 07, 2025

Abstract:We introduce Nemotron Nano V2 VL, the latest model of the Nemotron vision-language series designed for strong real-world document understanding, long video comprehension, and reasoning tasks. Nemotron Nano V2 VL delivers significant improvements over our previous model, Llama-3.1-Nemotron-Nano-VL-8B, across all vision and text domains through major enhancements in model architecture, datasets, and training recipes. Nemotron Nano V2 VL builds on Nemotron Nano V2, a hybrid Mamba-Transformer LLM, and innovative token reduction techniques to achieve higher inference throughput in long document and video scenarios. We are releasing model checkpoints in BF16, FP8, and FP4 formats and sharing large parts of our datasets, recipes and training code.

Dynamic semantic VSLAM with known and unknown objects

Dec 18, 2024

Abstract:Traditional Visual Simultaneous Localization and Mapping (VSLAM) systems assume a static environment, which makes them ineffective in highly dynamic settings. To overcome this, many approaches integrate semantic information from deep learning models to identify dynamic regions within images. However, these methods face a significant limitation: a supervised model cannot recognize objects not included in its training datasets. This paper introduces a novel feature-based Semantic VSLAM capable of detecting dynamic features in the presence of both known and unknown objects. By employing an unsupervised segmentation network, we obtain unlabeled segments, and then use an object detector to identify any of the known classes among them. We then pair this with the computed high-gradient optical-flow information to classify the segments as static or dynamic for both known and unknown object classes. A consistency check module is also introduced for further refinement and final classification into static versus dynamic features. Evaluations using public datasets demonstrate that our method outperforms traditional VSLAM when unknown objects are present in the images, while still matching the performance of the leading semantic VSLAM techniques when the images contain only known objects.

NeuroKoopman Dynamic Causal Discovery

Apr 25, 2024

Abstract:In many real-world applications where the system dynamics have an underlying interdependency among their variables (such as power grids, economics, neuroscience, omics networks, environmental ecosystems, and others), one is often interested in knowing whether the past values of one time series influence the future of another, known as Granger causality, and in the associated underlying dynamics. This paper introduces a Koopman-inspired framework that leverages neural networks for data-driven learning of the Koopman bases, termed NeuroKoopman Dynamic Causal Discovery (NKDCD), for reliably inferring the Granger causality along with the underlying nonlinear dynamics. NKDCD employs an autoencoder architecture that lifts the nonlinear dynamics to a higher dimension using data-learned bases, where the lifted time series can be reliably modeled linearly. The lifting function, the linear Granger causality lag matrices, and the projection function (from lifted space to base space) are all represented as multilayer perceptrons and are learned simultaneously. NKDCD also applies sparsity-inducing penalties to the weights of the lag matrices, encouraging the model to select only the needed causal dependencies within the data. Through extensive testing on practically applicable datasets, it is shown that NKDCD outperforms the existing nonlinear Granger causality discovery approaches.
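To make the role of sparse lag matrices concrete, here is a minimal sketch of how a Granger-causal graph can be read off a plain linear vector-autoregressive model: series j is taken to Granger-cause series i when some lag matrix has a non-negligible entry at (i, j). This is an illustration only, not the NKDCD implementation; the function name `granger_adjacency`, the tolerance, and the example matrices are all hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def granger_adjacency(lag_matrices: List[Matrix], tol: float = 1e-6) -> List[List[bool]]:
    """Return adj where adj[i][j] is True if series j Granger-causes series i.

    Assumes a linear model x_t = sum_k A_k x_{t-k} + noise, where
    lag_matrices[k-1] is A_k. An entry counts as a dependency when its
    magnitude exceeds tol at any lag.
    """
    n = len(lag_matrices[0])
    adj = [[False] * n for _ in range(n)]
    for a_k in lag_matrices:
        for i in range(n):
            for j in range(n):
                if abs(a_k[i][j]) > tol:
                    adj[i][j] = True
    return adj


# Hypothetical two-lag model over three series (values are made up).
A1 = [[0.5, 0.0, 0.0],
      [0.3, 0.4, 0.0],
      [0.0, 0.0, 0.2]]
A2 = [[0.0, 0.0, 0.0],
      [0.0, 0.0, 0.0],
      [0.0, 0.1, 0.0]]

adj = granger_adjacency([A1, A2])
for i, row in enumerate(adj):
    causes = [j for j, flag in enumerate(row) if flag]
    print(f"series {i} is driven by series {causes}")
```

A sparsity-inducing penalty pushes most entries of the lag matrices toward zero during training, which is what makes this kind of thresholded readout meaningful.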

Argoverse 2: Next Generation Datasets for Self-Driving Perception and Forecasting

Jan 02, 2023

Abstract:We introduce Argoverse 2 (AV2) - a collection of three datasets for perception and forecasting research in the self-driving domain. The annotated Sensor Dataset contains 1,000 sequences of multimodal data, encompassing high-resolution imagery from seven ring cameras, and two stereo cameras in addition to lidar point clouds, and 6-DOF map-aligned pose. Sequences contain 3D cuboid annotations for 26 object categories, all of which are sufficiently-sampled to support training and evaluation of 3D perception models. The Lidar Dataset contains 20,000 sequences of unlabeled lidar point clouds and map-aligned pose. This dataset is the largest ever collection of lidar sensor data and supports self-supervised learning and the emerging task of point cloud forecasting. Finally, the Motion Forecasting Dataset contains 250,000 scenarios mined for interesting and challenging interactions between the autonomous vehicle and other actors in each local scene. Models are tasked with the prediction of future motion for "scored actors" in each scenario and are provided with track histories that capture object location, heading, velocity, and category. In all three datasets, each scenario contains its own HD Map with 3D lane and crosswalk geometry - sourced from data captured in six distinct cities. We believe these datasets will support new and existing machine learning research problems in ways that existing datasets do not. All datasets are released under the CC BY-NC-SA 4.0 license.

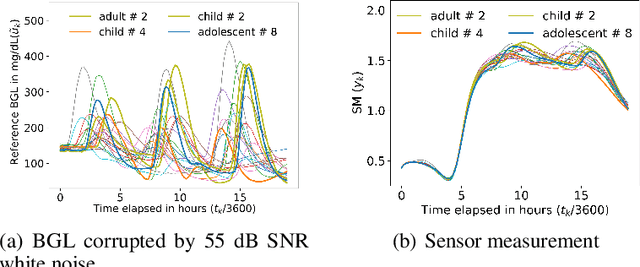

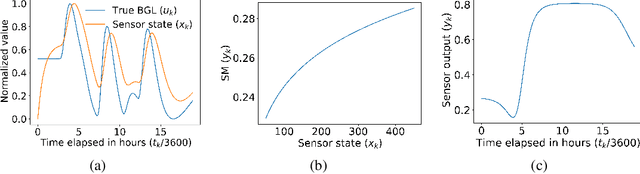

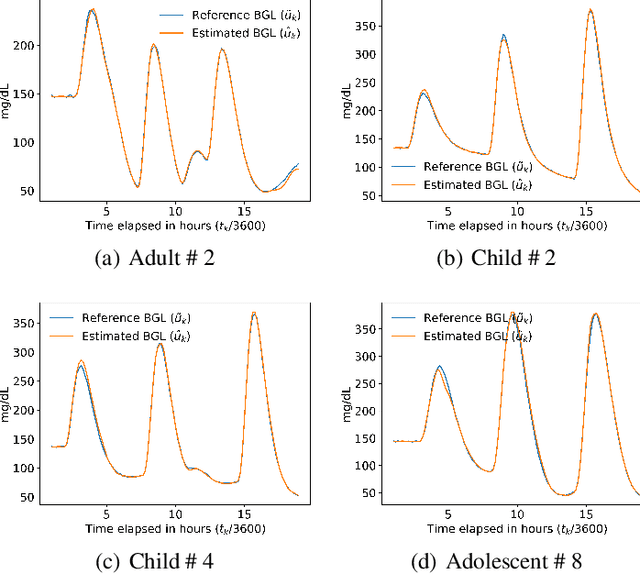

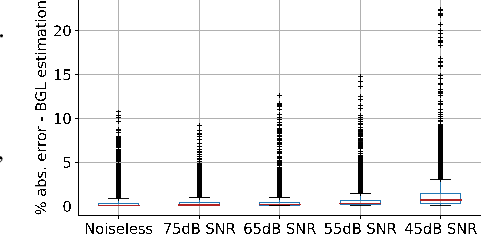

Dynamic Calibration of Nonlinear Sensors with Time-Drifts and Delays by Bayesian Inference

Aug 29, 2022

Abstract:Most sensor calibrations rely on the linearity and steadiness of their response characteristics, but practical sensors are nonlinear, and their response drifts with time, restricting their choices for adoption. To broaden the realm of sensors to allow nonlinearity and time-drift in the underlying dynamics, a Bayesian inference-based nonlinear, non-causal dynamic calibration method is introduced, where the sensed value is estimated as a posterior conditional mean given a finite-length sequence of the sensor measurements and the elapsed time. Additionally, an algorithm is proposed to adjust an already learned calibration map online whenever new data arrives. The effectiveness of the proposed method is validated on continuous-glucose-monitoring (CGM) data from a live rat equipped with an in-house optical glucose sensor. To allow flexibility in choice, the validation is also performed on a synthetic blood glucose level (BGL) dataset generated using FDA-approved virtual diabetic patient models together with an illustrative CGM sensor model.

Data-Driven Linear Koopman Embedding for Model-Predictive Power System Control

Jun 02, 2022

Abstract:This paper presents a linear Koopman embedding for model predictive emergency voltage regulation in power systems, by way of a data-driven lifting of the system dynamics into a higher dimensional linear space over which the MPC (model predictive control) is exercised, thereby scaling as well as expediting the MPC computation for its real-time implementation for practical systems. We develop a Koopman-inspired deep neural network (KDNN) architecture for the linear embedding of the voltage dynamics subjected to reactive controls. The KDNN is trained for the linear embedding using simulated voltage trajectories under a variety of applied control inputs and load conditions. The proposed framework learns the underlying system dynamics from the input/output data in the form of a triple of transforms: a neural network (NN)-based lifting to a higher dimension, linear dynamics within that higher dimension, and an NN-based projection to the original space. This approach alleviates the burden of an ad-hoc selection of the basis functions for the purposes of lifting to a higher dimensional linear space. The MPC is computed over the linear dynamics, making the control computation scalable and also real-time.
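The lift, linear-step, project triple can be illustrated on a small textbook-style system where a lifting is known in closed form. The sketch below is not the KDNN: it uses a hand-picked lifting instead of a learned neural network, has no control input, and the constants `LAM` and `MU` are hypothetical. It only shows that a nonlinear map can evolve exactly linearly in a higher-dimensional space.

```python
# Hypothetical constants for the toy system.
LAM, MU = 0.9, 0.5


def step_nonlinear(x):
    """One step of the original nonlinear system in the base space."""
    x1, x2 = x
    return (LAM * x1, MU * x2 + (LAM ** 2 - MU) * x1 ** 2)


def lift(x):
    """Hand-picked lifting z = (x1, x2, x1^2); a learned NN would replace this."""
    x1, x2 = x
    return (x1, x2, x1 * x1)


# Linear dynamics in the lifted space: z_next = K z.
K = [[LAM, 0.0, 0.0],
     [0.0, MU, LAM ** 2 - MU],
     [0.0, 0.0, LAM ** 2]]


def step_linear(z):
    """One step of the lifted linear system."""
    return tuple(sum(K[i][j] * z[j] for j in range(3)) for i in range(3))


def project(z):
    """Projection back to the base space keeps the first two coordinates."""
    return (z[0], z[1])


def rollout_nonlinear(x0, steps):
    x = x0
    for _ in range(steps):
        x = step_nonlinear(x)
    return x


def rollout_lifted(x0, steps):
    z = lift(x0)
    for _ in range(steps):
        z = step_linear(z)
    return project(z)


x0 = (1.0, -0.5)
direct = rollout_nonlinear(x0, 10)
via_lift = rollout_lifted(x0, 10)
print(direct, via_lift)
```

Because the lifted dynamics are linear, a predictive controller can optimize over them with standard linear tools, which is the source of the scalability the abstract describes.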

Noise-robust Clustering

Oct 19, 2021

Abstract:This paper presents noise-robust clustering techniques in unsupervised machine learning. The uncertainty about the noise, consistency, and other ambiguities can become severe obstacles in data analytics. As a result, data quality, cleansing, management, and governance remain critical disciplines when working with Big Data. With this complexity, it is no longer sufficient to treat data deterministically as in a classical setting, and it becomes meaningful to account for noise distribution and its impact on data sample values. Classical clustering methods group data into "similarity classes" depending on their relative distances or similarities in the underlying space. This paper addresses this problem by extending classical $K$-means and $K$-medoids clustering to operate over data distributions (rather than the raw data). This involves measuring distances among distributions using two types of measures: the optimal mass transport (also called Wasserstein distance, denoted $W_2$) and a novel distance measure proposed in this paper, the expected value of random variable distance (denoted ED). The presented distribution-based $K$-means and $K$-medoids algorithms cluster the data distributions first and then assign each raw data point to the cluster of its distribution.
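As a rough illustration of clustering distributions rather than raw points, the sketch below runs a $K$-means loop over one-dimensional Gaussians using the $W_2$ distance. It relies on two standard facts for 1D Gaussians: $W_2^2 = (m_1 - m_2)^2 + (s_1 - s_2)^2$, and the equal-weight $W_2$ barycenter is the Gaussian with the averaged mean and averaged standard deviation. The restriction to 1D Gaussians, the function names, and the sample data are assumptions made here; the paper's algorithms and its ED measure may differ.

```python
import math
from typing import List, Tuple

Gaussian = Tuple[float, float]  # (mean, standard deviation)


def w2(p: Gaussian, q: Gaussian) -> float:
    """Wasserstein-2 distance between two 1D Gaussians."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


def barycenter(members: List[Gaussian]) -> Gaussian:
    """Equal-weight W2 barycenter of 1D Gaussians: average mean and std."""
    n = len(members)
    return (sum(m for m, _ in members) / n, sum(s for _, s in members) / n)


def kmeans_w2(dists: List[Gaussian], centers: List[Gaussian], iters: int = 20):
    """Lloyd-style K-means over distributions with W2 as the distance."""
    labels = [0] * len(dists)
    for _ in range(iters):
        # Assignment step: nearest center in W2.
        labels = [min(range(len(centers)), key=lambda k: w2(d, centers[k]))
                  for d in dists]
        # Update step: barycenter of each cluster (keep old center if empty).
        new_centers = []
        for k in range(len(centers)):
            members = [d for d, lab in zip(dists, labels) if lab == k]
            new_centers.append(barycenter(members) if members else centers[k])
        if new_centers == centers:
            break
        centers = new_centers
    return labels, centers


# Hypothetical per-sample noise models: each raw point carries a Gaussian.
dists = [(0.0, 1.0), (0.2, 1.1), (5.0, 0.5), (5.3, 0.4)]
labels, centers = kmeans_w2(dists, centers=[dists[0], dists[2]])
print(labels, centers)
```

Each raw data point then inherits the label of its distribution, matching the two-stage assignment described in the abstract.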

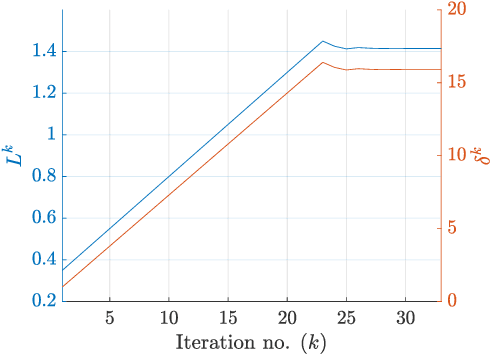

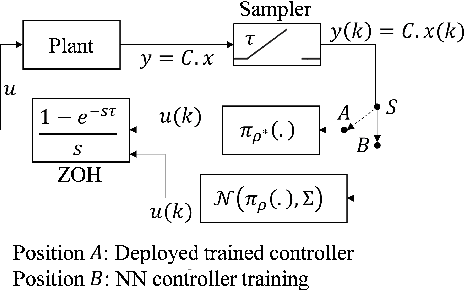

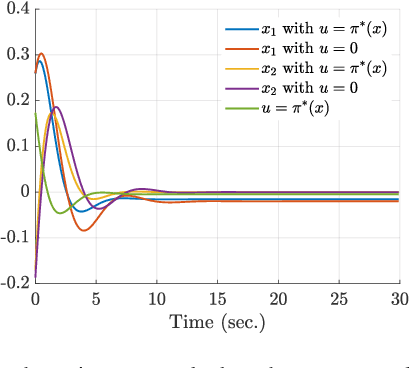

Robust Stability of Neural-Network Controlled Nonlinear Systems with Parametric Variability

Sep 13, 2021

Abstract:Stability certification and identification of the stabilizable operating region of a system are two important concerns to ensure its operational safety/security and robustness. With the advent of machine-learning tools, these issues are especially important for systems with machine-learned components in the feedback loop. Here we develop a theory for stability and stabilizability of a class of neural-network controlled nonlinear systems, where the equilibria can drift when parametric changes occur. A Lyapunov-based convex stability certificate is developed and is further used to devise an estimate for a local Lipschitz upper bound for a neural-network (NN) controller and a corresponding operating domain on the state space, containing an initialization set from where the closed-loop (CL) local asymptotic stability of each system in the class is guaranteed under the same controller, while the system trajectories remain confined to the operating domain. For computing such a robust stabilizing NN controller, a stability-guaranteed training (SGT) algorithm is also proposed. The effectiveness of the proposed framework is demonstrated using illustrative examples.

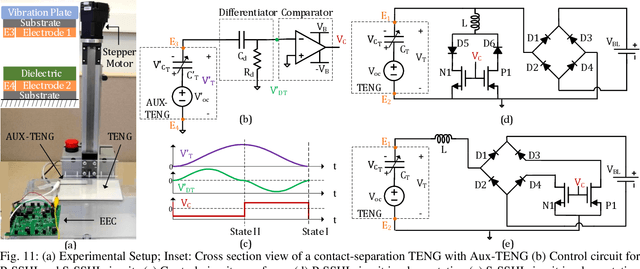

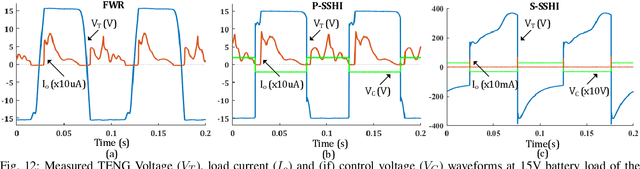

Synchronous Inductor Switched Energy Extraction Circuits for Triboelectric Nanogenerator

Feb 09, 2021

Abstract:Triboelectric nanogenerator (TENG), a class of mechanical to electrical energy transducers, has emerged as a promising solution to self-power Internet of Things (IoT) sensors, wearable electronics, etc. The use of synchronous switched energy extraction circuits (EECs) as an interface between TENG and battery load can deliver multi-fold energy gain over simple Full Wave Rectification (FWR). This paper presents a detailed analysis of Parallel and Series Synchronous Switched Harvesting on Inductor (P-SSHI and S-SSHI) EECs to derive the energy delivered to the battery load and compare it with the standard FWR (a third circuit) in a common analytical framework, under both realistic and ideal conditions. Further, the optimal value of battery load to maximize output and the upper bound beyond which charging is not feasible are derived for all three considered circuits. These closed-form results, derived with general TENG electrical parameters and first-order circuit non-idealities, shed light on the physics of the modeling and guide the choice and design of EECs for any given TENG. The derived analytical results are verified against PSpice-based simulation results as well as the experimentally measured values.

PAMTRI: Pose-Aware Multi-Task Learning for Vehicle Re-Identification Using Highly Randomized Synthetic Data

May 02, 2020

Abstract:In comparison with person re-identification (ReID), which has been widely studied in the research community, vehicle ReID has received less attention. Vehicle ReID is challenging due to 1) high intra-class variability (caused by the dependency of shape and appearance on viewpoint), and 2) small inter-class variability (caused by the similarity in shape and appearance between vehicles produced by different manufacturers). To address these challenges, we propose a Pose-Aware Multi-Task Re-Identification (PAMTRI) framework. This approach includes two innovations compared with previous methods. First, it overcomes viewpoint-dependency by explicitly reasoning about vehicle pose and shape via keypoints, heatmaps and segments from pose estimation. Second, it jointly classifies semantic vehicle attributes (colors and types) while performing ReID, through multi-task learning with the embedded pose representations. Since manually labeling images with detailed pose and attribute information is prohibitive, we create a large-scale highly randomized synthetic dataset with automatically annotated vehicle attributes for training. Extensive experiments validate the effectiveness of each proposed component, showing that PAMTRI achieves significant improvement over state-of-the-art on two mainstream vehicle ReID benchmarks: VeRi and CityFlow-ReID. Code and models are available at https://github.com/NVlabs/PAMTRI.
