Qingyi Gu

PTQ4ARVG: Post-Training Quantization for AutoRegressive Visual Generation Models

Jan 29, 2026

Abstract:AutoRegressive Visual Generation (ARVG) models retain an architecture compatible with language models, while achieving performance comparable to diffusion-based models. Quantization is commonly employed in neural networks to reduce model size and computational latency. However, applying quantization to ARVG remains largely underexplored, and existing quantization methods fail to generalize effectively to ARVG models. In this paper, we explore this issue and identify three key challenges: (1) severe outliers at channel-wise level, (2) highly dynamic activations at token-wise level, and (3) mismatched distribution information at sample-wise level. To address these challenges, we propose PTQ4ARVG, a training-free post-training quantization (PTQ) framework consisting of three components. (1) Gain-Projected Scaling (GPS) mitigates the channel-wise outliers: it expands the quantization loss via a Taylor series to quantify the gain of scaling for activation-weight quantization, and derives the optimal scaling factor through differentiation. (2) Static Token-Wise Quantization (STWQ) leverages the inherent properties of ARVG, fixed token length and position-invariant distribution across samples, to address token-wise variance without incurring dynamic calibration overhead. (3) Distribution-Guided Calibration (DGC) selects samples that contribute most to distributional entropy, eliminating the sample-wise distribution mismatch. Extensive experiments show that PTQ4ARVG can effectively quantize the ARVG family models to 8-bit and 6-bit while maintaining competitive performance. Code is available at http://github.com/BienLuky/PTQ4ARVG .
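The static token-wise idea can be illustrated with a minimal sketch. It assumes symmetric 8-bit quantization and one scale per token position, computed once from calibration samples and reused at inference. The function names and toy data are hypothetical and are not taken from the PTQ4ARVG code.

```python
# Hypothetical sketch of static token-wise quantization: one scale per token
# position, computed offline from calibration samples and reused at inference.

def calibrate_token_scales(calib, n_bits=8):
    """calib[sample][token][channel] -> one scale per token position."""
    qmax = 2 ** (n_bits - 1) - 1
    n_tokens = len(calib[0])
    scales = []
    for t in range(n_tokens):
        # Largest magnitude seen at position t across all samples and channels.
        peak = max(abs(v) for sample in calib for v in sample[t])
        scales.append(peak / qmax if peak > 0 else 1.0)
    return scales

def quantize(x, scales, n_bits=8):
    """Quantize x[token][channel] with the fixed per-position scales."""
    qmax = 2 ** (n_bits - 1) - 1
    return [
        [max(-qmax, min(qmax, round(v / s))) for v in row]
        for row, s in zip(x, scales)
    ]

def dequantize(q, scales):
    """Map integer codes back to real values with the same scales."""
    return [[v * s for v in row] for row, s in zip(q, scales)]

# Toy calibration set: 2 samples, 2 token positions, 2 channels.
calib = [
    [[0.1, -0.2], [10.0, -5.0]],
    [[0.05, 0.2], [-8.0, 4.0]],
]
token_scales = calibrate_token_scales(calib)

# A new input is quantized without recomputing any statistics at run time.
x = [[0.15, -0.1], [6.0, -9.0]]
x_hat = dequantize(quantize(x, token_scales), token_scales)
```

Because the two positions have very different ranges, a single shared scale would waste most of the integer grid on the small-magnitude position; fixed per-position scales avoid that without any run-time calibration.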

MambaSeg: Harnessing Mamba for Accurate and Efficient Image-Event Semantic Segmentation

Dec 30, 2025

Abstract:Semantic segmentation is a fundamental task in computer vision with wide-ranging applications, including autonomous driving and robotics. While RGB-based methods have achieved strong performance with CNNs and Transformers, their effectiveness degrades under fast motion, low-light, or high dynamic range conditions due to limitations of frame cameras. Event cameras offer complementary advantages such as high temporal resolution and low latency, yet lack color and texture, making them insufficient on their own. To address this, recent research has explored multimodal fusion of RGB and event data; however, many existing approaches are computationally expensive and focus primarily on spatial fusion, neglecting the temporal dynamics inherent in event streams. In this work, we propose MambaSeg, a novel dual-branch semantic segmentation framework that employs parallel Mamba encoders to efficiently model RGB images and event streams. To reduce cross-modal ambiguity, we introduce the Dual-Dimensional Interaction Module (DDIM), comprising a Cross-Spatial Interaction Module (CSIM) and a Cross-Temporal Interaction Module (CTIM), which jointly perform fine-grained fusion along both spatial and temporal dimensions. This design improves cross-modal alignment, reduces ambiguity, and leverages the complementary properties of each modality. Extensive experiments on the DDD17 and DSEC datasets demonstrate that MambaSeg achieves state-of-the-art segmentation performance while significantly reducing computational cost, showcasing its promise for efficient, scalable, and robust multimodal perception.

SAQ-SAM: Semantically-Aligned Quantization for Segment Anything Model

Mar 09, 2025

Abstract:Segment Anything Model (SAM) exhibits remarkable zero-shot segmentation capability; however, its prohibitive computational costs make edge deployment challenging. Although post-training quantization (PTQ) offers a promising compression solution, existing methods yield unsatisfactory results when applied to SAM, owing to its specialized model components and promptable workflow: (i) The mask decoder's attention exhibits extreme outliers, and we find that aggressive clipping (ranging down to even 100×), instead of smoothing or isolation, is effective in suppressing outliers while maintaining semantic capabilities. Unfortunately, traditional metrics (e.g., MSE) fail to provide such large-scale clipping. (ii) Existing reconstruction methods potentially neglect the intention of prompts, resulting in distorted visual encodings during prompt interactions. To address the above issues, we propose SAQ-SAM in this paper, which boosts PTQ of SAM with semantic alignment. Specifically, we propose Perceptual-Consistency Clipping, which exploits attention focus overlap as the clipping metric, to significantly suppress outliers. Furthermore, we propose Prompt-Aware Reconstruction, which incorporates visual-prompt interactions by leveraging cross-attention responses in the mask decoder, thus facilitating alignment in both distribution and semantics. To ensure the interaction efficiency, we also introduce a layer-skipping strategy for visual tokens. Extensive experiments are conducted on different segmentation tasks and SAMs of various sizes, and the results show that the proposed SAQ-SAM consistently outperforms baselines. For example, when quantizing SAM-B to 4-bit, our method achieves 11.7% higher mAP than the baseline in the instance segmentation task.

CacheQuant: Comprehensively Accelerated Diffusion Models

Mar 03, 2025

Abstract:Diffusion models have gradually gained prominence in the field of image synthesis, showcasing remarkable generative capabilities. Nevertheless, the slow inference and complex networks, resulting from redundancy at both temporal and structural levels, hinder their low-latency applications in real-world scenarios. Current acceleration methods for diffusion models focus separately on temporal and structural levels. However, independent optimization at each level to further push the acceleration limits results in significant performance degradation. On the other hand, integrating optimizations at both levels can compound the acceleration effects. Unfortunately, we find that the optimizations at these two levels are not entirely orthogonal. Performing separate optimizations and then simply integrating them results in unsatisfactory performance. To tackle this issue, we propose CacheQuant, a novel training-free paradigm that comprehensively accelerates diffusion models by jointly optimizing model caching and quantization techniques. Specifically, we employ a dynamic programming approach to determine the optimal cache schedule, in which the properties of caching and quantization are carefully considered to minimize errors. Additionally, we propose decoupled error correction to further mitigate the coupled and accumulated errors step by step. Experimental results show that CacheQuant achieves a 5.18× speedup and 4× compression for Stable Diffusion on MS-COCO, with only a 0.02 loss in CLIP score. Our code is open-sourced: https://github.com/BienLuky/CacheQuant .

Efficient Event-based Semantic Segmentation with Spike-driven Lightweight Transformer-based Networks

Dec 17, 2024

Abstract:Event-based semantic segmentation has great potential in autonomous driving and robotics due to the advantages of event cameras, such as high dynamic range, low latency, and low power cost. Unfortunately, current artificial neural network (ANN)-based segmentation methods suffer from high computational demands, the requirement for image frames, and massive energy consumption, limiting their efficiency and application on resource-constrained edge/mobile platforms. To address these problems, we introduce SLTNet, a spike-driven lightweight transformer-based network designed for event-based semantic segmentation. Specifically, SLTNet is built on efficient spike-driven convolution blocks (SCBs) to extract rich semantic features while reducing the model's parameters. Then, to enhance the long-range contextual feature interaction, we propose novel spike-driven transformer blocks (STBs) with binary mask operations. Based on these basic blocks, SLTNet employs a high-efficiency single-branch architecture while maintaining the low energy consumption of the Spiking Neural Network (SNN). Finally, extensive experiments on DDD17 and DSEC-Semantic datasets demonstrate that SLTNet outperforms state-of-the-art (SOTA) SNN-based methods by at least 7.30% and 3.30% mIoU, respectively, with 5.48x lower energy consumption and 1.14x faster inference speed.

TTAQ: Towards Stable Post-training Quantization in Continuous Domain Adaptation

Dec 13, 2024

Abstract:Post-training quantization (PTQ) reduces excessive hardware cost by quantizing full-precision models into lower bit representations on a tiny calibration set, without retraining. Despite the remarkable progress made through recent efforts, traditional PTQ methods typically fail in dynamic and ever-changing real-world scenarios, which involve unpredictable data streams and continual domain shifts and therefore pose greater challenges. In this paper, we propose a novel and stable quantization process for test-time adaptation (TTA), dubbed TTAQ, to address the performance degradation of traditional PTQ in dynamically evolving test domains. To tackle domain shifts in the quantizer, TTAQ proposes the Perturbation Error Mitigation (PEM) and Perturbation Consistency Reconstruction (PCR). Specifically, PEM analyzes the error propagation and devises a weight regularization scheme to mitigate the impact of input perturbations. On the other hand, PCR introduces consistency learning to ensure that quantized models provide stable predictions for the same sample. Furthermore, we introduce Adaptive Balanced Loss (ABL) to adjust the logits by taking advantage of the frequency and complexity of the class, which can effectively address the class imbalance caused by unpredictable data streams during optimization. Extensive experiments are conducted on multiple datasets with generic TTA methods, proving that TTAQ can outperform existing baselines and encouragingly improve the accuracy of low-bit PTQ models in continually changing test domains. For instance, TTAQ decreases the mean error of 2-bit models on the ImageNet-C dataset by an impressive 10.1%.

DilateQuant: Accurate and Efficient Diffusion Quantization via Weight Dilation

Sep 25, 2024

Abstract:Diffusion models have shown excellent performance on various image generation tasks, but the substantial computational costs and huge memory footprint hinder their low-latency applications in real-world scenarios. Quantization is a promising way to compress and accelerate models. Nevertheless, due to the wide range and time-varying activations in diffusion models, existing methods cannot maintain both accuracy and efficiency simultaneously for low-bit quantization. To tackle this issue, we propose DilateQuant, a novel quantization framework for diffusion models that offers comparable accuracy and high efficiency. Specifically, we observe numerous unsaturated in-channel weights, which can be exploited to reduce the range of activations without additional computation cost. Based on this insight, we propose Weight Dilation (WD), which maximally dilates the unsaturated in-channel weights to a constrained range through a mathematically equivalent scaling. WD costlessly absorbs the activation quantization errors into weight quantization. The range of activations decreases, which makes activation quantization easy. The range of weights remains constant, which makes the model easy to converge in the training stage. Considering that the temporal network leads to time-varying activations, we design a Temporal Parallel Quantizer (TPQ), which sets time-step quantization parameters and supports parallel quantization for different time steps, significantly improving the performance and reducing time cost. To further enhance performance while preserving efficiency, we introduce a Block-wise Knowledge Distillation (BKD) to align the quantized models with the full-precision models at a block level. The simultaneous training of time-step quantization parameters and weights minimizes the time required, and the shorter backpropagation paths decrease the memory footprint of the quantization process.
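One possible reading of the equivalent scaling behind Weight Dilation is sketched below. It assumes a linear layer `Y = X W`, where each input channel `k` gets a factor `s[k]` chosen as large as possible without enlarging the largest weight magnitude of any output channel. The selection rule, function names, and toy values are assumptions for illustration, not the DilateQuant implementation.

```python
# Hypothetical sketch: dilate in-channel weights without enlarging the
# per-output-channel weight range, and shrink activations by the same factor.

def dilation_factors(W):
    """W[k][j]: weight from input channel k to output channel j."""
    n_in, n_out = len(W), len(W[0])
    # Range bound per output channel: the largest magnitude in each column.
    col_max = [max(abs(W[k][j]) for k in range(n_in)) for j in range(n_out)]
    factors = []
    for k in range(n_in):
        # Largest s_k such that s_k * |W[k][j]| <= col_max[j] for every j.
        ratios = [col_max[j] / abs(W[k][j]) for j in range(n_out) if W[k][j] != 0]
        factors.append(min(ratios) if ratios else 1.0)
    return factors

def matmul(X, W):
    """Plain matrix product of X[row][k] and W[k][j]."""
    return [[sum(x * w for x, w in zip(row, col)) for col in zip(*W)] for row in X]

# Toy layer: input channel 2 has small weights and large activations.
W = [[0.5, -1.0], [2.0, 0.25], [0.1, 0.2]]
X = [[4.0, 1.0, 30.0], [-2.0, 0.5, 20.0]]

s = dilation_factors(W)
W_scaled = [[w * s[k] for w in W[k]] for k in range(len(W))]
X_scaled = [[x / s[k] for k, x in enumerate(row)] for row in X]
```

In this toy case only input channel 2 is unsaturated, so its weights are dilated and its activations shrink by the same factor, while `matmul(X_scaled, W_scaled)` matches `matmul(X, W)` up to floating-point error.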

K-Sort Arena: Efficient and Reliable Benchmarking for Generative Models via K-wise Human Preferences

Aug 26, 2024

Abstract:The rapid advancement of visual generative models necessitates efficient and reliable evaluation methods. Arena platforms, which gather user votes on model comparisons, can rank models according to human preferences. However, traditional Arena methods, while established, require an excessive number of comparisons for the ranking to converge and are vulnerable to preference noise in voting, suggesting the need for better approaches tailored to contemporary evaluation challenges. In this paper, we introduce K-Sort Arena, an efficient and reliable platform based on a key insight: images and videos possess higher perceptual intuitiveness than texts, enabling rapid evaluation of multiple samples simultaneously. Consequently, K-Sort Arena employs K-wise comparisons, allowing K models to engage in free-for-all competitions, which yield much richer information than pairwise comparisons. To enhance the robustness of the system, we leverage probabilistic modeling and Bayesian updating techniques. We propose an exploration-exploitation-based matchmaking strategy to facilitate more informative comparisons. In our experiments, K-Sort Arena exhibits 16.3x faster convergence compared to the widely used ELO algorithm. To further validate the superiority and obtain a comprehensive leaderboard, we collect human feedback via crowdsourced evaluations of numerous cutting-edge text-to-image and text-to-video models. Thanks to its high efficiency, K-Sort Arena can continuously incorporate emerging models and update the leaderboard with minimal votes. Our project has undergone several months of internal testing and is now available at https://huggingface.co/spaces/ksort/K-Sort-Arena
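Why a K-wise vote is richer than a pairwise one can be seen by counting: a full ranking of K models implies K(K-1)/2 ordered pairs. The sketch below only illustrates that counting argument; it is not the platform's Bayesian update, and the function and model names are hypothetical.

```python
from itertools import combinations

def pairwise_outcomes(ranking):
    """ranking: model names ordered best to worst -> list of (winner, loser)."""
    # combinations() keeps input order, so the first element of each pair
    # is always the higher-ranked model.
    return list(combinations(ranking, 2))

# One 4-wise vote implies 4 * 3 / 2 = 6 pairwise outcomes.
result = pairwise_outcomes(["model_a", "model_b", "model_c", "model_d"])
```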

MGRQ: Post-Training Quantization For Vision Transformer With Mixed Granularity Reconstruction

Jun 13, 2024

Abstract:Post-training quantization (PTQ) efficiently compresses vision models, but unfortunately, it is accompanied by a certain degree of accuracy degradation. Reconstruction methods aim to enhance model performance by narrowing the gap between the quantized model and the full-precision model, often yielding promising results. However, efforts to significantly improve the performance of PTQ through reconstruction in the Vision Transformer (ViT) have shown limited efficacy. In this paper, we conduct a thorough analysis of the reasons for this limited effectiveness and propose MGRQ (Mixed Granularity Reconstruction Quantization) as a solution to address this issue. Unlike previous reconstruction schemes, MGRQ introduces a mixed granularity reconstruction approach. Specifically, MGRQ enhances the performance of PTQ by introducing Extra-Block Global Supervision and Intra-Block Local Supervision, building upon Optimized Block-wise Reconstruction. Extra-Block Global Supervision considers the relationship between block outputs and the model's output, aiding block-wise reconstruction through global supervision. Meanwhile, Intra-Block Local Supervision reduces generalization errors by aligning the distribution of outputs at each layer within a block. Subsequently, MGRQ is further optimized for reconstruction through Mixed Granularity Loss Fusion. Extensive experiments conducted on various ViT models illustrate the effectiveness of MGRQ. Notably, MGRQ demonstrates robust performance in low-bit quantization, thereby enhancing the practicality of the quantized model.

A Novel Wide-Area Multiobject Detection System with High-Probability Region Searching

May 07, 2024

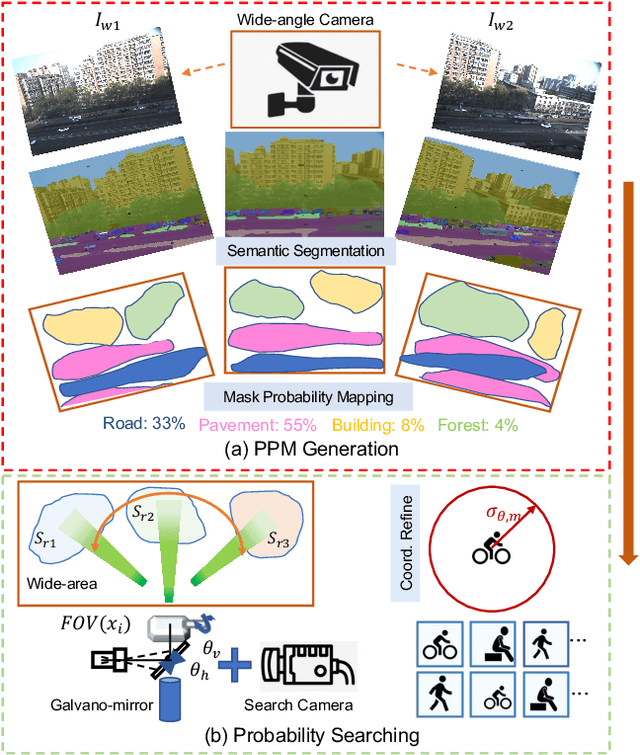

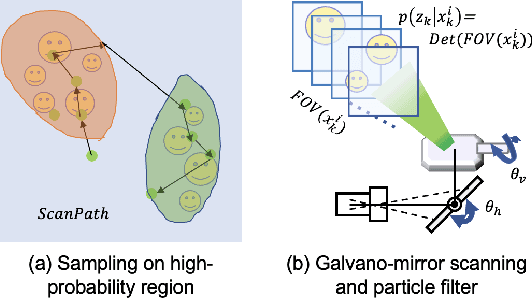

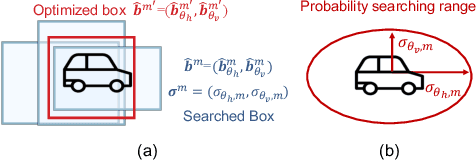

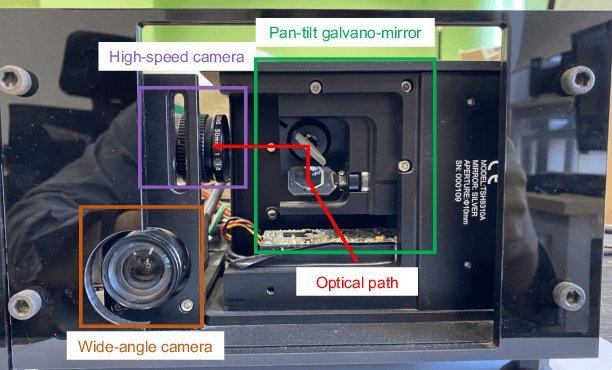

Abstract:In recent years, wide-area visual surveillance systems have been widely applied in various industrial and transportation scenarios. These systems, however, face significant challenges when implementing multi-object detection due to conflicts arising from the need for high-resolution imaging, efficient object searching, and accurate localization. To address these challenges, this paper presents a hybrid system that incorporates a wide-angle camera, a high-speed search camera, and a galvano-mirror. In this system, the wide-angle camera offers panoramic images as prior information, which helps the search camera capture detailed images of the targeted objects. This integrated approach enhances the overall efficiency and effectiveness of wide-area visual detection systems. Specifically, in this study, we introduce a wide-angle camera-based method to generate a panoramic probability map (PPM) for estimating high-probability regions of target object presence. Then, we propose a probability searching module that uses the PPM-generated prior information to dynamically adjust the sampling range and refine target coordinates based on uncertainty variance computed by the object detector. Finally, the integration of PPM and the probability searching module yields an efficient hybrid vision system capable of achieving 120 fps multi-object search and detection. Extensive experiments are conducted to verify the system's effectiveness and robustness.

* Accepted by ICRA 2024
