Peter Smielewski

A data-physics hybrid generative model for patient-specific post-stroke motor rehabilitation using wearable sensor data

Dec 16, 2025

Abstract:Dynamic prediction of locomotor capacity after stroke is crucial for tailoring rehabilitation, yet current assessments provide only static impairment scores and do not indicate whether patients can safely perform specific tasks such as slope walking or stair climbing. Here, we develop a data-physics hybrid generative framework that reconstructs an individual stroke survivor's neuromuscular control from a single 20 m level-ground walking trial and predicts task-conditioned locomotion across rehabilitation scenarios. The system combines wearable-sensor kinematics, a proportional-derivative physics controller, a population Healthy Motion Atlas, and goal-conditioned deep reinforcement learning with behaviour cloning and generative adversarial imitation learning to generate physically plausible, patient-specific gait simulations for slopes and stairs. In 11 stroke survivors, the personalized controllers preserved idiosyncratic gait patterns while improving joint-angle and endpoint fidelity by 4.73% and 12.10%, respectively, and reducing training time to 25.56% relative to a physics-only baseline. In a multicentre pilot involving 21 inpatients, clinicians who used our locomotion predictions to guide task selection and difficulty obtained larger gains in Fugl-Meyer lower-extremity scores over 28 days of standard rehabilitation than control clinicians (mean change 6.0 versus 3.7 points). These findings indicate that our generative, task-predictive framework can augment clinical decision-making in post-stroke gait rehabilitation and provide a template for dynamically personalized motor recovery strategies.

Generalised Label-free Artefact Cleaning for Real-time Medical Pulsatile Time Series

Apr 29, 2025

Abstract:Artefacts compromise clinical decision-making in the use of medical time series. Pulsatile waveforms offer possibilities for accurate artefact detection, yet most approaches rely on supervised learning and overlook patient-level distribution shifts. To address these issues, we introduce a generalised label-free framework, GenClean, for real-time artefact cleaning and leverage an in-house dataset of 180,000 ten-second arterial blood pressure (ABP) samples for training. We first investigate patient-level generalisation, demonstrating robust performance under both intra- and inter-patient distribution shifts. We further validate its effectiveness through challenging cross-disease cohort experiments on the MIMIC-III database. Additionally, we extend our method to photoplethysmography (PPG), highlighting its applicability to diverse medical pulsatile signals. Finally, its integration into ICM+, a clinical research monitoring software, confirms the real-time feasibility of our framework, emphasising its practical utility in continuous physiological monitoring. This work provides a foundational step toward precision medicine in improving the reliability of high-resolution medical time series analysis.

Wearable intelligent throat enables natural speech in stroke patients with dysarthria

Nov 28, 2024

Abstract:Wearable silent speech systems hold significant potential for restoring communication in patients with speech impairments. However, seamless, coherent speech remains elusive, and clinical efficacy is still unproven. Here, we present an AI-driven intelligent throat (IT) system that integrates throat muscle vibrations and carotid pulse signal sensors with large language model (LLM) processing to enable fluent, emotionally expressive communication. The system utilizes ultrasensitive textile strain sensors to capture high-quality signals from the neck area and supports token-level processing for real-time, continuous speech decoding, enabling seamless, delay-free communication. In tests with five stroke patients with dysarthria, IT's LLM agents intelligently corrected token errors and enriched sentence-level emotional and logical coherence, achieving low error rates (4.2% word error rate, 2.9% sentence error rate) and a 55% increase in user satisfaction. This work establishes a portable, intuitive communication platform for patients with dysarthria with the potential to be applied broadly across different neurological conditions and in multi-language support systems.

A Unified Platform for At-Home Post-Stroke Rehabilitation Enabled by Wearable Technologies and Artificial Intelligence

Nov 28, 2024

Abstract:At-home rehabilitation for post-stroke patients presents significant challenges, as continuous, personalized care is often limited outside clinical settings. Additionally, the absence of comprehensive solutions addressing diverse rehabilitation needs in home environments complicates recovery efforts. Here, we introduce a smart home platform that integrates wearable sensors, ambient monitoring, and large language model (LLM)-powered assistance to provide seamless health monitoring and intelligent support. The system leverages machine learning enabled plantar pressure arrays for motor recovery assessment (94% classification accuracy), a wearable eye-tracking module for cognitive evaluation, and ambient sensors for precise smart home control (100% operational success, <1 s latency). Additionally, the LLM-powered agent, Auto-Care, offers real-time interventions, such as health reminders and environmental adjustments, enhancing user satisfaction by 29%. This work establishes a fully integrated platform for long-term, personalized rehabilitation, offering new possibilities for managing chronic conditions and supporting aging populations.

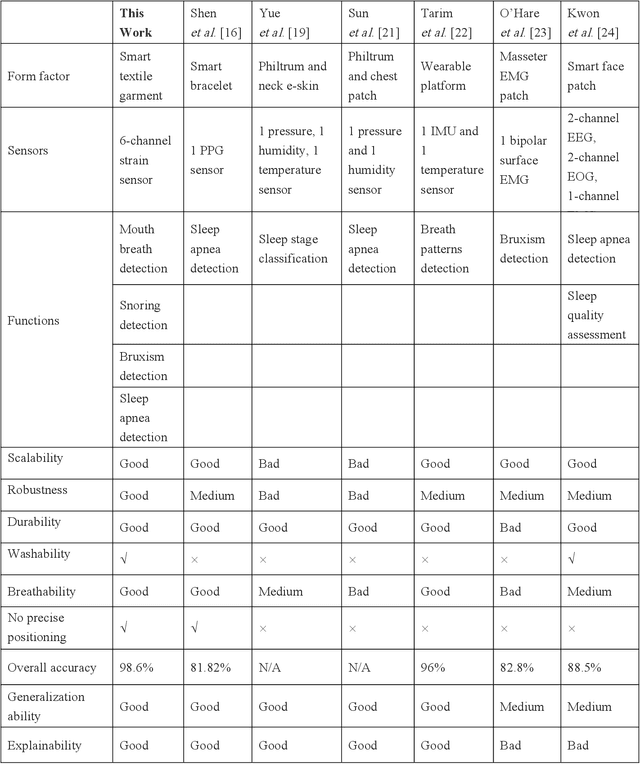

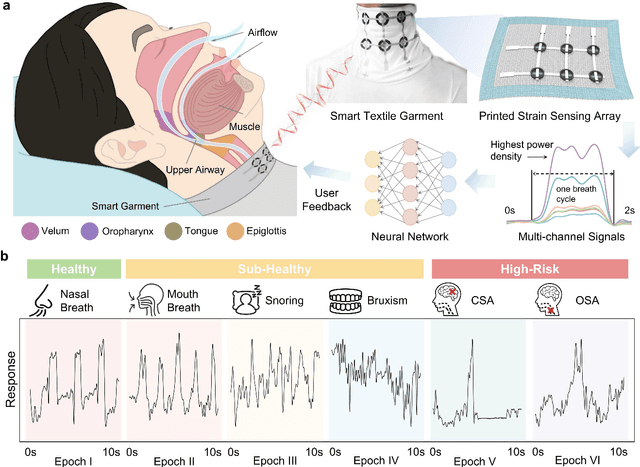

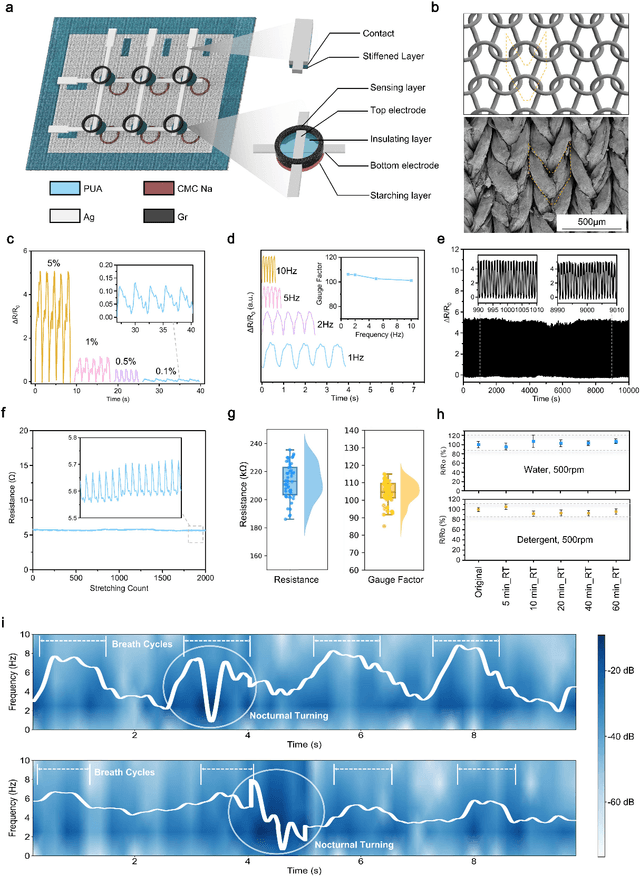

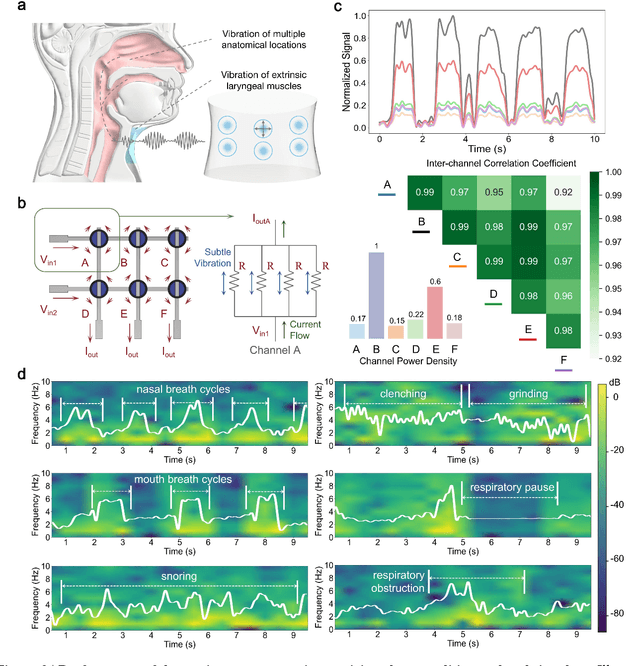

A deep learning-enabled smart garment for versatile sleep behaviour monitoring

Aug 01, 2024

Abstract:Continuous monitoring and accurate detection of complex sleep patterns associated with different sleep-related conditions are essential, not only for enhancing sleep quality but also for preventing the risk of developing chronic illnesses associated with unhealthy sleep. Despite significant advances in research, achieving versatile recognition of various unhealthy and sub-healthy sleep patterns with simple wearable devices at home remains a significant challenge. Here, we report a robust and durable ultrasensitive strain sensor array printed on the collar region of a smart garment. This solution allows detecting subtle vibrations associated with multiple sleep patterns at the extrinsic laryngeal muscles. Equipped with a deep learning neural network, it can precisely identify six sleep states – nasal breathing, mouth breathing, snoring, bruxism, central sleep apnea (CSA), and obstructive sleep apnea (OSA) – with an impressive accuracy of 98.6%, all without requiring specific positioning. We further demonstrate its explainability and generalization capabilities in practical applications. Explainable artificial intelligence (XAI) visualizations reflect comprehensive signal pattern analysis with low bias. Transfer learning tests show that the system can achieve high accuracy (overall accuracy of 95%) on new users with very few-shot learning (fewer than 15 samples per class). The scalable manufacturing process, robustness, high accuracy, and excellent generalization of the smart garment make it a promising tool for next-generation continuous sleep monitoring.

Deep Learning-Based Longitudinal Prediction of Childhood Myopia Progression Using Fundus Image Sequences and Baseline Refraction Data

Jul 31, 2024

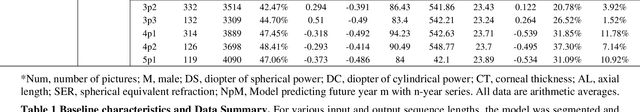

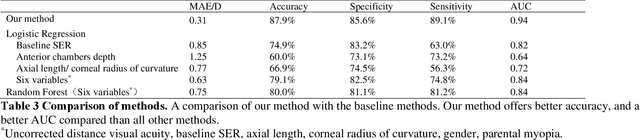

Abstract:Childhood myopia constitutes a significant global health concern. It exhibits an escalating prevalence and has the potential to evolve into severe, irreversible conditions that detrimentally impact familial well-being and create substantial economic costs. Contemporary research underscores the importance of precisely predicting myopia progression to enable timely and effective interventions, thereby averting severe visual impairment in children. Such predictions predominantly rely on subjective clinical assessments, which are inherently biased and resource-intensive, thus hindering their widespread application. In this study, we introduce a novel, high-accuracy method for quantitatively predicting the myopic trajectory and myopia risk in children using only fundus images and baseline refraction data. This approach was validated through a six-year longitudinal study of 3,408 children in Henan, utilizing 16,211 fundus images and corresponding refractive data. Our deep learning-based method demonstrated predictive accuracy with an error margin of 0.311 D per year and AUC scores of 0.944 and 0.995 for forecasting the risks of developing myopia and high myopia, respectively. These findings confirm the utility of our model in supporting early intervention strategies and in significantly reducing healthcare costs, particularly by obviating the need for additional metadata and repeated consultations. Furthermore, our method was designed to rely only on fundus images and refractive error data, without the need for metadata or multiple inquiries from doctors, strongly reducing the associated medical costs and facilitating large-scale screening. Our model can even provide good predictions based on only a single time-point measurement. Consequently, the proposed method is an important means to reduce medical inequities caused by economic disparities.

DeepClean -- self-supervised artefact rejection for intensive care waveform data using generative deep learning

Sep 05, 2019

Abstract:Waveform physiological data is important in the treatment of critically ill patients in the intensive care unit. Such recordings are susceptible to artefacts, which must be removed before the data can be re-used for alerting or reprocessed for other clinical or research purposes. Accurate removal of artefacts reduces both bias and uncertainty in clinical assessment and the false positive rate of intensive care unit alarms, and is therefore a key component in providing optimal clinical care. In this work, we present DeepClean, a prototype self-supervised artefact detection system using a convolutional variational autoencoder deep neural network that avoids costly and painstaking manual annotation, requiring only easily-obtained 'good' data for training. For a test case with invasive arterial blood pressure, we demonstrate that our algorithm can detect the presence of an artefact within a 10-second sample of data with sensitivity and specificity around 90%. Furthermore, DeepClean was able to identify regions of artefact within such samples with high accuracy and we show that it significantly outperforms a baseline principal component analysis approach in both signal reconstruction and artefact detection. DeepClean learns a generative model and therefore may also be used for imputation of missing data.
