Muzi Xu

Wearable intelligent throat enables natural speech in stroke patients with dysarthria

Nov 28, 2024

Abstract: Wearable silent speech systems hold significant potential for restoring communication in patients with speech impairments. However, seamless, coherent speech remains elusive, and clinical efficacy is still unproven. Here, we present an AI-driven intelligent throat (IT) system that integrates throat muscle vibrations and carotid pulse signal sensors with large language model (LLM) processing to enable fluent, emotionally expressive communication. The system utilizes ultrasensitive textile strain sensors to capture high-quality signals from the neck area and supports token-level processing for real-time, continuous speech decoding, enabling seamless, delay-free communication. In tests with five stroke patients with dysarthria, IT's LLM agents intelligently corrected token errors and enriched sentence-level emotional and logical coherence, achieving low error rates (4.2% word error rate, 2.9% sentence error rate) and a 55% increase in user satisfaction. This work establishes a portable, intuitive communication platform for patients with dysarthria with the potential to be applied broadly across different neurological conditions and in multi-language support systems.

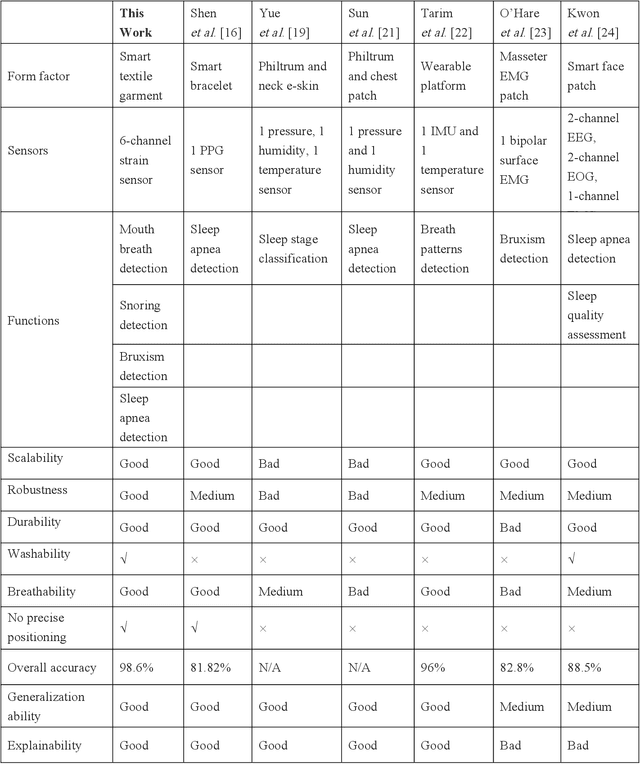

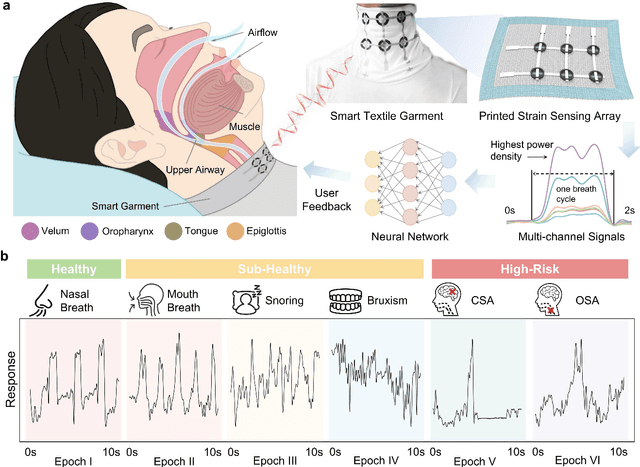

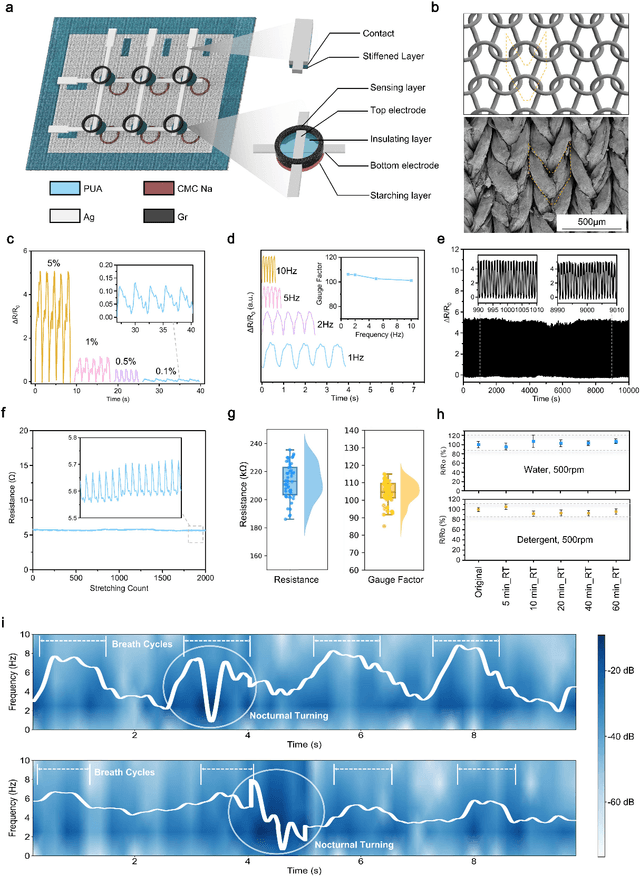

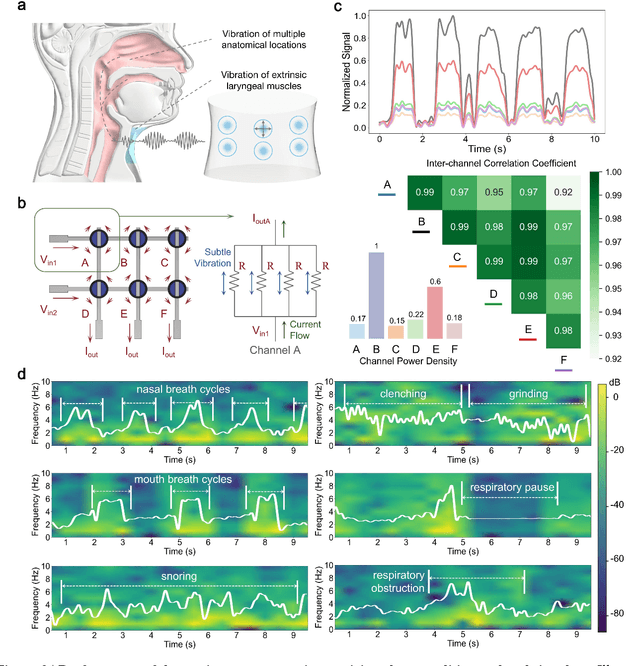

A deep learning-enabled smart garment for versatile sleep behaviour monitoring

Aug 01, 2024

Abstract: Continuous monitoring and accurate detection of complex sleep patterns associated with different sleep-related conditions are essential, not only for enhancing sleep quality but also for reducing the risk of developing chronic illnesses associated with unhealthy sleep. Despite significant advances in research, achieving versatile recognition of various unhealthy and sub-healthy sleep patterns with simple wearable devices at home remains a significant challenge. Here, we report a robust and durable ultrasensitive strain sensor array printed on the collar region of a smart garment. This solution allows detection of subtle vibrations associated with multiple sleep patterns at the extrinsic laryngeal muscles. Equipped with a deep learning neural network, it can precisely identify six sleep states (nasal breathing, mouth breathing, snoring, bruxism, central sleep apnea (CSA), and obstructive sleep apnea (OSA)) with an accuracy of 98.6%, all without requiring specific positioning. We further demonstrate its explainability and generalization capabilities in practical applications. Explainable artificial intelligence (XAI) visualizations reflect comprehensive signal pattern analysis with low bias. Transfer learning tests show that the system can achieve high accuracy (overall accuracy of 95%) on new users with few-shot learning (fewer than 15 samples per class). The scalable manufacturing process, robustness, high accuracy, and excellent generalization of the smart garment make it a promising tool for next-generation continuous sleep monitoring.

Ultrasensitive Textile Strain Sensors Redefine Wearable Silent Speech Interfaces with High Machine Learning Efficiency

Dec 07, 2023

Abstract: Our research presents a wearable Silent Speech Interface (SSI) technology that excels in device comfort, time-energy efficiency, and speech decoding accuracy for real-world use. We developed a biocompatible, durable textile choker with an embedded graphene-based strain sensor, capable of accurately detecting subtle throat movements. This sensor, surpassing other strain sensors in sensitivity by 420%, simplifies signal processing compared to traditional voice recognition methods. Our system uses a computationally efficient neural network, specifically a one-dimensional convolutional neural network with residual structures, to decode speech signals. This network is energy- and time-efficient, reducing computational load by 90% while achieving 95.25% accuracy for a 20-word lexicon and swiftly adapting to new users and words with minimal samples. This innovation demonstrates a practical, sensitive, and precise wearable SSI suitable for daily communication applications.
